

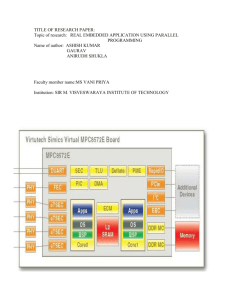

Multicore and parallel programming

Contact information: Håkan Grahn, Hakan.Grahn@bth.se

Today we are in the middle of a paradigm shift in the computer industry. Current and future processor generations are based on multicore architectures, and multicore processors are the main computing platform in all types of systems, from small-scale embedded systems to large-scale server systems. The hardware performance increase will mainly come from an increasing number of cores on each chip, and hardware manufacturers predict that the number of cores will double every second year.

In order to harvest the performance potential of future multicore processors, the software also needs to be parallel and scalable. However, writing parallel programs is not trivial. Therefore, a number of areas need to be addressed in order to achieve both correct and high-performance parallel software. We propose three areas as suitable for master's thesis projects:

Correctness issues: In sequential programs, where only one thing happens at a time, debugging can be done in a fairly deterministic way. A sequential program (usually) behaves the same way each time it is executed with the same input. Parallel programs generate new types of problems that are timing dependent, e.g., unsynchronized access to shared data, operations executing in different orders depending on timing and scheduling effects, and occasional and transient bugs (a.k.a. “Heisenbugs”). Further, several of these problems may be very hard to reproduce and debug, since they only occur under certain conditions that can be difficult to reconstruct.

Performance issues: With a traditional profiler for a sequential program, it is relatively easy to identify the critical path of a program, i.e., those parts of the program that consume most of the time and limit the total execution time. However, in a parallel program this is much harder, since the critical path often involves several parallel tasks with dependencies between them.
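To make the notion of a critical path in parallel software more concrete, the following is a minimal sketch in Python. It assumes the program can be modelled as a graph of tasks with known durations and dependencies. The task names, the durations, and the `critical_path` helper are hypothetical illustrations and do not describe any existing or proposed tool.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def critical_path(durations, deps):
    """Return (length, path) of the longest dependency chain in a task graph.

    durations: task name -> execution time (arbitrary time units)
    deps:      task name -> set of tasks that must finish before it starts
    """
    finish = {}  # earliest possible finish time of each task
    prev = {}    # predecessor on the longest chain ending at each task
    graph = {task: deps.get(task, set()) for task in durations}
    # static_order() yields every task after all of its predecessors.
    for task in TopologicalSorter(graph).static_order():
        preds = deps.get(task, set())
        # A task can start only when its slowest predecessor has finished.
        last = max(preds, key=lambda p: finish[p], default=None)
        start = finish[last] if last is not None else 0
        finish[task] = start + durations[task]
        prev[task] = last
    # Walk back from the task that finishes last to recover the chain.
    node = max(finish, key=finish.get)
    length = finish[node]
    path = []
    while node is not None:
        path.append(node)
        node = prev[node]
    return length, path[::-1]


# Hypothetical task graph: "a" -> "b" and "big" run in parallel after "init".
durations = {"init": 1, "a": 4, "b": 4, "big": 6, "join": 1}
deps = {"a": {"init"}, "b": {"a"}, "big": {"init"}, "join": {"b", "big"}}

length, path = critical_path(durations, deps)
total_work = sum(durations.values())
```

In this made-up graph the total work is 16 time units, while the critical path `init`, `a`, `b`, `join` takes 10. The single most expensive task, `big`, is not on the critical path, so a flat profile that ranks tasks by time consumed would point at a task whose optimization would not shorten the run.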
Therefore, the objective is to create methods, techniques, and tools similar to traditional profilers, but focusing on those activities that are on the critical execution path in parallel software.

Shared data management: Management and protection of shared data is a large challenge and also a significant source of correctness problems in a parallel program. Traditionally, locks, e.g., semaphores, have been used to handle this. However, locks have a number of inherent problems such as deadlocks, priority inversion, and hierarchical locks. Further, locks suffer from a lack of transparency. Transactional memory (TM) has been proposed as an alternative method to manage and protect shared data access. A key difference between TM and locks is that in TM the protection of shared data is associated with the data accesses themselves, while with locks the protection mechanism is associated with a specific lock primitive (variable) that is independent of the shared data.
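The point that a lock is a separate primitive, tied to the shared data only by programmer convention, can be illustrated with a small sketch in Python. It uses the standard `threading.Lock`. The `Account` class and the `transfer` and `run_transfers` functions are hypothetical names chosen for this example. The sketch also shows one common way to avoid the deadlock problem mentioned above, namely acquiring locks in a fixed global order. It does not implement transactional memory.

```python
import threading


class Account:
    """Shared data with a lock stored next to it.

    The lock is an independent object: nothing stops code from reading
    or writing `balance` without holding it.
    """

    def __init__(self, ident, balance):
        self.ident = ident
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src, dst, amount):
    # Acquire both locks in a fixed global order (by ident). Taking
    # src.lock and then dst.lock instead could deadlock when two threads
    # transfer in opposite directions at the same time.
    first, second = sorted((src, dst), key=lambda acc: acc.ident)
    with first.lock:
        with second.lock:
            src.balance -= amount
            dst.balance += amount


def run_transfers(rounds=10_000):
    """Move money back and forth between two accounts from two threads."""
    a, b = Account(1, 1000), Account(2, 1000)

    def a_to_b():
        for _ in range(rounds):
            transfer(a, b, 1)

    def b_to_a():
        for _ in range(rounds):
            transfer(b, a, 1)

    threads = [threading.Thread(target=a_to_b), threading.Thread(target=b_to_a)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return a.balance, b.balance
```

Calling `run_transfers()` returns both balances unchanged, because every update happens while both locks are held and both threads perform the same number of transfers. The correctness rests entirely on every caller remembering to go through `transfer`, which is the kind of convention that the data-centric protection in TM is meant to remove.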