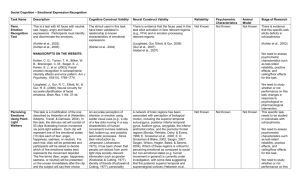

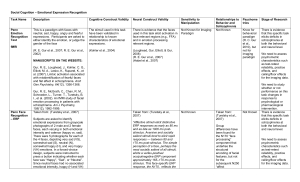

What Faces Can’t Tell Us

FEB. 28, 2014

Gray Matter

By LISA FELDMAN BARRETT

CAN you detect someone’s emotional state just by looking at his face? It sure seems like it. In everyday life, you can often “read” what someone is feeling with the quickest of glances. Hundreds of scientific studies support the idea that the face is a kind of emotional beacon, clearly and universally signaling the full array of human sentiments, from fear and anger to joy and surprise.

Increasingly, companies like Apple and government agencies like the Transportation Security Administration are banking on this transparency, developing software to identify consumers’ moods or training programs to gauge the intent of airline passengers. The same assumption is at work in the field of mental health, where illnesses like autism and schizophrenia are often treated in part by training patients to distinguish emotions by facial expression.

But this assumption is wrong. Several recent and forthcoming research papers from the Interdisciplinary Affective Science Laboratory, which I direct, suggest that human facial expressions, viewed on their own, are not universally understood.

The pioneering work in the field of “emotion recognition” was conducted in the 1960s by a team of scientists led by the psychologist Paul Ekman. Research subjects were asked to look at photographs of facial expressions (smiling, scowling and so on) and match them to a limited set of emotion words (happiness, anger and so on) or to stories with phrases like “Her husband recently died.” Most subjects, even those from faraway cultures with little contact with Western civilization, were extremely good at this task, successfully matching the photos most of the time. Over the following decades, this method of studying emotion recognition has been replicated by other scientists hundreds of times.

In recent years, however, at my laboratory we began to worry that this research method was flawed.
In particular, we suspected that by providing subjects with a preselected set of emotion words, these experiments had inadvertently “primed” the subjects — in effect, hinting at the answers — and thus skewed the results.

To test this hypothesis, we ran some preliminary studies, some of which were later published in the journal Emotion, in which subjects were not given any clues and instead were asked to freely describe the emotion on a face (or to view two faces and answer yes or no as to whether they expressed the same emotion). The subjects’ performance plummeted. In additional studies, also published or soon to be published in Emotion, we took steps to further prevent our subjects from being primed, and their performance plummeted even more — indeed, their performance was comparable to that of people suffering from semantic dementia, who can distinguish positive from negative emotion in faces, but nothing finer.

We even tested non-Western subjects, sending an expedition to Namibia to work with a remote tribe called the Himba. In this experiment, we presented Himba subjects with 36 photographs of six actors each making six facial expressions: smiling, scowling, pouting, wide-eyed and so on. When we asked the subjects to sort the faces by how the actors were feeling, the Himba placed all the smiling faces into a single pile, most of the wide-eyed faces into a second pile, and the remaining piles were mixed.

When asked to label their piles, the Himba subjects did not use words like “happy” and “afraid” but rather words like “laughing” and “looking.” If the emotional content of facial expressions were in fact universal, the Himba subjects would have sorted the photographs into six piles by expression, but they did not.

These findings strongly suggest that emotions are not universally recognized in facial expressions, challenging the theory, attributed to Charles Darwin, that facial movements might be evolved behaviors for expressing emotion.

If faces do not “speak for themselves,” how do we manage to “read” other people? The answer is that we don’t passively recognize emotions but actively perceive them, drawing heavily (if unwittingly) on a wide variety of contextual clues — a body position, a hand gesture, a vocalization, the social setting and so on.

The psychologist Hillel Aviezer has done experiments in which he grafted together face and body photos from people portraying different emotions. When research subjects were asked to judge the feeling being communicated, the emotion associated with the body nearly always trumped the one associated with the face. For example, when shown a scowling (angry) face attached to a body holding a soiled object (disgust), subjects nearly always identified the emotion as disgust, not anger.

You can certainly be expert at “reading” other people. But you — and Apple, and the Transportation Security Administration — should know that the face isn’t telling the whole story.

Lisa Feldman Barrett is a professor of psychology at Northeastern University and the director of the Interdisciplinary Affective Science Laboratory.