Homework #9 answers

07:27 Thursday, April 16, 2020 1

Stat 401 A – Homework 9 answers

1) Planetary distances (1 pt per part)

a) Intercept: 0.717 with se = 0.091. Slope: 0.550 with se = 0.016.

b) By hand, using SAS information: T = (0.550 - 0.693) / 0.0162 = -8.83.

c) 501

Note: predicted log distance is 6.216 (= 0.717 + 10*0.550), so predicted distance is exp(6.216) = 501.

d) The actual distance is smaller than the prediction, but not unusually so. The 95% prediction interval for planet 10 is (347, 723). The actual distance of 395 is inside.

Note: Prediction interval for log distance is (5.85, 6.58); interval for predicted distance is exp() the endpoints of that interval.
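The hand calculations in parts b through d can be checked with a few lines of Python. This is only a sketch that uses the rounded values quoted in these answers; the variable names are illustrative and are not from the SAS output. Because the log-scale interval endpoints are rounded to two decimals, the back-transformed upper endpoint comes out slightly below the 723 obtained from the full-precision output.

```python
import math

# Rounded estimates quoted in the answers (log distance regressed on order).
intercept, slope, se_slope = 0.717, 0.550, 0.0162
null_slope = 0.693  # hypothesized slope used in part b

# b) t statistic for H0: slope = null_slope
t_stat = (slope - null_slope) / se_slope

# c) predict on the log scale for order 10, then exponentiate
pred_log = intercept + 10 * slope
pred_distance = math.exp(pred_log)

# d) back-transform the endpoints of the prediction interval for log distance
pi_log = (5.85, 6.58)
pi_distance = tuple(math.exp(endpoint) for endpoint in pi_log)

actual = 395
inside = pi_distance[0] <= actual <= pi_distance[1]

print(f"t = {t_stat:.2f}")
print(f"predicted distance = {pred_distance:.0f}")
print(f"95% PI for distance = ({pi_distance[0]:.0f}, {pi_distance[1]:.0f})")
print(f"actual distance inside PI: {inside}")
```

The interval is computed on the log scale and only then exponentiated, which is why it is not symmetric around the predicted distance.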

Grading notes: partial credit for no interval. Partial credit for a confidence interval (which does not include the actual distance).

e) 0.126. There are many ways you could describe this; they should all include the core concept that this variance is the deviation of planetary positions from those predicted by the fitted regression.

Notes: The estimated error standard deviation is the square root of the MS for Error (or Residual).

The error variance is NOT deviation from values predicted by Bode’s law.

f) By hand, using information from computer output: predicted order for distance = 1350 is (ln(1350) - 0.717)/0.550 = 11.8.

Yes, this is consistent with another planet (order 11) in between.

Grading note: OK if this was rounded to 12.

g) By hand, using information from computer output: se of predicted order = 0.28, computed using se pred of log distance at X = 11.8 (i.e. 0.155 / 0.550).

OK if you reported se = 0.23, computed using the error sd (0.126 / 0.550).

Notes:

Since order 11.8 is quite an extrapolation, se pred is considerably larger than s (error sd). It is much better to use se pred. But, since I asked for an approximate se, it is OK if you used my approximate formula that uses s. If you really care about the precision of predicted X values, you will find out that both formulae are approximations. It’s just that the formula using se pred is a better approximation.

Since the predicted order is based on a single observation of distance, you need to use s or the se of the predicted obs. The se of the mean is the wrong numerator.
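The inverse prediction in parts f and g can be sketched the same way. This assumes only the rounded values quoted above (intercept, slope, error sd, and se pred at X = 11.8); the function and variable names are made up for illustration. Both standard errors are the approximations described in the notes, not exact results.

```python
import math

intercept, slope = 0.717, 0.550  # fitted line: log(distance) vs. order
s = 0.126                        # error sd (root MSE)
se_pred = 0.155                  # se of a predicted log distance at X = 11.8


def predicted_order(distance):
    """Invert the fitted line: solve log(distance) = intercept + slope * order."""
    return (math.log(distance) - intercept) / slope


order_hat = predicted_order(1350)

# Approximate se of the predicted order: divide an se on the log-distance
# scale by the slope to convert it to the order scale.
se_order_better = se_pred / slope  # uses se of a predicted observation
se_order_rough = s / slope         # uses the error sd only

print(f"predicted order = {order_hat:.1f}")
print(f"approx se using se pred = {se_order_better:.2f}")
print(f"approx se using s = {se_order_rough:.2f}")
```

The two approximations differ only in the numerator, which is why they diverge most when X0 is far from the observed orders.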

2) Insect diversity in tropical forest patches (1 pt each part)

a) Intercept: 36.2. Slope for log(area): 12.4. Test of slope = 0: T = 4.48, p = 0.0005.

b) Many ways of writing a conclusion, for example: increasing log(area) by one unit will increase the number of butterfly species by 12.4 on average.

Better: doubling the area will increase the number of butterfly species by an average of 8.6 species

Notes: This is an experimental study, so a causal claim is acceptable. The phrase “on average” is important.

Effect of doubling area computed as 12.4 * ln(2) = 12.4*0.693 = 8.6. You can also calculate this change by predicting the number of species for an area of 1 = 36.2 + 12.4*ln(1) = 36.2 and for an area of 2 = 36.2 + 12.4*ln(2) = 44.8. The difference is 8.6.

The choice of areas of 1 and 2 is arbitrary. You get the same change for areas of 10 and 20, or areas of 50 and 100.
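A quick check that the change depends only on the ratio of the two areas, not on the areas themselves. This is a sketch using the rounded estimates from part a; the function name is hypothetical.

```python
import math

intercept, slope = 36.2, 12.4  # species regressed on ln(area)


def predicted_species(area):
    """Mean number of species predicted for a patch of the given area."""
    return intercept + slope * math.log(area)


doubling_effect = slope * math.log(2)

# Any pair of areas with ratio 2 gives the same predicted change.
pairs = [(1, 2), (10, 20), (50, 100)]
changes = [predicted_species(big) - predicted_species(small) for small, big in pairs]

for (small, big), change in zip(pairs, changes):
    print(f"area {small} -> {big}: change = {change:.1f} species")
```

The intercept cancels in every difference, leaving slope * ln(big / small).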

c) F for lack of fit = 0.98, p = 0.40. No evidence of lack of fit of the regression with X = log(area).

d) Smallest se for the line (mean Y at given X0) occurs at X0 = mean(X) = 2.302, or area of 10.0.

Note: Best obtained by inspection of the formula for se of the line. Could also look at se mean for different values of X. In this case, if you only look at areas in the data set (1, 10, 100, 1000), you get the right answer.

e) Smallest se for a predicted observation also occurs at area = 10.0.

Note: Same answer as in d. The se of a predicted observation equals sqrt(s^2 + (se mean)^2). Since s^2 is constant (does not depend on X0), this is smallest when se mean is smallest.

f) Not possible. The smallest possible se(pred) is s = 23.8 for any size data set.

Note: A more careful answer computes the smallest possible se mean for n = 16 patches: s/sqrt(16), approximately 6. Hence, se pred is approximately 24.5 or larger.

g) Still not possible. Again, the smallest possible se(pred) is s = 23.8, and that is with se mean = 0 (i.e. perfect knowledge of the regression line).

Note: Increasing the sample size (i.e. measuring more patches) will decrease se(mean), but do nothing (on average) to s^2 or s.
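The floor on se(pred) is easy to see numerically. The sketch below makes two assumptions: s stays at 23.8 as more patches are measured, and se mean takes its smallest possible value, s/sqrt(n), which holds at X0 = mean(X). The sample sizes are hypothetical.

```python
import math

s = 23.8  # root MSE quoted in the answer, assumed unchanged as n grows


def se_pred(s, se_mean):
    """se of a predicted observation: sqrt(s^2 + (se mean)^2)."""
    return math.sqrt(s**2 + se_mean**2)


# Hypothetical sample sizes; s / sqrt(n) is the best-case se mean.
sample_sizes = [16, 100, 1000, 100000]
results = [(n, se_pred(s, s / math.sqrt(n))) for n in sample_sizes]

for n, value in results:
    print(f"n = {n:>6}: se pred >= {value:.2f}")
```

Even with a very large number of patches, se pred only approaches s from above; it never drops below it.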

Notice that for these data, the root MSE = 23.78. To get a more precise estimate of the number of species on a patch, the investigators will have to reduce the “unexplained” variability among patches of the same size. Some options are to restrict the study to more homogeneous patches (perhaps by controlling for patch age, or a certain type of vegetation, or not studying as large a geographic area), or they will need to find additional variables that reduce the variability in the predictions.

3) Wine and mortality (1 pt each part)

a) No, a linear regression model is not appropriate. The relationship looks curved.

b) Various ways to describe the association. My preference is something like:

Doubling the wine consumption reduces heart attack deaths by an average of 1.2 per 1000 men.

Or non-causal:

Doubling the wine consumption is associated with a decrease in heart attack deaths by an average of 1.2 deaths per 1000 men.

Notes: The estimated slope is -1.75. The effect of doubling X is -1.75 x ln(2) = -1.2. Using some other multiplier (with appropriate change in the estimate) is just fine.

c) Again various ways, which combine the ideas for interpreting log(X) with older ideas for multiplicative change in Y:

Doubling the wine consumption reduces heart attack deaths by an average of 21.7%.

Or non-causal:

Doubling the wine consumption is associated with a decrease in heart attack deaths of 21.7%.

Or even more descriptive:

A country with twice the wine consumption has 21.7% fewer heart attack deaths compared to a country with a baseline wine consumption.

Note: The estimated slope is -0.3533. So the effect of doubling wine consumption on log(mortality) is -0.3533 * log(2) = -0.244.

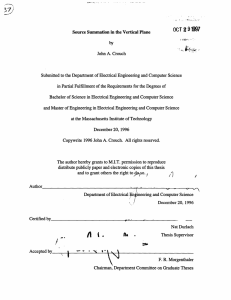

That is exp(-0.244) = 0.7828 of the baseline condition, i.e. a decrease of 1-0.7828 = 21.7%. d) The two residual plots are:

[Two residual plots, each with Predicted on the horizontal axis. Panel "Y: Mortality": predicted values from 3 to 8. Panel "Y: Log Mortality": predicted values from 1.0 to 2.2.]

The choice isn’t obvious, especially because there aren’t very many observations with small predicted values. To my eye, using log mortality is slightly better (more even vertical spread of residuals). Any well supported choice is acceptable.
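Returning to parts b and c, the two doubling effects can be reproduced from the slopes alone. This is a sketch using the rounded slopes quoted in the notes; the variable names are illustrative.

```python
import math

slope_deaths = -1.75        # part b: mortality regressed on log(wine)
slope_log_deaths = -0.3533  # part c: log(mortality) regressed on log(wine)

# Part b: additive change in deaths per 1000 men when wine consumption doubles.
change_in_deaths = slope_deaths * math.log(2)

# Part c: the change on the log scale becomes a multiplicative change in Y.
change_in_log = slope_log_deaths * math.log(2)
ratio = math.exp(change_in_log)  # same as 2 ** slope_log_deaths
percent_decrease = 100 * (1 - ratio)

print(f"part b: change in deaths = {change_in_deaths:.1f} per 1000 men")
print(f"part c: ratio to baseline = {ratio:.4f}")
print(f"part c: percent decrease = {percent_decrease:.1f}%")
```

Using a different multiplier only changes the ln(2) term, for example ln(3) for tripling consumption.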