Running head: OPERANT CONDITIONING

Operant Conditioning: Which Reinforcement Schedule Leads to the Most Resistance to Extinction?

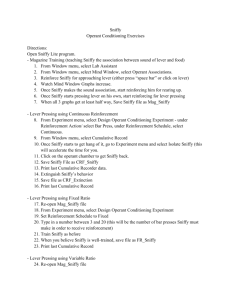

Sara Johnston

201001998

St. Francis Xavier University

1. The two principles associated with operant conditioning, as described by Olson and Hergenhahn (2009), are: (1) a response that is followed by a reinforcing stimulus tends to be repeated, and (2) a reinforcing stimulus is anything that increases the rate at which an operant response occurs. Olson and Hergenhahn (2009) also explain that operant conditioning is sometimes referred to as “Type R” conditioning because the emphasis is placed on the response that is emitted rather than on the stimulus.

2. According to Olson and Hergenhahn (2009), the Skinner box is a cage with a grid floor that keeps the animal contained. It has two main components: a lever light enough for the animal to press, and a food cup into which food is dispensed. When the animal presses the lever, food is dispensed into the cup as the reward. Skinner tracked the animal's behaviour with a cumulative recorder. The cumulative record plots time on the x-axis and the cumulative number of responses on the y-axis. Olson and Hergenhahn (2009) indicate that each bar press makes the line on the record rise. It follows that the steeper the slope, the faster the animal is responding, while a flat line indicates that no responses are being made.
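How a cumulative record is built from individual bar presses can be sketched in a few lines of Python. This is a hypothetical illustration, not the Sniffy program or Skinner's apparatus: the press times, the one-second sampling step, and the function names `cumulative_record` and `slope` are assumptions made for the example.

```python
from bisect import bisect_right


def cumulative_record(press_times, session_length, step=1):
    """Return (time, total presses so far) pairs sampled every `step` seconds.

    Like the pen of a cumulative recorder, the count never goes down:
    it steps up by one for each bar press and stays flat otherwise.
    """
    times = sorted(press_times)
    # bisect_right counts how many presses happened at or before time t
    return [(t, bisect_right(times, t)) for t in range(0, session_length + 1, step)]


def slope(record, i, j):
    """Response rate (presses per second) between samples i and j.

    A steeper line gives a larger value; a flat line gives 0.
    """
    (t0, c0), (t1, c1) = record[i], record[j]
    return (c1 - c0) / (t1 - t0)


# Hypothetical session: a burst of presses, a pause, then one more press.
presses = [1, 2, 3, 4, 10]
record = cumulative_record(presses, session_length=10)
print(record[4], record[9], record[10])  # (4, 4) (9, 4) (10, 5)
print(slope(record, 0, 4))  # 1.0 -> steep segment, rapid responding
print(slope(record, 4, 9))  # 0.0 -> flat segment, no responding
```

The flat stretch between seconds 4 and 9 is what a pause in responding looks like on the record.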

3. A continuous reinforcement schedule (CRF) is one in which the animal is reinforced every time it makes the desired response (Olson & Hergenhahn, 2009). Unlike a CRF schedule, a partial reinforcement schedule (PRF) reinforces the desired response (in this case, bar pressing) only some of the time (Olson & Hergenhahn, 2009). There are four basic partial reinforcement schedules. A fixed ratio (FR) schedule reinforces the animal after a set number of desired responses, whereas a fixed interval (FI) schedule reinforces the first response made after a set amount of time has elapsed. Olson and Hergenhahn (2009) go on to explain that on a variable ratio (VR) schedule, the animal is reinforced after a number of responses that varies around an average. Finally, a variable interval (VI) schedule reinforces the first response made after an interval of time that varies around an average.
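Each of these schedules amounts to a rule that answers one question for every bar press: is this response reinforced? The Python sketch below expresses CRF and the four partial schedules that way. It is hypothetical: the class names are invented for illustration, and drawing the VR requirement uniformly from 1 to 2n - 1 and the VI interval uniformly from 0 to 2n (so that each averages n) is an assumption, not how Sniffy or any particular laboratory implements these schedules.

```python
import random


class Schedule:
    """Decides whether a bar press made at time t (seconds) is reinforced."""

    def press(self, t):
        raise NotImplementedError


class CRF(Schedule):
    def press(self, t):
        return True  # every response is reinforced


class FR(Schedule):
    def __init__(self, n):
        self.n, self.count = n, 0

    def press(self, t):
        self.count += 1
        if self.count >= self.n:  # the n-th response since the last reward
            self.count = 0
            return True
        return False


class FI(Schedule):
    def __init__(self, interval):
        self.interval, self.last = interval, 0.0

    def press(self, t):
        # only the first press after the fixed interval has elapsed pays off
        if t - self.last >= self.interval:
            self.last = t
            return True
        return False


class VR(Schedule):
    def __init__(self, mean, rng=None):
        self.mean, self.rng, self.count = mean, rng or random.Random(), 0
        self.required = self._draw()

    def _draw(self):
        # assumption: requirement uniform on 1..2*mean-1, so it averages `mean`
        return self.rng.randint(1, 2 * self.mean - 1)

    def press(self, t):
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()  # next requirement is unpredictable
            return True
        return False


class VI(Schedule):
    def __init__(self, mean, rng=None):
        self.mean, self.rng = mean, rng or random.Random()
        self.available_at = self._draw()

    def _draw(self):
        # assumption: interval uniform on [0, 2*mean], so it averages `mean`
        return self.rng.uniform(0, 2 * self.mean)

    def press(self, t):
        # first press after a variable interval since the last reward pays off
        if t >= self.available_at:
            self.available_at = t + self._draw()
            return True
        return False


fr = FR(25)
print(sum(fr.press(t) for t in range(100)))  # 4: presses 25, 50, 75 and 100
fi = FI(25)
print([t for t in range(101) if fi.press(t)])  # [25, 50, 75, 100]
```

The ratio schedules count responses and ignore the clock, while the interval schedules consult the clock and reinforce at most one press per interval. This is why, on a ratio schedule, responding faster produces more reinforcement.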

Olson and Hergenhahn (2009) define spontaneous recovery as the reappearance of a previously conditioned response to a previously conditioned stimulus after that response has been extinguished. The term applies only when the conditioned stimulus has not been paired with the unconditioned stimulus again since extinction.

4. Shaping by successive approximation is a procedure in which the animal is reinforced only for responses that come progressively closer to the response the experimenter wants to produce. This method would be used in the initial stages of training Sniffy to bar press. After magazine training, Sniffy would be given reinforcement manually (a food pellet) for being near the bar. Once Sniffy stayed in the vicinity of the bar, reinforcement would be given only for approaching the bar, and then only for touching it. Finally, through shaping by successive approximation, Sniffy would learn to press the bar for food on his own (Olson & Hergenhahn, 2009).

5. The reinforcement schedule has a major impact on the animal's rate of bar pressing, and this rate differs depending on which schedule is used. CRF schedules produce a higher response rate but weaker resistance to extinction, while PRF schedules produce a lower response rate but stronger resistance to extinction. Olson and Hergenhahn (2009) show that on a fixed interval schedule, the animal bar presses at a very slow rate at the beginning of each interval, and the rate gradually increases as the end of the interval nears. This gradual increase produces a distinctive pattern on the cumulative record known as the fixed interval scallop. A fixed ratio schedule, on the other hand, produces a very high and steady response rate, making it the best schedule for learning a new behaviour. The cumulative records show that both FI and FR schedules produce a pause in responding immediately after reinforcement is delivered; this is called the postreinforcement pause (Olson & Hergenhahn, 2009). The variable interval schedule produces a response rate somewhat slower than that of the fixed interval schedule, but it does not produce the scalloping effect. Finally, the variable ratio schedule produces the highest response rate of all the schedules, and its cumulative record shows that the postreinforcement pause seen with the FR schedule does not occur on the VR schedule.

6. During the first 10 minutes of observing Sniffy, five baseline behaviours were noted. Sniffy drank water from his water dispenser, approached the bar, and even pressed the bar and received food. Upon receiving this food, Sniffy ate it and then continued to sniff and pace around the box as if looking for something, perhaps other ways to obtain reinforcement.

7. Prior to any training, Sniffy’s baseline bar-pressing rate was 0.5 bar presses/min.

8. It took approximately 5 minutes to accomplish magazine training.

9. After completing VR 25 training, it took 15 minutes to reach extinction. During the first two minutes of extinction, Sniffy made 38 bar presses.

10. Following completion of VI 25 training, it took 17 minutes to reach extinction. During the first two minutes of extinction, Sniffy bar pressed 20 times.

11. Following the completion of FR 25 training, it took 10 minutes to reach extinction. During the first two minutes of extinction, Sniffy bar pressed 89 times.

12. Following the completion of FI 25 training, it took 10 minutes to reach extinction.
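The figures reported in items 9 to 12 can be tabulated and ranked with a short Python script. This is only a convenience sketch: the dictionary layout is an assumption, the VI time is the 17 minutes given in item 10 (item 13 lists 17.5 minutes; the ranking is the same either way), and no two-minute count was recorded for FI, so it is left as `None`.

```python
# Values copied from items 9 to 12; None marks a count that was not recorded.
results = {
    "VR 25": {"minutes_to_extinction": 15, "presses_first_2_min": 38},
    "VI 25": {"minutes_to_extinction": 17, "presses_first_2_min": 20},
    "FR 25": {"minutes_to_extinction": 10, "presses_first_2_min": 89},
    "FI 25": {"minutes_to_extinction": 10, "presses_first_2_min": None},
}

# A longer time to extinction means more resistance to extinction.
ranking = sorted(results, key=lambda s: results[s]["minutes_to_extinction"])
print("Least to most resistant:", ranking)
# Least to most resistant: ['FR 25', 'FI 25', 'VR 25', 'VI 25']

for schedule, r in results.items():
    count = r["presses_first_2_min"]
    if count is not None:
        # average presses per minute over the first two minutes of extinction
        print(f"{schedule}: {count / 2:.1f} presses/min")
# VR 25: 19.0 presses/min
# VI 25: 10.0 presses/min
# FR 25: 44.5 presses/min
```

FR and FI tie at 10 minutes, so their relative order in the output simply follows the order of the dictionary; the data alone do not separate them.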

13. In this study, extinction took 10 minutes after FI training, the shortest time of any schedule (tied with FR), so FI showed the least resistance to extinction. After FI, the schedules ordered from fastest to slowest extinction were FR (10 minutes), VR (15 minutes), and VI (17.5 minutes).

According to E. Penser (personal communication, April 1, 2013), the expected order of results from this study, from fastest to slowest extinction, is FI, FR, VI, VR. The FI schedule produces the fastest extinction because reinforcement is determined not by the number of bar presses but by the passage of a fixed amount of time. The animal has therefore learned how much time must pass before the reward appears, and once that time passes without reinforcement, the animal stops bar pressing. A fixed ratio schedule also produces weak resistance to extinction for a similar reason: when the animal does not receive reinforcement after bar pressing the learned number of times, it discontinues the behaviour. Variable schedules lead to much greater resistance to extinction because the animal has learned that reinforcement eventually follows an unpredictable stretch of unreinforced responses, so it continues to bar press. A CRF schedule produces weaker resistance to extinction than any of the four PRF schedules because during CRF training the animal is reinforced every time; therefore, when reinforcement is withheld during extinction, the animal almost immediately stops responding.

14. Knowledge of operant conditioning and the various reinforcement schedules is important in the real world because it leads to a better understanding of how to elicit or extinguish certain behaviours more efficiently. These training methods can be applied in settings such as the workplace or the classroom. In the workplace, employers may choose either a fixed interval or a fixed ratio schedule of pay to better suit the type of job. For example, a teacher may perform better on a fixed interval schedule, receiving a pay cheque every set number of weeks. This schedule of reinforcement would support a sustainable, long-lasting career in which the teacher works steadily throughout the year, with a little extra effort right before payday. In contrast, a fixed ratio schedule may be more efficient in a production-line job in which pay is determined by productivity. This method can produce hard-working, efficient employees, but it may also lead to a higher burnout rate. Another method employers can use to increase productivity is based on a variable interval schedule: some employers check in on employees at random times throughout the day to ensure that work is being completed efficiently and effectively. This variable interval schedule keeps employees working hard so that their boss does not catch them slacking off.

Knowledge of reinforcement schedules is also useful for extinguishing a behaviour. For example, variable ratio schedules of reinforcement contribute to gambling addiction: because a payout comes after an unpredictable number of bets, the gambler keeps playing in anticipation of the next payout. Knowing this can help in developing programs to extinguish the addiction.

Reference:

Olson, M. H., & Hergenhahn, B. R. (2009). An introduction to theories of learning. Pearson/Prentice Hall.