Flip


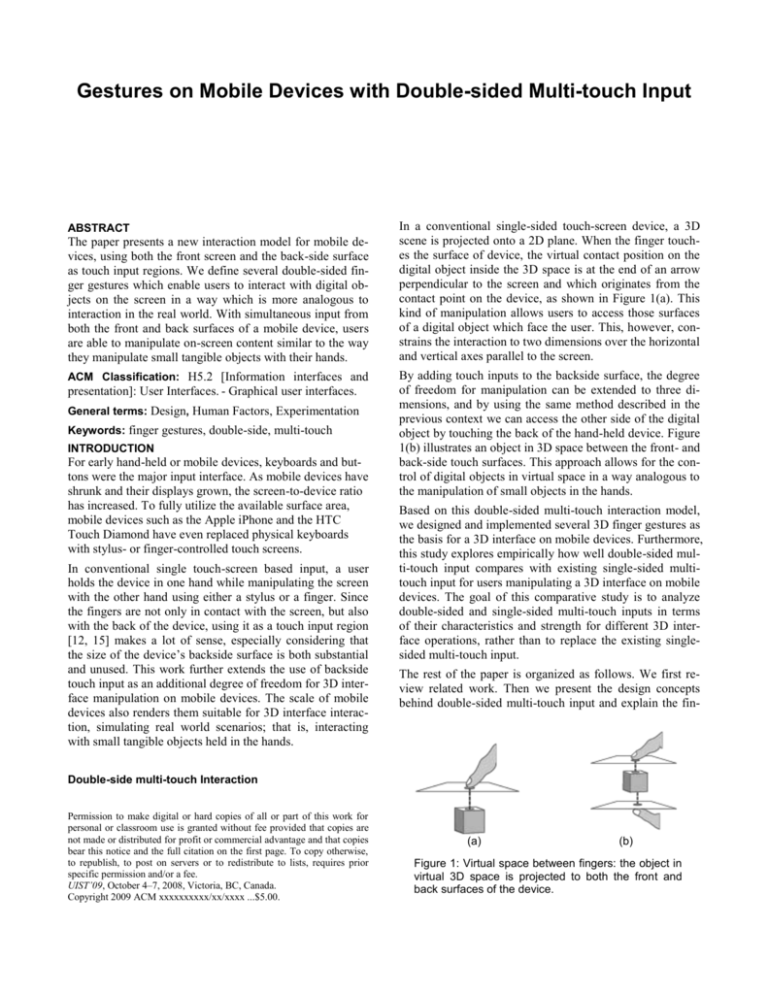

Gestures on Mobile Devices with Double-sided Multi-touch Input

ABSTRACT
The paper presents a new interaction model for mobile devices, using both the front screen and the back-side surface as touch input regions. We define several double-sided finger gestures which enable users to interact with digital objects on the screen in a way which is more analogous to interaction in the real world. With simultaneous input from both the front and back surfaces of a mobile device, users are able to manipulate on-screen content similar to the way they manipulate small tangible objects with their hands.

ACM Classification: H5.2 [Information interfaces and presentation]: User Interfaces - Graphical user interfaces.
General terms: Design, Human Factors, Experimentation
Keywords: finger gestures, double-side, multi-touch

INTRODUCTION
For early hand-held or mobile devices, keyboards and buttons were the major input interface. As mobile devices have shrunk and their displays grown, the screen-to-device ratio has increased. To fully utilize the available surface area, mobile devices such as the Apple iPhone and the HTC Touch Diamond have even replaced physical keyboards with stylus- or finger-controlled touch screens. In conventional single touch-screen based input, a user holds the device in one hand while manipulating the screen with the other hand using either a stylus or a finger. Since the fingers are in contact not only with the screen but also with the back of the device, using the back as a touch input region [12, 15] is a natural step, especially considering that the device's backside surface is both substantial in size and unused. This work further extends the use of backside touch input as an additional degree of freedom for 3D interface manipulation on mobile devices. The scale of mobile devices also renders them suitable for 3D interface interaction that simulates a real-world scenario: interacting with small tangible objects held in the hands.
In a conventional single-sided touch-screen device, a 3D scene is projected onto a 2D plane. When the finger touches the surface of the device, the virtual contact position on the digital object inside the 3D space lies at the end of a ray that is perpendicular to the screen and originates at the contact point on the device, as shown in Figure 1(a). This kind of manipulation allows users to access those surfaces of a digital object which face the user. This, however, constrains the interaction to two dimensions over the horizontal and vertical axes parallel to the screen. By adding touch inputs to the backside surface, the degrees of freedom for manipulation can be extended to three dimensions: applying the same perpendicular projection from the back surface lets us access the other side of the digital object by touching the back of the hand-held device. Figure 1(b) illustrates an object in 3D space between the front- and back-side touch surfaces. This approach allows for the control of digital objects in virtual space in a way analogous to the manipulation of small objects in the hands. Based on this double-sided multi-touch interaction model, we designed and implemented several 3D finger gestures as the basis for a 3D interface on mobile devices. Furthermore, this study explores empirically how well double-sided multi-touch input compares with existing single-sided multi-touch input for users manipulating a 3D interface on mobile devices. The goal of this comparative study is to analyze double-sided and single-sided multi-touch inputs in terms of their characteristics and strengths for different 3D interface operations, rather than to replace the existing single-sided multi-touch input. The rest of the paper is organized as follows. We first review related work.
Then we present the design concepts behind double-sided multi-touch input and explain the finger gestures to be used for 3D interface manipulation. Next, we describe a user study comparing the speed of double-sided and single-sided multi-touch input, and users' subjective perceptions thereof. We discuss the implications of this study and suggest how to improve multi-touch input interaction. Finally, we draw conclusions and describe future work.

(a) (b)
Figure 1: Virtual space between fingers: the object in virtual 3D space is projected to both the front and back surfaces of the device.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. UIST’09, October 4–7, 2008, Victoria, BC, Canada. Copyright 2009 ACM xxxxxxxxxx/xx/xxxx ...$5.00.

RELATED WORK
Unimanual and Bimanual
Forlines et al. [6] conducted an experiment comparing unimanual and bimanual input with both direct-touch and mouse input. Their results show that users benefit from direct-touch input in bimanual tasks. A study by Moscovich and Hughes [10] reveals that two-handed multi-touch manipulation is better than one-handed multi-touch in object manipulation tasks, but only when there is a clear correspondence between fingers and control points. Leganchuk et al. [8] conducted experiments to validate the advantage of bimanual techniques over unimanual ones. Kabbash et al. [7] studied the “asymmetric dependent” bimanual manipulation technique, in which the task of one hand depends on the task of the other hand, and showed that if designed appropriately, two-handed interaction is better than one-handed interaction.
Precision pointing using touch input
In addition to two well-known techniques, Zoom-Pointing and Take-Off, the high precision touch screen interaction project [1] proposes two complementary methods: CrossKeys and Precision-Handle. The former uses virtual keys to move a cursor with crosshairs, while the latter amplifies finger movement with an analog handle. Their work improves pointing interaction at the pixel level but encounters difficulties when targets are near the edge of the screen. Benko et al. [5] developed a technique called dual-finger selection that enhances selection precision on a multi-touch screen. The technique achieves pixel-level targeting by dynamically adjusting the control-display ratio with a secondary finger while the primary finger moves the cursor.

Back side input
Under the table interaction [16] combines two touch surfaces in one table. Since users cannot see the touch points on the underside of the table, the authors propose using visual feedback on the topside screen to show those touch points, which improves touch precision on the underside. HybridTouch [13] expands the interaction surface of a mobile device to its backside. This is done by attaching a single-touch touch pad to the backside of the device, enabling the non-dominant hand to perform document scrolling on the backside touch pad. LucidTouch [15] develops pseudo-transparency for a mobile device's backside interaction: a camera extended from the device's backside captures the locations of the fingers operating on the backside, and these fingers are shown on the front screen. Wobbrock et al. [18] also conducted a series of experiments to compare the performance of the index finger and thumb on the front and the rear sides of a mobile device. Baudisch et al. [4] created a device called nanoTouch which enables direct-touch input on the backside of very small devices, using the shift technique to solve the “fat finger” problem.
Gesture recognition
Wu et al. [20] articulate a set of design principles for constructing multi-hand gestures on direct-touch surfaces in a systematic and extensible manner, allowing gestures with the same interaction vocabulary to be constructed using different semantic definitions of the same touch data. Wobbrock et al. [19] present an approach to designing tabletop gestures which relies on eliciting gestures from users instead of employing gestures created by system developers. Oka et al. [11] proposed a method to track users' fingertip trajectories and presented an algorithm to recognize multi-finger symbolic gestures.

3D space interaction
Wilson et al. [17] proposed the idea of proxy objects to enable the physically realistic reaction of digital objects in the 3D space inside a table surface. Hancock et al. [9] explored 3D interaction involving the rotation and translation of digital objects on a tabletop surface with limited depth, and compared user performance in tasks using one-, two- and three-touch techniques respectively.

Our work emphasizes direct-touch finger gestures that involve simultaneously touching both sides of a mobile device. While these gestures may be applied to various applications on mobile devices, we focus here on 3D object interaction via double-sided input manipulation and on how well it compares with single-sided input for the manipulation of a 3D interface on mobile devices.

DOUBLE-SIDE MULTI-TOUCH INTERACTION
Although multi-touch input enables document scrolling and zooming, manipulation is constrained to two dimensions over the horizontal or vertical planes of a mobile device's 2D touch screen. By adding touch inputs from the device's backside, the degrees of freedom for manipulation can be extended to a pseudo third dimension.
This 3D space concept follows the under-the-table interaction work [16], in which the virtual 3D space shown in the device's display is a “fixed volume, sandwiched” between the front and the back surfaces of the device. We thus extend this table-based 3D concept to our double-sided multi-touch interaction model on a mobile device, and create a new set of touch gestures for manipulating 3D objects.

Hardware Prototype
A double-sided multi-touch device prototype was created as shown in Figure 2. This was accomplished with two iPod touch devices attached back-to-back, so that the backside iPod touch became the backside multi-touch pad for the device. Each iPod touch had a 3.5 inch, 320x480px capacitive touch screen, and supported up to five simultaneous touch points. The width, height, depth, and weight of each device were 62mm, 110mm, 20mm, and 230g respectively. The touch input signals of the backside iPod touch device, including the locations of the touch points, were transmitted to the front side iPod touch device through an ad hoc WiFi connection. Note that the iPod touch device we used at the time supported at most five simultaneous touch points: when a sixth finger touched the screen, all touch events were cancelled. We have found that five touch points are sufficient to implement our double-sided, multi-finger touch gestures.

Previous research on back-of-device interaction has shown that users lack visual feedback indicating the positions of their fingers on the back side of the screen [15]. In our work, we likewise found that it was difficult to touch the target point on the back side without visual feedback. As a visual aid, we added green dots to indicate where the backside fingers are touching. These dots show whether the backside fingers are in the right location to perform gestures such as grabbing or flipping. They correspond to the cursor on personal computers; targets are not occluded because each dot is only one pixel.
Double-side multi-finger touch gestures
In traditional single-sided touch interaction, a 3D object is manipulated by touching one face of the object. In contrast, manipulating a real-world object in a physical 3D space involves a rich set of multi-finger actions such as grabbing, waving, pushing, or flipping the target object. If the object is soft or elastic, it can also be stretched or kneaded into different shapes. Based on the double-sided multi-touch interaction model, we designed several double-sided multi-finger touch gestures similar to those used when manipulating 3D objects in the physical world.

Figure 2: Prototype of our double-sided multi-touch input mobile device. The locations of the backside touch points are displayed to provide visual feedback.

A gesture is a sequence of interactions. Baudel and Beaudouin-Lafon [3] proposed a model for designing gestures where each command is divided into phases from an initialization phase to an ending phase. In our model, each gesture possesses three states: begin, dynamic, and end. The begin state in some situations is a common state shared by different gestures: the identity of the gesture is determined according to the fingers' movement sequence. Once a gesture is recognized, it enters the dynamic state, during which the begin states of other gestures are not triggered until the current gesture ends. Figure 3 shows the order of the three gesture states. Here we present five sample double-sided multi-touch gestures used in 3D interface operations: Grab, Drag, Flip, Push, and Stretch.

Figure 3: A sequence of three gesture states (begin, dynamic, end). The begin state may correspond to more than one gesture.

Grab
In the real world, grabbing an object (for example, a coin or a card) by hand involves using at least two fingers to apply opposing pressure on two or more surfaces of the target.
Mapping this physical grabbing action onto our double-sided multi-touch input interface yields the Grab gesture, which is triggered by two fingers touching the same target object from opposite sides of the device. If the distance between the two touch points on opposing sides is less than a predefined threshold, the Grab gesture enters its begin state and the two touch points are recorded for further recognition of other gestures. In our model, the Grab gesture includes the begin and end states but not the dynamic state. When we want to rotate or drag a small object, we first grab it; hence the action following a Grab depends on the relative movement of the fingers. Since the touch points on the two sides do not always make contact with the device at the same time, the Grab gesture enters its begin state whenever the two touch points are close enough: the order of and the delay between the two touch events do not affect the Grab begin state. The Grab gesture enters the end state when either finger or both fingers leave the surface of the device. As shown in Figure 4, $T_f$ is the touch point on the front side and $T_b$ is the touch point on the back side; $L$ is the distance between $T_f$ and $T_b$. When $L$ is short enough and both $T_f$ and $T_b$ are in the display region to which the object is projected, the Grab gesture is triggered and the object is grabbed by the two fingers. Figures 4 to 8 are composite photos that show the relative positions of the backside fingers.

Figure 4: The Grab gesture is triggered when the distance between the two touch points on opposing sides is less than a predefined threshold.

Drag
In the real world, dragging a small object by hand involves first grabbing the object with opposing fingers and then moving the object by sliding the two fingers together in a single target direction. Mapping the physical dragging action onto our model yields the Drag gesture, which starts in the same begin state as the Grab gesture. As Figure 5 shows, when the fingers move, $\overrightarrow{T_f T_f'}$ represents the vector starting from the initial touch point $T_f$ and ending at the new touch point $T_f'$, and $\overrightarrow{T_b T_b'}$ is the corresponding backside vector. Let $F$ be the inner product of $\overrightarrow{T_f T_f'}$ and $\overrightarrow{T_b T_b'}$; the sign of $F$ determines whether the Drag or the Flip gesture is triggered. If $F$ is positive, the gesture is recognized as Drag because the two fingers are sliding in the same direction: the target object thus moves along $\overrightarrow{T_f T_f'}$. If either finger leaves the surface, the Drag gesture ends so the system can wait for other events to trigger the next gesture's begin state.

Figure 5: The Drag gesture. Touch points $T_f$ and $T_b$ trigger the begin state. $T_f'$ and $T_b'$ are the touch points after the fingers move. Let $F = \overrightarrow{T_f T_f'} \cdot \overrightarrow{T_b T_b'}$; if $F$ is positive, then the Drag gesture enters the dynamic state and the object moves along $\overrightarrow{T_f T_f'}$.

Flip
In the real world, flipping a small object by hand is similar to dragging: we first grab the object and then flip it with two fingers sliding in opposite directions. Mapping the physical flip action onto our model yields the Flip gesture, which also enters the same begin state as the Grab gesture. As shown in Figure 6, the definitions of $\overrightarrow{T_f T_f'}$, $\overrightarrow{T_b T_b'}$, and $F$ are the same as in the Drag gesture. The only difference is that during the Flip gesture, the opposing directions of $\overrightarrow{T_f T_f'}$ and $\overrightarrow{T_b T_b'}$ cause $F$ to be negative. Once the Flip gesture enters its dynamic state, $\overrightarrow{T_f T_f'}$ acts as the torque exerted on the object, forcing it to rotate about the axis that is perpendicular to $\overrightarrow{T_f T_f'}$ and passes through the center of the object. As with the Drag gesture, when either finger or both fingers leave the surface, the Flip gesture enters the end state and the object stops rotating. As the Drag and Flip gestures cannot occur at the same time, the rotation and translation of the object are independent of each other.

Figure 6: The Flip gesture. Let $F = \overrightarrow{T_f T_f'} \cdot \overrightarrow{T_b T_b'}$; if $F$ is negative, the Flip gesture enters the dynamic state and the object rotates about the axis that is perpendicular to $\overrightarrow{T_f T_f'}$ and passes through the center of the object.

Push
In the real world, when we exert force on an object to push it, our hands maintain contact with a certain point on the object. Correspondingly, we define the Push gesture as the gesture that moves the digital object along the axis perpendicular to the touch screen. When the user pushes the object by touching the backside screen, the object moves toward the user. In our model, the begin state of the Push gesture is triggered by tapping the surface twice with two or more fingers. The gesture enters the dynamic state while the fingers keep touching the same position, and the object moves in the direction the fingers are pushing. The more fingers on the object, the faster it moves. When fewer than two fingers are touching the object, the Push gesture ends. Figure 7 indicates how this gesture works.

Figure 7: The Push gesture. Both touch points are on the back side. The object moves toward the user while the fingers keep touching it; the velocity varies according to the number of touching fingers.

Stretch
In the real world, stretching an elastic body by hand involves first grabbing the object at several positions and then applying forces at these positions to stretch or knead it. Mapping this physical stretch action onto a double-sided multi-touch input device yields the Stretch gesture, which enters the begin state when two Grab gestures begin at the same time. When the fingers move, the Stretch gesture enters the dynamic state and the size of the object varies according to the change in length of $\overrightarrow{T_{f1}' T_{f2}'}$. The gesture ends if any of the four fingers leaves the surface.
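The recognition rules for Grab, Drag, Flip, and Stretch described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the prototype's implementation: the function names, the 40-pixel threshold, and the choice of a length ratio as the scale factor are assumptions, and the sketch assumes that backside touch points have already been converted into the front screen's coordinate system. The check that both touch points fall inside the object's projected region is omitted.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

# Assumed value; the paper only speaks of a "predefined threshold".
GRAB_THRESHOLD_PX = 40.0


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two touch points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def is_grab(t_f: Point, t_b: Point, threshold: float = GRAB_THRESHOLD_PX) -> bool:
    """Grab begins when the front and back touch points are closer than the threshold."""
    return distance(t_f, t_b) < threshold


def classify_after_grab(t_f: Point, t_f_new: Point, t_b: Point, t_b_new: Point) -> str:
    """Use the sign of F = (T_f T_f') . (T_b T_b') to choose between Drag and Flip."""
    v_f = (t_f_new[0] - t_f[0], t_f_new[1] - t_f[1])  # front displacement
    v_b = (t_b_new[0] - t_b[0], t_b_new[1] - t_b[1])  # back displacement
    f = v_f[0] * v_b[0] + v_f[1] * v_b[1]
    if f > 0:
        return "drag"  # fingers slide in the same direction
    if f < 0:
        return "flip"  # fingers slide in opposite directions
    return "grab"      # no decisive movement yet; stay in the shared begin state


def stretch_scale(t_f1: Point, t_f2: Point, t_f1_new: Point, t_f2_new: Point) -> float:
    """Assumed scale rule: ratio of the new to the old distance between the two front touch points."""
    before = distance(t_f1, t_f2)
    if before == 0:
        raise ValueError("front touch points coincide")
    return distance(t_f1_new, t_f2_new) / before
```

Returning "grab" when F is zero mirrors the idea that Grab is the shared begin state: the recognizer simply keeps waiting until the finger movement decides between Drag and Flip.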
Figure 8 illustrates the Stretch gesture.

Figure 8: The Stretch gesture. Indices 1 and 2 denote the two hands. Let $L = \overrightarrow{T_{f1}' T_{f2}'}$; the object enlarges or shrinks as $|L|$ increases or decreases.

USER EVALUATION
To understand how well users are able to manipulate the 3D interface with double-sided multi-touch interaction on mobile devices, we conducted a user study that compares double-sided and single-sided multi-touch input in terms of speed and the subjective preferences of the users.

Participants
Fourteen students (12 males, 2 females) from our university were recruited as participants in the study. Half of them had experience using touch input on mobile devices; the other seven had never used any touch input interface on a mobile device. One participant was left-handed. Ages ranged from 19 to 22.

Apparatus
The experiment was performed on a single-sided multi-touch input device (a single Apple iPod touch device) and on a double-sided multi-touch input device (two Apple iPod touch devices attached back-to-back as shown in Figure 2). To assess the ease with which participants were able to use single-sided and double-sided finger gestures, several 3D interface tasks were designed. Test programs automatically logged the task completion times.

Procedure and design
Tasks were designed to test each gesture individually. Each task had two versions that differed only in the input interface: one for the single-sided input device and the other for the double-sided input device. To counterbalance the order of the tasks, half of the participants started with the single-sided tasks and ended with the double-sided tasks, while the other half started with the double-sided tasks and ended with the single-sided tasks. The primary measure was the task completion time. Additional measures included gesture dynamic time, which is defined as the time between the gesture begin and end states depicted in Figure 3.
Gesture dynamic time can be used to derive the non-gesture time, during which users are thinking about what gesture to perform. There were a total of six tasks. The first five tasks tested individual gestures and the last task tested a combination of gestures. Ten rounds were performed of each task that required only one gesture, and five rounds of the combination task. Each participant needed about 30 minutes to finish all twelve tasks (six tasks in two versions each), after which they filled out a questionnaire about handedness and about their experience using the prototype.

Tasks
Task 1 - Select
Nine squares of the same size form a 3x3 matrix on screen and one of them is highlighted. When the user selects the highlighted square, that round's task is completed. In the next round, the matrix is randomly rearranged. In the single-sided input version, the user taps twice to select a target square, and in the double-sided input version, the user selects the square with the Grab gesture.

Task 2 - Move object in xy-plane
Four corner squares are labeled with index numbers 1 to 4, and a moveable object is in the middle of the screen. The user moves the object in the xy-plane to each of the four corner squares in order from square 1 to 4 to complete the round's task. In the single-sided version, the user first selects the object by tapping it twice and then slides a finger to move the object to the target position. For double-sided input, the user moves the object using the Drag gesture.

Task 3 - Rotate
A die lies in the middle of the screen, with an arrow beside it indicating the assigned rotation direction. In each round the user rotates the die from one face to another. In the single-sided input version, users rotate the die by sliding two fingers in the same direction, while in the double-sided version the die is rotated using the Flip gesture.

Task 4 - Move object along z-axis
Two frames aligned along the z-axis indicate the start and end positions.
A cube lying between the two frames is initially at the position of one frame: in each round the user's task is to move it to the position of the other frame. The gesture to move the object along the z-axis in the single-sided input version is expanding/shrinking as shown in Figure 9(d), and the corresponding gesture in the double-sided version is Push.

Task 5 - Change object size
A square frame surrounds a concentric cube. The inner length of the frame is larger than the side of the cube. In each round the user must modify the size of the frame or the cube so that the cube fits the inner length of the frame. In the single-sided input version, the user selects the target and then enlarges or reduces its size with the expanding/shrinking gesture, using the index finger and thumb. In the double-sided version, the target object size is changed using the Stretch gesture.

Task 6 - Combinational operation
In this task, a die and a cubic frame are in different positions inside a 3D virtual space. The position of the cubic frame is constant during a round, and its size is different from that of the die. To complete the round's task, there are three requirements: (1) move the die into the cubic frame, (2) adjust the size of the die to fit the frame, and (3) rotate the die so that the specified number faces the user. There is no constraint as to which requirement should be met first, so the user may manipulate the die in whatever order he or she wants. In this task the user may also move the camera viewport to get a better view of the 3D space. The gestures used to move the camera along the xy-plane and along the z-axis are the same as those for moving an object in the single-sided input version. In the single-sided input version, since the expanding/shrinking and single-finger-sliding gestures map to more than one action, the operation is separated into three modes: scale mode, object move mode and camera move mode. The user switches among these three modes using correspondingly labeled buttons.
Figure 9 shows the six tasks and the corresponding gestures. Single-sided gestures are shown to the left of the double-sided gestures.

Figure 9: Sample tasks and corresponding gestures in the single-sided (left) and double-sided (right) versions. Panels (a) to (f) are tasks 1 to 6 respectively. Task 6 of each version involves five gestures.

Tasks                      Single-Side      Double-Side
select                     0.81 (0.18)      1.05 (0.40)
move object in xy-plane    3.13 (0.64)      4.40 (1.47)
rotate                     1.34 (0.37)      1.34 (0.39)
move object in z-axis      11.61 (4.42)     11.44 (1.98)
change size                6.68 (1.35)      5.84 (2.37)
combinational operation    48.67 (23.02)    58.93 (12.34)

Table 1: Average task completion time (and standard deviations) in a round in seconds.

RESULTS AND OBSERVATIONS
Task completion times
Table 1 shows the 14 participants' average completion times for each task using single-sided and double-sided input devices. Each participant performed tasks one to five 10 times each. Because it took longer, each participant performed task six 5 times. We analyzed the completion times using ANOVA with Greenhouse-Geisser correction. Our findings are as follows. For task one (selection), there was a borderline significant difference (F(1,13) = 5.086, p = 0.042): the average task completion time using single-sided input (0.81 seconds) was 23% faster than the average task completion time using double-sided input (1.05 seconds). For task two (move object in the xy-plane), there was a significant difference (F(1,13) = 14.565, p = 0.002): the time using single-sided input (3.13 seconds) was 29% faster than that using double-sided input (4.40 seconds). For tasks three to six (rotate, move object along the z-axis, change object size, combinational operation), there was no significant difference between single-sided and double-sided input: task three (F(1,13) = 0.194, p = 0.880), task four (F(1,13) = 0.000, p = 0.991), task five (F(1,13) = 1.148, p = 0.303), and task six (F(1,13) = 2.524, p = 0.136).
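The percentages quoted above can be checked directly from the means in Table 1. The short Python sketch below is only an illustration: the helper names are hypothetical, and it assumes that the last three value pairs of Table 1 belong, in order, to the z-axis, change-size, and combinational-operation rows. "Percent faster" is taken to mean the time saved relative to the slower condition, which is the reading that reproduces the 23% and 29% figures.

```python
# Mean completion time per round in seconds: (single-sided, double-sided).
# Values copied from Table 1; the row assignment of the last three pairs is assumed.
TABLE_1 = {
    "select": (0.81, 1.05),
    "move object in xy-plane": (3.13, 4.40),
    "rotate": (1.34, 1.34),
    "move object in z-axis": (11.61, 11.44),
    "change size": (6.68, 5.84),
    "combinational operation": (48.67, 58.93),
}


def time_ratio(single: float, double: float) -> float:
    """Double-sided time expressed as a fraction of single-sided time."""
    return double / single


def percent_faster(fast: float, slow: float) -> float:
    """Time saved by the faster condition, relative to the slower one, in percent."""
    return (slow - fast) / slow * 100.0


if __name__ == "__main__":
    for task, (single, double) in TABLE_1.items():
        ratio = time_ratio(single, double) * 100.0
        print(f"{task:26s} double/single = {ratio:6.1f}%")
```

A ratio above 100% means the double-sided version took longer on average; a ratio below 100%, as for changing the object size, means it was faster.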
The average task completion time ratio for each task is shown in Figure 10. Since task completion time is task-dependent, the time ratios between single-sided and double-sided input in the same task show the relative differences. According to Figure 10, single-sided gestures are performed faster in actions which involve accessing only one side of a digital object, such as selecting and moving in the xy-plane. For actions with a stronger 3D metaphor, there is no significant difference in task completion time between single-sided and double-sided gesture input.

[Figure 10 bar labels: 140%, 129%, 100%, 99%, 121%, 87%]

Non-gesture time ratio
In the combinational operation task, participants performed actions through five gestures in arbitrary sequences. Table 3 shows the average non-gesture time of the combinational operation for the participants. Non-gesture time measures the time when participants were not performing any gestures or when their fingers were off the device's input surfaces. A high ratio of non-gesture time to task completion time suggests that participants spent a larger share of the task thinking about which gestures to use rather than performing the actual finger gestures. Our result in Table 3 shows that the non-gesture ratio for single-sided input (52%) is 23% larger than that of the double-sided version (42%). This difference suggests that participants needed more time to translate 3D object manipulation into single-sided gestures than into double-sided gestures.

               Non-gesture time    Task complete time    Non-gesture ratio
Single-side    126.5s              242.3s                52% (8%)
Double-side    123.7s              294.6s                42% (6%)

Table 3: Average non-gesture time ratios (and standard deviations) of the combinational operation, measured in five rounds over 14 participants.

Observations
In tasks one and two, users were able to select and move objects in the xy-plane more quickly using single-sided input.
While performing the Grab or Drag gesture, users alternately switched their holding and operating hands, because when the object was near one edge of the screen, the hand on the other edge was unable to reach it. In tasks three and four, the differences between the two input methods were not significant. As for changing an object's size, the Stretch gesture worked well and was faster on average than the single-sided version, although this difference was not statistically significant. The overall results showed that some actions were suitable for single-sided manipulation while some were done more quickly with double-sided input. Other than the combination task, each task required only one gesture: participants quickly adapted to these tasks. However, in the combination task, participants sometimes stopped manipulating the object to figure out what gesture they should use to perform the required action.

Figure 10: Task completion time ratio between double-sided and single-sided input gestures.

In the single-sided input version, the user held the device in his or her non-dominant hand. The screen was parallel to the user's palm in a fixed position and the user performed gestures using his or her dominant hand. In contrast, when using the double-sided input device, the user grasped the edge of the device so the backside surface would be accessible to the fingers. Double-sided gestures such as Grab, Drag, Flip, and Push were done with only one hand. During the experiments, when performing the Stretch gesture, users typically adjusted their hand posture in order to grab the target object bimanually. While stretching the object, some users had trouble holding the device in a fixed position relative to their hands. Since the length of the thumb was about half of the screen height, if a small target object deviated from the center of the display region, the user was sometimes forced to move his or her hands or rotate the device so that both hands could reach the small region to perform the Stretch gesture.
This repositioning may have distracted the user's attention from the screen. There was also a risk of the device slipping out of the user's hands if not held carefully. In single-sided input manipulation, the users always used their index and middle fingers to do the selecting. For double-sided input, the users held the device bimanually with their thumbs in front of the screen and the other eight fingers on the back side; therefore the selection was done by the thumbs, which occluded many pixels on the screen. The contact area of the fingers was affected by the orientation of the thumbs with respect to the screen, thus varying the positions of the touch points that the screens could sense.

Subjective agreements
Table 2 shows the average agreement ratings for various statements. The result for statement 1 clearly reveals that participants preferred to use only one finger when moving an object in the xy-plane. Although the task completion time results indicated that some actions were completed faster using single-sided input, the agreement ratings revealed that almost all of the participants felt that double-sided interaction with gestures more closely approximated real-world interaction (statement 5). The result for statement 3 reflected that users found double-sided gestures more intuitive to learn than single-sided gestures. The result for statement 8 indicated that double-sided gestures were more suitable for bimanual than unimanual operation. In terms of ergonomics, the relative thickness of the hand-held device had less of an impact on single-sided input interaction. However, participants remarked that if the prototype had been as thin as a normal mobile device, the double-sided input method would have been more comfortable to use. All of the participants had had experience using touch screen input devices like automated teller machines, so it was easier for them to learn the single-sided gestures.
None of the participants had ever used back-of-device input before these experiments, and they spent more time getting used to this technique.

| Statement | Single-Side | Double-Side |
|---|---|---|
| 1. It is easy to move the object in x-y-plane. | 4.6 (0.6) | 3.6 (0.9) |
| 2. This input method is easy to use. | 3.9 (0.7) | 3.4 (0.8) |
| 3. These gestures are intuitive for me to learn. | 3.6 (1.0) | 3.4 (0.9) |
| 4. It is easy to change scale of the object. | 3.4 (1.1) | 3.9 (0.5) |
| 5. It is like real world interaction. | 3.3 (0.8) | 4.0 (0.7) |
| 6. It is easy to move the object along z-axis. | 3.1 (0.9) | 4.1 (1.0) |
| 7. It is easy to flip the object. | 3.1 (1.2) | 3.3 (1.2) |
| 8. It is easy to use by both hands. | 2.7 (1.0) | 3.5 (1.0) |

Table 2: Average agreement ratings. Data are sorted in descending order of the single-side rating, on a 5-point Likert scale from 1 (strongly disagree) to 5 (strongly agree). Numbers in parentheses represent standard deviations.

DISCUSSION

Based on the results and observations from the user evaluation, we identify several issues in designing input methods for touch-input mobile devices.

The first is posture when holding the device. For gestures which require that two hands simultaneously touch the front and back sides, users fixed the device within their palms. This posture constrained their fingers to access only those regions near the center of the screen, and users may need to be careful not to let the device fall out of their hands. Actions that involve accessing content near the edges of the screen should avoid using these kinds of gestures.

Another issue with double-sided input devices is unconscious touch points. Users usually hold mobile devices fixed with two or more fingers touching the device backside. The system should identify such “fixing” or unconscious touch points and ignore them so they do not affect the recognition of intentional gestures.
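One way to ignore the “fixing” touch points described above is to treat any back-side contact that stays nearly still for some time as part of the grip. The following is only a minimal sketch under assumptions that are not from the study: the `TouchSample` record, the `GripFilter` class, and the `hold_seconds` and `max_drift` thresholds are all hypothetical, and front-side touches are assumed to be always intentional.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class TouchSample:
    """One reported touch position (hypothetical record, not the prototype's API)."""
    touch_id: int
    surface: str  # "front" or "back"
    x: float      # position in screen units
    y: float
    t: float      # timestamp in seconds


class GripFilter:
    """Flags back-side touches that rest in place as grip contacts to ignore."""

    def __init__(self, hold_seconds=0.5, max_drift=5.0):
        # Both thresholds are illustrative guesses, not measured values.
        self.hold_seconds = hold_seconds
        self.max_drift = max_drift
        self._anchor = {}  # touch_id -> (x, y, t) where the touch came to rest

    def update(self, sample):
        """Return True if the sample should be passed on to gesture recognition."""
        if sample.surface != "back":
            return True  # assumption: front-side touches are always intentional
        anchor = self._anchor.get(sample.touch_id)
        moved = anchor is None or hypot(
            sample.x - anchor[0], sample.y - anchor[1]
        ) > self.max_drift
        if moved:
            # New touch, or it left its resting spot: restart the rest timer.
            self._anchor[sample.touch_id] = (sample.x, sample.y, sample.t)
            return True
        # Still resting: ignore it once it has been still long enough.
        return sample.t - anchor[2] < self.hold_seconds

    def release(self, touch_id):
        """Forget a touch when the finger lifts."""
        self._anchor.pop(touch_id, None)
```

In this sketch a resting finger becomes intentional again as soon as it moves farther than `max_drift`, so a finger used for gripping can still start a Drag or Push gesture. A purely time-based rule like this would also suppress a deliberate long press on the back, which is one reason additional cues about how the device is held could be useful.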
Sensors such as accelerometers may aid in detecting how the device is being held, so the system can decide which touch points to filter out when recognizing gestures.

Regarding dimensions of operation, for actions such as object selection and movement in the xy-plane, users need to access only one surface of the object, and the movements are in only two dimensions. For these kinds of actions, single-sided gestures are more suitable and can be performed faster because of their simplicity. For actions that involve accessing the backsides of objects or motion along the z-axis, double-sided gestures more closely approximate the interaction between objects and hands in the real world. Combining the merits of both single-sided and double-sided gestures in a hybrid input method may help users.

CONCLUSION & FUTURE WORK

We present novel finger gestures that use both the front and back sides of a mobile device for manipulating its 3D interface. Algorithms to recognize these finger gestures were defined. We conducted a user study that quantitatively and subjectively compared user behavior when manipulating a mobile device’s 3D interface with conventional single-sided and with double-sided multi-touch input gestures, and identified which kinds of operations suit double-sided input, based on their characteristics.

In this paper, we focused on digital 3D space; we plan next to apply this double-sided multi-touch technique to more commonly used applications such as text input and precision selection on a small-screen device. The prototype, a combination of two iPod touches, is cumbersome as mobile devices go: we plan to implement this technique on a lighter, thinner device.

REFERENCES

1. Albinsson, P.-A. and Zhai, S. High precision touch screen interaction. In Proceedings of CHI, pp. 105–112, New York, NY, USA, 2003. ACM Press.
2. Balakrishnan, R. and Hinckley, K. Symmetric bimanual interaction. In Proceedings of CHI, pp. 33–40, New York, NY, USA, 2000. ACM Press.
3. Baudel, T. and Beaudouin-Lafon, M. (1993). Charade: remote control of objects using free-hand gestures. Communications of ACM, 36(7).
4. Baudisch, P. and Chu, G. Back-of-device Interaction allows creating very small touch devices. In Proceedings of CHI 2009, Boston, MA, April 4–9, 2009.
5. Benko, H., Wilson, A., and Baudisch, P. Precise Selection Techniques for Multi-Touch Screens. In Proceedings of CHI 2006.
6. Forlines, C., Wigdor, D., Shen, C., Balakrishnan, R. (2007). Direct-Touch vs. Mouse Input for Tabletop Displays. In Proceedings of the 2007 CHI Conference on Human Factors in Computing Systems.
7. Kabbash, P., Buxton, W., & Sellen, A. (1994). Two-handed input in a compound task. ACM CHI.
8. Leganchuk, A., Zhai, S., & Buxton, W. (1998). Manual and cognitive benefits of two-handed input: an experimental study. ACM TOCHI.
9. Hancock, M., Carpendale, S., and Cockburn, A. Shallow-depth 3d interaction: design and evaluation of one-, two- and three-touch techniques. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, April 28–May 03, 2007.
10. Moscovich, T. and Hughes, J. Indirect mappings of multi-touch input using one and two hands. In Proceedings of the twenty-sixth annual SIGCHI Conference on Human Factors in Computing Systems, April 5–10, 2008.
11. Oka, K., Sato, Y., and Koike, H. (2002). Real-time tracking of multiple fingertips and gesture recognition for augmented desk interface systems. In IEEE International Conference on Automatic Face and Gesture Recognition.
12. Schwesig, C., Poupyrev, I., and Mori, E. Gummi: a bendable computer. In Proceedings of CHI 2004. ACM.
13. Sugimoto, M. and Hiroki, K. HybridTouch: an intuitive manipulation technique for PDAs using their front and rear surfaces. In Proceedings of MobileHCI '06.
14. Wexelblat, A. (1995). An approach to natural gesture in virtual environments. ACM TOCHI, 2(3).
15. Wigdor, D., Forlines, C., Baudisch, P., Barnwell, J., Shen, C. LucidTouch: A See-Through Mobile Device. In Proceedings of UIST 2007.
16. Wigdor, D., Leigh, D., Forlines, C., Shipman, S., Barnwell, J., Balakrishnan, R., Shen, C. Under the table interaction. In Proceedings of UIST 2006.
17. Wilson, A. D., Izadi, S., Hilliges, O., Garcia-Mendoza, A., Kirk, D. Bringing physics to the surface. In Proceedings of the 21st ACM Symposium on User Interface Software and Technology (ACM UIST), Monterey, CA, USA, October 19–22, 2008.
18. Wobbrock, J.O., Myers, B.A. and Aung, H.H. (2008). The performance of hand postures in front and back of device interaction for mobile computing. International Journal of Human-Computer Studies 66(12).
19. Wobbrock, J., Morris, M.R., and Wilson, A. User-Defined Gestures for Surface Computing. In Proceedings of CHI 2009, in press.
20. Wu, M., Shen, C., Ryall, K., Forlines, C., Balakrishnan, R. Gesture Registration, Relaxation, and Reuse for Multi-Point Direct-Touch Surfaces. In Proceedings of IEEE TableTop, pp. 185–192, IEEE Computer Society, Los Alamitos, USA (2006).