Solutions to Exercises for Chapter 16 16.1. The question posed in

advertisement

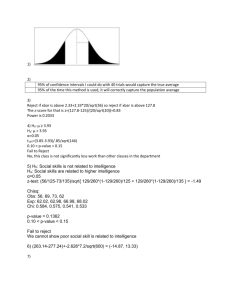

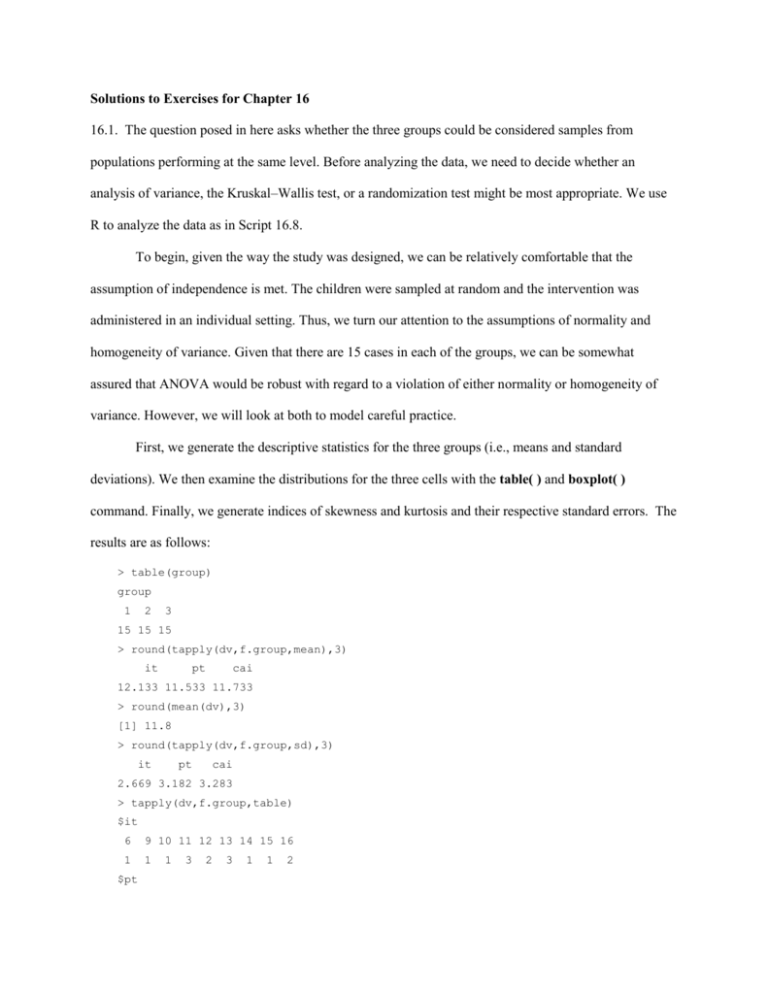

Solutions to Exercises for Chapter 16 16.1. The question posed in here asks whether the three groups could be considered samples from populations performing at the same level. Before analyzing the data, we need to decide whether an analysis of variance, the Kruskal–Wallis test, or a randomization test might be most appropriate. We use R to analyze the data as in Script 16.8. To begin, given the way the study was designed, we can be relatively comfortable that the assumption of independence is met. The children were sampled at random and the intervention was administered in an individual setting. Thus, we turn our attention to the assumptions of normality and homogeneity of variance. Given that there are 15 cases in each of the groups, we can be somewhat assured that ANOVA would be robust with regard to a violation of either normality or homogeneity of variance. However, we will look at both to model careful practice. First, we generate the descriptive statistics for the three groups (i.e., means and standard deviations). We then examine the distributions for the three cells with the table( ) and boxplot( ) command. Finally, we generate indices of skewness and kurtosis and their respective standard errors. The results are as follows: > table(group) group 1 2 3 15 15 15 > round(tapply(dv,f.group,mean),3) it pt cai 12.133 11.533 11.733 > round(mean(dv),3) [1] 11.8 > round(tapply(dv,f.group,sd),3) it pt cai 2.669 3.182 3.283 > tapply(dv,f.group,table) $it 6 9 10 11 12 13 14 15 16 1 1 $pt 1 3 2 3 1 1 2 6 7 9 10 12 13 14 15 16 1 2 1 2 1 4 1 2 1 $cai 5 7 10 11 12 14 15 16 17 1 1 3 2 1 2 1 1 6 8 10 12 14 16 3 it pt cai FIGURE 16.6 Boxplots for Exercise 16.1. In examining the output, we are looking for reasonable means and standard deviations, as well as possible outliers. We will assume that the means and standard deviations are reasonable; in your own work, you will be able to make that determination. The results of the table( ) and the boxplot( ) commands indicate that there might be an outlier at the low end of the first group. At this point, we will consider the issue of normality in a more formal fashion. We will look at skewness, kurtosis, and apply the shapiro.test( ) function: > round(tapply(dv,f.group,skewness),3) it pt cai -0.555 -0.458 -0.286 > round(tapply(dv,f.group,SEsk),3) it pt cai 0.58 0.58 0.58 > round(tapply(dv,f.group,kurtosis),3) it pt cai 0.704 -1.006 -0.044 > round(tapply(dv,f.group,SEku),3) it pt cai 1.121 1.121 1.121 > tapply(dv,f.group,shapiro.test) $it Shapiro-Wilk normality test W = 0.9562, p-value = 0.626 $pt Shapiro-Wilk normality test W = 0.9238, p-value = 0.2199 $cai Shapiro-Wilk normality test W = 0.9566, p-value = 0.6331 Given the measures of skewness and kurtosis, all of them are well within 2 standard errors of zero, suggesting that all three samples are consistent with what you would expect if you were drawing random samples out of normal populations. Furthermore, the results from the shapiro.test( ) function for each group show no evidence of departure from normality. Thus, we would conclude that the data appear to meet the assumption of normality. Finally, we consider the issue of homogeneity of variance using the leveneTest( ) function from the car package. From the descriptive statistics above, you can see that the standard deviations are all about the same: > leveneTest(dv ~ f.group,data=data) Levene's Test for Homogeneity of Variance (center = median) Df F value Pr(>F) group 2 0.2868 0.7521 42 The p-value from the Levene test indicates that the variability appears to be equivalent across the groups. In summary, we do not have any evidence that the assumptions have been violated, and if they have, the violations are not large enough to be problematic. Indeed, when samples sizes exceed 10 and all groups are of the same size, many statisticians do not even bother testing assumptions, as ANOVA is said to be robust. Thus, we conclude that a conventional ANOVA would be the appropriate statistical technique to apply to these data. > oneway.test(dv ~ f.group,var.equal=TRUE) One-way analysis of means data: dv and f.group F = 0.1498, num df = 2, denom df = 42, p-value = 0.8613 The analysis results in an observed F-statistic of .1498 with an associated p-level of .86. As the p-level of the observed test statistic is larger than our level of significance ( = .05), we do not reject the null hypothesis. In conclusion, these data are consistent with the null hypothesis that the three samples come from populations with the same mean. Thus, these three treatments do not seem to have had any differential effects. 16.2. Exercise 16.2 is another three-group study calling for a comparison to determine if the three groups are equivalent with regard to level of “readiness for school.” Keep in mind that the data are fictitious. Given the nature of the study, the children are assumed to come from many different centers. In addition, it was a sampling of all children entering kindergarten, not a sampling of centers from which we further sampled children, and thus we probably do not need to worry about the assumption of independence. Again, we enter the data into R and construct a data frame. We then generate descriptive statistics: > table(group) group 1 2 3 15 11 13 > round(tapply(dv,f.group,mean),3) fd hd nd 19.467 24.818 19.692 > round(mean(dv),3) [1] 21.051 > round(tapply(dv,f.group,sd),3) fd hd nd 5.083 3.894 5.468 Note that the numbers of cases in each of the groups are different, suggesting that we need to look more carefully at the assumptions. Again, we will assume that the means and standard deviations look reasonable. Now, we’ll look at the within-group distributions: > #Examining cell distributions > tapply(dv,f.group,table) $fd 9 13 15 16 17 20 21 22 23 25 27 1 1 1 1 2 3 1 1 1 1 $hd 19 22 23 24 27 29 33 1 2 2 2 2 1 1 $nd 10 13 15 16 18 19 21 23 26 30 1 1 1 1 1 2 1 3 1 1 2 30 25 20 15 10 fd hd nd FIGURE 16.7 Boxplots for Exercise 16.2. Looking at the frequency distributions, we’ll assume that the minimum and maximum values are within range, and there do not appear to be any extreme outliers, which is also supported by the boxplots in Figure 16.7. Looking at the issue of normality, > round(tapply(dv,f.group,skewness),3) fd hd nd -0.307 0.801 0.053 > round(tapply(dv,f.group,SEsk),3) fd hd nd 0.580 0.661 0.616 > round(tapply(dv,f.group,kurtosis),3) fd -0.156 hd nd 0.719 -0.110 > round(tapply(dv,f.group,SEku),3) fd hd nd 1.121 1.279 1.191 > tapply(dv,f.group,shapiro.test) $fd Shapiro-Wilk normality test W = 0.9706, p-value = 0.8662 $hd Shapiro-Wilk normality test W = 0.9392, p-value = 0.5111 $nd Shapiro-Wilk normality test W = 0.9875, p-value = 0.9985 As in Exercise 16.1, all of the measures of skewness and kurtosis are within 2 standard errors of zero, and the results from the shapiro.test( ) applied to each group indicate large p-values for each group, respectively. Thus, we would conclude that the three groups might have come from populations that are normally distributed. Regarding homogeneity of variance, > leveneTest(dv ~ f.group,data=data) Levene's Test for Homogeneity of Variance (center = median) Df F value Pr(>F) group 2 0.6577 0.5242 36 The p-value from Levene’s test gives us reason to believe that the data are consistent with the homogeneity assumption. In conclusion, overall the data appear to be in line with the assumptions for ANOVA; if an assumption has been violated, the degree of violation probably is not sufficient to cause problems. Again, in light of the apparent correspondence of the data with the assumptions, we would recommend using analysis of variance to examine the equality of the three population means: > oneway.test(dv ~ f.group,var.equal=TRUE) One-way analysis of means data: dv and f.group F = 4.4943, num df = 2, denom df = 36, p-value = 0.01810 Thus, we reject the null hypothesis; these results suggest that the three groups are not samples from three populations with the same mean. At this point, we know only that at least one of the three group means is different from one or both of the others; we do not know which group means are different from which other group means. Follow-up procedures that will allow us to make more specific statements will be covered in Chapter 17. 16.3. This exercise is a four-group study calling for a comparison of the groups regarding level. As for the previous two exercises, we read the data into R with the c( ) function and create a data frame. We then generate descriptive statistics including sample sizes, means, and standard deviations: > #Obtaining descriptive statistics > table(group) group 1 2 3 4 10 10 10 10 > round(tapply(dv,f.group,mean),3) ma sc la ss 59.7 54.6 70.1 72.0 > round(mean(dv),3) [1] 64.1 > round(tapply(dv,f.group,sd),3) ma sc la ss 11.823 18.307 17.387 7.717 Although the four samples sizes are all 10, the samples are not very large, so we may be more concerned about the degree to which the data conform to the assumptions for analysis of variance, particularly normality. In addition, the smallest standard deviation (7.72) is less than one-half of the largest standard deviation (18.31), so we may also want to be concerned with the assumption of homogeneity of variance. But before looking at assumptions, we should look at the within-cell distributions: > tapply(dv,f.group,table) $ma 45 46 50 53 58 62 74 75 76 1 1 1 1 2 1 1 1 1 $sc 39 42 43 45 49 55 63 68 99 1 1 2 1 1 1 1 1 $la 51 53 56 63 86 90 91 92 1 1 2 2 1 1 1 1 $ss 65 66 67 70 71 74 76 91 1 2 1 1 1 2 1 1 1 100 90 80 70 60 50 40 ma sc la ss FIGURE 16.8 Boxplots for Exercise 16.3. We’ll assume that the minimum and maximum values are within range, but there appear to be some large outliers in the science and social studies groups. This is confirmed by looking at the boxplots in Figure 16.8. The boxplots also suggest the possibility of nonnormal distributions and nonequivalent variances. Let’s consider the assumptions of normality more carefully: > round(tapply(dv,f.group,skewness),3) ma sc la ss 0.326 1.823 0.350 1.822 > round(tapply(dv,f.group,SEsk),3) ma sc la ss 0.687 0.687 0.687 0.687 > round(tapply(dv,f.group,kurtosis),3) ma -1.435 sc la ss 3.537 -2.084 4.044 > round(tapply(dv,f.group,SEku),3) ma sc la ss 1.334 1.334 1.334 1.334 > tapply(dv,f.group,shapiro.test) $ma Shapiro-Wilk normality test W = 0.896, p-value = 0.1979 $sc Shapiro-Wilk normality test W = 0.7926, p-value = 0.01180 $la Shapiro-Wilk normality test W = 0.8117, p-value = 0.02010 $ss Shapiro-Wilk normality test W = 0.8088, p-value = 0.01853 The skewness and kurtosis values suggest problems with the assumption of normality, particularly with the science and social studies groups. The results from the shapiro.test( ) suggest that only the math groups might be reasonably considered to be from a normal population. In looking at the assumption of homogeneity of variance, > leveneTest(dv ~ f.group,data=data) Levene's Test for Homogeneity of Variance (center = median) Df F value Pr(>F) group 3 1.3997 0.2587 36 The assumption of equivalent variances might be tenable, suggesting that the nonnormality may be the result of the outliers. Thus, we may consider using the Kruskal–Wallis test as an alternative to the conventional ANOVA: > kruskal.test(dv ~ f.group) Kruskal-Wallis rank sum test Kruskal-Wallis chi-squared = 10.3842, df = 3, p-value = 0.01557 Historically, this would have been the only reasonable choice. We obtained an H-statistic of 10.38, with an associated p-level of .0156. Thus, it appears that the observed differences among the groups did not occur by chance. Follow-up techniques to determine which groups are different from which other groups will be presented in Chapter 17. With the computing power available today, we might want to consider one of the resampling/randomization approaches. We look at both bootstrapping and permutations. Using a bootstrap approach, we construct 99,999 bootstrap samples and count the number of samples that equal or exceed the actual SSag: > pvalue [1] 0.0332 With regard to the permutation approach and given 4 groups of 10 cases each, there are over 196,056,000,000,000,000,000 possible arrangements, suggesting that we would be wise to consider the Monte Carlo p-value approach! Thus, we look at a sample of 99,999 permutations of the 40 cases, counting the number of times we equal or exceed the actual SSag: > pvalue [1] 1e-05 Translated, the p-value is .00001. Thus, it would appear that the different teachers of the different academic subjects (math, science, language arts, and social studies) are evaluated differently.