Lecture 4


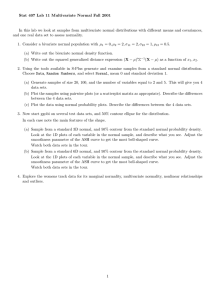

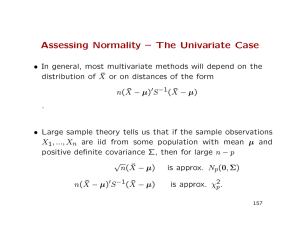

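Sampling from a MVN Distribution
BMTRY 726
1/17/2014

Sample Mean Vector
• We can estimate the mean vector from a sample $\mathbf{X}_1, \mathbf{X}_2, \ldots, \mathbf{X}_n$:
$$\bar{\mathbf{X}} = \frac{1}{n}\left(\mathbf{X}_1 + \mathbf{X}_2 + \cdots + \mathbf{X}_n\right) = \frac{1}{n}\sum_{j=1}^{n}\mathbf{X}_j = \begin{pmatrix} \frac{1}{n}\sum_{j=1}^{n} X_{1j} \\ \frac{1}{n}\sum_{j=1}^{n} X_{2j} \\ \vdots \\ \frac{1}{n}\sum_{j=1}^{n} X_{pj} \end{pmatrix} = \begin{pmatrix} \bar{X}_1 \\ \bar{X}_2 \\ \vdots \\ \bar{X}_p \end{pmatrix}$$
• What are the properties of $\bar{\mathbf{X}}$ when sampling from $N_p(\boldsymbol{\mu}, \boldsymbol{\Sigma})$?
  – It is an unbiased estimate of $\boldsymbol{\mu}$
  – Together with $\mathbf{S}$, it is a sufficient statistic for $(\boldsymbol{\mu}, \boldsymbol{\Sigma})$
  – Its sampling distribution is $\bar{\mathbf{X}} \sim N_p\left(\boldsymbol{\mu}, \frac{1}{n}\boldsymbol{\Sigma}\right)$

Sample Covariance
• The sample covariance matrix for $\mathbf{X}_1, \mathbf{X}_2, \ldots, \mathbf{X}_n$ is
$$\mathbf{S} = \frac{1}{n-1}\sum_{j=1}^{n}(\mathbf{x}_j - \bar{\mathbf{x}})(\mathbf{x}_j - \bar{\mathbf{x}})' = \begin{pmatrix} s_{11} & s_{12} & \cdots & s_{1p} \\ s_{21} & s_{22} & \cdots & s_{2p} \\ \vdots & \vdots & \ddots & \vdots \\ s_{p1} & s_{p2} & \cdots & s_{pp} \end{pmatrix}$$
• Sample variance: $s_{ii} = s_i^2 = \frac{1}{n-1}\sum_{j=1}^{n}(x_{ij} - \bar{x}_i)^2$
• Sample covariance: $s_{ik} = \frac{1}{n-1}\sum_{j=1}^{n}(x_{ij} - \bar{x}_i)(x_{kj} - \bar{x}_k)$
• Like $\bar{\mathbf{X}}$, $\mathbf{S}$ has some nice properties:
  – It is an unbiased estimate of $\boldsymbol{\Sigma}$
  – Together with $\bar{\mathbf{X}}$, it is a sufficient statistic for $(\boldsymbol{\mu}, \boldsymbol{\Sigma})$
  – Under normality, it is independent of $\bar{\mathbf{X}}$
• But what is the sampling distribution of $\mathbf{S}$?

Wishart Distribution
• Given $\mathbf{Z}_1, \mathbf{Z}_2, \ldots, \mathbf{Z}_n \sim \text{NID}_p(\mathbf{0}, \boldsymbol{\Sigma})$, the distribution of $\sum_{j=1}^{n}\mathbf{Z}_j\mathbf{Z}_j'$ is called a Wishart distribution with $n$ degrees of freedom.
• $\mathbf{A} = (n-1)\mathbf{S} = \sum_{j=1}^{n}(\mathbf{x}_j - \bar{\mathbf{x}})(\mathbf{x}_j - \bar{\mathbf{x}})'$ has a Wishart distribution with $n-1$ degrees of freedom.
• The density function is
$$w_{n-1}(\mathbf{A} \mid \boldsymbol{\Sigma}) = \frac{|\mathbf{A}|^{(n-p-2)/2}\, e^{-\operatorname{tr}(\mathbf{A}\boldsymbol{\Sigma}^{-1})/2}}{2^{p(n-1)/2}\,\pi^{p(p-1)/4}\,|\boldsymbol{\Sigma}|^{(n-1)/2}\prod_{i=1}^{p}\Gamma\left(\tfrac{1}{2}(n-i)\right)}$$
where $\mathbf{A}$ and $\boldsymbol{\Sigma}$ are positive definite.

Wishart cont’d
• The Wishart distribution is the multivariate analog of the central chi-squared distribution.
  – If $\mathbf{A}_1 \sim W_q(\mathbf{A}_1 \mid \boldsymbol{\Sigma})$ and $\mathbf{A}_2 \sim W_r(\mathbf{A}_2 \mid \boldsymbol{\Sigma})$ are independent, then $\mathbf{A}_1 + \mathbf{A}_2 \sim W_{q+r}(\mathbf{A}_1 + \mathbf{A}_2 \mid \boldsymbol{\Sigma})$
  – If $\mathbf{A} \sim W_n(\mathbf{A} \mid \boldsymbol{\Sigma})$, then $\mathbf{C}\mathbf{A}\mathbf{C}' \sim W_n(\mathbf{C}\mathbf{A}\mathbf{C}' \mid \mathbf{C}\boldsymbol{\Sigma}\mathbf{C}')$
  – For $\mathbf{A} = (n-1)\mathbf{S}$, the $(i, i)$ element is distributed as $a_{ii} = \sum_{j=1}^{n}(x_{ij} - \bar{x}_i)^2 \sim \sigma_{ii}\chi^2_{n-1}$

Large Sample Behavior
• Let $\mathbf{X}_1, \mathbf{X}_2, \ldots, \mathbf{X}_n$ be a random sample from a population (not necessarily normally distributed) with mean and covariance
$$\boldsymbol{\mu} = \begin{pmatrix} \mu_1 \\ \vdots \\ \mu_p \end{pmatrix}, \qquad \boldsymbol{\Sigma} = \begin{pmatrix} \sigma_{11} & \cdots & \sigma_{1p} \\ \vdots & \ddots & \vdots \\ \sigma_{p1} & \cdots & \sigma_{pp} \end{pmatrix}$$
Then $\bar{\mathbf{X}}$ and $\mathbf{S}$ are consistent estimators for $\boldsymbol{\mu}$ and $\boldsymbol{\Sigma}$.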
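To make the formulas for $\bar{\mathbf{x}}$ and $\mathbf{S}$ concrete, here is a minimal Python sketch. It assumes NumPy is available, and `sample_mean` and `sample_cov` are hypothetical helper names. It forms $\mathbf{A} = (n-1)\mathbf{S}$ from the centered data matrix and then illustrates consistency by sampling from a skewed, non-normal population: $\mathbf{X} = (E_1, E_1 + E_2)'$ with $E_1, E_2$ iid Exponential(1), so $\boldsymbol{\mu} = (1, 2)'$ and $\boldsymbol{\Sigma} = \begin{pmatrix} 1 & 1 \\ 1 & 2 \end{pmatrix}$. The population and seed are arbitrary choices for illustration.

```python
import numpy as np


def sample_mean(X):
    """Sample mean vector: the average of the n rows of an n x p data matrix."""
    X = np.asarray(X, dtype=float)
    return X.sum(axis=0) / X.shape[0]


def sample_cov(X):
    """Unbiased sample covariance S = A / (n - 1)."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    centered = X - sample_mean(X)          # row j is (x_j - x_bar)'
    # centered.T @ centered equals sum_j (x_j - x_bar)(x_j - x_bar)', i.e. A = (n - 1) S
    A = centered.T @ centered
    return A / (n - 1)


if __name__ == "__main__":
    # Illustrative non-normal population (assumption): X = (E1, E1 + E2),
    # E1, E2 iid Exponential(1), so mu = (1, 2)' and Sigma = [[1, 1], [1, 2]].
    rng = np.random.default_rng(726)       # arbitrary seed
    mu = np.array([1.0, 2.0])
    Sigma = np.array([[1.0, 1.0], [1.0, 2.0]])
    for n in (100, 10_000, 1_000_000):
        E = rng.exponential(scale=1.0, size=(n, 2))
        X = np.column_stack([E[:, 0], E[:, 0] + E[:, 1]])
        err_mean = np.abs(sample_mean(X) - mu).max()
        err_cov = np.abs(sample_cov(X) - Sigma).max()
        print(f"n={n:>9}: max|x_bar - mu| = {err_mean:.4f}, max|S - Sigma| = {err_cov:.4f}")
```

Both error columns should shrink as $n$ grows (roughly like $1/\sqrt{n}$), even though the population is far from normal; the exact printed values depend on the seed.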
Consistency means that, as $n \to \infty$,
$$\bar{\mathbf{X}} \xrightarrow{P} \boldsymbol{\mu} \qquad \text{and} \qquad \mathbf{S} \xrightarrow{P} \boldsymbol{\Sigma}$$
• If we have a random sample $\mathbf{X}_1, \mathbf{X}_2, \ldots, \mathbf{X}_n$ from a population with mean $\boldsymbol{\mu}$ and finite covariance $\boldsymbol{\Sigma}$, we can also apply the multivariate central limit theorem, which says
$$\sqrt{n}\,(\bar{\mathbf{X}} - \boldsymbol{\mu}) \xrightarrow{d} N_p(\mathbf{0}, \boldsymbol{\Sigma})$$
and $n(\bar{\mathbf{X}} - \boldsymbol{\mu})'\mathbf{S}^{-1}(\bar{\mathbf{X}} - \boldsymbol{\mu})$ is approximately $\chi^2_p$ for $n - p$ large.

Checking Normality Assumptions
• Check univariate normality for each component of $\mathbf{X}$
  – Normal probability plots (i.e., Q-Q plots)
  – Tests:
    • Shapiro-Wilk
    • Correlation
    • EDF
• Check bivariate (and higher-dimensional) normality
  – Bivariate scatter plots
  – Chi-square probability plots

Univariate Methods
• If $\mathbf{X}_1, \mathbf{X}_2, \ldots, \mathbf{X}_n$ are a random sample from a $p$-dimensional normal population, then the data for the $i$th trait are a random sample from a univariate normal distribution (Result 4.2).
• Q-Q plot:
  (1) Order the data: $x_{i(1)} \le x_{i(2)} \le \cdots \le x_{i(n)}$
  (2) Compute the standard normal quantiles $q_{(1)} \le q_{(2)} \le \cdots \le q_{(n)}$ according to
  $$\frac{j - \frac{1}{2}}{n} = \int_{-\infty}^{q_{(j)}} \frac{1}{\sqrt{2\pi}}\, e^{-z^2/2}\, dz, \qquad j = 1, 2, \ldots, n,$$
  which leads to $q_{(j)} = \Phi^{-1}\left(\frac{j - 1/2}{n}\right)$, where $\Phi$ is the standard normal CDF
  (3) Plot the pairs $(x_{i(1)}, q_{(1)}), (x_{i(2)}, q_{(2)}), \ldots, (x_{i(n)}, q_{(n)})$

Correlation Tests
• Shapiro-Wilk test
• An alternative is a modified version of the Shapiro-Wilk test that uses the correlation coefficient from the Q-Q plot:
$$r_Q = \frac{\sum_{j=1}^{n}(x_{i(j)} - \bar{x}_i)(q_{(j)} - \bar{q})}{\sqrt{\sum_{j=1}^{n}(x_{i(j)} - \bar{x}_i)^2}\,\sqrt{\sum_{j=1}^{n}(q_{(j)} - \bar{q})^2}}$$
• Reject normality if $r_Q$ is too small (critical values in Table 4.2)

Empirical Distribution Tests
• The Anderson-Darling and Kolmogorov-Smirnov statistics measure how much the empirical distribution function (EDF)
$$F_n(x_i) = \frac{\text{number of observations} \le x_i}{n}$$
differs from the hypothesized distribution $F(x; \boldsymbol{\theta})$, using $\hat{\boldsymbol{\theta}}$ to estimate $\boldsymbol{\theta}$
• For a univariate normal distribution, $\boldsymbol{\theta} = (\mu, \sigma^2)$, $\hat{\boldsymbol{\theta}} = (\bar{x}, s^2)$, and $F(x; \hat{\boldsymbol{\theta}}) = \Phi\left(\frac{x - \bar{x}}{s}\right)$
• Large values of either statistic indicate that the observed data were not sampled from the hypothesized distribution

Multivariate Methods
• You can generate bivariate scatter plots of all pairs of traits and look for unusual observations
• A chi-square plot checks for normality in $p > 2$ dimensions:
  (1) For each observation compute $d_j^2 = (\mathbf{x}_j - \bar{\mathbf{x}})'\mathbf{S}^{-1}(\mathbf{x}_j - \bar{\mathbf{x}})$, $j = 1, 2, \ldots, n$
  (2) Order these values from smallest to largest: $d^2_{(1)} \le d^2_{(2)} \le \cdots \le d^2_{(n)}$
  (3) Calculate the quantiles $q_{(1)} \le q_{(2)} \le \cdots \le q_{(n)}$ of the chi-squared distribution with $p$ d.f. from $P\left(\chi^2_p \le q_{(j)}\right) = \frac{j - 1/2}{n}$
  (4) Plot the pairs $(d^2_{(1)}, q_{(1)}), (d^2_{(2)}, q_{(2)}), \ldots, (d^2_{(n)}, q_{(n)})$, with $d^2_{(j)}$ on one axis and $q_{(j)}$ on the other
• Do the points deviate too much from a straight line?

Things to Do with Non-MVN Data
• Apply normal-based procedures anyway
  – Hope for the best…
  – Resampling procedures
• Try to identify a more appropriate multivariate distribution
• Nonparametric methods
• Transformations
• Check for outliers

Transformations
• The idea of a transformation is to re-express the data so that they look more normal
• The choice of a suitable transformation can be guided by
  – Theoretical considerations
    • Count data can often be made to look more normal by using a square root transformation
  – The data themselves
    • If the choice is not particularly clear, consider power transformations

Power Transformations
• Commonly used, but note that they are defined only for positive variables
• Defined by a parameter $\lambda$ as follows:
$$y_j = \begin{cases} x_j^{\lambda} & \text{if } \lambda \ne 0 \\ \ln x_j & \text{if } \lambda = 0 \end{cases}$$
• So what do we use?
  – Right-skewed data: consider $\lambda < 1$ (fractions, 0, negative numbers…)
  – Left-skewed data: consider $\lambda > 1$
• Box-Cox transformations are a popular modification of power transformations:
$$y_j^{(\lambda)} = \begin{cases} \dfrac{x_j^{\lambda} - 1}{\lambda} & \text{if } \lambda \ne 0 \\ \ln x_j & \text{if } \lambda = 0 \end{cases}$$
• The Box-Cox approach chooses the best $\lambda$ by maximizing
$$\ell(\lambda) = -\frac{n}{2}\ln\left[\frac{1}{n}\sum_{j=1}^{n}\left(y_j^{(\lambda)} - \overline{y^{(\lambda)}}\right)^2\right] + (\lambda - 1)\sum_{j=1}^{n}\ln x_j, \qquad \overline{y^{(\lambda)}} = \frac{1}{n}\sum_{j=1}^{n}\frac{x_j^{\lambda} - 1}{\lambda}$$

Transformations cont’d
• In the multivariate setting, a transformation would be considered for every trait
• However, normality of each individual trait does not guarantee joint normality
• We could iteratively search for the best transformations for joint and marginal normality
  – This may not improve our results substantially
  – Univariate transformations are often good enough in practice
• Be very cautious about rejecting normality

Next Time
• Examples of normality checks in SAS and R
• Begin our discussion of statistical inference for multivariate vectors
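As an informal companion to the normality checks and the Box-Cox search described in this lecture (the SAS and R examples are planned for next time), here is a Python sketch. It assumes NumPy and SciPy are available; all function names (`qq_pairs`, `r_q`, `chi_square_plot_pairs`, `box_cox`, `box_cox_loglik`, `best_lambda`) are hypothetical. It computes the Q-Q quantiles $q_{(j)} = \Phi^{-1}((j - 1/2)/n)$ and $r_Q$, the chi-square plot pairs $(d^2_{(j)}, q_{(j)})$, and the Box-Cox profile log-likelihood $\ell(\lambda)$, maximized by a simple grid search over $[-2, 2]$ (an arbitrary range chosen for illustration).

```python
import numpy as np
from scipy import stats


def qq_pairs(x):
    """Ordered data x_(j) paired with normal quantiles q_(j) = Phi^{-1}((j - 1/2) / n)."""
    x_sorted = np.sort(np.asarray(x, dtype=float))
    n = x_sorted.size
    probs = (np.arange(1, n + 1) - 0.5) / n
    return x_sorted, stats.norm.ppf(probs)


def r_q(x):
    """Q-Q plot correlation r_Q; compare it against tabulated critical values."""
    x_sorted, q = qq_pairs(x)
    return np.corrcoef(x_sorted, q)[0, 1]


def chi_square_plot_pairs(X):
    """Ordered d^2_(j) = (x_j - x_bar)' S^{-1} (x_j - x_bar) and chi-square_p quantiles q_(j)."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    centered = X - X.mean(axis=0)
    S_inv = np.linalg.inv(np.cov(X, rowvar=False))   # np.cov uses divisor n - 1
    d2 = np.einsum("ij,jk,ik->i", centered, S_inv, centered)
    probs = (np.arange(1, n + 1) - 0.5) / n
    return np.sort(d2), stats.chi2.ppf(probs, df=p)


def box_cox(x, lam):
    """Box-Cox transform (x^lam - 1) / lam, or ln x when lam == 0; requires x > 0."""
    x = np.asarray(x, dtype=float)
    if np.any(x <= 0):
        raise ValueError("Box-Cox is defined only for positive values")
    if abs(lam) < 1e-12:          # treat a numerically zero lambda as the log case
        return np.log(x)
    return (x ** lam - 1.0) / lam


def box_cox_loglik(x, lam):
    """l(lam) = -(n/2) ln[(1/n) sum (y_j - y_bar)^2] + (lam - 1) sum ln x_j."""
    x = np.asarray(x, dtype=float)
    y = box_cox(x, lam)
    n = x.size
    return -0.5 * n * np.log(np.mean((y - y.mean()) ** 2)) + (lam - 1.0) * np.log(x).sum()


def best_lambda(x, grid=None):
    """Grid search for the lambda maximizing l(lam); the default grid is an assumption."""
    if grid is None:
        grid = np.linspace(-2.0, 2.0, 401)
    values = [box_cox_loglik(x, lam) for lam in grid]
    return float(grid[int(np.argmax(values))])


if __name__ == "__main__":
    rng = np.random.default_rng(1)                 # arbitrary seed
    X = rng.normal(size=(50, 3))
    d2, q = chi_square_plot_pairs(X)               # plot q against d2 to inspect linearity
    skewed = rng.lognormal(size=100)               # right-skewed, positive sample
    print("r_Q for the log-normal sample:", r_q(skewed))
    print("Box-Cox lambda-hat:", best_lambda(skewed))
```

The grid search is used only because it is transparent; any one-dimensional optimizer could maximize $\ell(\lambda)$ instead. The computed $r_Q$ still has to be compared with the tabulated critical values (Table 4.2).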