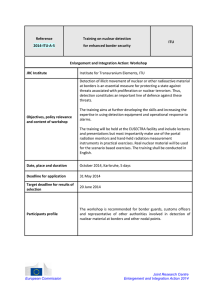

1AC---TOC
