

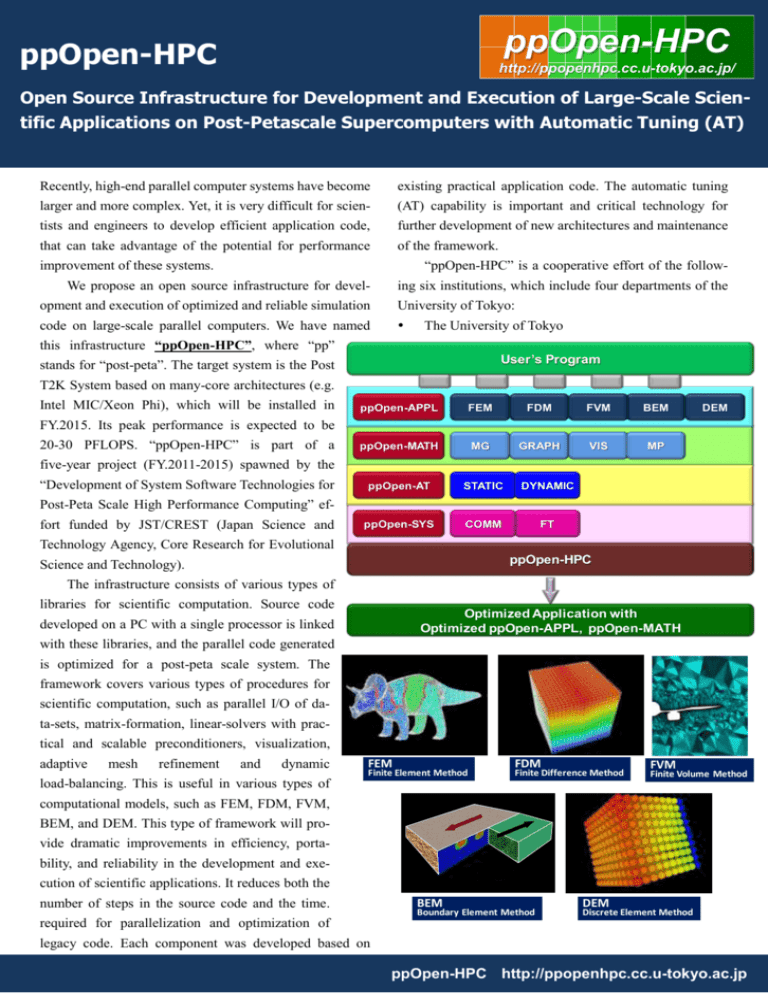

ppOpen-HPC
Open Source Infrastructure for Development and Execution of Large-Scale Scientific Applications on Post-Petascale Supercomputers with Automatic Tuning (AT)
http://ppopenhpc.cc.u-tokyo.ac.jp/
Contact: Kengo Nakajima, Supercomputing Research Division, Information Technology Center, The University of Tokyo (Email: nakajima@cc.u-tokyo.ac.jp)

High-end parallel computer systems have recently become larger and more complex, yet it is very difficult for scientists and engineers to develop efficient application code that can exploit the performance potential of these systems. We propose an open source infrastructure for the development and execution of optimized and reliable simulation code on large-scale parallel computers. We have named this infrastructure “ppOpen-HPC”, where “pp” stands for “post-peta”. The target system is the Post T2K System, based on many-core architectures (e.g., Intel MIC/Xeon Phi), which will be installed in FY.2015. Its peak performance is expected to be 20-30 PFLOPS.

“ppOpen-HPC” is part of a five-year project (FY.2011-2015) spawned by the “Development of System Software Technologies for Post-Peta Scale High Performance Computing” effort funded by JST/CREST (Japan Science and Technology Agency, Core Research for Evolutional Science and Technology).

The infrastructure consists of various types of libraries for scientific computation. Source code developed on a PC with a single processor is linked with these libraries, and the generated parallel code is optimized for a post-peta scale system.

[Figure: User's Program + ppOpen-HPC (ppOpen-APPL: FEM, FDM, FVM, BEM, DEM; ppOpen-MATH: MG, GRAPH, VIS, MP; ppOpen-AT: STATIC, DYNAMIC; ppOpen-SYS: COMM, FT) → Optimized Application with Optimized ppOpen-APPL, ppOpen-MATH]

The framework covers various types of procedures for scientific computation, such as parallel I/O of data sets, matrix formation, linear solvers with practical and scalable preconditioners, visualization, adaptive mesh refinement (AMR), and dynamic load balancing. It is useful for various types of computational models:

- FEM: Finite Element Method
- FDM: Finite Difference Method
- FVM: Finite Volume Method
- BEM: Boundary Element Method
- DEM: Discrete Element Method

This type of framework will provide dramatic improvements in efficiency, portability, and reliability in the development and execution of scientific applications. It reduces both the number of steps in the source code and the time required for parallelization and optimization of legacy code. Each component was developed based on existing practical application code. The automatic tuning (AT) capability is an important and critical technology for further development on new architectures and for maintenance of the framework.

“ppOpen-HPC” is a cooperative effort of the following six institutions, which include four departments of the University of Tokyo:

- Information Technology Center (ITC), The University of Tokyo
- Atmospheric & Ocean Research Institute (AORI), The University of Tokyo
- Center for Integrated Disease Information Research (CIDIR), The University of Tokyo
- Graduate School of Frontier Sciences (GSFS), The University of Tokyo
- Academic Center for Computing and Media Studies (ACCMS), Kyoto University
- Japan Agency for Marine-Earth Science and Technology (JAMSTEC)

The expertise provided by researchers at these institutions covers a wide range of disciplines related to scientific computing, such as system software, numerical library/algorithm research, computational mechanics, and earth sciences.

Six key issues for algorithms and applications directed towards post-peta/exa-scale computing are as follows:

- Hybrid/Heterogeneous Architectures
- Mixed Precision Computation
- Auto-Tuning/Self-Adaptation
- Fault Tolerance
- Communication/Synchronization Reducing Algorithms
- Reproducibility of Results

In ppOpen-HPC, these six issues are carefully considered. ppOpen-HPC includes the following four components:

- ppOpen-APPL: A set of libraries corresponding to each of the five methods noted above (FEM, FDM, FVM, BEM, DEM), providing I/O, optimized linear solvers, matrix assembling, AMR, etc.
- ppOpen-MATH: A set of common numerical libraries, such as multigrid solvers, parallel graph libraries, parallel visualization, and a library for coupled multi-physics simulations.
- ppOpen-AT: AT enables smooth and easy shifts to further development on new and future architectures through the use of ppOpen-AT/STATIC and ppOpen-AT/DYNAMIC. A special directive-based AT language is being developed for specific procedures in scientific computing, focused on optimum memory access.
- ppOpen-SYS: A set of libraries related to node-to-node communication and fault tolerance.

Libraries in ppOpen-APPL, ppOpen-MATH, and ppOpen-SYS are called from user programs written in Fortran and C/C++. All issues related to hybrid parallel programming are “hidden” from users. ppOpen-AT also optimizes load balancing between the host CPU and accelerators/co-processors on heterogeneous computing nodes.

The libraries developed in ppOpen-HPC will be open to the public. Permission is granted to copy, distribute, and/or modify the software and documentation of ppOpen-HPC under the terms of the MIT license.

ppOpen-HPC will be installed on various types of supercomputers and will be used for research and development on large-scale supercomputer systems. Moreover, ppOpen-HPC will be introduced into graduate and undergraduate university classes on the development of parallel simulation codes for scientific computing.

Release schedule:

- 3Q 2012 (Ver.0.1.0): ppOpen-HPC for Multicore Clusters (K computer, T2K, Cray, etc.)
- 3Q 2013 (Ver.0.2.0, current release): ppOpen-HPC for Multicore Clusters/Intel Xeon/Xeon Phi. New features:
  - Preliminary version of ppOpen-AT/STATIC
  - Both flat MPI and OpenMP/MPI hybrid parallelization are available in all components of ppOpen-APPL
  - Performance of ppOpen-MATH/VIS-FDM3D is much improved
  - ppOpen-MATH/MP-PP (a tool for generating the remapping table in ppOpen-MATH/MP) is newly developed
  - New functions (loop split, loop fusion, statement reordering) are added to ppOpen-AT
- 3Q 2014: Prototype for Post-Peta-Scale System
- 3Q 2015: Final version for Post-Peta-Scale System; further optimization on the target system
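Code transformations of the kind listed for ppOpen-AT (loop split, loop fusion, statement reordering) are commonly tuned empirically: several semantically equivalent variants are generated, each is timed on the target machine, and the fastest one is kept. The sketch below illustrates only that general idea, under stated assumptions; it does not reproduce ppOpen-AT's directive-based language or its internals. The kernel, the names `kernel_fused`, `kernel_split`, and `autotune`, and the best-of-N wall-clock timing are all hypothetical choices made for this example, and Python is used only for brevity (ppOpen-HPC libraries themselves are called from Fortran and C/C++).

```python
import time
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Hypothetical kernel used only for illustration:
#   y[i] <- a * x[i] + y[i]
#   z[i] <- y[i] * x[i]
# Both variants perform the same arithmetic per element, so their results
# are bit-for-bit identical; only the loop structure differs.
Kernel = Callable[[float, Sequence[float], List[float], List[float]], None]


def kernel_fused(a: float, x: Sequence[float], y: List[float], z: List[float]) -> None:
    """Loop fusion: a single pass over the arrays performs both updates."""
    for i in range(len(x)):
        y[i] = a * x[i] + y[i]
        z[i] = y[i] * x[i]


def kernel_split(a: float, x: Sequence[float], y: List[float], z: List[float]) -> None:
    """Loop split: two passes, each with a simpler loop body."""
    n = len(x)
    for i in range(n):
        y[i] = a * x[i] + y[i]
    for i in range(n):
        z[i] = y[i] * x[i]


def autotune(candidates: Dict[str, Kernel], n: int,
             repeats: int = 5) -> Tuple[str, Dict[str, float]]:
    """Time each candidate on identical input and return the fastest one.

    Every candidate must reproduce the first candidate's output; a variant
    that changes the result raises ValueError instead of being selected.
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    x = [float(i % 7) for i in range(n)]
    timings: Dict[str, float] = {}
    reference: Optional[List[float]] = None
    for name, kernel in candidates.items():
        best = float("inf")
        z: List[float] = []
        for _ in range(repeats):
            # Fresh arrays so every run starts from the same state.
            y = [1.0] * n
            z = [0.0] * n
            start = time.perf_counter()
            kernel(2.0, x, y, z)
            best = min(best, time.perf_counter() - start)
        if reference is None:
            reference = z
        elif z != reference:
            raise ValueError(f"variant {name!r} changed the result")
        timings[name] = best
    # Keep the variant with the smallest best-of-N time.
    winner = min(timings, key=lambda k: timings[k])
    return winner, timings


if __name__ == "__main__":
    chosen, times = autotune({"fused": kernel_fused, "split": kernel_split}, n=100_000)
    print("selected variant:", chosen)
    for variant, t in times.items():
        print(f"  {variant}: {t * 1e3:.2f} ms")
```

Which variant wins depends on the machine, the array size, and the language runtime, which is exactly why the choice is measured rather than fixed in the source. Whether such a selection is made once ahead of time or repeated during a run is a separate design choice; this sketch does not model how ppOpen-AT/STATIC and ppOpen-AT/DYNAMIC divide that work.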