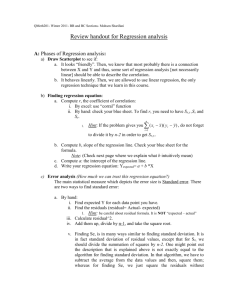

Regression Analysis


Regression Analysis: the study of relationships between variables.

A very large part of statistical analysis revolves around relationships between a response variable $Y$ and one or more predictor variables $X$. In mathematics a relationship occurs when a formula links two quantities.

Diameter and Circumference of a Circle

| Diameter | Circumference |
|---|---|
| 0.5 | 1.57079633 |
| 1 | 3.14159265 |
| 1.5 | 4.71238898 |
| 2 | 6.28318531 |
| 2.5 | 7.85398163 |
| 3 | 9.42477796 |
| 3.5 | 10.9955743 |
| 4 | 12.5663706 |
| 4.5 | 14.1371669 |
| 5 | 15.7079633 |
| 5.5 | 17.2787596 |
| 6 | 18.8495559 |
| 6.5 | 20.4203522 |
| 7 | 21.9911486 |

[Figure: Circumference against diameter]

The circumference of a circle is $c = \pi d$.

Compound Interest

Capital $C$ is invested at a constant interest rate $r$ (added annually). Say $C = 1000$, $r = 0.05$ (5%). The accumulated capital and interest after $t$ years, $C(t)$, is:

$$
\begin{aligned}
C(0) &= 1000\\
C(1) &= 1000 + 0.05 \times 1000 = 1050\\
C(2) &= 1050 + 0.05 \times 1050 = 1050 + 52.5 = 1102.5\\
&\;\;\vdots
\end{aligned}
$$

A little bit of algebra gives $C(t) = 1000(1 + r)^t$.

A formula links $C(t)$ with $t$. This is a deterministic relationship: $C(t)$ can be calculated exactly for every value of $t$ (an integer).

[Figure: Capital against years, 0 to 10]

Here we have some differences: the graph of $C$ does not start at 0. Also, although this is not clearly apparent from the graph, the relationship is a curve. If we extend the time axis to 100 years we get:

[Figure: Capital against years, 0 to 100]

A relationship of the form $y = a + bx$ is linear. Some relationships, however, are stochastic: they involve a random component.

Westwood Co

Data on Lot Size and Number of Man-Hours (Westwood Company Example)

| Production run | Lot size $X_i$ | Man-hours $Y_i$ |
|---|---|---|
| 1 | 30 | 73 |
| 2 | 20 | 50 |
| 3 | 60 | 128 |
| 4 | 80 | 170 |
| 5 | 40 | 87 |
| 6 | 50 | 108 |
| 7 | 60 | 135 |
| 8 | 30 | 69 |
| 9 | 70 | 148 |
| 10 | 60 | 132 |

[Figure: Man-hours against lot size]

In this example, although the relationship is clear from the graph, we do not have a theory that would allow us to link the number of man-hours to lot size (without some empirical observation).
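Returning to the compound-interest example, a minimal Python sketch (the function names are hypothetical) confirms that the year-by-year recursion and the closed form $C(t) = C(0)(1 + r)^t$ agree. That exact agreement is what makes the relationship deterministic, in contrast to the Westwood data.

```python
def capital_recursive(c0, r, t):
    """Add interest year by year: C(t) = C(t-1) + r * C(t-1)."""
    c = c0
    for _ in range(t):
        c = c + r * c
    return c


def capital_closed_form(c0, r, t):
    """Closed form from the algebra: C(t) = C(0) * (1 + r) ** t."""
    return c0 * (1 + r) ** t


for t in (0, 1, 2, 10, 100):
    rec = capital_recursive(1000, 0.05, t)
    closed = capital_closed_form(1000, 0.05, t)
    print(f"t={t:3d}  recursion={rec:12.2f}  closed form={closed:12.2f}")
```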
It is in fact clear that such an exact relationship cannot exist: for lot size 60 we have three observations, and they all required a different number of man-hours to process. Nevertheless it would be useful to derive some approximate formula to allow for costing and/or scheduling of jobs.

Weight of a Car and MPG (USA 1991)

We might expect heavier cars to have inferior fuel efficiency.

[Figure: MPG against weight]

We would not expect, however, that the weight of a car gives a complete description of its MPG (but it may be an important factor).

In Example 3 (Westwood Co) we can actually apply a bit of "theory" (common sense) to suggest that:

MH = job set-up time + lot size × processing time per unit + variation

where the variation is a random component due to a multitude of factors affecting an individual job. If the equation is to be useful in predicting workload, the variation must be small.

The suggested relationship is linear:

$$Y = a + bX + \text{error}$$

The statistical task is to choose $a$ and $b$ in some sensible way.

Least Squares

$$R_i = y_i - (a + b x_i)$$

The best $a$ and $b$ minimise $\sum_i R_i^2$.

Why?

- Summing over $i$ ensures that all points are involved in the choice of $a$ and $b$.
- $\sum R_i$ is not a good idea (see example): large positive and negative residuals cancel out.
- $\sum |R_i|$ is too difficult mathematically.
- $\sum R_i^2$ is the next choice. Also, as we shall see, the solution has some very nice properties.

More importantly, why is $R_i$ the vertical distance? In what follows it is assumed that there is an asymmetry between $Y$ and $X$: the $X$'s are regarded as fixed known constants, and only the $Y$'s involve a random component. Lot size is treated as if the data were collected as follows: decide on 10 lots of sizes $x_1 = 30$, $x_2 = 20$, …, then observe the number of man-hours each takes to process.

The weight of a car affects MPG, not the other way round; the weight of the car is a fixed known quantity. In many examples where regression is used the direction of the relationship is much less obvious (for example heights and weights of people, imports and GNP); it has to be assumed by the analyst.
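To see why $\sum R_i$ cannot be the criterion, here is a small sketch on the Westwood data. The three candidate lines are chosen for illustration (only $a = 10$, $b = 2$ comes from the text). Every line through the centroid $(50, 110)$ has $\sum R_i = 0$, while $\sum R_i^2$ clearly separates a good line from bad ones.

```python
# Westwood Co data (lot size x, man-hours y) from the table above.
x = [30, 20, 60, 80, 40, 50, 60, 30, 70, 60]
y = [73, 50, 128, 170, 87, 108, 135, 69, 148, 132]


def residuals(a, b):
    """Vertical departures R_i = y_i - (a + b * x_i) from the line y = a + b x."""
    return [yi - (a + b * xi) for xi, yi in zip(x, y)]


def criteria(a, b):
    """Return (sum of R_i, sum of R_i squared) for a candidate line."""
    r = residuals(a, b)
    return sum(r), sum(ri ** 2 for ri in r)


# Hypothetical candidate lines, all passing through the centroid (50, 110).
for a, b in [(110, 0), (10, 2), (210, -2)]:
    print(f"a={a:4d} b={b:3d}  sum R = {criteria(a, b)[0]}  sum R^2 = {criteria(a, b)[1]}")
```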
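In particular, in mathematics, if $y = a + bx$ then $x = c + dy$, where $d = 1/b$ and $c = -a/b$. However, in regression $y = a + bx + r$, where the $r$ are "errors" in $y$, but $x = c + dy + s$, where the $s$ are "errors" in $x$. If we are given only $a$ and $b$, then $c$ and $d$ cannot be calculated; we need to look at other aspects of the data to compute them.

Solution to Least Squares

$$\text{SSE} = \sum_i R_i^2 = \sum_i (y_i - a - b x_i)^2$$

SSE is the Sum of Squares Error (also called the residual sum of squares). Task: choose $a$ and $b$ to minimise SSE. Calculus tells us that this is achieved by solving

$$\frac{\partial\,\text{SSE}}{\partial a} = 0, \qquad \frac{\partial\,\text{SSE}}{\partial b} = 0.$$

Now

$$\frac{\partial\,\text{SSE}}{\partial a} = \sum_i \frac{\partial}{\partial a}(y_i - a - b x_i)^2 \quad \text{(derivative of a sum = sum of derivatives)}$$

$$\frac{\partial}{\partial a}(y_i - a - b x_i)^2 = 2(y_i - a - b x_i)\,\frac{\partial}{\partial a}(y_i - a - b x_i) = -2(y_i - a - b x_i) \quad \text{(chain rule, since } \tfrac{\partial}{\partial a}(y_i - a - b x_i) = -1\text{)}$$

So

$$\frac{\partial\,\text{SSE}}{\partial a} = -2\sum_i (y_i - a - b x_i) = -2\left(\sum y_i - na - b\sum x_i\right).$$

Equating this to 0 gives

$$na = \sum y_i - b\sum x_i \quad\text{or}\quad a = \bar y - b\bar x. \qquad \text{(Equation 1)}$$

Similarly

$$\frac{\partial\,\text{SSE}}{\partial b} = -2\sum_i x_i (y_i - a - b x_i),$$

and setting this to 0 gives

$$\sum x_i y_i - a\sum x_i - b\sum x_i^2 = 0. \qquad \text{(Equation 2)}$$

Equations 1 and 2 are called the normal equations; we shall use them again later.

Using Equation 1 to get rid of $a$ in Equation 2 gives

$$\sum xy - (\bar y - b\bar x)\,n\bar x - b\sum x^2 = 0 \quad\Longrightarrow\quad \sum xy - n\bar x\bar y = b\left(\sum x^2 - n\bar x^2\right).$$

Again:

$$b = \frac{\sum xy - n\bar x\bar y}{\sum x^2 - n\bar x^2}, \qquad a = \bar y - b\bar x.$$

So to compute $a$ and $b$ we need $\sum xy$, $\sum x$, $\sum y$, $\sum x^2$ and $n$.

Example, Westwood Co: $\sum xy = 61800$, $\sum x = 500$, $\sum y = 1100$, $\sum x^2 = 28400$, $n = 10$.

$$b = \frac{61800 - 10 \times 50 \times 110}{28400 - 10 \times 2500} = \frac{6800}{3400} = 2, \qquad a = 110 - 2 \times 50 = 10.$$

Man-hours = 10 + 2 × Lot size.

Interpretation: it takes 10 man-hours to set up a job and 2 man-hours to process a unit (on average). We can use the equation to predict the workload required for a job of any size:

- Lot size 35: estimate 10 + 2 × 35 = 80 man-hours.
- Lot size 60: estimate 10 + 2 × 60 = 130 man-hours. Note: we had three cases of lot size 60 in the data, 128, 135 and 132. An estimate for size 60 based on those three alone would be 395/3 = 131.67, so regression is not just interpolation.
- Lot size 100: estimate 10 + 2 × 100 = 210. This is extrapolation (beyond the observed range of $x$).
- Lot size 10000: estimate 10 + 2 × 10000 = 20010 ??? Do we believe this?!

In practice (i.e. except for exam questions) regression estimates would be calculated using a computer package.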
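The closed-form solution of the normal equations translates directly into code. This is a minimal sketch (the helper names are hypothetical): it recomputes the five summary quantities from the raw Westwood data, then $b$ and $a$, and uses the fitted line for the predictions listed above.

```python
# Westwood Co data (lot size x, man-hours y).
x = [30, 20, 60, 80, 40, 50, 60, 30, 70, 60]
y = [73, 50, 128, 170, 87, 108, 135, 69, 148, 132]
n = len(x)


def least_squares_from_sums(sum_xy, sum_x, sum_y, sum_x2, n):
    """Slope b and intercept a from the solution of the normal equations."""
    x_bar, y_bar = sum_x / n, sum_y / n
    b = (sum_xy - n * x_bar * y_bar) / (sum_x2 - n * x_bar ** 2)
    a = y_bar - b * x_bar  # Equation 1
    return a, b


# The five quantities needed: sum xy, sum x, sum y, sum x^2 and n.
sums = (sum(xi * yi for xi, yi in zip(x, y)), sum(x), sum(y), sum(xi ** 2 for xi in x), n)
a, b = least_squares_from_sums(*sums)
print(sums)  # (61800, 500, 1100, 28400, 10)
print(a, b)  # 10.0 2.0


def predict(lot_size):
    """Estimated man-hours for a lot of the given size."""
    return a + b * lot_size


for size in (35, 60, 100):
    print(size, predict(size))
```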
MPG on Weight

| Variable | Coefficient | s.e. of Coeff | t-ratio | prob |
|---|---|---|---|---|
| Constant | 48.7393 | 1.976 | 24.7 | < 0.0001 |
| Weight | -0.00821362 | 0.0006738 | -12.2 | < 0.0001 |

Computers, being computers, tend to output a lot more information and lots of spurious decimal places (most of the output was deleted here). $a = 48.74$, $b = -0.008214$.

$b$ is very small; does this mean that weight has little effect on MPG? NO! $b$ is small here because MPG is in miles per gallon (20 to 40, say) while weight is in lbs (1700 to 4200). If weight were measured in (long) tons of 2240 lb, then $b = 2240 \times (-0.008214) = -18.4$ ($a$ would stay the same); if $y$ were YPG (yards per gallon), both $b$ and $a$ would change (multiplied by 1760).

Predictions can be made as above, but note that no vehicle can ever be predicted to exceed about 48.7 MPG (the value of $a$); more seriously, a 3-ton car will have negative MPG (it can only go backwards!).

Notation

$$y_i = a + b x_i + r_i$$

- $y$: dependent variable.
- $x$: independent variable.
- $r$: residual.
- $a$: constant; the estimated value at $x = 0$.
- $b$: slope; the change in $y$ for a 1 unit change in $x$.
- $\hat y$ (y-hat): prediction, fit; the estimated value for some $x$, $\hat y_i = a + b x_i$. Hats are used to indicate an estimated value.
- $r_i = y_i - \hat y_i$: residual (at $x_i$).

Fit summaries:

- Error sum of squares: $\text{SSE} = \sum r_i^2$.
- Total sum of squares: $\text{SSTO} = \sum (y_i - \bar y)^2$ (the error sum of squares if $b = 0$).
- Regression sum of squares: $\text{SSR} = \text{SSTO} - \text{SSE}$.

SSR is interpreted as the sum of squares explained by the regression. These lead to a basic indicator of the quality of the regression, the coefficient of determination:

$$r^2 = R^2 = \frac{\text{SSR}}{\text{SSTO}} = \text{proportion of explained variation}, \qquad 0 \le r^2 \le 1.$$

For the Westwood data $r^2 = 0.996 = 99.6\%$. For the MPG data $r^2 = 75.6\%$. $r^2$ is invariant to scale changes in $y$ or $x$, and equals $\text{corr}(x, y)^2$. Although not perfect (no single summary can be), $r^2$ is a primary indicator of the usefulness of a regression.

What is a good (high) $r^2$?
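The fit summaries and the scale argument can be checked numerically. A minimal sketch on the Westwood data (the MPG data are not reproduced here): it computes SSE, SSTO, SSR and $r^2$, confirms $r^2 = \text{corr}(x, y)^2$, and then re-expresses lot size in a hypothetical unit (tens of items). The slope changes by the conversion factor, just as the MPG slope does when pounds become tons, while $a$ and $r^2$ do not.

```python
import math

# Westwood Co data: lot size (x) and man-hours (y).
x = [30, 20, 60, 80, 40, 50, 60, 30, 70, 60]
y = [73, 50, 128, 170, 87, 108, 135, 69, 148, 132]


def fit(x, y):
    """Least squares intercept a and slope b."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    ssx = sum((xi - x_bar) ** 2 for xi in x)
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / ssx
    return y_bar - b * x_bar, b


def fit_summaries(x, y):
    """Return SSE, SSTO, SSR and r^2 for the least squares line."""
    a, b = fit(x, y)
    y_bar = sum(y) / len(y)
    sse = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ssto = sum((yi - y_bar) ** 2 for yi in y)
    ssr = ssto - sse
    return sse, ssto, ssr, ssr / ssto


def corr(x, y):
    """Pearson correlation coefficient."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


sse, ssto, ssr, r2 = fit_summaries(x, y)
print(sse, ssto, ssr, round(r2, 4))  # 60.0 13660.0 13600.0 0.9956

# Hypothetical change of units: lot size counted in tens of items.
x_tens = [xi / 10 for xi in x]
print(fit(x_tens, y))  # slope 10 times larger, intercept unchanged
print(round(fit_summaries(x_tens, y)[3], 4))  # r^2 unchanged
```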
No absolute answer, although $r^2 = 0$ implies $b = 0$, i.e. no (linear) relationship. Quite small $r^2$ can be statistically significant (with enough data). $r^2$ will depend on the inherent variability in the $y$'s. In data with 'no problems':

- Social surveys, education, medical, biological: a good value of $r^2$ is around 0.4, e.g. A-level results used to predict university performance, 0.4 to 0.5.
- Bio-mechanical, industrial data: 0.6+ should be expected, e.g. $r^2$ between breaking and bending moment of planks, 0.6.
- Engineering, scientific experiments (accurate measurements and a good theoretical background for the model): 0.9+.

Care must be taken, though, as one or two points may severely affect $r^2$. It is quite easy to construct an example where the 'relationship' between $x$ and $y$ is confined to a single data point and $r^2$ is very close to 1.

Mean Squared Error

$$\text{MSE} = \frac{\text{SSE}}{n - 2} = s^2$$

This is the variance of the residuals; $n - 2$ is used so that the estimate is unbiased. $s = \sqrt{\text{MSE}}$ is the standard deviation about the line. It is the basis for computing the accuracy of estimates of the coefficients and of predictions.

Properties of the regression line

In the derivation of Equation 1 we had

$$\frac{\partial\,\text{SSE}}{\partial a} = -2\sum_i (y_i - a - b x_i) = 0 \quad\Longrightarrow\quad \sum_i (y_i - a - b x_i) = \sum_i R_i = 0.$$

The sum of the residuals (vertical departures) is 0: $a$ is chosen in such a way that the line is central in the vertical direction. Since $a = \bar y - b\bar x$, if $b = 0$ ($y$ doesn't depend on $x$) then $a$ is the average of the $y$'s. This form also means that the point $(\bar x, \bar y)$, the centroid of the data, lies on the estimated line.

The properties emerging from Equation 2 are more subtle:

$$\sum_i x_i R_i = 0, \qquad \sum_i (x_i - \bar x) R_i = 0.$$

Imagine a (weightless) stick; at lengths $x_i$ tie weights of magnitude $R_i$. The stick is balanced around $\bar x$: there is no tendency for the line to twist.
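A quick numerical check of these results on the Westwood data, as a minimal sketch: it computes the residuals, $\text{MSE} = \text{SSE}/(n-2)$ and $s$, then verifies the two properties that follow from the normal equations and that the centroid lies on the line.

```python
import math

# Westwood Co data: lot size (x) and man-hours (y).
x = [30, 20, 60, 80, 40, 50, 60, 30, 70, 60]
y = [73, 50, 128, 170, 87, 108, 135, 69, 148, 132]
n = len(x)

x_bar, y_bar = sum(x) / n, sum(y) / n
ssx = sum((xi - x_bar) ** 2 for xi in x)
b = sum((xi - x_bar) * yi for xi, yi in zip(x, y)) / ssx
a = y_bar - b * x_bar

resid = [yi - (a + b * xi) for xi, yi in zip(x, y)]
sse = sum(r ** 2 for r in resid)
mse = sse / (n - 2)   # s^2, with divisor n - 2
s = math.sqrt(mse)    # standard deviation about the line

print(resid)
print(mse, round(s, 3))  # 7.5 2.739

# Properties from the normal equations.
print(sum(resid))                                        # Equation 1: sum of R_i is 0
print(sum(xi * r for xi, r in zip(x, resid)))            # Equation 2: sum of x_i R_i is 0
print(sum((xi - x_bar) * r for xi, r in zip(x, resid)))  # the stick balances at x_bar
print(a + b * x_bar == y_bar)                            # the centroid lies on the line
```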
Rewriting the formula for $b$ is useful. Since $\sum xy - n\bar x\bar y = \sum x_i y_i - \bar x\sum y_i = \sum (x_i - \bar x) y_i$,

$$b = \frac{\sum xy - n\bar x\bar y}{\sum x^2 - n\bar x^2} = \frac{\sum (x_i - \bar x)\, y_i}{\sum x^2 - n\bar x^2} = \sum_i k_i y_i, \qquad k_i = \frac{x_i - \bar x}{\text{SSX}},$$

where $\text{SSX} = \sum x_i^2 - n\bar x^2 = \sum (x_i - \bar x)^2$.

So $b = k_1 y_1 + k_2 y_2 + \ldots$ is a linear combination of the $y$'s (the $k$'s don't depend on the $y$'s). This makes the properties of $b$ simple (for testing, confidence intervals etc.). Further, the $k$'s have some nice properties.

The sum of the $k$'s is 0:

$$\sum k_i = \frac{1}{\text{SSX}}\sum (x_i - \bar x) = 0, \quad\text{as } \sum (x_i - \bar x) = 0.$$

The sum of the squared $k$'s is $1/\text{SSX}$:

$$\sum k_i^2 = \frac{\sum (x_i - \bar x)^2}{\text{SSX}^2} = \frac{\text{SSX}}{\text{SSX}^2} = \frac{1}{\text{SSX}}.$$

The sum of $kx$ is 1 (subtracting $\bar x\sum k_i = 0$):

$$\sum k_i x_i = \sum k_i (x_i - \bar x) = \frac{\sum (x_i - \bar x)(x_i - \bar x)}{\text{SSX}} = \frac{\text{SSX}}{\text{SSX}} = 1.$$

These results make the calculation of the statistical properties of $b$ relatively simple. Finally, $a$ is also a linear combination of the $y$'s. A prediction $\hat Y = a + bx$ is also a linear combination of the $Y$'s, and so is a residual $Y_i - \hat Y_i$.

Statistical Model for Regression

To be able to conduct tests (is the slope 0? is the constant 0?), make confidence statements etc., we need a statistical framework for the regression model. The errors are independent Normal with 0 mean:

$$Y = \alpha + \beta x + e, \quad e \sim N(0, \sigma^2), \qquad\text{or equivalently}\qquad Y \sim N(\alpha + \beta x, \sigma^2).$$

The Normality assumption is not too serious: in practice the normal model is usually quite good for 'errors' in continuous variables. There are some situations, however, where the regression idea needs to be adapted (a different algorithm is needed). Most commonly these arise if $Y$ is confined to a small number of values:

- $Y$ binary: (Yes or No) = (1 or 0).
- $Y$ takes on a small number of values, e.g. (none, some, lots) = ? (0, 1, 2) ????, or goals in soccer, $y = 0, 1, 2, \ldots$ but mostly small values.

But with more than 5 realistic, ordered categories I would probably use ordinary regression.

The independence assumption is more frequently violated, and a different approach might be required. Example, a time series: $Y$ = house prices, $x$ = time; suppose the average growth is 5%.
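The weights $k_i$ can be computed explicitly. A minimal sketch on the Westwood data: it builds the $k_i$, checks the three properties derived above, and also forms the weights $c_i = 1/n - \bar x k_i$ that express $a = \bar y - b\bar x$ as a linear combination of the $y$'s (the name `c` is introduced here for illustration).

```python
# Westwood Co data: lot size (x) and man-hours (y).
x = [30, 20, 60, 80, 40, 50, 60, 30, 70, 60]
y = [73, 50, 128, 170, 87, 108, 135, 69, 148, 132]
n = len(x)

x_bar = sum(x) / n
ssx = sum((xi - x_bar) ** 2 for xi in x)

k = [(xi - x_bar) / ssx for xi in x]   # weights giving b = sum k_i y_i
c = [1 / n - x_bar * ki for ki in k]   # weights giving a = sum c_i y_i

b = sum(ki * yi for ki, yi in zip(k, y))
a = sum(ci * yi for ci, yi in zip(c, y))
print(round(a, 6), round(b, 6))  # 10.0 2.0

print(sum(k))                                 # approximately 0
print(sum(ki ** 2 for ki in k), 1 / ssx)      # equal up to rounding
print(sum(ki * xi for ki, xi in zip(k, x)))   # approximately 1
```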
If, for random (unaccounted-for) reasons, the value for $x = 2000$ is below average, it is quite likely that the growth will not fully recover during 2001; this introduces a dependence between the 'errors' (the errors could still be Normal, though). This problem will also arise if the relationship is mis-specified: if the relationship between $x$ and $y$ is a curve and a line is fitted, this will introduce sequential dependence between the residuals (see examples later).

The two cases require different approaches. In time series we should account for (in fact, take advantage of) the correlation in the modelling. In the second case we should specify the model correctly and thus get rid of the problem.

A third assumption, which is a bit hidden, is that $\sigma^2$ is constant across the range of the data: the variation around the line is the same all along it. In many situations the variance in $y$ (and hence $e$) may change with $y$. In a real 'Westwood' example, processing smaller lots will involve less variation than processing large lots, in which there is more opportunity for things to go right or wrong. We shall examine some ways of correcting such a problem. We note at this stage that we shall need some way of assessing whether our particular regression suffers from any of these departures.

Statistical properties of the estimates

Having a statistical model for the data allows us to properly assess the estimation procedure. The estimates $a$, $b$, $\hat y$ and $r$ are all linear combinations of the $y$'s. Linear combinations of Normals are Normally distributed, so all the estimates are Normally distributed; all that we have to do is compute the means and variances of these estimates and we have a complete specification of their distributions.

Reminder: if $L = k_1 y_1 + k_2 y_2 + \ldots + k_n y_n$, then

$$E(L) = \sum_i k_i E(y_i), \qquad V(L) = \sum_i k_i^2 V(y_i) \;\;\text{(if the } y\text{'s are independent)} \;=\; \sigma^2 \sum_i k_i^2 \;\;\text{(if the } y\text{'s all have variance } \sigma^2\text{)}.$$

For $b$:

$$E(b) = \sum k_i E(y_i) = \sum k_i(\alpha + \beta x_i) = \alpha\sum k_i + \beta\sum k_i x_i = \alpha \cdot 0 + \beta \cdot 1 = \beta.$$

$b$ is an unbiased estimate of $\beta$: on average the estimate equals the true value.

$$V(b) = \sum k_i^2 V(y_i) = \sigma^2 \sum k_i^2 = \frac{\sigma^2}{\text{SSX}} = \frac{\sigma^2}{\sum x_i^2 - n\bar x^2}.$$

In the estimate of $V(b)$, $\sigma^2$ is replaced by its estimate $s^2$. Observe that the greater SSX is, the higher the precision of the estimate of $b$. SSX measures the spread of the $X$'s ($\text{SSX} = (n-1)V(X)$): a wide base for $X$ provides a good estimate of the slope.

$$a = \bar y - b\bar x = \sum_i \frac{1}{n}\, y_i - \bar x\sum_i k_i y_i = \sum_i \left(\frac{1}{n} - k_i\bar x\right) y_i.$$

Note that $a$ is a prediction from the regression at $x = 0$. We will evaluate the properties of a general prediction:

$$\hat y(x_h) = a + b x_h = \bar y - b\bar x + b x_h = \bar y + b(x_h - \bar x),$$

$$E(\hat y) = E(\bar y) + (x_h - \bar x)\,E(b) = \alpha + \beta\bar x + \beta(x_h - \bar x) = \alpha + \beta x_h.$$

The prediction is unbiased.

$$V(\hat y) = V(\bar y) + (x_h - \bar x)^2 V(b) + 2(x_h - \bar x)\,\text{Cov}(\bar y, b) = \frac{\sigma^2}{n} + \frac{(x_h - \bar x)^2\sigma^2}{\text{SSX}} + 0 = \sigma^2\left(\frac{1}{n} + \frac{(x_h - \bar x)^2}{\text{SSX}}\right)$$

because $\text{Cov}(\bar y, b) = 0$:

$$\text{Cov}(\bar y, b) = \text{Cov}\left(\frac{1}{n}\sum y_i,\; \sum k_i y_i\right) = \frac{1}{n}\sum k_i V(y_i) = \frac{\sigma^2}{n}\sum k_i = 0$$

(the second equality requires the $y$'s to be independent, the third that $V(y_i) = \sigma^2$).

The variance of a prediction at $x_h$ depends on the variation in the slope and on the number of data points, but also on the distance of $x_h$ from the centre of the $x$'s.

Means and individual predictions

Scenario 1. The accountant of Westwood Co. wants to evaluate the profit/loss on jobs with a lot size of 60. Based on the data the estimate is 10 + 2 × 60 = 130. This estimate is based on 'only' 10 points, so it would be useful to know how accurate it is: $\bar x = 50$, $x_h = 60$, $\text{SSX} = 28400 - 25000 = 3400$, $n = 10$ and $\text{SSE} = 60$.

SSE is obtained by computing the $r_i$'s and summing their squares, by a messy formula, or (in practice) as output from a package. So $s^2 = 60/8 = 7.5$ and

$$V(\hat y) = 7.5\left(\frac{1}{10} + \frac{(60 - 50)^2}{3400}\right) = 0.970588, \qquad \text{SD}(\hat y) = 0.9852.$$

So the precision of the estimate is approximately ±2 ($= 2 \times 1$, which is an approximation to $t(8, 0.05) \times 0.9852$). The estimate is accurate to within about 2% with 95% confidence: pretty good for 'only' 10 points.
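The variance formulas above can be evaluated directly. A minimal sketch on the Westwood data: it estimates $V(b)$ and $V(a)$ with $s^2$ in place of $\sigma^2$, evaluates the Scenario 1 variance at $x_h = 60$, and shows the prediction variance growing as $x_h$ moves away from $\bar x$. The helper name `var_mean_prediction` is hypothetical.

```python
import math

# Westwood Co data: lot size (x) and man-hours (y).
x = [30, 20, 60, 80, 40, 50, 60, 30, 70, 60]
y = [73, 50, 128, 170, 87, 108, 135, 69, 148, 132]
n = len(x)

x_bar, y_bar = sum(x) / n, sum(y) / n
ssx = sum((xi - x_bar) ** 2 for xi in x)
b = sum((xi - x_bar) * yi for xi, yi in zip(x, y)) / ssx
a = y_bar - b * x_bar
sse = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
s2 = sse / (n - 2)  # estimate of sigma^2

var_b = s2 / ssx
var_a = s2 * (1 / n + x_bar ** 2 / ssx)  # a is the prediction at x = 0


def var_mean_prediction(xh):
    """Estimated variance of the fitted mean a + b * xh."""
    return s2 * (1 / n + (xh - x_bar) ** 2 / ssx)


print(round(math.sqrt(var_b), 5), round(math.sqrt(var_a), 4))  # 0.04697 2.5029
v60 = var_mean_prediction(60)
print(round(v60, 6), round(math.sqrt(v60), 4))  # 0.970588 0.9852
for xh in (20, 35, 50, 80):
    print(xh, round(math.sqrt(var_mean_prediction(xh)), 3))
```

The standard errors agree with the package output shown later (2.503 for the constant, 0.04697 for the slope).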
We remarked earlier that we had an alternative estimate at $x = 60$: there were three cases of lot size 60 in the data, 128, 135 and 132. An estimate for size 60 based on those three would be $395/3 = 131.67$. The standard error of this estimate (the standard deviation of the three values divided by $\sqrt 3$) is $\text{SD}(\bar u) = 2.028$, about twice that of the regression.

There is no such thing as a free lunch, definitely not in statistics, so where did the extra precision come from? There must be more information used to get $\hat y$ for it to have higher precision. The extra information comes from the other points in the data. To use that information we have assumed $y = a + bx$; this links all the points together. It will not always be the case that you observe such an improvement: this is an artificial data set for which the linear relationship is an excellent explanation.

Scenario 2. The sales manager of Westwood Co wants to quote a price (in man-hours) for a specific job with lot size 60. How does this differ from Scenario 1? The accountant wanted the average price of a job; here we are talking about a specific job. Unless the sales manager knows of some specific reason why this job may require more (or less) than the average number of man-hours, the best estimate is the average:

$$\tilde y = 10 + 2 \times 60 = 130,$$

but

$$V(\tilde y) = V(y) + V(\hat y),$$

which is estimated by

$$s^2 + s^2\left(\frac{1}{n} + \frac{(x_h - \bar x)^2}{\text{SSX}}\right) = s^2\left(1 + \frac{1}{n} + \frac{(x_h - \bar x)^2}{\text{SSX}}\right) = 7.5 + 0.97 = 8.5.$$

The model assumption was that the variation about the line is $\sigma^2$, which is estimated by $s^2$. In Scenario 1 we were after the mean of $Y$ at $x = 60$, and the errors that arise are due to errors in the estimates $a$ and $b$, as these are based on samples. In Scenario 2 we have to add to these the inherent variation in $Y$: not all jobs of size 60 will take the same time.

Testing

As always, statistical testing parallels confidence intervals; just the emphasis is the other way round. The most common form of test in regression is that the slope is zero ($\beta = 0$), i.e. is there any relationship between $x$ and $y$ at all, at all.
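Scenarios 1 and 2 differ only by the extra $s^2$ term. A minimal sketch using the summary quantities quoted in the text: it computes both variances, forms rough 95% intervals with the "$t \approx 2$" rule of thumb (an approximation to $t(8, 0.05) = 2.306$), and recomputes the standard error of the three-point estimate at lot size 60.

```python
import math
import statistics

# Westwood Co summary quantities quoted in the text.
s2, n, x_bar, ssx = 7.5, 10, 50, 3400
a, b = 10, 2
xh = 60

fit_h = a + b * xh                                 # 130
var_mean = s2 * (1 / n + (xh - x_bar) ** 2 / ssx)  # Scenario 1: mean of Y at xh
var_new = s2 + var_mean                            # Scenario 2: a single new job
print(fit_h, round(var_mean, 2), round(var_new, 2))  # 130 0.97 8.47

# Rough 95% intervals using the rule of thumb t = 2.
for label, v in (("mean", var_mean), ("single job", var_new)):
    half = 2 * math.sqrt(v)
    print(label, round(fit_h - half, 1), round(fit_h + half, 1))

# Alternative estimate using only the three jobs with lot size 60.
jobs_60 = [128, 135, 132]
u_bar = statistics.mean(jobs_60)
se_u = statistics.stdev(jobs_60) / math.sqrt(len(jobs_60))
print(round(u_bar, 2), round(se_u, 3))  # 131.67 2.028
```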
The test takes the standard form

$$t = \frac{b - b_0}{\text{sd}(b)} \quad\text{is } t\text{-distributed with df = the df associated with } s = n - 2,$$

where $b$ is the observed value and $b_0$ is the hypothesised value (often 0). Tests about $a$ and about predictions can be conducted in a similar way. An excellent rule of thumb: if $|t| < 2$, do not reject the hypothesis.

Traditionally F-tests are employed in regression, and virtually every package quotes them (even though they are not really interesting):

$$f = \frac{s_1^2/\text{df}_1}{s_2^2/\text{df}_2} \quad\text{is distributed as } F(\text{df}_1, \text{df}_2).$$

$s_1^2/\text{df}_1$ is an estimate of the variance if the hypothesis is true (something larger if it is false); $s_2^2/\text{df}_2$ is an estimate of the variance anyway (it doesn't depend on the hypothesis).

The "overall F" is a test of $\beta = 0$. The numerator is an estimate of $\sigma^2$ if $\beta = 0$ (SSR), and the denominator is MSE, which estimates $\sigma^2$ whatever $\beta$ is. This is compared to $F(1, n-2)$. Since $F(1, \text{df}) = t(\text{df})^2$, and the t-test is simpler and nearly always quoted, the F-test is redundant. F-tests are important in analysis of variance (several t-tests put together). In multiple regression, where there is more than one explanatory variable ($x$) and more than one $b$, the F-test tests all $b = 0$ simultaneously; if F is not significant, the data are only suitable for the waste bin.

Computer output

The Westwood Co example has been analysed using several different packages to illustrate the output.

Data Desk

[Figure: Data Desk regression output]

- Line 1: dependent variable.
- Line 2: Data Desk allows regression on a subset of points defined by a selector.
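The t- and F-statistics for the Westwood fit can be reproduced by hand. A minimal sketch: it computes $t$ for $a = 0$ and $b = 0$, the overall $F = \text{SSR}/\text{MSE}$, confirms $F = t^2$ for the slope, and applies the "$|t| < 2$" rule of thumb from the text (p-values are left to a package).

```python
import math

# Westwood Co data: lot size (x) and man-hours (y).
x = [30, 20, 60, 80, 40, 50, 60, 30, 70, 60]
y = [73, 50, 128, 170, 87, 108, 135, 69, 148, 132]
n = len(x)

x_bar, y_bar = sum(x) / n, sum(y) / n
ssx = sum((xi - x_bar) ** 2 for xi in x)
b = sum((xi - x_bar) * yi for xi, yi in zip(x, y)) / ssx
a = y_bar - b * x_bar
sse = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
ssto = sum((yi - y_bar) ** 2 for yi in y)
s2 = sse / (n - 2)

sd_b = math.sqrt(s2 / ssx)
sd_a = math.sqrt(s2 * (1 / n + x_bar ** 2 / ssx))
t_b = (b - 0) / sd_b   # hypothesised slope b0 = 0
t_a = (a - 0) / sd_a   # hypothesised constant 0

f_overall = ((ssto - sse) / 1) / (sse / (n - 2))  # SSR / MSE
print(round(t_a, 2), round(t_b, 2))               # 4.0 42.58
print(round(f_overall, 2), round(t_b ** 2, 2))    # 1813.33 1813.33

for name, t in (("a", t_a), ("b", t_b)):
    print(name, "reject H0" if abs(t) >= 2 else "do not reject H0")
```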
- Line 3: $r^2$ (adjusted = garbage).
- Line 4: estimate of $s$ and df.
- Line 6: SSR and the overall F-test.
- Line 7: SSE, df, MSE.
- Line 9: $a$, SD($a$), t-value for $a = 0$, significance of $a = 0$.
- Line 10: $b$, SD($b$), t-value for $b = 0$, significance of $b = 0$.

MINITAB

Regression Analysis. The regression equation is C3 = 10.0 + 2.00 C2

| Predictor | Coef | StDev | T | P |
|---|---|---|---|---|
| Constant | 10.000 | 2.503 | 4.00 | 0.004 |
| C2 | 2.00000 | 0.04697 | 42.58 | 0.000 |

S = 2.739, R-Sq = 99.6%, R-Sq(adj) = 99.5%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 13600 | 13600 | 1813.33 | 0.000 |
| Residual Error | 8 | 60 | 7 | | |
| Total | 9 | 13660 | | | |

C3 = man-hours, C2 = lot size. Exactly the same stuff, but in a different order.

Splus

\*\*\* Linear Model \*\*\*

Call: lm(formula = V3 ~ V2, data = new.data, na.action = na.omit)

Residuals:

| Min | 1Q | Median | 3Q | Max |
|---|---|---|---|---|
| -3 | -2 | -0.5 | 1.5 | 5 |

Coefficients:

| | Value | Std. Error | t value | Pr(>\|t\|) |
|---|---|---|---|---|
| (Intercept) | 10.0000 | 2.5029 | 3.9953 | 0.0040 |
| V2 | 2.0000 | 0.0470 | 42.5833 | 0.0000 |

Residual standard error: 2.739 on 8 degrees of freedom. Multiple R-Squared: 0.9956. F-statistic: 1813 on 1 and 8 degrees of freedom, the p-value is 1.02e-010.

V3 = man-hours, V2 = lot size. Lines 3 to 5 give a basic summary of the residuals, followed by the coefficient estimates (with their standard errors), $r^2$ (no adjusted $r^2$: this is a statistician's package) and the good old F-test. S-plus also outputs some graphs etc.; we discuss such things shortly.

Checking the assumptions

The procedures for validating a regression model are graphical; no tests are (usually) conducted. Graphs are used to identify:

- problems with model specification (is it linear?);
- outliers: odd observations that do not fit;
- observations that have a large impact on the estimates (influence);
- heteroscedasticity: non-constant variance, in English;
- lack of independence of the residuals;
- non-normality of the residuals.

The basic tool is the scatter plot of something against something (and possibly something else, if the package supports 3D plots, as Data Desk and S-plus do). The first plot is $Y$ against $X$, usually drawn before a regression is fitted to see if the relationship is linear.
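The S-plus summary can be reproduced without a package. A minimal sketch, assuming that Python's `statistics.quantiles(..., method="inclusive")` follows the same quartile convention as S-plus (for these residuals it reproduces the printed Min/1Q/Median/3Q/Max exactly). It also computes the residual standard error, $r^2$ and the adjusted $r^2 = 1 - \text{MSE}/(\text{SSTO}/(n-1))$ that MINITAB prints as R-Sq(adj).

```python
import math
import statistics

# Westwood Co data: lot size (x) and man-hours (y).
x = [30, 20, 60, 80, 40, 50, 60, 30, 70, 60]
y = [73, 50, 128, 170, 87, 108, 135, 69, 148, 132]
n = len(x)

x_bar, y_bar = sum(x) / n, sum(y) / n
ssx = sum((xi - x_bar) ** 2 for xi in x)
b = sum((xi - x_bar) * yi for xi, yi in zip(x, y)) / ssx
a = y_bar - b * x_bar

resid = [yi - (a + b * xi) for xi, yi in zip(x, y)]
sse = sum(r ** 2 for r in resid)
ssto = sum((yi - y_bar) ** 2 for yi in y)

# Five-number summary of the residuals (assumed to match the S-plus convention).
q1, med, q3 = statistics.quantiles(resid, n=4, method="inclusive")
print(min(resid), q1, med, q3, max(resid))  # -3.0 -2.0 -0.5 1.5 5.0

s = math.sqrt(sse / (n - 2))                      # residual standard error
r2 = 1 - sse / ssto                               # Multiple R-Squared
adj_r2 = 1 - (sse / (n - 2)) / (ssto / (n - 1))   # R-Sq(adj)
print(round(s, 3), round(r2, 4), round(adj_r2, 3))  # 2.739 0.9956 0.995
```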
We did this with Westwood and it seemed OK. Another look at the MPG plot: is it a curve, or my bad drawing?

[Figure: MPG against weight]

You wouldn't try a straight-line fit to the data below:

[Figure: data with a clearly curved relationship]

The MPG data are probably most typical of real data sets: it is not really clear whether the relationship is a line or not. If there is no strong theoretical reason for believing the relationship to be non-linear, start with a line and look to see if there are any problems. Even in Example 3, if we had only half the data (below 50 or above 50), we would probably fit lines to start with. Fitting a line to data removes the basic trend (increase or decrease), which may make it easier to see what is going on.

The residual plot

$$r = y - \hat y$$

The plot of $r$ against $x$ (in practice, against $\hat y$) is a basic tool for checking that all is OK. However, with more computing power we can get fancier: $r$ is scale dependent, so we can't say whether the residuals are big or small. To get round this, use standardised residuals $= r/s$. In theory these are approximately $N(0, 1)$, so anything above 2.5 or below -2.5 is suspicious, larger in magnitude than 3 doubtful, and larger than 4 ridiculous (the model doesn't fit for this point).

But that is not quite correct: the variances of the observed residuals are not actually equal. They get smaller further away from the centre of the data, because points with extreme $x$ pull the fitted line towards themselves. With computers being good at arithmetic, most packages offer studentised residuals; these take account of the changing variance and are more accurately $N(0, 1)$. Internally and externally studentised residuals differ in the way the variance is estimated. These are refinements that are helpful but not strictly necessary: using old-fashioned standardised residuals is adequate, but you might as well use better tools if they are available.

Raw residuals, Westwood

[Figure: raw residuals, Westwood]

No obvious problems, unless the largest residual (5) is rather big. $s = \sqrt{7.5} \approx 2.7$, so it is probably not suspicious when divided by $s$.

ESR, Westwood

[Figure: externally studentised residuals, Westwood]

We now know that it is about 2.5 in standard units: no great concern, and the refinement is practically irrelevant.
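The three kinds of scaled residual can be computed with the leverage $h_i = 1/n + (x_i - \bar x)^2/\text{SSX}$, since $V(r_i) = \sigma^2(1 - h_i)$. A minimal sketch, using the standard textbook formulas (not taken from the text) for internally studentised residuals, $r_i / (s\sqrt{1 - h_i})$, and externally studentised ones, $t_i\sqrt{(n - p - 1)/(n - p - t_i^2)}$ with $p = 2$ parameters. For the largest Westwood residual it gives about 1.83, 1.96 and 2.53, matching the "about 2.5" read from the ESR plot.

```python
import math

# Westwood Co data: lot size (x) and man-hours (y).
x = [30, 20, 60, 80, 40, 50, 60, 30, 70, 60]
y = [73, 50, 128, 170, 87, 108, 135, 69, 148, 132]
n, p = len(x), 2  # p = number of fitted parameters (a and b)

x_bar, y_bar = sum(x) / n, sum(y) / n
ssx = sum((xi - x_bar) ** 2 for xi in x)
b = sum((xi - x_bar) * yi for xi, yi in zip(x, y)) / ssx
a = y_bar - b * x_bar
resid = [yi - (a + b * xi) for xi, yi in zip(x, y)]
s = math.sqrt(sum(r ** 2 for r in resid) / (n - p))

# Leverage: grows with the distance of x_i from the centre, so 1 - h_i shrinks.
h = [1 / n + (xi - x_bar) ** 2 / ssx for xi in x]

standardised = [r / s for r in resid]
internal = [r / (s * math.sqrt(1 - hi)) for r, hi in zip(resid, h)]
external = [t * math.sqrt((n - p - 1) / (n - p - t ** 2)) for t in internal]

i = max(range(n), key=lambda j: abs(resid[j]))  # largest raw residual
print(x[i], resid[i])
print(round(standardised[i], 2), round(internal[i], 2), round(external[i], 2))  # 1.83 1.96 2.53
```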
MPG data

[Figure: residual plot, MPG data]

The curvature hypothesis is not really borne out by this plot. There is some tendency for positive residuals at the edges and negative ones in the centre (which might indicate a convex, U-shaped curve), but not really enough to worry about. Also, there is a car with a lousy MPG for its weight (large negative residual), the Ford Mustang (sporty: light with a big engine), but the residual is not really big enough to be worrying.

Before going further we need to look at some residual plots with obvious problems, so that we can see what to look for. Real data sets often have lots of problems, or subtle problems, and it is difficult to decide unambiguously what is going on. The simplest way to illustrate this is on artificial data sets that each have one specific problem. In the examples below, modified Westwood Co data are used.

Outlier

Suppose there was a typo in the recording of the data (a 40 became a 90), or, due to poor workmanship, the lot had to be processed again (and the total workload was recorded). Either way this observation is unlike the others.

[Figure: scatter plot and residual plot, outlier data]

Dependent variable is: outlier. No Selector. R squared = 85.8%, s = 15.56 with 10 - 2 = 8 degrees of freedom.

| Variable | Coefficient |
|---|---|
| Constant | 22.3529 |
| Size | 1.85294 |

$r^2$ is lower but still OK. The line is affected: $b$ decreases and $a$ increases. Remedy: this observation is NOT part of this model. Remove it from the data set, and seek an explanation of why it is different.

Curvature

$y_{\text{new}} = 30 + \sqrt{y - 30}$

Economies of scale: with larger lot sizes it takes less time to process a unit.

[Figure: scatter plot and residual plot, curvature data]

The scatter plot gives hardly any indication of problems. In the residual plot, the residuals tend to have the same sign at the edges of the data (here negative: a concave relationship); the residual signs here are - (- +) + + + (+ + -) -. $r^2 = 98.2\%$ gives no indication of problems. The coefficients are not comparable with the original fit. Remedy: get the form of the relationship right. Transform the $X$'s, the $Y$'s or both (see later).

[Figure: USA population]

Note: non-independence of residuals will produce a similar plot.
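The outlier example can be reproduced, but the text does not say exactly which value was altered. As an assumption, adding 50 man-hours to the lot of size 40 (87 recorded as 137) reproduces the quoted Data Desk output (constant 22.3529, slope 1.85294, $R^2 = 85.8\%$, $s = 15.56$), so this hypothetical reconstruction is used. The sketch refits the line and shows that the altered point has the largest standardised residual, above the 2.5 "suspicious" threshold.

```python
import math

# Westwood Co data: lot size (x) and man-hours (y).
x = [30, 20, 60, 80, 40, 50, 60, 30, 70, 60]
y = [73, 50, 128, 170, 87, 108, 135, 69, 148, 132]

# Hypothetical reconstruction of the outlier: 87 man-hours recorded as 137.
y_out = list(y)
y_out[4] = 137


def fit_stats(x, y):
    """Return a, b, r^2, s and the residuals of the least squares line."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    ssx = sum((xi - x_bar) ** 2 for xi in x)
    b = sum((xi - x_bar) * yi for xi, yi in zip(x, y)) / ssx
    a = y_bar - b * x_bar
    resid = [yi - (a + b * xi) for xi, yi in zip(x, y)]
    sse = sum(r ** 2 for r in resid)
    ssto = sum((yi - y_bar) ** 2 for yi in y)
    return a, b, 1 - sse / ssto, math.sqrt(sse / (n - 2)), resid


a, b, r2, s, resid = fit_stats(x, y_out)
print(round(a, 4), round(b, 5), round(r2, 3), round(s, 2))  # 22.3529 1.85294 0.858 15.56

std = [r / s for r in resid]
worst = max(range(len(x)), key=lambda j: abs(std[j]))
print(x[worst], y_out[worst], std[worst])
```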
A curved relationship will induce non-independence.

Heteroscedasticity: changes in variance

$Y_{\text{new}} = Y + \text{random} \times Y$, so the variance of $Y$ is proportional to $Y^2$.

[Figure: scatter plot and residual plot, heteroscedastic data]

Diagnostic: a triangular shape to the residual plot. Effects: a sub-optimal line. There is no bias, but since (here) the small $(X, Y)$ values are more accurate, they should be given more say in determining the line. Tests and the expressions for variances are invalid. Remedy: correct the model, using weighted regression. Transformations may help; a curved model is often more appropriate.

These are the most common features of problematic residual plots. Residual analysis is often more informative than the regression itself: it identifies the odd cases (the Mustang in the MPG data).

The rule is: no patterns in residual plots?

- Unevenly spaced integer $x$'s: NO problems.
- Rounded $Y$: regression almost perfect (we don't have exact normality).

Some case studies.
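Weighted regression, the remedy named above, minimises $\sum w_i (y_i - a - b x_i)^2$, giving more say to the more accurate points. A minimal sketch (the helper name is hypothetical): with equal weights it reproduces ordinary least squares. The weights $1/\hat y_i^2$ from a first ordinary fit are an assumed variance model matching "variance of $Y$ proportional to $Y^2$"; they are not a procedure given in the text.

```python
def weighted_fit(x, y, w):
    """Weighted least squares: minimise sum w_i * (y_i - a - b * x_i) ** 2."""
    sw = sum(w)
    x_w = sum(wi * xi for wi, xi in zip(w, x)) / sw  # weighted mean of x
    y_w = sum(wi * yi for wi, yi in zip(w, y)) / sw  # weighted mean of y
    sxy = sum(wi * (xi - x_w) * (yi - y_w) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - x_w) ** 2 for wi, xi in zip(w, x))
    b = sxy / sxx
    return y_w - b * x_w, b


# Westwood Co data: lot size (x) and man-hours (y).
x = [30, 20, 60, 80, 40, 50, 60, 30, 70, 60]
y = [73, 50, 128, 170, 87, 108, 135, 69, 148, 132]

# Equal weights give ordinary least squares.
a_ols, b_ols = weighted_fit(x, y, [1] * len(x))
print(a_ols, b_ols)  # 10.0 2.0

# Assumed variance model: SD of Y proportional to its mean, so weight
# each point by 1 / (fitted value) ** 2 from the ordinary fit.
w = [1 / (a_ols + b_ols * xi) ** 2 for xi in x]
a_w, b_w = weighted_fit(x, y, w)
print(round(a_w, 3), round(b_w, 3))
```

For the Westwood data, whose variance really is nearly constant, the weighted line stays close to the ordinary one; the weights matter when the residual plot shows the triangular pattern.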