Improved TF-IDF Ranker


CSC 7481 class project

Submitted By

Muralidhar Chouhan

Professor

Dr. Yejun Wu


1. Introduction

1.1 Problem Statement

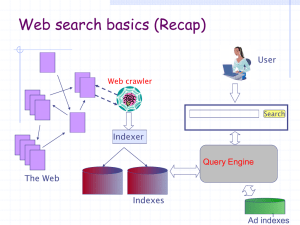

TF-IDF (term frequency-inverse document frequency) ranking is a popular mechanism for calculating the relevance of documents in a very large document set. Given a query, a traditional TF-IDF ranker calculates the weight of each document with respect to the words in the query. This technique works well when the user's query shares words with the relevant documents. However, because natural language is rich, different users can express the same query in different ways. A mechanism is therefore needed that retrieves the documents whose concepts are most similar to the user's query.

1.2 Solution

This project compares the traditional TF-IDF ranker with an improved version. The improved ranker applies TF-IDF at two levels to retrieve the relevant documents. The first level fetches the documents that are weighted most heavily with respect to the words in the query. The second level finds the keywords (terms that define the document) in each document obtained from the first level.

Finally, the project uses a semantic similarity implementation, taken from my earlier Master's project, to calculate the semantic similarity between a document's keywords and the query. This final step retrieves the documents that are semantically similar to the input query.

This project is validated using the TREC dataset (confusion track). An experiment was conducted on the first 10 queries from the benchmark dataset. Compared with the traditional TF-IDF ranker, the improved ranker showed higher precision and recall values for queries CF1, CF4, CF8 and CF9.

1.3 Previous Work

This project builds on one of my previous works, my MS project titled 'Text retrieval using semantic similarity and TF-IDF Ranker'. In that work I implemented a semantic similarity method to calculate the semantic relatedness between sentences. It used a TF-IDF ranker as a first cut to retrieve documents based on the words in the input query. A second mechanism then extracted the sentences that share words with the query. Finally, the semantic similarity between the extracted sentences and the input query was calculated. That project showed very good accuracy on the TREC confusion track dataset. Its major limitation was that calculating semantic similarity between sentences is a very expensive operation: each document might need 10-15 semantic similarity computations, which degrades the overall system performance.


1.4 New Work (Course project)

My current work, 'Improved TF IDF Ranker', is an enhancement of my earlier work. It introduces a second-level TF-IDF ranker (TF IDF Ranker II) to extract the keywords (words that define the document) from each document. The semantic similarity method is then applied between the keywords and the query to retrieve the semantically relevant documents. In addition, a module was developed that generates a file containing the distinct words from the whole document set with their respective inverse document frequency values. This module is run ahead of time to generate the file, which TF IDF Ranker II then uses to find the keywords in the documents. This work improves performance by limiting the cost to one semantic similarity operation per document.

Here is a summary of the new work done for the course project:

- TF IDF Ranker II, which identifies the keywords of each document and is used to build an improved document retrieval system.

- A document retrieval system based on the traditional TF-IDF ranker, implemented so that it can be compared with the improved system.

- A module that generates a corpus containing the distinct words from the document set with their respective inverse document frequency values. The generated corpus contains more than 30k words.

- A user interface showing text retrieval with both methods (Traditional TF IDF vs. Improved TF IDF).

- An experiment with the TREC dataset, with results and precision-recall bar graphs for both methods.
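The word-IDF corpus module mentioned in the list above could look roughly like the sketch below. It is only an illustration under assumptions: the function names `build_idf_table` and `save_idf_table`, the regular-expression tokenizer, the natural logarithm, and the tab-separated file layout are all hypothetical choices, not details taken from the project.

```python
import math
import re
from collections import Counter


def build_idf_table(documents):
    """Map each distinct word to log(N / DF) over a list of document texts.

    Assumption: words are lowercase alphabetic runs; the project's real
    tokenizer is not described in this report.
    """
    n_docs = len(documents)
    df = Counter()
    for text in documents:
        # Count each word once per document (document frequency).
        df.update(set(re.findall(r"[a-z]+", text.lower())))
    return {word: math.log(n_docs / count) for word, count in df.items()}


def save_idf_table(idf, path):
    """Write one 'word<TAB>idf' pair per line (hypothetical file layout)."""
    with open(path, "w", encoding="utf-8") as fh:
        for word in sorted(idf):
            fh.write(f"{word}\t{idf[word]:.6f}\n")


docs = ["the cat sat", "the dog ran", "the cat ran"]
idf = build_idf_table(docs)
```

Because the table depends only on the document set, it can be computed once and reused for every query, which matches the precompiled role described above.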


2. Background

2.1 TF-IDF Ranker

TF-IDF is often used as a weighting factor in information retrieval and text mining. Terms that appear often in a document should get high weights: the more often a document contains a term, the more likely it is that the document is about that term. This is captured by the term frequency (TF).

Terms that appear in many documents should get low weights. This is captured by the inverse document frequency (IDF).

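The weight of a term in a document is calculated using the formula below [5]:

$$W_{i,j} = TF_{i,j} \times \log\left(\frac{N}{DF_i}\right)$$

where

$W_{i,j}$ is the weight of the i-th word in the j-th document,

$TF_{i,j}$ is the number of times the i-th term appears in the j-th document,

$DF_i$ is the number of documents that contain the i-th term,

$N$ is the total number of documents.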
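As a small worked illustration of the weight formula, the sketch below computes the weight for one rare and one common term. The function name `tfidf_weight` is hypothetical, and the natural logarithm is an assumption, since the report does not state the base of the logarithm.

```python
import math


def tfidf_weight(tf, df, n_docs):
    """Weight of a term in a document: TF * log(N / DF).

    Assumption: natural logarithm; a term found in no document gets 0.
    """
    if df == 0:
        return 0.0
    return tf * math.log(n_docs / df)


# Same term frequency, different document frequencies.
w_rare = tfidf_weight(tf=3, df=10, n_docs=1000)     # term in 10 of 1000 docs
w_common = tfidf_weight(tf=3, df=900, n_docs=1000)  # term in 900 of 1000 docs
```

With equal term frequency, the rare term receives the larger weight, and a term that occurs in every document receives a weight of zero.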

2.2 Semantic similarity calculation

Semantic similarity between sentences is calculated using semantic information and word order information. This project uses an implementation from my previous work that calculates the semantic relatedness between two sets of strings using the WordNet lexical database. The score lies between 0 and 1, where 0 represents the lowest similarity and 1 the highest.


3. Design

3.1 Traditional TF-IDF Ranker:

The traditional TF-IDF ranker is an implementation of the popular information retrieval technique, TF-IDF ranking [3].

The input query is first preprocessed: a preprocessor tokenizes the input text and removes the stop words. The document set is then passed to a primary filter, which keeps only the documents that share words with the query. The TF-IDF ranker is then applied to the documents that pass this filter.
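The preprocessing and primary filtering steps can be sketched as follows. This is a minimal illustration, not the project's code: the stop-word list, the tokenizer pattern, and the names `preprocess` and `primary_filter` are assumptions, and the filter here keeps a document if it shares at least one word with the query.

```python
import re

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOP_WORDS = {"a", "an", "and", "are", "for", "in", "is", "of", "on", "the", "to", "with"}


def preprocess(text):
    """Lowercase the text, split it into tokens, and drop stop words."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def primary_filter(query_tokens, documents):
    """Keep only documents that share at least one token with the query.

    `documents` maps a document ID to its raw text.
    """
    wanted = set(query_tokens)
    return {
        doc_id: text
        for doc_id, text in documents.items()
        if wanted & set(preprocess(text))
    }


query = preprocess("The rules for the import of fruit")
docs = {"D1": "Fruit import rules", "D2": "Highway safety notice"}
candidates = primary_filter(query, docs)
```

Filtering before ranking keeps the later TF-IDF computation limited to documents that can receive a non-zero weight.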

At this point the input query has been parsed into a list of tokens. Suppose the input query has n words, w1, w2, ..., wn, and the document set is D1, D2, ..., DN, where N is the total number of documents to be searched for the input query.


The TF-IDF ranker counts the number of times each query word appears in each of the documents, as shown below.

| Word | D1 | D2 | ... | DN | DF |
|------|------|------|-----|------|-----|
| w1 | TF11 | TF12 | ... | TF1N | DF1 |
| w2 | TF21 | TF22 | ... | TF2N | DF2 |
| w3 | TF31 | TF32 | ... | TF3N | DF3 |
| : | : | : | | : | : |
| wn | TFn1 | TFn2 | ... | TFnN | DFn |

Here TFij is the term frequency of word wi in document Dj.

DF in the table above is the document frequency, that is, the number of documents in which a word appears. DFi is the document frequency of word wi in the document collection (i.e. D1, D2, ..., DN).
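The term frequency and document frequency table can be built with a few lines of code. The sketch below is illustrative only; the function name `term_document_frequencies` and the dictionary layout are assumptions, and the documents are taken to be already tokenized.

```python
from collections import Counter


def term_document_frequencies(query_tokens, docs_tokens):
    """Return (tf, df) for the query words over a tokenized document set.

    tf[word][doc_id] is the number of times `word` occurs in the document.
    df[word] is the number of documents that contain `word`.
    """
    counts = {doc_id: Counter(tokens) for doc_id, tokens in docs_tokens.items()}
    tf = {
        word: {doc_id: counts[doc_id][word] for doc_id in docs_tokens}
        for word in query_tokens
    }
    df = {word: sum(1 for c in tf[word].values() if c > 0) for word in query_tokens}
    return tf, df


docs = {
    "D1": ["fruit", "import", "fruit"],
    "D2": ["highway", "safety"],
    "D3": ["fruit", "rules"],
}
tf, df = term_document_frequencies(["fruit", "import"], docs)
```

Each row of `tf` corresponds to one row of the table, and `df` corresponds to its last column.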

Calculate the weight

Now we have the term frequency vs. document frequency matrix for the input query and document set.

The weight of each word is calculated using the formula below.

$$W_{i,j} = TF_{i,j} \times \log\left(\frac{N}{DF_i}\right)$$

| Word | D1 | D2 | ... | DN | DF |
|------------|-----|-----|-----|-----|-----|
| w1 | W11 | W12 | ... | W1N | DF1 |
| w2 | W21 | W22 | ... | W2N | DF2 |
| w3 | W31 | W32 | ... | W3N | DF3 |
| : | : | : | | : | : |
| wn | Wn1 | Wn2 | ... | WnN | DFn |
| Weight sum | S1 | S2 | ... | SN | |

where

$W_{i,j}$ is the weight of word wi in document Dj,

$TF_{i,j}$ is the term frequency of word wi in document Dj,

$DF_i$ is the document frequency of the word wi,

$N$ is the total number of documents.

Now the weight of each document is calculated by summing the weights of all the query words (w1, w2, ..., wn). S1 in the table above is the sum of the weights (W11, W21, ..., Wn1).

Sort all the documents by weight and pick the top Q documents for further processing. Q is chosen such that the weight of each selected document exceeds a particular threshold (threshold 1).
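The weighting, summing, sorting and thresholding steps above can be sketched as a single function. This is a hedged illustration: the name `rank_documents`, the input layout, the natural logarithm, and the threshold values in the example are assumptions, not values from the project.

```python
import math


def rank_documents(tf, df, n_docs, threshold):
    """Sum TF * log(N / DF) over the query words for each document.

    tf[word][doc_id] is a term frequency and df[word] a document frequency.
    Returns (doc_id, weight) pairs sorted by weight, keeping only documents
    whose weight exceeds `threshold`.
    """
    scores = {}
    for word, per_doc in tf.items():
        if df.get(word, 0) == 0:
            continue  # a word found in no document contributes nothing
        idf = math.log(n_docs / df[word])
        for doc_id, count in per_doc.items():
            scores[doc_id] = scores.get(doc_id, 0.0) + count * idf
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [(doc_id, weight) for doc_id, weight in ranked if weight > threshold]


tf = {
    "fruit": {"D1": 2, "D2": 0, "D3": 1},
    "import": {"D1": 1, "D2": 0, "D3": 0},
}
df = {"fruit": 2, "import": 1}
top = rank_documents(tf, df, n_docs=3, threshold=0.1)
```

In this toy example D1 ranks above D3, and D2 is dropped because none of the query words occur in it.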


3.2 Improved TF IDF Ranker:


Fig. Implementation using TF IDF Ranker II

The figure above shows the document retrieval approach using TF IDF Ranker II, which extracts the keywords from the documents.

We choose the top S documents obtained from the previous method. Here we use a second threshold, threshold 2 (threshold 2 < threshold 1), to get the set of documents for further processing. We then extract the keywords (words that have high TF and low DF) from each document.

For each document, calculate the semantic similarity between its keyword set and the query using the WordNet semantic analyzer. Sort the documents by score, eliminate the documents with a score below 0.5, and display the remaining documents.
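The scoring and filtering stage can be sketched with a pluggable similarity function. Note the substitution: the project uses a WordNet-based semantic analyzer, which is not reproduced here, so the sketch plugs in a simple Jaccard word overlap as a stand-in. The names `jaccard_similarity` and `semantic_rerank` are hypothetical; only the 0.5 cutoff comes from the text above.

```python
def jaccard_similarity(keywords, query_tokens):
    """Stand-in similarity in [0, 1]: shared words / all words.

    This is NOT the WordNet-based measure used in the project; it only
    fills the same slot so that the pipeline can be demonstrated.
    """
    a, b = set(keywords), set(query_tokens)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def semantic_rerank(query_tokens, doc_keywords, similarity=jaccard_similarity, min_score=0.5):
    """Score each document once, drop scores below min_score, sort descending."""
    scored = [
        (doc_id, similarity(keywords, query_tokens))
        for doc_id, keywords in doc_keywords.items()
    ]
    kept = [(doc_id, score) for doc_id, score in scored if score >= min_score]
    return sorted(kept, key=lambda item: item[1], reverse=True)


doc_keywords = {
    "D1": ["fruit", "import", "rules"],
    "D2": ["highway", "safety"],
    "D3": ["fruit", "import"],
}
results = semantic_rerank(["fruit", "import"], doc_keywords)
```

Passing the similarity function as a parameter keeps the one-comparison-per-document structure visible while leaving the actual semantic measure open.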


The matrix below is formed for each document. TF is calculated for all the distinct words in the document, and IDF is obtained from a precompiled corpus of word-IDF pairs (shown in Fig. Word-IDF pairs). From these, the weight of each word in the document is calculated. The 10 words with the highest weights are chosen as the keywords for the document.

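| Word | Doc (TF) | IDF | Weight |
|------|----------|------|--------|
| w1 | TF1 | IDF1 | We1 |
| w2 | TF2 | IDF2 | We2 |
| w3 | TF3 | IDF3 | We3 |
| : | : | : | : |
| wn | TFn | IDFn | Wen |

TFi is the term frequency of the word wi inside a document. IDFi is the inverse document frequency of the word wi.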

Word-IDF pairs
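Keyword extraction with a precompiled word-IDF table can be sketched as below. The function name `extract_keywords`, the treatment of words missing from the table (weight 0), and the alphabetical tie-break are assumptions made for the illustration; the top-10 cutoff is the one described above.

```python
from collections import Counter


def extract_keywords(doc_tokens, idf, top_k=10):
    """Return the top_k words of a document ranked by TF * IDF.

    `idf` maps a word to its precompiled IDF value. Words absent from the
    table get weight 0 (an assumption). Ties are broken alphabetically.
    """
    tf = Counter(doc_tokens)
    weights = {word: count * idf.get(word, 0.0) for word, count in tf.items()}
    ranked = sorted(weights, key=lambda word: (-weights[word], word))
    return ranked[:top_k]


# Toy IDF values, invented for the example.
idf = {"pesticide": 4.0, "notice": 0.1, "residue": 3.0}
tokens = ["pesticide"] * 3 + ["notice"] * 5 + ["residue"] * 2
keywords = extract_keywords(tokens, idf, top_k=2)
```

The word "notice" is the most frequent in the toy document but has a low IDF, so it is not selected as a keyword.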


4. Results

This project used the TREC dataset (confusion track) for validation. The table below shows the first 10 queries with their known results, as given in the benchmark dataset.

| Query | Document |
|-------|------------------|
| CF1 | FR940405-2-00069 |
| CF2 | FR940907-2-00134 |
| CF3 | FR940506-1-00017 |
| CF4 | FR941212-1-00049 |
| CF5 | FR940222-2-00066 |
| CF6 | FR940912-2-00020 |
| CF7 | FR940317-1-00199 |
| CF8 | FR940920-1-00021 |
| CF9 | FR940812-2-00240 |
| CF10 | FR940419-2-00019 |

The following results were obtained for the traditional TF-IDF ranker and the improved TF-IDF ranker.

| Query | TF-IDF: Doc ID | TF-IDF + Semantic: Weight | TF-IDF + Semantic: Doc ID | TF-IDF + Semantic: Score |
|-------|------------------|---------|------------------|-------|
| CF1 | FR940413-2-00130 | 28.18 | FR940405-2-00069 | 0.991 |
| CF1 | FR940405-2-00069 | 14.844 | FR940922-2-00038 | 0.892 |
| CF2 | FR941116-2-00045 | 85.011 | FR940721-2-00016 | 0.859 |
| CF2 | FR940907-2-00134 | 27.139 | FR940907-2-00134 | 0.846 |
| CF3 | FR940506-1-00017 | 30.036 | FR940506-1-00017 | 0.875 |
| CF3 | FR940506-1-00020 | 26.034 | FR940506-1-00020 | 0.847 |
| CF4 | FR940506-1-00017 | 21.171 | FR941212-1-00049 | 0.974 |
| CF4 | FR941212-1-00049 | 15.723 | FR940506-1-00024 | 0.875 |
| CF5 | FR940222-2-00066 | 29.813 | FR940222-2-00066 | 0.786 |
| CF5 | FR940317-1-00036 | 14.701 | FR940812-2-00253 | 0.773 |
| CF6 | FR940413-2-00130 | 133.51 | FR940419-2-00085 | 0.976 |
| CF6 | FR940912-2-00020 | 32.121 | FR940912-2-00020 | 0.877 |
| CF7 | FR940317-1-00199 | 56.191 | FR940317-1-00199 | 0.961 |
| CF7 | FR940317-1-00197 | 17.632 | FR940516-2-00112 | 0.862 |
| CF8 | FR940413-2-00130 | 51.369 | FR940920-1-00021 | 0.909 |
| CF8 | FR940920-1-00021 | 47.864 | FR940317-1-00064 | 0.81 |
| CF9 | FR940812-2-00238 | 23.905 | FR940812-2-00240 | 0.894 |
| CF9 | FR940812-2-00240 | 23.71 | FR940812-2-00230 | 0.881 |
| CF10 | FR940419-2-00019 | 35.334 | FR940419-2-00016 | 0.907 |
| CF10 | FR940419-2-00015 | 33.414 | FR940419-2-00019 | 0.808 |


As seen in the table above, queries CF1, CF4, CF8 and CF9 show better results with the improved TF-IDF ranker than with the traditional system.

4.1 Precision and Recall

The table below shows the precision and recall values calculated for queries CF1-CF10 in both systems.

As seen in the table, precision and recall values increased for queries CF1, CF4, CF8 and CF9.

Fig. Precision Recall for queries CF1 – CF10
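For reference, precision and recall for a single query can be computed as in the sketch below. It uses the standard set-based definitions; the report does not state the exact cutoff or procedure behind its own figures, so this is an assumption, and the document IDs in the example are placeholders rather than benchmark data.

```python
def precision_recall(retrieved, relevant):
    """Set-based precision and recall for one query.

    precision = relevant retrieved / total retrieved
    recall    = relevant retrieved / total relevant
    """
    retrieved_set, relevant_set = set(retrieved), set(relevant)
    hits = len(retrieved_set & relevant_set)
    precision = hits / len(retrieved_set) if retrieved_set else 0.0
    recall = hits / len(relevant_set) if relevant_set else 0.0
    return precision, recall


# Placeholder IDs: two documents returned, one of them relevant.
p, r = precision_recall(retrieved=["D1", "D2"], relevant=["D2"])
```

With one relevant document per query, recall is either 0 or 1, while precision depends on how many documents the system returns.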


4.2 Bar chart

[Figure: bar chart with four series, TF IDF (P), TF IDF (R), Semantic (P) and Semantic (R); x axis: queries 1 to 10; y axis: 0 to 1.2]

Precision and recall bar chart: old system vs. new system

The bar chart above shows the precision and recall values for queries CF1-CF10 for both the traditional and the improved TF-IDF rankers.

As seen in the bar chart, precision and recall values are either the same or improved for the new system.


5. Screenshots

Query search using method 1 (Traditional TF IDF)

Query search using method 2 (Improved TF IDF)


6. Conclusion

This project improved the traditional TF-IDF ranker by introducing TF IDF Ranker II, which finds the keywords of each document so that the semantic similarity between those keywords and the input query can be calculated. It showed that using the semantic analyzer improves precision and recall values over the traditional system for several of the test queries. It also addressed the performance issue in my earlier work (MS project), which was based on extracting sentences from the documents and comparing them with the input query. The project was validated using a dataset from the Text REtrieval Conference (TREC).

One limitation of the TF-IDF ranker is that query terms that cannot be found in a document receive a score of zero for that document. Hence, some relevant documents may be lost in the first cut.


References

[1] Li, Yuhua, et al., "Sentence Similarity Based on Semantic Nets and Corpus Statistics," IEEE Transactions on Knowledge and Data Engineering, vol. 18, no. 8, 2006.

[2] Dao, Thanh, and Troy Simpson, "Measuring similarity between the sentences." Web.

[3] TfIdf Ranker, 'http://vetsky.narod2.ru/catalog/tfidf_ranker/'. Web.

[4] Confusion track, TREC dataset, 'http://trec.nist.gov/data/t5_confusion.html'. Web.

[5] Chouhan, Muralidhar, "Text retrieval using semantic similarity and TF IDF Ranker."
