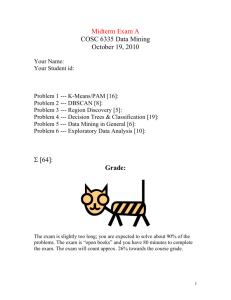

Assignment #4 - Data Mining Lab


Data Mining and Knowledge Discovery (KSE525)

Assignment #4 (May 21, 2015, due: June 4)

1. [8 points] The effectiveness of the SVM depends on the selection of kernels.

(a) What is the kernel trick?

(b) Consider the quadratic kernel K(u, v) = (u • v + 1)^2. Show that this is a kernel, i.e., K(u, v) = Φ(u) • Φ(v) for some Φ.

[Hint: I did the proof for K(u, v) = (u • v)^2 in class.]
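As a reminder of the shape of the argument in the hint, the expansion for K(u, v) = (u • v)^2 can be written out as below. This is only a sketch that assumes two-dimensional inputs u = (u1, u2) and v = (v1, v2); the particular feature map shown is one valid choice, not necessarily the one used in class.

```latex
\begin{align*}
K(u, v) = (u \cdot v)^2
  &= (u_1 v_1 + u_2 v_2)^2 \\
  &= u_1^2 v_1^2 + 2\,u_1 u_2\, v_1 v_2 + u_2^2 v_2^2 \\
  &= \Phi(u) \cdot \Phi(v),
  \qquad \Phi(x) = \bigl(x_1^2,\; \sqrt{2}\,x_1 x_2,\; x_2^2\bigr).
\end{align*}
```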

2. [10 points] Discuss the advantages and disadvantages of the four clustering methods: k-means, EM, BIRCH, and DBSCAN. You may find it helpful to fill out the table below.

| Method | Advantages | Disadvantages |
|---|---|---|
| k-means | | |
| EM | | |
| BIRCH | | |
| DBSCAN | | |

3. [6 points] Suppose that the data mining task is to cluster points (with (x, y) representing a location) into three clusters, where the points are A1(2,10), A2(2,5), A3(8,4), B1(5,8), B2(7,5), B3(6,4), C1(1,2), C2(4,9). The distance function is the Euclidean distance. Suppose initially we assign A1, B1, and C1 as the center of each cluster, respectively. Use the k-means algorithm.

(a) Show the three cluster centers after the first round of execution.

(b) Show the final three clusters.
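Hand computations for this problem can be checked with a small script. The following is a minimal base-R sketch of the assign-then-update round described above, not a reference solution; the function name `kmeans_round` and the variable names are hypothetical, and the sketch assumes no cluster ever becomes empty.

```r
# Points from the problem statement, one row per point.
pts <- rbind(A1 = c(2, 10), A2 = c(2, 5), A3 = c(8, 4),
             B1 = c(5, 8),  B2 = c(7, 5), B3 = c(6, 4),
             C1 = c(1, 2),  C2 = c(4, 9))

# One k-means round (hypothetical helper): assign each point to its nearest
# center by Euclidean distance, then recompute each center as the mean of
# the points assigned to it. Assumes every cluster keeps at least one point.
kmeans_round <- function(points, centers) {
  # Squared distances: rows are points, columns are centers.
  d2 <- sapply(seq_len(nrow(centers)), function(j) {
    rowSums(sweep(points, 2, centers[j, ])^2)
  })
  assignment <- max.col(-d2, ties.method = "first")
  names(assignment) <- rownames(points)
  new_centers <- t(sapply(seq_len(nrow(centers)), function(j) {
    colMeans(points[assignment == j, , drop = FALSE])
  }))
  list(assignment = assignment, centers = new_centers)
}

# Initial centers are A1, B1, and C1, as stated in the problem.
first <- kmeans_round(pts, pts[c("A1", "B1", "C1"), ])

# Repeat rounds until the assignment stops changing.
state <- first
repeat {
  nxt <- kmeans_round(pts, state$centers)
  if (identical(nxt$assignment, state$assignment)) break
  state <- nxt
}
```

Printing `first$centers` shows the centers after the first round, and `split(names(state$assignment), state$assignment)` lists the members of each final cluster.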

4. [10 points] LIBSVM is one of the most popular tools for the SVM. Let’s practice using LIBSVM. Download the Wine data set available at the URL below. Then, arbitrarily divide wine.scale into a training set and a test set of approximately the same size. Run svm-train to build a classification model using the training set and run svm-predict to test the accuracy of the model using the test set. Identify the misclassified objects in the test set and report the accuracy of the model. You need to mention which kernel is used (the default is the Gaussian kernel).

LIBSVM: http://www.csie.ntu.edu.tw/~cjlin/libsvm/

Wine data set: http://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/multiclass.html#wine
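One possible way to produce the two halves is sketched below in base R. The helper `split_libsvm` and the output file names are hypothetical and not part of LIBSVM; the sketch only assumes that the input file holds one record per line, as LIBSVM-format files do.

```r
# Hypothetical helper (not part of LIBSVM): randomly split a file with one
# record per line into two files of roughly equal size.
split_libsvm <- function(path, train_path, test_path, seed = 1234) {
  lines <- readLines(path)
  lines <- lines[nzchar(trimws(lines))]        # ignore blank lines
  set.seed(seed)                               # make the split reproducible
  n <- length(lines)
  train_idx <- sample.int(n, size = floor(n / 2))
  test_idx <- setdiff(seq_len(n), train_idx)
  writeLines(lines[train_idx], train_path)
  writeLines(lines[test_idx], test_path)
  invisible(list(train = length(train_idx), test = length(test_idx)))
}

# Usage, assuming wine.scale has been downloaded to the working directory
# (the output file names are placeholders):
# split_libsvm("wine.scale", "wine.train", "wine.test")
#
# The two LIBSVM command-line steps would then look like:
#   svm-train wine.train wine.model
#   svm-predict wine.test wine.model wine.out
```

Comparing the labels written to the svm-predict output file with the first column of the test file, line by line, is one way to locate the misclassified objects.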

5. [16 points] Answer the following questions using R. For each question, hand in your R code as well as your answer (result). For these questions, using the commands below, load the Wine data set, standardize the data set, and define the wssplot function.

> data(wine, package="rattle")
> df <- scale(wine[-1])
> wssplot <- function(data, nc=15, seed=1234){
+   wss <- (nrow(data)-1)*sum(apply(data,2,var))
+   for (i in 2:nc){
+     set.seed(seed)
+     wss[i] <- sum(kmeans(data, centers=i)$withinss)}
+   plot(1:nc, wss, type="b", xlab="Number of Clusters",
+        ylab="Within groups sum of squares")}

1) Explain the meaning of the wssplot function. Determine a good number of clusters (k) from the plot produced by executing this function as shown below. [Hint: find the point where the y-axis value stops decreasing rapidly.]

> wssplot(df)
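The first line of wssplot may look unlike the rest of the function, so here is a small check of what it computes. This sketch uses the built-in iris measurements as stand-in data (an assumption made so that it runs without the rattle package); the variable names are hypothetical.

```r
# Stand-in data (assumption): standardized iris measurements, not the Wine data.
x <- scale(iris[, 1:4])

# Same expression as the first line of wssplot: (n - 1) times the sum of the
# per-column variances, i.e. the total sum of squares around the overall mean.
wss_k1 <- (nrow(x) - 1) * sum(apply(x, 2, var))

# k-means with a single cluster has the overall mean as its only center, so
# its total within-cluster sum of squares should agree with wss_k1.
km1 <- kmeans(x, centers = 1)
same <- isTRUE(all.equal(wss_k1, km1$tot.withinss))
```

If `same` is TRUE, the first element of `wss` can be read as the within-groups sum of squares for k = 1, which is why the loop in wssplot starts at 2.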

2) Perform k-means clustering with the value of k obtained in (1) over the standardized data set and report the number of records belonging to each cluster. Run the algorithm with 25 random starts and keep the best result by using the “nstart” parameter. Also, before k-means clustering, make sure to execute the following command to guarantee that the results are reproducible.

> set.seed(1234)
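The general pattern for such a call is sketched below. It uses the built-in iris measurements as stand-in data and k = 3 as a placeholder value; both are assumptions made so that the sketch runs on its own, and neither is the answer to (1).

```r
# Stand-in data and a placeholder k (assumptions, not the assignment's values).
x <- scale(iris[, 1:4])
k <- 3

set.seed(1234)                               # fix the random starts
fit <- kmeans(x, centers = k, nstart = 25)   # 25 random starts, best one kept

fit$size                                     # number of records in each cluster
```

With nstart greater than 1, kmeans tries that many random initial configurations and returns the one with the smallest total within-cluster sum of squares.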

3) Calculate variable means for each cluster in the original metric (i.e., not in the standardized metric). [Hint: use the “aggregate” command.]

4) Print out the confusion matrix. Also, explain how many records of Type 3 are assigned to incorrect clusters. [Hint: the “Type” variable is the class label of the Wine data set.]
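The two hints can be illustrated with a short sketch on stand-in data. Here the built-in iris data set plays the role of the Wine data and its Species column plays the role of Type; these substitutions, the choice of k = 3, and all variable names are assumptions made for illustration only.

```r
# Stand-in data (assumption): iris instead of the Wine data set.
raw <- iris[, 1:4]          # variables in the original metric
labels <- iris$Species      # class label, standing in for "Type"

set.seed(1234)
fit <- kmeans(scale(raw), centers = 3, nstart = 25)

# Per-cluster means computed on the unscaled variables.
cluster_means <- aggregate(raw, by = list(cluster = fit$cluster), FUN = mean)

# Cross-tabulation of class label against cluster: a confusion matrix.
confusion <- table(actual = labels, cluster = fit$cluster)
```

In each row of `confusion`, the entries outside the column that holds most of that class count the records assigned to other clusters.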