The Deep Web

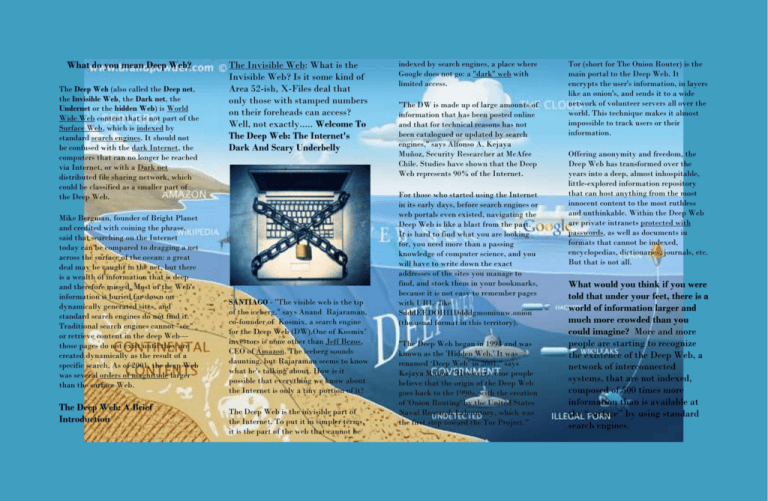

What do you mean Deep Web?

The Deep Web (also called the Deep net, the Invisible Web, the Dark net, the Undernet or the hidden Web) is World Wide Web content that is not part of the Surface Web, which is indexed by standard search engines. It should not be confused with the dark Internet, the computers that can no longer be reached via the Internet, or with a Dark net distributed file sharing network, which could be classified as a smaller part of the Deep Web.

Mike Bergman, founder of Bright Planet and credited with coining the phrase, said that searching on the Internet today can be compared to dragging a net across the surface of the ocean: a great deal may be caught in the net, but there is a wealth of information that is deep and therefore missed. Most of the Web's information is buried far down on dynamically generated sites, and standard search engines do not find it. Traditional search engines cannot "see" or retrieve content in the deep Web; those pages do not exist until they are created dynamically as the result of a specific search. As of 2001, the deep Web was several orders of magnitude larger than the surface Web.

The Deep Web: A Brief Introduction

The Invisible Web: What is the Invisible Web? Is it some kind of Area 52-ish, X-Files deal that only those with stamped numbers on their foreheads can access? Well, not exactly...

Welcome To The Deep Web: The Internet's Dark And Scary Underbelly

SANTIAGO - "The visible web is the tip of the iceberg," says Anand Rajaraman, co-founder of Kosmix, a search engine for the Deep Web (DW). One of Kosmix's investors is none other than Jeff Bezos, CEO of Amazon. The iceberg sounds daunting, but Rajaraman seems to know what he's talking about. How is it possible that everything we know about the Internet is only a tiny portion of it? The Deep Web is the invisible part of the Internet.
To put it in simpler terms, it is the part of the web that cannot be indexed by search engines, a place where Google does not go: a "dark" web with limited access. "The DW is made up of large amounts of information that has been posted online and that for technical reasons has not been catalogued or updated by search engines," says Alfonso A. Kejaya Muñoz, Security Researcher at McAfee Chile. Studies have shown that the Deep Web represents 90% of the Internet.

For those who started using the Internet in its early days, before search engines or web portals even existed, navigating the Deep Web is like a blast from the past. It is hard to find what you are looking for, you need more than a passing knowledge of computer science, and you will have to write down the exact addresses of the sites you manage to find and store them in your bookmarks, because it is not easy to remember pages with URLs like SdddEEDOHIIDdddgmomiunw.onion (the usual format in this territory).

"The Deep Web began in 1994 and was known as the 'Hidden Web.' It was renamed 'Deep Web' in 2001," says Kejaya Muñoz. "However, some people believe that the origin of the Deep Web goes back to the 1990s, with the creation of 'Onion Routing' by the United States Naval Research Laboratory, which was the first step toward the Tor Project."

Tor (short for The Onion Router) is the main portal to the Deep Web. It encrypts the user's information in layers, like an onion's, and sends it through a wide network of volunteer servers all over the world. This technique makes it almost impossible to track users or their information. Offering anonymity and freedom, the Deep Web has transformed over the years into a deep, almost inhospitable, little-explored information repository that can host anything from the most innocent content to the most ruthless and unthinkable.
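The layering idea behind onion routing can be illustrated with a toy sketch. This is not Tor's actual protocol or cryptography: the cipher below is a deliberately simple XOR keystream built from SHA-256, and the relay names, keys, and the functions `build_onion` and `peel` are hypothetical, chosen only to show that each relay removes one layer and learns nothing but the next hop.

```python
import hashlib


def _keystream(key: bytes, length: int) -> bytes:
    # Toy keystream: SHA-256 in counter mode. Illustration only, NOT secure.
    blocks = []
    counter = 0
    while sum(len(b) for b in blocks) < length:
        blocks.append(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    return b"".join(blocks)[:length]


def toy_crypt(key: bytes, data: bytes) -> bytes:
    # XOR with the keystream; applying it twice with the same key restores data.
    return bytes(a ^ b for a, b in zip(data, _keystream(key, len(data))))


def build_onion(message: bytes, path: list[tuple[str, bytes]], destination: str) -> bytes:
    # path is [(relay_name, relay_key), ...] in travel order (hypothetical values).
    # Wrap from the inside out: the last relay's layer is added first.
    payload = message
    next_hop = destination
    for name, key in reversed(path):
        payload = toy_crypt(key, next_hop.encode() + b"|" + payload)
        next_hop = name
    return payload


def peel(key: bytes, onion: bytes) -> tuple[str, bytes]:
    # One relay removes one layer and sees only where to forward the remainder.
    next_hop, _, inner = toy_crypt(key, onion).partition(b"|")
    return next_hop.decode(), inner
```

In this sketch the sender wraps the message once per relay, and each relay calls `peel` with its own key, so the first relay never sees the message and the last relay never sees who sent it. A real system would use authenticated encryption and negotiated keys rather than this toy cipher.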
Within the Deep Web are private intranets protected with passwords, as well as documents in formats that cannot be indexed, encyclopedias, dictionaries, journals, etc. But that is not all.

What would you think if you were told that under your feet, there is a world of information larger and much more crowded than you could imagine? More and more people are starting to recognize the existence of the Deep Web, a network of interconnected systems that are not indexed, holding 500 times more information than is available at the "surface" through standard search engines. The Deep Web represents a vast portion of the World Wide Web that is not visible to the average person. Ordinary search engines find content on the web using software called "crawlers". This technique is ineffective for finding the hidden resources of the Web. Wikipedia is a good example of a site that uses crowdsourcing to harvest information from the web and deep web to create a vast swath of information on the "surface."

Do you think that "The Deep Web" is Important?

The Deep Web is important because as it becomes easier to explore, we are going to see a much broader and more accurate distribution of information. The surface is made up of information that generally directs people towards commercial endeavors: buying, selling, advertising. The use of the deep web will be instrumental in informing the public on new technologies, free educational materials and groundbreaking ideas. Bringing these issues to the surface will help to bring society forward and out of a purely commercialized mindset. As always, do some of your own research and find out how using deep web searches can help you in your own endeavors.

"The invisible portion of the Web will continue to grow exponentially before the tools to uncover the hidden Web are ready for general use"

Deep Web Size Analysis:

In order to analyze the total size of the deep Web, we need an average site size in documents and data storage to use as a multiplier applied to the entire population estimate. Results are shown in Figure 4 and Figure 5. As discussed for the large site analysis, obtaining this information is not straightforward and involves considerable time evaluating each site. To keep estimation time manageable, we chose a +/- 10% confidence interval at the 95% confidence level, requiring a total of 100 random sites to be fully characterized.

We randomized our listing of 17,000 search site candidates. We then proceeded to work through this list until 100 sites were fully characterized. We followed a less intensive process than in the large sites analysis for determining total record or document count for the site. Exactly 700 sites were inspected in their randomized order to obtain the 100 fully characterized sites. All sites inspected received characterization as to site type and coverage; this information was used in other parts of the analysis.

The 100 sites that could have their total record/document count determined were then sampled for average document size (HTML-included basis). Random queries were issued to the searchable database with results reported as HTML pages. A minimum of ten of these were generated, saved to disk, and then averaged to determine the mean site page size. In a few cases, such as bibliographic databases, multiple records were reported on a single HTML page. In these instances, three total query results pages were generated, saved to disk, and then averaged based on the total number of records reported on those three pages.

Deep Web Site Qualification:

An initial pool of 53,220 possible deep Web candidate URLs was identified from existing compilations at seven major sites and three minor ones. After harvesting, this pool resulted in 45,732 actual unique listings after tests for duplicates.
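The text does not say how the duplicate tests were performed. As a rough illustration only, one common approach is to normalize each URL and keep the first listing for each normalized form. The normalization rules below (lowercasing scheme and host, dropping fragments and trailing slashes) and the function names `normalize` and `unique_listings` are assumptions for this sketch, not the method used in the study.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize(url: str) -> str:
    # Assumed normalization: lowercase scheme and host, drop the fragment,
    # and treat "/db/" and "/db" as the same path.
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def unique_listings(urls: list[str]) -> list[str]:
    # Keep the first occurrence of each normalized URL, preserving input order.
    seen = set()
    unique = []
    for url in urls:
        key = normalize(url)
        if key not in seen:
            seen.add(key)
            unique.append(url)
    return unique
```

Applied to a candidate pool, a pass like this shrinks the list in the same way the 53,220 candidates shrank to 45,732 unique listings, although the actual criteria used there may have been stricter or looser.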
Cursory inspection indicated that in some cases the subject page was one link removed from the actual search form. Criteria were developed to predict when this might be the case. The Bright Planet technology was used to retrieve the complete pages and fully index them for both the initial unique sources and the one-link removed sources. A total of 43,348 resulting URLs were actually retrieved.

We then applied a filter criterion to these sites to determine if they were indeed search sites. This proprietary filter involved inspecting the HTML content of the pages, plus analysis of page text content. This brought the total pool of deep Web candidates down to 17,579 URLs.

Subsequent hand inspection of 700 random sites from this listing identified further filter criteria. Ninety-five of these 700, or 13.6%, did not fully qualify as search sites. This correction has been applied to the entire candidate pool and the results presented. Some of the criteria developed when hand-testing the 700 sites were then incorporated back into an automated test within the Bright Planet technology for qualifying search sites with what we believe is 98% accuracy. Additionally, automated means for discovering further search sites have been incorporated into our internal version of the technology based on what we learned.

Deep Web is Higher Quality:

"Quality" is subjective: If you get the results you desire, that is high quality; if you don't, there is no quality at all. When Bright Planet assembles quality results for its Web-site clients, it applies additional filters and tests to computational linguistic scoring. For example, university course listings often contain many of the query terms that can produce high linguistic scores, but they have little intrinsic content value unless you are a student looking for a particular course. Various classes of these potential false positives exist and can be discovered and eliminated through learned business rules.

Our measurement of deep vs. surface Web quality did not apply these more sophisticated filters. We relied on computational linguistic scores alone. We also posed five queries across various subject domains. Using only computational linguistic scoring does not introduce systematic bias in comparing deep and surface Web results because the same criteria are used in both. The relative differences between surface and deep Web should hold, even though the absolute values are preliminary and will overestimate "quality."

Deep Web Growing Faster than Surface Web

Lacking time-series analysis, we used the proxy of domain registration date to measure the growth rates for each of 100 randomly chosen deep and surface Web sites. These results are presented as a scattergram with superimposed growth trend lines in Figure 7.

General Deep Web Characteristics:

Deep Web content has some significant differences from surface Web content. Deep Web documents (13.7 KB mean size; 19.7 KB median size) are on average 27% smaller than surface Web documents. Though individual deep Web sites have tremendous diversity in their number of records, ranging from tens or hundreds to hundreds of millions (a mean of 5.43 million records per site but with a median of only 4,950 records), these sites are on average much, much larger than surface sites. The rest of this paper will serve to amplify these findings.

The mean deep Web site has a Web-expressed (HTML-included basis) database size of 74.4 MB (median of 169 KB). Actual record counts and size estimates can be derived from one-in-seven deep Web sites.

On average, deep Web sites receive about half again as much monthly traffic as surface sites (123,000 page views per month vs. 85,000). The median deep Web site receives somewhat more than two times the traffic of a random surface Web site (843,000 monthly page views vs. 365,000). Deep Web sites on average are more highly linked to than surface sites by nearly a factor of two (6,200 links vs. 3,700 links), though the median deep Web site is less so (66 vs. 83 links). This suggests that well-known deep Web sites are highly popular, but that the typical deep Web site is not well known to the Internet search public.

One of the more counterintuitive results is that 97.4% of deep Web sites are publicly available without restriction; a further 1.6% are mixed (limited results publicly available with greater results requiring subscription and/or paid fees); only 1.1% of results are totally subscription or fee limited. This result is counterintuitive because of the visible prominence of subscriber-limited sites such as Dialog, Lexis-Nexis, Wall Street Journal Interactive, etc. (We got the document counts from the sites themselves or from other published sources.) However, once the broader pool of deep Web sites is looked at beyond the large, visible, fee-based ones, public availability dominates.

Analysis of Largest Deep Web Sites:

More than 100 individual deep Web sites were characterized to produce the listing of sixty sites reported in the next section. Site characterization required three steps:

1. Estimating the total number of records or documents contained on that site.
2. Retrieving a random sample of a minimum of ten results from each site and then computing the expressed HTML-included mean document size in bytes. This figure, times the number of total site records, produces the total site size estimate in bytes.
3. Indexing and characterizing the search-page form on the site to determine subject coverage.

Estimating total record count per site was often not straightforward. A series of tests was applied to each site; they are listed in descending order of importance and confidence in deriving the total document count:

1. E-mail messages were sent to the webmasters or contacts listed for all sites identified, requesting verification of total
record counts and storage sizes (uncompressed basis); about 13% of the sites shown in Table 2 provided direct documentation in response to this request.
2. Total record counts as reported by the site itself. This involved inspecting related pages on the site, including help sections, site FAQs, etc.
3. Documented site sizes presented at conferences, estimated by others, etc. This step involved comprehensive Web searching to identify reference sources.
4. Record counts as provided by the site's own search function. Some site searches provide total record counts for all queries submitted. For others that use the NOT operator and allow its stand-alone use, a query term known not to occur on the site such as "NOT ddfhrwxxct" was issued. This approach returns an absolute total record count. Failing these two options, a broad query was issued that would capture the general site content; this number was then corrected for an empirically determined "coverage factor," generally in the 1.2 to 1.4 range.
5. A site that failed all of these tests could not be measured and was dropped from the results listing.

Deep Web Technologies is a software company that specializes in mining the Deep Web, the part of the Internet that is not directly searchable through ordinary web search engines.[1] The company produces a proprietary software platform, "Explorit", for such searches. It also produces the federated search engine ScienceResearch.com, which provides free federated public searching of a large number of databases and is also produced in specialized versions: Biznar for business research, Mednar for medical research, and customized versions for individual clients.[2]

Advantages of "The Deep Web"

The deep Web, otherwise known as the hidden or invisible Web, refers to Web content that general purpose search engines, such as Google and Yahoo!, cannot reach. The deep Web is estimated to contain 500 times more data than the surface Web, according to the University of California, and, if it could be accessed easily, would provide numerous benefits to Web users.

Data

1. Traditional search engines operate many automated programs, known as spiders or crawlers, which roam from page to page on the Web by following hyperlinks and retrieve, index and categorize the content that they find. Most deep Web content resides in databases, rather than on static HTML pages, so it isn't indexed by search engines and remains hidden from users. A search engine capable of interrogating the deep Web would provide users with access to vast swathes of unexplored data.

Structure

2. The deep Web not only contains many times more data than the surface Web, but data that is structured, or at least semi-structured, in databases. This structure could be exploited by deep Web technologies, such that data (financial information, public records, medical research and many other types of material) could be cross-referenced automatically to create a globally linked database. This, in turn, could pave the way towards the so-called Semantic Web, the web of data that can be processed directly or indirectly by machines, originally envisaged by Tim Berners-Lee, the inventor of the World Wide Web.

User Experience

3. Currently, if Web users want to explore the deep Web, they must know the Web address of one or more sites on the deep Web and, even then, must invest significant amounts of time and effort visiting sites, querying them and examining the results. Several organizations have recognized the importance of making deep Web content accessible from a single, central location, in the same way as surface Web content, and are developing new search engines that can query multiple deep Web data sources and aggregate the results.

Perception

4. Web users currently perceive the Web not so much by the content that actually exists as by the tiny proportion of the content that is indexed by traditional search engines. Professor John Allen Paulos once said, "The Internet is the world's largest library. It's just that all the books are on the floor." However, the deep Web contains structured, scholarly material from reliable academic and non-academic sources, so the perception of the Web could change for the better if that material could be retrieved easily.
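The point made under Data, that link-following crawlers never reach database-backed content, can be sketched with a small in-memory model. Everything here is hypothetical: the page paths, the two sample records, and the functions `crawl` and `search` are invented for illustration and do not describe any real search engine.

```python
from collections import deque

# Hypothetical static pages: path -> hyperlinks found on that page.
STATIC_PAGES = {
    "/": ["/about", "/search"],
    "/about": ["/"],
    "/search": [],  # holds only a search form, no links to individual records
}

# Hypothetical database behind the search form.
RECORDS = {
    "r1": "deep web size estimate",
    "r2": "onion routing history",
}


def crawl(start: str) -> set[str]:
    # Breadth-first traversal that only follows hyperlinks, as a crawler would.
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for link in STATIC_PAGES.get(page, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return seen


def search(query: str) -> list[str]:
    # Result pages are generated only in response to a specific query.
    return [f"/record/{rid}" for rid, text in RECORDS.items() if query in text]
```

In this model `crawl("/")` visits the three static pages and stops at the search form, while `search("onion")` produces a record page that no hyperlink points to. A deep Web search tool would, in effect, have to issue queries like this against many such forms and combine the results.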