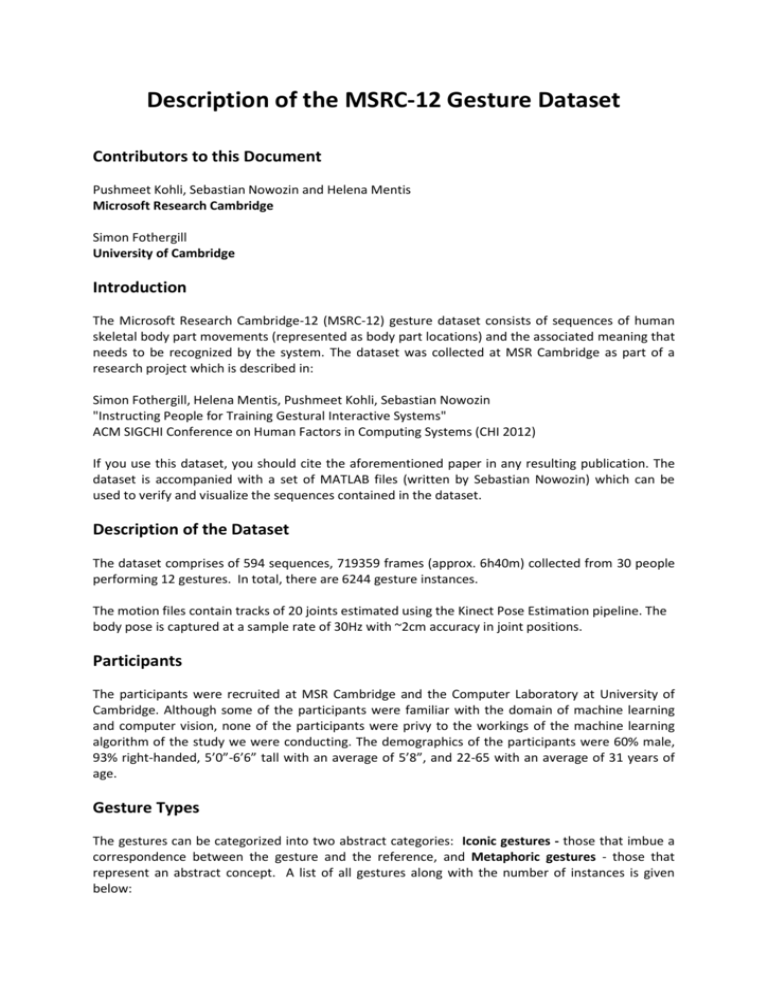

Description of the MSRC-12 Gesture Dataset

Contributors to this Document

Pushmeet Kohli, Sebastian Nowozin and Helena Mentis (Microsoft Research Cambridge)

Simon Fothergill (University of Cambridge)

Introduction

The Microsoft Research Cambridge-12 (MSRC-12) gesture dataset consists of sequences of human skeletal body part movements (represented as body part locations) and the associated meaning that needs to be recognized by the system. The dataset was collected at MSR Cambridge as part of a research project which is described in:

Simon Fothergill, Helena Mentis, Pushmeet Kohli, Sebastian Nowozin. "Instructing People for Training Gestural Interactive Systems". ACM SIGCHI Conference on Human Factors in Computing Systems (CHI 2012).

If you use this dataset, you should cite the aforementioned paper in any resulting publication.

The dataset is accompanied by a set of MATLAB files (written by Sebastian Nowozin) which can be used to verify and visualize the sequences contained in the dataset.

Description of the Dataset

The dataset comprises 594 sequences and 719359 frames (approx. 6h40m) collected from 30 people performing 12 gestures. In total, there are 6244 gesture instances. The motion files contain tracks of 20 joints estimated using the Kinect Pose Estimation pipeline. The body pose is captured at a sample rate of 30 Hz with ~2 cm accuracy in joint positions.

Participants

The participants were recruited at MSR Cambridge and the Computer Laboratory at the University of Cambridge. Although some of the participants were familiar with the domain of machine learning and computer vision, none of them were privy to the workings of the machine learning algorithm in the study we were conducting. The participants were 60% male and 93% right-handed, between 5’0” and 6’6” tall (average 5’8”), and between 22 and 65 years of age (average 31).
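The approximate duration quoted in the dataset description can be reproduced from the frame count and the 30 Hz sample rate. The following is a minimal sketch of that arithmetic; the function name `duration_hms` is illustrative and is not part of the MATLAB files shipped with the dataset.

```python
# Figures quoted in the dataset description.
TOTAL_FRAMES = 719359
SAMPLE_RATE_HZ = 30


def duration_hms(frames: int, rate_hz: int) -> tuple[int, int, int]:
    """Convert a frame count at a fixed sample rate to whole (hours, minutes, seconds)."""
    total_seconds = frames // rate_hz  # drop the final partial second
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return hours, minutes, seconds


hours, minutes, seconds = duration_hms(TOTAL_FRAMES, SAMPLE_RATE_HZ)
print(f"{hours}h {minutes}m {seconds}s")  # prints "6h 39m 38s"
```

The result, 6h 39m 38s, is consistent with the "approx. 6h40m" stated above.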
Gesture Types

The gestures can be categorized into two abstract categories: Iconic gestures - those that imbue a correspondence between the gesture and the reference, and Metaphoric gestures - those that represent an abstract concept. A list of all gestures along with the number of instances is given below:

A. Iconic Gestures

| ID | Description | Tag name | Instances |
|----|-------------|----------|-----------|
| 2 | Crouch or hide | G2 Duck | 500 |
| 4 | Put on night vision goggles | G4 Goggles | 508 |
| 6 | Shoot a pistol | G6 Shoot | 511 |
| 8 | Throw an object | G8 Throw | 515 |
| 10 | Change weapon | G10 Change weapon | 498 |
| 12 | Kick | G12 Kick | 502 |

B. Metaphoric Gestures

| ID | Description | Tag name | Instances |
|----|-------------|----------|-----------|
| 1 | Start System/Music/Raise Volume | G1 lift outstretched arms | 508 |
| 3 | Navigate to next menu / move arm right | G3 Push right | 522 |
| 5 | Wind up the music | G5 Wind it up | 649 |
| 7 | Take a Bow to end music session | G7 Bow | 507 |
| 9 | Protest the music | G9 Had enough | 508 |
| 11 | Move up the tempo of the song / beat both arms | G11 Beat both | 516 |

Instruction method

To study how the instruction modality affected the movements of the subjects, we collected data by giving different types of instructions to our participants. We chose to provide participants with three familiar, easy-to-prepare instruction modalities, and their combinations, that did not require the participant to have any sophisticated knowledge. The three were:

(1) descriptive text breaking down the performance kinematics,
(2) an ordered series of static images of a person performing the gesture, with arrows annotating as appropriate, and
(3) video (dynamic images) of a person performing the gesture.

We wanted the media to be transparent so that they fulfil their primary function of conveying the kinematics.

More concretely, for each gesture we roughly have data using: Text (10 people), Images (10 people), Video (10 people), Video with text (10 people), Images with text (10 people).
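For programmatic use, the two gesture tables above can be transcribed into a lookup table, which also makes it easy to cross-check the per-gesture instance counts against the total of 6244 reported in the dataset description. This is only a sketch: the names `GESTURES` and `total_instances` are illustrative and do not come from the dataset's MATLAB files.

```python
# Gesture ID -> (tag name, category, instance count), transcribed from the tables above.
GESTURES = {
    1: ("G1 lift outstretched arms", "metaphoric", 508),
    2: ("G2 Duck", "iconic", 500),
    3: ("G3 Push right", "metaphoric", 522),
    4: ("G4 Goggles", "iconic", 508),
    5: ("G5 Wind it up", "metaphoric", 649),
    6: ("G6 Shoot", "iconic", 511),
    7: ("G7 Bow", "metaphoric", 507),
    8: ("G8 Throw", "iconic", 515),
    9: ("G9 Had enough", "metaphoric", 508),
    10: ("G10 Change weapon", "iconic", 498),
    11: ("G11 Beat both", "metaphoric", 516),
    12: ("G12 Kick", "iconic", 502),
}

REPORTED_TOTAL = 6244  # total gesture instances stated in the dataset description


def total_instances(category=None):
    """Sum instance counts, optionally restricted to 'iconic' or 'metaphoric'."""
    return sum(
        count
        for _tag, cat, count in GESTURES.values()
        if category is None or cat == category
    )


print(total_instances("iconic"), total_instances("metaphoric"), total_instances())
```

The iconic and metaphoric counts sum to 3034 and 3210 respectively, which together match the reported total of 6244.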
File Naming Convention

There are 594 pose sequences (.csv files) along with 594 associated “tagstream” files, which contain information about when a particular gesture from the list above should be detected. The format of the file names is:

P[#A]_[#B]_g[#C][A]_s[#D].[csv|tagstream]

[#A] and [#B] encode the instruction that was given to the subject (see table below and the paper). The presence of the letter “A” after #C indicates that the movements were generated when two instructions were given (e.g. text + images, or text + video).

[#C] denotes the identifier of the gesture being performed in the file. This is redundant, since the same information is also present in the tagstream file.

[#D] denotes the identifier of the human subject whose movements are captured in the file.

MATLAB files

The dataset comes with a set of MATLAB scripts (written by Sebastian Nowozin) which allow you to verify the content and to visualize the data. The basic description of the files is as follows:

1. LOAD_FILE -- Load gesture recognition sequence.
2. SKEL_VIS -- Visualize a skeleton in 3D coordinates.
3. Demo 1: Visualize a sequence.
4. Verify integrity of the dataset.

FAQs

Q: Is it collected by a Kinect sensor?
A: Yes.

Q: Please can I have RGB and depth map data?
A: We are not able to share this data for legal reasons, as it makes it too easy to identify the person.

Q: Where is the file P2_1_2A_p12.tagstream?
A: The initial release of the dataset included a typographic error - this file was named p2_1_2A_p12.tagstream, with a lowercase 'p'.

Q: Why does P1_1_12A_p19.csv contain all 0s for every frame, even though the corresponding tagstream file says there should be 10 'kick' gestures?
A: On a couple of occasions we did not realise the Kinect capture system hadn't recognised (and therefore recorded) the skeleton, but we still annotated the RGB footage. Unfortunately I think this is the case for file P1_1_12A_p19.csv - the performer was quite tall...
Q: Why does the letter “A” occur after #C, not #B as in the initial release of the documentation?
A: There was a mistake in the initial documentation. The letter "A" should be after #C, as it refers to the instruction for the performance of the gesture, not the group of performers. It means that the instruction used a compound semiotic modality such as Video + Text or Image + Text.

Q: Why are the descriptions of the gestures not consistent with the tag names in the tagstream files?
A: This is for historical reasons. A mapping is provided in later versions of the documentation. The tag names focus more on the kinematics of the performances, which could be assigned any domain-specific meaning. The documentation describes the gestures in the context of specific example applications such as music playing systems.

Q: Why are there no g and s in the names of the files?
A: The initial release of the dataset was updated with this clearer naming convention after 11/07/2012.

Q: The table in the initial release of the documentation is a bit confusing for me: does the "+" sign mean AND or OR?
A: The "+" sign means the UNION of the two sets of performers.

Community

The dataset was advertised in various RSS feeds, at CVPR 2012 and at cvpapers.com. Interest has already been shown by people collecting a large and diverse quantity of training data and carrying out gesture recognition research at institutions including the University of Central Florida.

Acknowledgements

We would like to thank the staff, students and interns of Microsoft Research, Cambridge and the University of Cambridge for participating in our user study. We would also like to thank Olivia Nicell for help with data gathering and tabulation.
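As an illustration of the file naming convention described earlier, the following sketch splits a file name into its documented fields. It is an assumption-laden example rather than part of the dataset tooling: the names `SequenceName` and `parse_sequence_name` are hypothetical, the example file names are constructed from the documented pattern and are not guaranteed to exist in the dataset, and only the updated convention (with `g` and `s`) is accepted. Names from the initial release, such as those quoted in the FAQs, are rejected.

```python
import re
from dataclasses import dataclass

# Pattern for P[#A]_[#B]_g[#C][A]_s[#D].[csv|tagstream] (updated convention only).
_NAME_RE = re.compile(
    r"^P(?P<a>\d+)_(?P<b>\d+)_g(?P<gesture>\d+)(?P<combined>A?)_s(?P<subject>\d+)"
    r"\.(?P<ext>csv|tagstream)$"
)


@dataclass(frozen=True)
class SequenceName:
    instruction_a: int          # [#A], part of the instruction encoding
    instruction_b: int          # [#B], part of the instruction encoding
    gesture_id: int             # [#C], gesture identifier (1-12)
    combined_instruction: bool  # True if the letter "A" follows #C
    subject_id: int             # [#D], subject identifier
    extension: str              # "csv" or "tagstream"


def parse_sequence_name(filename: str) -> SequenceName:
    """Parse a file name following the documented convention, or raise ValueError."""
    match = _NAME_RE.match(filename)
    if match is None:
        raise ValueError(f"not a recognised MSRC-12 file name: {filename!r}")
    return SequenceName(
        instruction_a=int(match["a"]),
        instruction_b=int(match["b"]),
        gesture_id=int(match["gesture"]),
        combined_instruction=match["combined"] == "A",
        subject_id=int(match["subject"]),
        extension=match["ext"],
    )


# Constructed example name following the documented pattern.
print(parse_sequence_name("P1_1_g12A_s19.csv"))
```

Keeping the pattern strict means that misnamed files, such as the lowercase 'p' case mentioned in the FAQs, surface as errors instead of being silently parsed.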