Answers


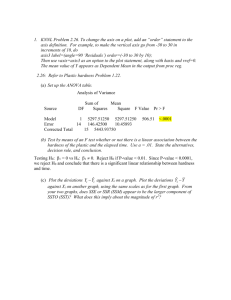

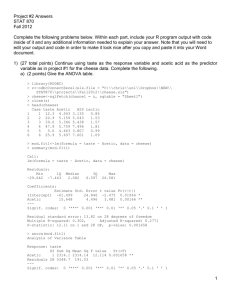

Test #1 Answers
STAT 870
Fall 2012

Complete the problems below. Make sure to fully explain all answers and show your work to receive full credit!

1) (31 total points) A study was performed to determine the effects of a drug on lowering blood cholesterol levels in people. The file cholest.csv is a comma delimited file containing the data. I read the data into R in the following manner:

    > cholest<-read.table(file = "C:\\data\\cholest.csv", header = TRUE, sep = ",")
    > head(cholest)
       x     y
    1  0 -5.25
    2 27 -1.50
    3 71 59.50
    4 95 32.50
    5  0 -7.25
    6 28 23.50

There were 164 people who participated in the study, and each row in the data set represents one person. Within the data set, x is a predictor variable indicating the amount of compliance (expressed as a percentage) over a period of time with taking the drug as specified by the study protocol. Also, y is a response variable indicating the amount of improvement in cholesterol levels (the larger the value, the more improvement). Using this data, complete the following.

a) (9 points) Describe the relationship between x and y using a sample linear regression model (no transformations of the variables should be used).

    > mod.fit<-lm(formula = y ~ x, data = cholest)
    > summary(mod.fit)

    Call:
    lm(formula = y ~ x, data = cholest)

    Residuals:
       Min     1Q Median     3Q    Max
    -55.83 -13.69   0.15  15.59  60.07

    Coefficients:
                Estimate Std. Error t value Pr(>|t|)
    (Intercept) -2.30725    3.44903  -0.669    0.504
    x            0.58410    0.04967  11.760   <2e-16 ***
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    Residual standard error: 22.11 on 162 degrees of freedom
    Multiple R-squared: 0.4605,  Adjusted R-squared: 0.4572
    F-statistic: 138.3 on 1 and 162 DF,  p-value: < 2.2e-16

    > plot(x = cholest$x, y = cholest$y, xlab = "Compliance", ylab = "Improvement")
    > curve(expr = mod.fit$coefficients[1] + mod.fit$coefficients[2]*x, add = TRUE)

[Plot: Improvement (vertical axis) vs. Compliance (horizontal axis, 0 to 100) with the estimated regression line drawn through the points]

The sample model is $\hat{Y} = -2.3073 + 0.5841X$.
For every one-unit increase in compliance, the amount of improvement is estimated to increase by 0.5841. Putting this on a perhaps more meaningful scale, we could say that with every 10% increase in compliance, the improvement in cholesterol level is estimated to increase by about 5.8.

b) (8 points) Perform a hypothesis test to determine if there is sufficient evidence of a linear relationship between compliance and the amount of improvement. Use $\alpha = 0.1$.

i) $H_0: \beta_1 = 0$ vs. $H_a: \beta_1 \ne 0$
ii) $t = 11.76$ and p-value $< 2 \times 10^{-16}$
iii) $\alpha = 0.1$
iv) Because $2 \times 10^{-16} < 0.1$, reject $H_0$
v) There is sufficient evidence to indicate a linear relationship between compliance and the amount of improvement.

c) (8 points) Find the 90% prediction interval for the amount of improvement if the compliance value is 50 (50% compliance with taking the drug). Interpret the interval.

The interval is

$$\hat{Y}_h \pm t(1-\alpha/2;\, n-2)\sqrt{\widehat{Var}(Y_{h(new)} - \hat{Y}_h)}$$

where

$$\widehat{Var}(Y_{h(new)} - \hat{Y}_h) = MSE\left[1 + \frac{1}{n} + \frac{(X_h - \bar{X})^2}{\sum (X_i - \bar{X})^2}\right]$$

    > predict(object = mod.fit, newdata = data.frame(x = 50), se.fit = TRUE, interval = "prediction", level = 0.90)
    $fit
           fit       lwr      upr
    1 26.89774 -9.794262 63.58973

    $se.fit
    [1] 1.797873

    $df
    [1] 162

    $residual.scale
    [1] 22.1066

The 90% prediction interval is $-9.79 < Y_{h(new)} < 63.59$. With 90% confidence, the amount of improvement for a person with 50% compliance is between -9.79 and 63.59. Another way to interpret the interval is as follows: If the sampling process were repeated many, many times with a prediction interval calculated each time, we would expect approximately 90% of the intervals to contain $Y_{h(new)}$.

d) (6 points) Using your interval from part c), is there sufficient evidence to indicate that a 50% compliance in taking the drug will improve cholesterol levels? Explain.

This is quite a wide interval! Because the interval contains 0, there is not sufficient evidence to indicate that a 50% compliance will improve cholesterol levels. A few students thought that the confidence interval for E(Y) would be more appropriate to examine in this situation.
The reason is that it helps you to determine if there will be an improvement on average. This would be o.k. to do. The confidence interval does not contain 0, so there is evidence that the drug would be beneficial on average.

2) (36 total points; Chapter 3 homework) The file diamond_prices.csv is a comma delimited file containing the carat size of a diamond and its corresponding price. I read the data into R in the following manner:

    > diamonds<-read.table(file = "C:\\data\\diamond_prices.csv", header = TRUE, sep = ",")
    > head(diamonds)
      carat    price
    1  0.30 745.9184
    2  0.30 865.0820
    3  0.30 865.0820
    4  0.30 721.8565
    5  0.31 940.1322
    6  0.31 890.8626

Use price as the response variable and carat as the predictor variable to complete the problems below.

a) (6 points) Find and state the sample linear regression model (no transformations of the variables should be used).

    > mod.fit<-lm(formula = price ~ carat, data = diamonds)
    > summary(mod.fit)

    Call:
    lm(formula = price ~ carat, data = diamonds)

    Residuals:
        Min      1Q  Median      3Q     Max
    -1297.5  -346.2   -66.5   249.3  3776.3

    Coefficients:
                Estimate Std. Error t value Pr(>|t|)
    (Intercept) -1316.73      90.82  -14.50   <2e-16 ***
    carat        6645.02     131.83   50.41   <2e-16 ***
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    Residual standard error: 640.3 on 306 degrees of freedom
    Multiple R-squared: 0.8925,  Adjusted R-squared: 0.8922
    F-statistic: 2541 on 1 and 306 DF,  p-value: < 2.2e-16

The sample model is $\widehat{\text{price}} = -1316.73 + 6645.02\,\text{carat}$.

b) Using the model in part a), comment on the four items below. In each of your answers, make sure to specifically state what measures (e.g., plots) were examined along with justification for your conclusions.

i) (6 points) Is the predictor variable correctly specified in the model? (linear relationship between carat and price)

A plot of the residuals vs. carat shows somewhat of a quadratic pattern among the residuals. This indicates the predictor variable may not be correctly specified in the model.
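The residuals vs. predictor check described in i) can be sketched as follows. This is only a minimal illustration under stated assumptions: the data are simulated (they are NOT the diamond_prices.csv values), the curved "true" relationship and the object names `sim`, `fit.lin`, `curve.check`, and `quad.coef` are hypothetical, and the final regression of the residuals on carat and carat^2 is an added numeric companion to the plot rather than something the exam used.

```r
# Hypothetical illustration: simulated data, NOT the diamond_prices.csv values
set.seed(870)
carat <- runif(n = 300, min = 0.2, max = 1.1)
# Assumed curved relationship so that a straight-line model is misspecified
price <- 500 + 1000*carat + 5000*carat^2 + rnorm(n = 300, mean = 0, sd = 300)
sim <- data.frame(carat = carat, price = price)

# Fit the straight-line model, in the same way as part a)
fit.lin <- lm(formula = price ~ carat, data = sim)
sim$resid <- residuals(fit.lin)

# Residuals vs. predictor: a U-shaped band of points suggests a missing carat^2 term
plot(x = sim$carat, y = sim$resid, xlab = "carat", ylab = "Residuals")
abline(h = 0, lty = "dotted")

# Numeric companion to the plot: regress the residuals on carat and carat^2
curve.check <- lm(formula = resid ~ carat + I(carat^2), data = sim)
quad.coef <- coef(curve.check)["I(carat^2)"]
quad.coef
```

If the straight-line model were adequate, the residuals would scatter randomly around 0 and the carat^2 coefficient in the last fit would be close to 0. With the curvature assumed here it should come out clearly positive.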
ii) (6 points) Is there evidence of non-constant error variance?

A plot of the residuals vs. predicted values shows somewhat of a funnel pattern (variability in the residuals increases as a function of $\hat{Y}$). The Levene test rejects constant variance. The Box-Cox method suggests a $Y^{1/3}$ transformation, and the corresponding interval for $\lambda$ does not contain 1.

iii) (6 points) Are there any outliers?

A plot of the semistudentized residuals vs. predicted values shows about 6 outliers. The largest is approximately equal to 6. Using a normal approximation, this would be quite unusual. In particular, one would expect only about 1 out of 308 observations to have a semistudentized residual outside of $\pm 3$. Also, semistudentized residuals as large as 6 are very, very, very unusual.

iv) (6 points) Is the normality assumption of the error terms satisfied?

A QQ-plot and a histogram of the semistudentized residuals show that the normality assumption may be violated. Both show a large departure from normality on the right side.

Below are the code and plots:

    > source("C:/chris/unl/Dropbox/NEW/STAT870/Chapter3/examine.mod.simple.R")
    > save.it<-examine.mod.simple(mod.fit.obj = mod.fit, const.var.test = TRUE, boxcox.find = TRUE)
    > names(save.it)
    [1] "sum.data" "semi.stud.resid" "levene" "bp" "lambda.hat"
    > save.it$lambda.hat
    [1] 0.33
    > save.it$levene
    Levene's Test for Homogeneity of Variance (center = median)
           Df F value    Pr(>F)
    group   1  26.852 3.995e-07 ***
          306
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

[Diagnostic plots produced by examine.mod.simple(): Response vs. predictor; Residuals vs. predictor; dot plot and box plot of the predictor variable; dot plot and box plot of the response variable; Residuals vs. estimated mean response; $e_i^*$ vs. estimated mean response; Residuals vs. observation number; Histogram of semistud. residuals; Normal Q-Q plot of semistud. residuals; Box-Cox transformation plot (log-likelihood with 95% interval). Observations 110, 116, 131, 278, 279, and 294 are labeled.]

c) (6 points) Suggest one change to the model that would be appropriate to examine given the results from b).

There are a few different ways that one could proceed. For example, one could try a $Y^{1/3}$ transformation as suggested by the Box-Cox method. Alternatively, one could try to add a $\text{carat}^2$ term to the model.

3) (33 total points) Complete the problems below.

a) (7 points) How does one decide between using a confidence interval or a prediction interval for the response? Explain.

It depends upon what you are interested in. If you would like to know the average response value E(Y), choose the confidence interval. If you would like to know the response value Y itself, choose a prediction interval.

b) (7 points) The population model is $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$ where $\varepsilon_i \sim$ independent $N(0, \sigma^2)$. Using the plot below, describe what $\sigma^2$ measures.

[Plot: Population Linear Regression Model, showing the line $E(Y) = \beta_0 + \beta_1 X$, an observed value $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$, and the deviation $\varepsilon_i$ between the observed value and the line]

$\sigma^2$ measures the variability of the points around the $E(Y) = \beta_0 + \beta_1 X$ line. In other words, it measures the variability of the $\varepsilon_i$'s as shown in the plot.

c) (7 points) Least squares estimators have the following property: $E(b_0) = \beta_0$ and $E(b_1) = \beta_1$. Describe what this property means and why it is desirable.

The least squares estimators $b_0$ and $b_1$ are unbiased estimators of $\beta_0$ and $\beta_1$, respectively.
This is desirable because it shows that if we were to repeat the sampling process many, many times, we would expect the average of the estimators to be approximately the same as the corresponding parameters.

d) (12 points) Describe what SSTO, SSE, and SSR measure without using any equations or plots. Why would one want SSE to be very small relative to SSTO?

SSTO measures the total possible error that one could have when predicting Y with the sample mean. SSE measures the error left over after performing a regression. SSR measures the amount of variability in Y accounted for by the regression model. We want SSE to be as small as possible because this shows the regression model is accounting for most of the variability in Y, which then means $R^2$ will be close to 1.
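
Although part d) asks for a description without equations, the three quantities can be computed directly to see how they fit together. The following is a minimal sketch under stated assumptions: the data are simulated and the names `x`, `y`, `fit`, `ssto`, `sse`, and `ssr` are hypothetical, not taken from the exam.

```r
# Hypothetical illustration with simulated data (not from the exam)
set.seed(2012)
x <- runif(n = 50, min = 0, max = 100)
y <- 5 + 0.5*x + rnorm(n = 50, mean = 0, sd = 10)
fit <- lm(formula = y ~ x)

ssto <- sum((y - mean(y))^2)           # error when predicting Y with the sample mean
sse  <- sum(residuals(fit)^2)          # error left over after performing the regression
ssr  <- sum((fitted(fit) - mean(y))^2) # variability in Y accounted for by the regression

# The two pieces add up to the total, and R^2 is the share of SSTO accounted for
all.equal(ssto, ssr + sse)
all.equal(summary(fit)$r.squared, 1 - sse/ssto)
```

Because SSTO is fixed by the observed Y values, a smaller SSE necessarily means a larger SSR and therefore an $R^2$ closer to 1.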