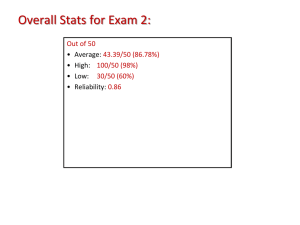

Introduction by Prof. Wang

1. This paper is based on the program developed by van der Linden (2003) for test assembly. Automated test assembly extends to topics that also arise in CAT, such as exposure rate control and content balancing.
2. The other issue is the technology of automated item generation. Test developers sometimes want to expand their item pool with items generated automatically by a computer system, and they want the difficulty of these new items to be known as soon as the items are produced. This requires a model of the cognitive processes involved in solving the test items. Assuming that the item features that influence difficulty (called radicals) and those that have only negligible effects (called incidentals) can be well defined, the effect of the psychometric model can be investigated.
3. The Linear Logistic Test Model (LLTM) is frequently used as the methodological foundation of item generation rules. Susan Embretson discussed item generation in work published from 1999 to 2009. She presented the psychometric model and applied it to the automatic generation of items for spatial ability. However, Embretson claimed that efforts at automated item generation have generally failed: it is hard to predict item difficulty with a sufficiently large effect size (0.7).
4. In this study, generating items on the fly from a pool of calibrated item families means that the family parameters are assumed to have been estimated. Item parameters are then random effects within each family rather than fixed effects, because the developer generates items that belong to specific families according to their radicals, and the family parameters were estimated beforehand. If the within-family variation is zero, the parameters of a new item equal the parameters of the family it belongs to. If within-family variation exists, item effects are treated as random effects drawn from the family population. The extent of the within-family variability was investigated in this study.
The results showed that larger within-family variability leads to smaller family information.
5. Why does using item information overestimate the information when only family parameters have been calibrated? Because family information is the expected information about theta in the response to a random item from its family. In the figure, the first graph shows the information calculated from known item parameters. The middle graph shows the information for item parameters with a random effect; its curves represent the possible information curves under the uncertain item parameters. The right graph shows the family information. The expected value of the information under uncertain item parameters is lower than the information under known item parameters.

Figure. Illustration of the overestimation that results from using item information instead of family information.

6. Moreover, reliability decreases when family information is used.
7. It is not necessary to generate items online. In low-stakes tests, however, item generation can still be applied: since no one cares much about the test result, the precision of the item parameters is no longer important.

Discussion after Wayne’s presentation

1. This model is similar to the testlet model: $\operatorname{logit}(P) = \theta_n - (\delta_j + \gamma_{jt})$. Let $\xi_{jt} = \delta_j + \gamma_{jt}$ with $\gamma_{jt} \sim N(0, \sigma_t^2)$, so that $\xi_{jt} \sim N(\delta_j, \sigma_t^2)$. The family model (second-level model) is $\xi_{jf} \sim N(\mu_f, \sigma_f^2)$.
2. Jacob: There might be correlation between the radical features. Prof. Wang’s answer: Yes, so we can add an interaction term for r in function 4.
3. Why is the generation technology useful for open-ended items, while the generated parameters for multiple-choice items are always imprecise? Because the effects of distracter features on item difficulty are generally difficult to predict, yet distracters affect difficulty very much in multiple-choice items. For example, consider the question “What is the capital of Australia?”
One set of distracters is “(A) Tokyo (B) Nanjing (C) Beijing (D) Canberra” and the other is “(A) Sydney (B) Melbourne (C) Copenhagen (D) Canberra”. The item is easy to answer correctly with the first set but hard with the second, so the item difficulty differs between the sets of distracters.
4. However, the model used in this paper is the 3-parameter normal ogive (3PNO) model. Since multiple-choice items still need more research, is using the 3PNO a little unreasonable?
5. Chen-Wei: In Figure 3, the PIP curve is always higher than the CF curve. Why do we still use family information (CF) for item generation rather than item information (PIP)? Answer: Because they apply to different cases (situations in practice). Even if the two curves are plotted in one figure, comparing them is meaningless. PIP is used when item parameters are known and we only assemble the items into a test. CF is used when we only have family parameters and generate items from the families.
6. The information for PIP increases as the within-family variability (W-B ratio) increases, because more informative items are available for selection when the variance between items is large. For CF, in contrast, the information decreases as the W-B ratio rises: the informative family is selected, but the noise from individual items (the random effect) lowers the information when item parameters vary within the family.
7. Differential item functioning for family parameters can be researched in the future. (Call it differential family functioning?)
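
The overestimation described in item 5 of the introduction can be checked numerically. The following is a minimal sketch, not the procedure used in the paper: it assumes a 3PNO item whose difficulty b alone varies within the family as b ~ N(mu_b, sd_b^2), while a and c stay fixed, and it approximates the expected information with a normalized weighted sum over a grid of b values. The function names, parameter values, and grid size are hypothetical choices made for illustration.

```python
import math


def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def item_information(theta, a, b, c):
    """Fisher information about theta for one 3PNO item with known (a, b, c)."""
    z = a * (theta - b)
    # erfc keeps precision in the tails: 1 - Phi(z) = 0.5 * erfc(z / sqrt(2)).
    q = (1.0 - c) * 0.5 * math.erfc(z / math.sqrt(2.0))  # P(incorrect)
    p = 1.0 - q                                          # P(correct)
    dp = (1.0 - c) * a * normal_pdf(z)                   # dP / dtheta
    denom = p * q
    return dp * dp / denom if denom > 0.0 else 0.0


def family_information(theta, a, mu_b, sd_b, c, n_points=2001):
    """Expected item information when b ~ N(mu_b, sd_b^2) within the family.

    Assumption for this sketch: only b is random; a and c are fixed.
    The expectation is a normalized weighted sum over an evenly spaced
    grid covering mu_b +/- 6 * sd_b.
    """
    if sd_b == 0.0:
        return item_information(theta, a, mu_b, c)
    lo = mu_b - 6.0 * sd_b
    step = 12.0 * sd_b / (n_points - 1)
    total = 0.0
    weight_sum = 0.0
    for k in range(n_points):
        b = lo + k * step
        w = normal_pdf((b - mu_b) / sd_b)
        total += w * item_information(theta, a, b, c)
        weight_sum += w
    return total / weight_sum


if __name__ == "__main__":
    theta, a, mu_b, c = 0.0, 1.0, 0.0, 0.0
    print("known item parameters:", item_information(theta, a, mu_b, c))
    for sd_b in (0.25, 0.5, 1.0):
        print("within-family sd", sd_b, "->",
              family_information(theta, a, mu_b, sd_b, c))
```

With theta at the family mean difficulty and c = 0, the information for known parameters is at its maximum, so any spread in b can only lower the expected value, and a larger spread lowers it further. This mirrors the note that large within-family variability leads to smaller family information. At ability levels far from the family mean the ordering need not hold, so the sketch illustrates the effect at the point where the family is most informative and is not a general proof.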