SRS

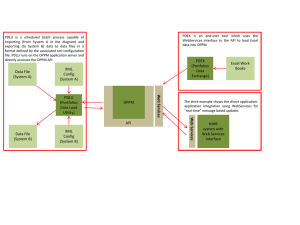

SYSTEM REQUIREMENTS SPECIFICATION

Abstract: This project addresses duplicate detection in complex hierarchical structures such as XML data. We present a novel method for XML duplicate detection, called XMLDup. XMLDup uses a Bayesian network to determine the probability of two XML elements being duplicates, considering not only the information within the elements but also the way that information is structured. In addition, to improve the efficiency of the network evaluation, a novel pruning strategy, capable of significant gains over the unoptimized version of the algorithm, is presented. Through experiments, we show that our algorithm is able to achieve high precision and recall scores in several data sets. XMLDup is also able to outperform another state-of-the-art duplicate detection solution, both in terms of efficiency and of effectiveness.

1.0 Aim/Problem definition of the project:
The main aim of the project is to detect duplicates in structured data. The proposed system focuses on a specific type of error, namely fuzzy duplicates, or duplicates for short. Duplicates are multiple representations of the same real-world object (e.g., a person) that differ from each other because, for example, one representation stores an outdated address.

Existing System:
Most commonly, data is stored in a relational database. In this case, the detection strategy typically consists of comparing pairs of tuples (each tuple representing an object) by computing a similarity score based on their attribute values. Two tuples are then classified as duplicates if their similarity is above a predefined threshold. However, this narrow view often neglects other available related information, for instance, the fact that data stored in a relational table relates to data in other tables through foreign keys.

1.02 Proposed System
The proposed system finds duplicates in structured (XML) data.
Through experiments, we show that our algorithm is able to achieve high precision and recall scores in several data sets.

1.1 Description of the project in short:
The main aim of the project is to detect duplicates in structured data. In this project, we first present XMLDup, a probabilistic duplicate detection algorithm for hierarchical data. Our approach to XML duplicate detection is centered on one basic assumption: whether two XML nodes are duplicates depends only on whether their values are duplicates and whether their children nodes are duplicates. Thus, we say that two XML trees are duplicates if their root nodes are duplicates.

The proposed system works with the following conditional probabilities:
a) The probability of the values of the nodes being duplicates, given that each individual pair of values contains duplicates.
b) The probability of two nodes being duplicates, given that their values and their children are duplicates.
c) The probability of the children nodes being duplicates, given that each individual pair of children are duplicates.
d) The probability of a set of nodes of the same type being duplicates, given that each pair of individual nodes in the set are duplicates.

2.0 Process Summary
a. Take two XML files in which a few records are duplicates.
b. Parse the XML files using an XML parser API.
c. Compare the nodes of one file with the nodes of the other.
d. If the similarity of two nodes is greater than the threshold value, classify those two nodes as duplicates.
e. Compare the proposed system with XMLDup and check whether our system gives better recall.

2.1 Algorithms

3.0 Deliverables
Software: Eclipse, JDK 1.6, MySQL
Application: Desktop application

3.01 Usefulness/Advantages
1. Saves the user's time in finding duplicates in structured data.
2. Automatically extracts the parts from the structured data.
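The conditional probabilities a) to d) in Section 1.1 and the threshold test in Section 2.0 can be combined roughly as in the following Java sketch. It is a hypothetical illustration under stated assumptions, not the XMLDup implementation. The class and method names are invented. Value similarity is assumed to be a normalized Levenshtein similarity. Values and children are assumed to combine by a product, so all of them must match. A set of same-type nodes is assumed to combine by a noisy-OR, so the set matches if at least one pair matches. The Bayesian network construction and the pruning strategy mentioned in the abstract are not modeled. The code avoids post-JDK 1.6 syntax so it matches the deliverables.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical sketch of XMLDup-style probability combination (assumed rules,
 * not the published algorithm): product for values and children, noisy-OR for
 * sets of same-tag nodes, normalized Levenshtein similarity for values.
 */
final class XmlDupSketch {

    /** Minimal hypothetical tree node: a tag, its text values and its child nodes. */
    static final class Node {
        final String tag;
        final List<String> values = new ArrayList<String>();
        final List<Node> children = new ArrayList<Node>();
        Node(String tag) { this.tag = tag; }
        Node value(String v) { values.add(v); return this; }
        Node child(Node c) { children.add(c); return this; }
    }

    /** Normalized Levenshtein similarity in [0, 1]; 1.0 means identical strings. */
    static double similarity(String a, String b) {
        int[][] d = new int[a.length() + 1][b.length() + 1];
        for (int i = 0; i <= a.length(); i++) d[i][0] = i;
        for (int j = 0; j <= b.length(); j++) d[0][j] = j;
        for (int i = 1; i <= a.length(); i++) {
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                d[i][j] = Math.min(Math.min(d[i - 1][j] + 1, d[i][j - 1] + 1), d[i - 1][j - 1] + cost);
            }
        }
        int max = Math.max(a.length(), b.length());
        return max == 0 ? 1.0 : 1.0 - (double) d[a.length()][b.length()] / max;
    }

    /** (a) Values: product of similarities of values paired by position; unpaired extras are ignored. */
    static double valuesProbability(Node x, Node y) {
        int n = Math.min(x.values.size(), y.values.size());
        double p = 1.0;
        for (int i = 0; i < n; i++) p *= similarity(x.values.get(i), y.values.get(i));
        return p;
    }

    /** (d) Set of same-tag nodes: noisy-OR, i.e. probability that at least one pair is a duplicate. */
    static double setProbability(List<Node> xs, List<Node> ys) {
        double noneMatch = 1.0;
        for (Node cx : xs)
            for (Node cy : ys)
                noneMatch *= 1.0 - nodeProbability(cx, cy);
        return 1.0 - noneMatch;
    }

    /** (c) Children: product over the same-tag sets present in both nodes; one-sided tags are skipped. */
    static double childrenProbability(Node x, Node y) {
        Map<String, List<Node>> ys = groupByTag(y.children);
        double p = 1.0;
        for (Map.Entry<String, List<Node>> e : groupByTag(x.children).entrySet()) {
            List<Node> same = ys.get(e.getKey());
            if (same != null) p *= setProbability(e.getValue(), same);
        }
        return p;
    }

    static Map<String, List<Node>> groupByTag(List<Node> nodes) {
        Map<String, List<Node>> groups = new LinkedHashMap<String, List<Node>>();
        for (Node n : nodes) {
            List<Node> g = groups.get(n.tag);
            if (g == null) { g = new ArrayList<Node>(); groups.put(n.tag, g); }
            g.add(n);
        }
        return groups;
    }

    /** (b) Two nodes: 0 if tags differ, otherwise values probability times children probability. */
    static double nodeProbability(Node x, Node y) {
        if (!x.tag.equals(y.tag)) return 0.0;
        return valuesProbability(x, y) * childrenProbability(x, y);
    }

    /** Section 2.0 step d: duplicates if the probability is greater than the threshold. */
    static boolean isDuplicate(Node x, Node y, double threshold) {
        return nodeProbability(x, y) > threshold;
    }

    public static void main(String[] args) {
        Node a = new Node("person").value("John Smith").child(new Node("address").value("12 Oak Street"));
        Node b = new Node("person").value("Jon Smith").child(new Node("address").value("12 Oak St."));
        System.out.println("P(duplicate) = " + nodeProbability(a, b)
                + ", duplicate at threshold 0.5: " + isDuplicate(a, b, 0.5));
    }
}
```

With the sample records in `main`, the names score 0.9 and the addresses about 0.69 (9/13), so the combined probability is about 0.62 and the pair counts as a duplicate at a threshold of 0.5. The threshold of 0.5 is an arbitrary illustrative value, not one given in this specification.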
3.1 Operating Environment
Software Requirement
Operating System : Windows
Technology : Java (JDK)
Front End : Swing
Database : MySQL
Database Connectivity : JDBC
API : XML Parser API

Hardware Requirement
Processor : At least a Pentium processor
RAM : 64 MB
Hard Disk : 2 GB

3.2 Design and Implementation Constraints
The input must be two XML files that contain some duplicate records, so that there are duplicates to find. The main goal of the proposed system is only to find duplicates in XML. Our contribution ignores the attributes of inner nodes.

Contribution work: The original XMLDup algorithm considers all attributes of all nodes. In our system, instead of comparing all attributes, we consider only the attributes of the root node and leave the others out. Because fewer attributes are compared, this should give better performance than the previous approach.

4.0 Modules Information
Module 1: A basic GUI that takes XML files as input.
Module 2: Parses the XML files using the XML parser API and extracts the individual nodes.
Module 3: Compares the nodes with each other and checks whether their similarity is greater than the threshold value. If it is, those nodes are taken as duplicates.
Module 4: Compares the proposed system with the XMLDup algorithm and checks whether our system gives better recall than the previous system.

Project Plan
Module      Code Delivery Date    Code Delivered (%)
Module 1                          25%
Module 2                          50%
Module 3                          75%
Module 4                          100%

Note from Author: This project is useful for finding duplicates in hierarchical data. Displaying the XML data in hierarchical form in the GUI is a very challenging part of this project.
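Modules 2 and 3, together with the contribution described in Section 3.2, could be prototyped with the JDK's built-in DOM parser (`javax.xml.parsers.DocumentBuilderFactory`). The sketch below rests on several assumptions. `RecordMatcherSketch` and its methods are hypothetical names. The records are taken to be the child elements of each document's root. "Consider only the root attribute" is read as comparing only the attributes of each record element and ignoring nested nodes. The similarity is the share of attribute names whose values are equal, ignoring case, which stands in for whatever measure the project finally uses.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NamedNodeMap;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

/**
 * Hypothetical sketch of Modules 2 and 3: parse two XML inputs and report record
 * pairs whose attribute similarity exceeds a threshold. Only each record
 * element's own attributes are compared; inner nodes are ignored (assumed
 * reading of the contribution in Section 3.2).
 */
final class RecordMatcherSketch {

    /** Module 2: parse XML text with the JDK DOM parser; records are the root's child elements. */
    static List<Element> parseRecords(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            List<Element> records = new ArrayList<Element>();
            NodeList nodes = doc.getDocumentElement().getChildNodes();
            for (int i = 0; i < nodes.getLength(); i++) {
                if (nodes.item(i).getNodeType() == Node.ELEMENT_NODE) {
                    records.add((Element) nodes.item(i));
                }
            }
            return records;
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse XML input", e);
        }
    }

    /** Share of attribute names (union of both records) whose trimmed values match ignoring case. */
    static double attributeSimilarity(Element a, Element b) {
        if (!a.getTagName().equals(b.getTagName())) return 0.0;
        Set<String> names = new LinkedHashSet<String>();
        addAttributeNames(a, names);
        addAttributeNames(b, names);
        if (names.isEmpty()) return 0.0; // nothing to compare, so no evidence of duplication
        int matches = 0;
        for (String name : names) {
            if (a.hasAttribute(name) && b.hasAttribute(name)
                    && a.getAttribute(name).trim().equalsIgnoreCase(b.getAttribute(name).trim())) {
                matches++;
            }
        }
        return (double) matches / names.size();
    }

    private static void addAttributeNames(Element e, Set<String> names) {
        NamedNodeMap attrs = e.getAttributes();
        for (int i = 0; i < attrs.getLength(); i++) {
            names.add(attrs.item(i).getNodeName());
        }
    }

    /** Module 3: return index pairs {i, j} whose similarity is greater than the threshold. */
    static List<int[]> findDuplicates(List<Element> left, List<Element> right, double threshold) {
        List<int[]> pairs = new ArrayList<int[]>();
        for (int i = 0; i < left.size(); i++) {
            for (int j = 0; j < right.size(); j++) {
                if (attributeSimilarity(left.get(i), right.get(j)) > threshold) {
                    pairs.add(new int[] {i, j});
                }
            }
        }
        return pairs;
    }

    public static void main(String[] args) {
        String file1 = "<people><person name=\"John Smith\" city=\"Lisbon\"><phone>123</phone></person>"
                + "<person name=\"Mary Jones\" city=\"Porto\"/></people>";
        String file2 = "<people><person name=\"john smith\" city=\"Lisbon\"><phone>999</phone></person>"
                + "<person name=\"Ann Lee\" city=\"Braga\"/></people>";
        for (int[] p : findDuplicates(parseRecords(file1), parseRecords(file2), 0.5)) {
            System.out.println("Record " + p[0] + " of file 1 duplicates record " + p[1] + " of file 2");
        }
    }
}
```

On the sample strings in `main`, only record 0 of each file is reported. The two records differ in their `phone` children, but those inner nodes are never compared. The case-insensitive equality test could be swapped for a string-similarity measure without changing the rest of the structure.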