Lab_Activity_8

advertisement

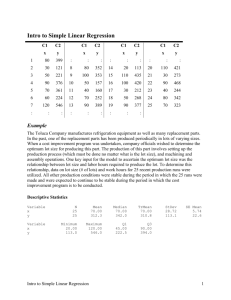

Lab Activity 8 (08/01/2013) Part 1: Use the “Marketshare” dataset (adapted from dataset C3 in the text). The data are 36 monthly observations on variables affecting sales of a product. The objective is to determine an efficient model for predicting and explaining market share sales. The predictors are average monthly price (X1), amount of advertising exposure based on gross Nielson rating (X2), whether a discount price was in effect (X3=1 if discount, 0 otherwise), whether a package promotion was in effect (X4=1 if promotion, 0 otherwise), and time in months (X5). (a) (10pts) Do a “best subsets” procedure to identify a possible model for predicting Y=Share. In Minitab, use StatRegressionBest Subsets. Enter all predictors as “Free predictors.” What are the best three predictors? What are the best four predictors? Response is Y_Share Vars 1 1 2 2 3 3 4 4 5 R-Sq 62.5 9.3 66.0 65.8 70.7 69.1 71.1 70.7 71.1 R-Sq(adj) 61.4 6.6 64.0 63.8 67.9 66.2 67.4 66.9 66.3 Mallows Cp 6.9 62.2 5.3 5.5 2.5 4.1 4.0 4.5 6.0 S 0.16420 0.25545 0.15865 0.15915 0.14979 0.15365 0.15101 0.15215 0.15348 x 3 _ x D x 1 i 4 x _ s _ 5 p c p _ r o r t i u o i c n m m e t o e X,,, X X X X X X X X X X X X X X X X X X X X X X X X x 2 _ n i e l s e n Best three predictors are: X1 Price, X3 Discount, X4 Promotion Best four predictors are: X1 Price, X3 Discount, X4 Promotion, X5 time I have highlighted in the chart above. (b) (10pts) For the best 3-predictor model, use StatRegressionRegression to estimate the regression equation (write it below). Also, fit the best 4-predictor model by using StatRegression Regression. Based on the information in the ANOVA table in each of these two models, calculate the AIC and BIC values respectively (10pts/each statistic). Y_Share = 3.19 - 0.353 x1_price + 0.399 x3_Discount + 0.118 x4_promo Analysis of Variance Source Regression Residual Error Total DF 3 32 35 SS 1.72830 0.71795 2.44626 MS 0.57610 0.02244 F 25.68 P 0.000 AIC=36*ln(0.71795/36)+2*4 =-140.94+8= -132.94 BIC=36*ln(0.71795/36)+4*ln(36)= -140.94+14.33= -126.61 Y_Share = 3.05 - 0.279 x1_price + 0.405 x3_Discount + 0.119 x4_promo - 0.00206 x5_time Analysis of Variance Source Regression Residual Error Total DF 4 31 35 SS 1.73932 0.70693 2.44626 MS 0.43483 0.02280 F 19.07 P 0.000 AIC=36*ln(0.70693 /36)+2*5=-141.49+10= -131.49 BIC=36*ln(0.70693 /36)+ 5*ln(36)= -141.49+17.92= -123.57 (3pts)Based on the AIC values of the two models you considered in part b, which model is considered better? It is better when three variables are in the model because the AIC of it is lower. (3pts)Based on the BIC values of the two models you considered in part b, which model is considered better? The four variables model is better because the BIC of it is lower. (c) (10pts) Still consider the five predictor variables, use stepwise procedure to select the best model by using StatRegressionStepwise. In the “Methods” option, consider stepwise procedure and set the alpha-to-enter = alpha-to-remove = 0.10. Stepwise Regression: Y_Share versus x1_price, x2_nielsen, ... Alpha-to-Enter: 0.1 Alpha-to-Remove: 0.1 Response is Y_Share on 5 predictors, with N = 36 Step Constant 1 2.420 2 2.374 3 3.185 x3_Discount T-Value P-Value 0.418 7.53 0.000 0.403 7.43 0.000 0.399 7.79 0.000 0.100 1.85 0.073 0.118 2.29 0.029 x4_promo T-Value P-Value x1_price T-Value P-Value -0.35 -2.24 0.032 S R-Sq R-Sq(adj) Mallows Cp 0.164 62.53 61.42 6.9 0.159 66.04 63.99 5.3 0.150 70.65 67.90 2.5 There are three variables finally in the model. They are X1price, X3discount and X4promotion. (d) (10pts) Continue with part (c) and still use stepwise procedure. But, here set alpha-to-enter=0.05 and alpha-to-remove=0.10. Did you get the same result as in part (c)? Stepwise Regression: Y_Share versus x1_price, x2_nielsen, ... Alpha-to-Enter: 0.05 Alpha-to-Remove: 0.1 Response is Y_Share on 5 predictors, with N = 36 Step Constant 1 2.420 x3_Discount T-Value P-Value 0.418 7.53 0.000 S R-Sq R-Sq(adj) Mallows Cp 0.164 62.53 61.42 6.9 Not the same result. Only one variable in the model finally is same in this part. (e) (10pts) Continue with part (c), now, we consider the backward elimination approach (start with the full model and get rid of the “weakest” predictor each time). Here, in the “Methods” option, click “Backward elimination” and set alpha-to-remove=0.10. Did you get the same result as in part (c)? Stepwise Regression: Y_Share versus x1_price, x2_nielsen, ... Backward elimination. Alpha-to-Remove: 0.1 Response is Y_Share on 5 predictors, with N = 36 Step Constant 1 3.067 2 3.047 3 3.185 x1_price T-Value P-Value -0.28 -1.42 0.165 -0.28 -1.46 0.155 -0.35 -2.24 0.032 x2_nielsen T-Value P-Value -0.00002 -0.10 0.917 x3_Discount T-Value P-Value 0.405 7.58 0.000 0.405 7.73 0.000 0.399 7.79 0.000 x4_promo T-Value P-Value 0.120 2.20 0.036 0.119 2.29 0.029 0.118 2.29 0.029 -0.0022 -0.68 0.500 -0.0021 -0.70 0.492 0.153 71.11 66.30 6.0 0.151 71.10 67.37 4.0 x5_time T-Value P-Value S R-Sq R-Sq(adj) Mallows Cp 0.150 70.65 67.90 2.5 Yes. I got three variables left in this model and they are the same as what they were in part C Part 2. (20pts) Consider the circled observations in the following scatter plots, are they outliers? If yes, are they outlying respect to their Y value, or X value, or both? (A) (B) (C) A) B) C) D) (D) Yes. Respect to X. Yes. Respect to X and Y. Yes. Respect to Y. No. Part 3. Use the dataset “Prostate” available online, which consists of n = 97 prostate cancer patients. The response of interest is prostate specific antigen (y = PSA_level), a blood chemistry measurement. Among the predictors, we’ll consider the cancer volume (x1= Capsular) and a measure of the invasiveness of the cancer (x2 = CancerVol) for this activity. (a). 10pts--Suppose the researcher wanted to use X1 to explain the response but she was not sure whether X2 would be useful in explaining the variation in the data set given the existence of X1. Use an added-variable plot to help her to make a decision. Notice that in order to obtain an added-variable plot, you need to: Step1: regress Y on X1 and save the residuals, called RESI1 (click “Storage” option and check residuals while fitting the regression model via StatRegressionRegression) Step2: regress X2 on X1 and save the residuals, called RESI2. Step3: draw a scatter plot of RESI1 versus RESI2. Scatterplot of RESI1 vs RESI2 200 150 RESI1 100 50 0 -50 -100 -10 0 10 RESI2 20 30 (b). (5pts) Do you observe any pattern above, what do you suggest on this add-variable plot? It is an up sloping pattern that fits the dots in the graph. It shows that the predictor SESI2 might be a significant addition to this model. (c). (4pts) Fit both X1 and X2 in the model and look at the last part of your regression analysis under “Unusual Observations”. Locate the row for observation 96, what is the Residual, and standardized/student residual? Unusual Observations Obs 47 55 79 91 94 95 96 97 CancerVol 15.3 23.3 14.2 25.8 45.6 18.4 17.8 32.1 PSA_level 13.07 14.88 31.82 56.26 107.77 170.72 239.85 265.07 Fit 73.41 57.66 67.87 63.58 132.90 74.35 56.00 123.49 SE Fit 11.88 11.68 10.98 13.00 17.71 8.74 5.59 13.95 Residual -60.35 -42.78 -36.05 -7.32 -25.13 96.36 183.84 141.58 St Resid -2.07RX -1.46 X -1.22 X -0.26 X -0.97 X 3.19R 5.93R 5.02RX R denotes an observation with a large standardized residual. X denotes an observation whose X value gives it large leverage. The residual is 183.84. And the standardized residual is 5.93. (d) (5pts) There is another way to find standardized/student residual, when in the steps of Regression>Regression, Choose Storage, you can also choose Residual and Standardized Residual. Minitab will generate two new columns with residuals and standardized residuals. Can you locate the row for observation 96, do you get the same answer as in (c)? Do you notice any other large value of standardized residuals (greater than 2.5 or smaller than -2.5), which observation it belongs to? I got the same answer. The two others are 95 and 97.