Best subsets regression


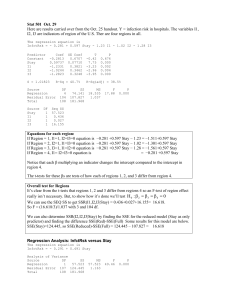

Model selection: Best subsets regression

Statement of problem
• A common problem is that there is a large set of candidate predictor variables.
• The goal is to choose a small subset from the larger set so that the resulting regression model is simple, yet has good predictive ability.

Example: Cement data
• Response y: heat evolved, in calories per gram of cement, during hardening
• Predictor x1: % of tricalcium aluminate
• Predictor x2: % of tricalcium silicate
• Predictor x3: % of tetracalcium alumino ferrite
• Predictor x4: % of dicalcium silicate

[Figure: scatterplot matrix of y, x1, x2, x3, and x4 for the cement data]

Two basic methods of selecting predictors
• Stepwise regression: Enter and remove predictors, in a stepwise manner, until there is no justifiable reason to enter or remove any more.
• Best subsets regression: Select the subset of predictors that does the best at meeting some well-defined objective criterion.

Why best subsets regression?

| # of predictors (p-1) | # of regression models | Models |
|---|---|---|
| 1 | 2 | ( ) (x1) |
| 2 | 4 | ( ) (x1) (x2) (x1, x2) |
| 3 | 8 | ( ) (x1) (x2) (x3) (x1, x2) (x1, x3) (x2, x3) (x1, x2, x3) |
| 4 | 16 | 1 with no predictors, 4 with one, 6 with two, 4 with three, 1 with all four |

Here ( ) denotes the intercept-only model.

• If there are p-1 possible predictors, then there are $2^{p-1}$ possible regression models containing the predictors.
• For example, 10 predictors yield $2^{10} = 1024$ possible regression models.
• A best subsets algorithm determines the best subsets of each size, so that the researcher can make the final choice of model.

What is used to judge "best"?
• R-squared
• Adjusted R-squared
• MSE (or S = square root of MSE)
• Mallows' Cp

R-squared

$$R^2 = \frac{SSR}{SSTO} = 1 - \frac{SSE}{SSTO}$$

R-squared never decreases when a predictor is added, so it cannot pick a model on its own. Instead, use the R-squared values to find the point where adding more predictors is not worthwhile because it leads to only a very small increase in R-squared.
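The exhaustive search described above can be sketched in a few lines of pure Python. This is a minimal sketch, assuming an ordinary least-squares fit with an intercept, data held in plain lists, and R-squared as the only criterion. The helper names (`solve`, `sse`, `best_subsets_by_r2`) are hypothetical, and the hand-written normal-equations solver is chosen for self-containment, not numerical robustness.

```python
from itertools import combinations


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def sse(y, columns):
    """Error sum of squares of the least-squares fit of y on an intercept plus `columns`."""
    rows = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    k = len(rows[0])
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(k)] for a in range(k)]
    xty = [sum(r[a] * yi for r, yi in zip(rows, y)) for a in range(k)]
    beta = solve(xtx, xty)  # normal equations X'X b = X'y
    return sum((yi - sum(bj * xj for bj, xj in zip(beta, r))) ** 2 for r, yi in zip(rows, y))


def best_subsets_by_r2(y, predictors, top=2):
    """Keep the `top` highest-R^2 subsets of each size.

    Fits all 2**k - 1 non-empty subsets of the k candidates; together with the
    intercept-only model this is the full set of 2**k models.
    """
    ybar = sum(y) / len(y)
    ssto = sum((yi - ybar) ** 2 for yi in y)
    names = list(predictors)
    best = {}
    for size in range(1, len(names) + 1):
        scored = []
        for subset in combinations(names, size):
            r2 = 1.0 - sse(y, [predictors[nm] for nm in subset]) / ssto  # R^2 = 1 - SSE/SSTO
            scored.append((r2, subset))
        scored.sort(reverse=True)
        best[size] = scored[:top]
    return best
```

With `top=2`, the result mirrors the two-best-per-size layout of a best subsets report. For real data, a library least-squares routine would be a safer choice than the hand-written solver.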
Adjusted R-squared or MSE

$$R_a^2 = 1 - \left(\frac{n-1}{n-p}\right)\frac{SSE}{SSTO} = 1 - \left(\frac{n-1}{SSTO}\right)MSE$$

Adjusted R-squared increases only if MSE decreases, so adjusted R-squared and MSE provide equivalent information. Find a few subsets for which MSE is smallest (or adjusted R-squared is largest), or so close to the smallest (largest) that adding more predictors is not worthwhile.

Mallows' Cp criterion

The goal is to minimize the total standardized mean square error of prediction:

$$\Gamma_p = \frac{1}{\sigma^2}\sum_{i=1}^{n} E\left[\left(\hat{Y}_{ip} - E(Y_i)\right)^2\right]$$

where $\hat{Y}_{ip}$ is the fitted value for observation i from the subset model with p parameters. This equals:

$$\Gamma_p = \frac{1}{\sigma^2}\left[\sum_{i=1}^{n}\left(E(\hat{Y}_{ip}) - E(Y_i)\right)^2 + \sum_{i=1}^{n}\operatorname{Var}(\hat{Y}_{ip})\right]$$

which in English is: Γp = (some bias) + (some variance).

Mallows' Cp statistic

$$C_p = \frac{SSE_p}{MSE(X_1,\ldots,X_{P-1})} - (n - 2p)$$

estimates Γp, where:
• SSEp is the error sum of squares for the fitted (subset) regression model with p parameters.
• MSE(X1, …, XP-1) is the MSE of the model containing all P-1 candidate predictors. It is an unbiased estimator of σ².
• p is the number of parameters in the (subset) model.

Facts about Mallows' Cp
• Subset models with small Cp values have a small total standardized MSE of prediction.
• When the Cp value is …
  – near p, the bias is small (next to none),
  – much greater than p, the bias is substantial,
  – below p, it is due to sampling error; interpret as no bias.
• For the largest model with all possible predictors, Cp = p (always).

Using the Cp criterion
• So, identify subsets of predictors for which:
  – the Cp value is smallest, and
  – the Cp value is near p (if possible).
• In general, though, don't automatically choose the largest model just because it yields Cp = p.
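The four criteria above all follow from a few sums of squares. The sketch below assumes the caller already has SSEp, SSTO, n, p, and the MSE of the model with every candidate predictor. The names `selection_criteria` and `low_bias_candidates` are hypothetical, and the `tol` used to decide whether Cp is "near p" is an illustrative assumption, not an established rule.

```python
def selection_criteria(sse_p, p, n, ssto, mse_full):
    """Return R^2, adjusted R^2, MSE, S and Mallows' Cp for one subset model.

    sse_p    -- error sum of squares of the subset model
    p        -- number of parameters in the subset model (predictors + intercept)
    n        -- number of observations
    ssto     -- total sum of squares, sum((y_i - ybar)^2)
    mse_full -- MSE of the model containing all candidate predictors
    """
    mse_p = sse_p / (n - p)
    return {
        "R2": 1.0 - sse_p / ssto,
        "R2_adj": 1.0 - (n - 1) / ssto * mse_p,  # same as 1 - (n-1)/(n-p) * SSE/SSTO
        "MSE": mse_p,
        "S": mse_p ** 0.5,
        "Cp": sse_p / mse_full - (n - 2 * p),
    }


def low_bias_candidates(models, tol=1.0):
    """Hypothetical screening rule: keep models with Cp <= p + tol, smallest Cp first.

    `models` is a list of (label, p, cp) tuples. Cp below p passes the filter,
    matching the "interpret as no bias" reading of Cp < p.
    """
    keep = [m for m in models if m[2] <= m[1] + tol]
    return sorted(keep, key=lambda m: m[2])
```

For the full model, SSEp / MSE(full) equals n - p, so `selection_criteria` returns Cp = p exactly, which is the "always" fact listed above. The screening rule keeps the full model for the same reason, so it narrows the list rather than making the final choice.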
Best Subsets Regression: y versus x1, x2, x3, x4

Response is y

| Vars | R-Sq | R-Sq(adj) | C-p | S | Predictors |
|---|---|---|---|---|---|
| 1 | 67.5 | 64.5 | 138.7 | 8.9639 | x4 |
| 1 | 66.6 | 63.6 | 142.5 | 9.0771 | x2 |
| 2 | 97.9 | 97.4 | 2.7 | 2.4063 | x1, x2 |
| 2 | 97.2 | 96.7 | 5.5 | 2.7343 | x1, x4 |
| 3 | 98.2 | 97.6 | 3.0 | 2.3087 | x1, x2, x4 |
| 3 | 98.2 | 97.6 | 3.0 | 2.3121 | x1, x2, x3 |
| 4 | 98.2 | 97.4 | 5.0 | 2.4460 | x1, x2, x3, x4 |

Stepwise Regression: y versus x1, x2, x3, x4

Alpha-to-Enter: 0.15  Alpha-to-Remove: 0.15

Response is y on 4 predictors, with N = 13

| Step | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Constant | 117.57 | 103.10 | 71.65 | 52.58 |
| x4 | -0.738 | -0.614 | -0.237 | |
| T-Value | -4.77 | -12.62 | -1.37 | |
| P-Value | 0.001 | 0.000 | 0.205 | |
| x1 | | 1.44 | 1.45 | 1.47 |
| T-Value | | 10.40 | 12.41 | 12.10 |
| P-Value | | 0.000 | 0.000 | 0.000 |
| x2 | | | 0.416 | 0.662 |
| T-Value | | | 2.24 | 14.44 |
| P-Value | | | 0.052 | 0.000 |
| S | 8.96 | 2.73 | 2.31 | 2.41 |
| R-Sq | 67.45 | 97.25 | 98.23 | 97.87 |
| R-Sq(adj) | 64.50 | 96.70 | 97.64 | 97.44 |
| C-p | 138.7 | 5.5 | 3.0 | 2.7 |

Example: Modeling PIQ

[Figure: scatterplot matrix of PIQ, MRI, Height, and Weight]

Best Subsets Regression: PIQ versus MRI, Height, Weight

Response is PIQ

| Vars | R-Sq | R-Sq(adj) | C-p | S |
|---|---|---|---|---|
| 1 | 14.3 | 11.9 | 7.3 | 21.212 |
| 1 | 0.9 | 0.0 | 13.8 | 22.810 |
| 2 | 29.5 | 25.5 | 2.0 | 19.510 |
| 2 | 19.3 | 14.6 | 6.9 | 20.878 |
| 3 | 29.5 | 23.3 | 4.0 | 19.794 |

Stepwise Regression: PIQ versus MRI, Height, Weight

Alpha-to-Enter: 0.15  Alpha-to-Remove: 0.15

Response is PIQ on 3 predictors, with N = 38

| Step | 1 | 2 |
|---|---|---|
| Constant | 4.652 | 111.276 |
| MRI | 1.18 | 2.06 |
| T-Value | 2.45 | 3.77 |
| P-Value | 0.019 | 0.001 |
| Height | | -2.73 |
| T-Value | | -2.75 |
| P-Value | | 0.009 |
| S | 21.2 | 19.5 |
| R-Sq | 14.27 | 29.49 |
| R-Sq(adj) | 11.89 | 25.46 |
| C-p | 7.3 | 2.0 |

Example: Modeling BP

[Figure: scatterplot matrix of BP, Age, Weight, BSA, Duration, Pulse, and Stress]

Best Subsets Regression: BP versus Age, Weight, ...
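As a check on the Cp formula, the C-p column of the cement output can be reproduced from its S column alone. For a model with p parameters, SSEp = S²(n - p); the full-model MSE is the square of S for the four-predictor model; and n = 13, the N reported in the stepwise output. The sketch below uses only those printed values (`cp_from_s` is a hypothetical helper name), so agreement is only to the printed precision.

```python
# Rows of the cement best subsets output: (number of predictors, S, printed C-p)
CEMENT_ROWS = [
    (1, 8.9639, 138.7),
    (1, 9.0771, 142.5),
    (2, 2.4063, 2.7),
    (2, 2.7343, 5.5),
    (3, 2.3087, 3.0),
    (3, 2.3121, 3.0),
    (4, 2.4460, 5.0),
]
N = 13  # observations, from "N = 13" in the stepwise output


def cp_from_s(s_subset, n_vars, s_full, n):
    """Mallows' Cp from printed S values: SSE_p = S^2 (n - p) and MSE_full = S_full^2."""
    p = n_vars + 1  # parameters = predictors + intercept
    sse_p = s_subset ** 2 * (n - p)
    return sse_p / s_full ** 2 - (n - 2 * p)


s_full = CEMENT_ROWS[-1][1]  # the four-predictor model is the full model
for n_vars, s, printed in CEMENT_ROWS:
    print(n_vars, round(cp_from_s(s, n_vars, s_full, N), 1), printed)
```

The recomputed values match the printed C-p column to one decimal, including Cp = 5.0 = p for the four-predictor model.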
Response is BP

| Vars | R-Sq | R-Sq(adj) | C-p | S |
|---|---|---|---|---|
| 1 | 90.3 | 89.7 | 312.8 | 1.7405 |
| 1 | 75.0 | 73.6 | 829.1 | 2.7903 |
| 2 | 99.1 | 99.0 | 15.1 | 0.53269 |
| 2 | 92.0 | 91.0 | 256.6 | 1.6246 |
| 3 | 99.5 | 99.4 | 6.4 | 0.43705 |
| 3 | 99.2 | 99.1 | 14.1 | 0.52012 |
| 4 | 99.5 | 99.4 | 6.4 | 0.42591 |
| 4 | 99.5 | 99.4 | 7.1 | 0.43500 |
| 5 | 99.6 | 99.4 | 7.0 | 0.42142 |
| 5 | 99.5 | 99.4 | 7.7 | 0.43078 |
| 6 | 99.6 | 99.4 | 7.0 | 0.40723 |

Stepwise Regression: BP versus Age, Weight, BSA, Duration, Pulse, Stress

Alpha-to-Enter: 0.15  Alpha-to-Remove: 0.15

Response is BP on 6 predictors, with N = 20

| Step | 1 | 2 | 3 |
|---|---|---|---|
| Constant | 2.205 | -16.579 | -13.667 |
| Weight | 1.201 | 1.033 | 0.906 |
| T-Value | 12.92 | 33.15 | 18.49 |
| P-Value | 0.000 | 0.000 | 0.000 |
| Age | | 0.708 | 0.702 |
| T-Value | | 13.23 | 15.96 |
| P-Value | | 0.000 | 0.000 |
| BSA | | | 4.6 |
| T-Value | | | 3.04 |
| P-Value | | | 0.008 |
| S | 1.74 | 0.533 | 0.437 |
| R-Sq | 90.26 | 99.14 | 99.45 |
| R-Sq(adj) | 89.72 | 99.04 | 99.35 |
| C-p | 312.8 | 15.1 | 6.4 |

Best subsets regression
• Stat >> Regression >> Best subsets …
• Specify the response and all possible predictors.
• If desired, specify predictors that must be included in every model. (Researcher's knowledge!)
• Select OK. Results appear in the Session window.
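The menu steps above are Minitab's. For readers working outside Minitab, the sketch below is a rough pure-Python analogue of the "predictors that must be included in every model" option. It assumes an OLS fit with an intercept and, unlike the output shown earlier (which lists the best models of each size), ranks every candidate model by Cp in one list. All function names are hypothetical, and this is not Minitab's algorithm.

```python
from itertools import combinations


def _solve(a, b):
    """Gaussian elimination with partial pivoting for the square system a x = b."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def _sse(y, columns):
    """SSE of the least-squares fit of y on an intercept plus `columns`."""
    rows = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    k = len(rows[0])
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(k)] for a in range(k)]
    xty = [sum(r[a] * yi for r, yi in zip(rows, y)) for a in range(k)]
    beta = _solve(xtx, xty)
    return sum((yi - sum(bj * xj for bj, xj in zip(beta, r))) ** 2 for r, yi in zip(rows, y))


def best_subsets_forced(y, predictors, forced=()):
    """Rank, by Mallows' Cp, every model that contains all `forced` predictors.

    Returns (Cp, p, subset) tuples sorted by Cp. With k candidates and f forced
    predictors, 2**(k - f) models are fitted.
    """
    n = len(y)
    free = [nm for nm in predictors if nm not in forced]
    all_names = list(forced) + free
    big_p = len(all_names) + 1  # parameters in the model with every candidate
    mse_full = _sse(y, [predictors[nm] for nm in all_names]) / (n - big_p)
    ranked = []
    for size in range(len(free) + 1):
        for extra in combinations(free, size):
            subset = tuple(forced) + extra
            p = len(subset) + 1  # predictors + intercept
            sse_p = _sse(y, [predictors[nm] for nm in subset])
            ranked.append((sse_p / mse_full - (n - 2 * p), p, subset))
    ranked.sort()
    return ranked
```

Forcing predictors halves the search for each one forced, which is one practical reason to use the researcher's knowledge here. The ranked list still needs the judgment described under "Using the Cp criterion", because the full model always lands at Cp = p.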