6:1 Multiple Linear Regression.

Notes AGR 206

687320275

Revised: 2/6/2016

Chapter 6. Multiple Linear Regression

6:1 Multiple Linear Regression.

Multiple Linear Regression (MLR) is one of the most commonly used and useful statistical methods. MLR is

particularly helpful for observational studies where treatments are not imposed and therefore, the multiple

explanatory variables tend not to be orthogonal to each other.

The concept of orthogonality is used repeatedly in this subject and in multivariate statistics. Two variables

are said to be orthogonal to each other if they have no covariance. In other words, they exist in completely

separate subspaces within the observation or sample space, which in geometric terms means that they are

"perpendicular" to each other.

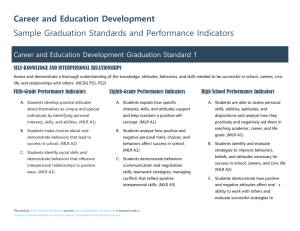

Figure 1. Multiple linear regression is one of the most general

statistical techniques. Most techniques, particularly ANOVA for any

type of experimental design and treatment structure, are special

cases of MLR.

In these lectures we will cover almost all basic aspects of MLR, and some more advanced topics. First, the

general concept and the different types of uses of MLR are presented. Although there are no "formulas" in that

section, it is probably one of the most important things to learn, because the application of different methods

within MLR, such as selection of variables and biased regression, is completely dependent on the goals of the

analysis. Second, as usual we define the model and the typical assumptions, which immediately leads into the

screening of data and adequacy of model. Collinearity is one of the most difficult problems in MLR, so a good

deal of detail is offered there, and two methods for dealing with collinearity, Ridge Regression and Principal

Components Regression, are introduced later on. Finally, we study the methods to decide how to reduce the

number of variables in the model, and how to validate the model for the specific purpose of the study.

6:2 Concept & Uses of MLR.

Through MLR one can establish a least-squares estimation of a relationship between one dependent and

many independent or explanatory variables. This relationship can involve both quantitative (continuous) and

qualitative (class) variables. We will focus on the analysis of data where most or all of the variables are

continuous.

Rawlings et al. (1998) list six different uses for a regression equation:

1. Description of the behavior of Y in a specific data set. The validity of the model is limited to

the data in the sample, and there is no need to refine the model or eliminate variables. In

the Spartina data set, did biomass tend to increase or decrease with increasing pH?

2. Prediction and estimation of future responses in the population represented by the

sample. This objective concerns only the estimation of expected responses, not

parameters. In this case, the model can be refined and the number of variables reduced to

reduce the cost of obtaining the sample of X's necessary to make the estimations and

predictions. How can we predict biomass in the marshes without having to clip?

3. Extrapolation outside the range of the data used to develop the model. This is similar to

point 2, with the additional complication that there will be predictions outside the original

multivariate range of X's. Emphasis should be on validation and periodic maintenance of

the model by obtaining new observations. Can we predict biomass in an increasing

number of estuaries?

4. Estimation of parameters. The precision of the estimates can be increased by eliminating

redundant and unrelated variables, but there is a risk of introducing too much bias in the

estimates by removing variables that are important. Assuming we know that the

relationships are linear and without interactions, how much does pH affect biomass?

5. Control of a system or process. This implies that a cause-and-effect relationship must be

established between the X's and Y. If no causality is demonstrated, there is no basis for

trying to control Y by changing X. If I change the pH, will biomass change in a predictable

amount?

6. Understanding of processes. This is related to point 4, where parameter estimates

indicate how much and in which direction any given X affects Y. However, the linear

model may be too restrictive to the real process, which may be non-linear. MLR can help

identify variables or groups of variables that are important for further modeling. Why is

there a predictably different expected biomass when pH, etc. change?

6:3 Model and Assumptions.

6:3.1 Linear, additive model to relate Y to p independent variables.

Note: here, p is number of variables, but some authors use p for number of parameters, which is one more

than variables due to the intercept.

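$$Y_i = \beta_0 + \beta_1 X_{i1} + \dots + \beta_p X_{ip} + \varepsilon_i$$

where the $\varepsilon_i$ are normal and independent random variables with common variance $\sigma^2$.

In matrix notation the model and solution are exactly the same as for SLR:

$$Y = X\beta + \varepsilon$$

$$b = (X'X)^{-1}X'Y$$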

6:3.1.1 All equations used for SLR (in matrix notation) apply without change.

The equations are easiest in matrix notation.

$$s^2\{b\} = MSE\,(X'X)^{-1}$$

$$\hat{Y}_h = X_h b$$

$$s^2\{\hat{Y}_h\} = MSE\; X_h (X'X)^{-1} X_h'$$

where $X_h$ is a row vector with its first element being 1 and the rest of the elements being the values of each X for which the expected Y is being estimated.

In general, for estimating $L'b$:

$$s^2\{L'b\} = MSE\; L'(X'X)^{-1}L$$

where $L'$ is a row vector of coefficients selected by the user to reflect the value or Ho of interest.
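The matrix formulas of this section can be tried out with a short Python/NumPy sketch. The data are simulated and purely hypothetical, and the particular `x_h` and `L` vectors are arbitrary choices made for illustration; the explicit inverse is used only to mirror the notation of these notes (in practice a least-squares solver is usually preferred numerically).

```python
import numpy as np

rng = np.random.default_rng(0)
n, p = 30, 2
# Hypothetical data, for illustration only.
X_vars = rng.normal(size=(n, p))
y = 1.0 + 2.0 * X_vars[:, 0] - 1.0 * X_vars[:, 1] + rng.normal(scale=0.5, size=n)

X = np.column_stack([np.ones(n), X_vars])    # first column of 1's for the intercept
XtX_inv = np.linalg.inv(X.T @ X)
b = XtX_inv @ (X.T @ y)                      # b = (X'X)^-1 X'Y

residuals = y - X @ b
mse = residuals @ residuals / (n - p - 1)    # SSE divided by the error df
var_b = mse * XtX_inv                        # s^2{b}
se_b = np.sqrt(np.diag(var_b))               # standard errors of the b's

x_h = np.array([1.0, 0.5, -0.5])             # 1, then the X values of interest
y_hat_h = x_h @ b                            # estimated expected Y at x_h
var_y_hat_h = mse * (x_h @ XtX_inv @ x_h)    # s^2{Y_hat_h}

L = np.array([0.0, 1.0, -1.0])               # contrast: beta_1 - beta_2
var_Lb = mse * (L @ XtX_inv @ L)             # s^2{L'b}

print("b =", np.round(b, 3))
print("se(b) =", np.round(se_b, 3))
print("Y_hat_h =", round(float(y_hat_h), 3), "s^2 =", round(float(var_y_hat_h), 4))
```

Note that `var_Lb` for this contrast equals the variance of b1 plus the variance of b2 minus twice their covariance, which is what the general formula reduces to.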

6:3.2 Response plane and error.

Just as in SLR errors are measured as the vertical deviations from each observation to the prediction line, in

MLR they are measured as the "vertical" (i.e. parallel to the axis of the response variable) distance from the

observation to the prediction plane (Figure 2). The residuals are calculated as in simple linear regression, where

$e_i = Y_i - \hat{E}\{Y_i\} = Y_i - \hat{Y}_i$.

Figure 2. Example of a regression plane for two independent variables.

6:4 Performing MLR in JMP: the basics.

Performing the procedure for MLR in JMP is extremely simple. By showing how simple it is to get almost all

the output you need to work an MLR situation, I place the emphasis on understanding and interpreting the

results. Take for example the Body Fat example from Neter et al., (1996). The response variable Y is body fat

content; X1 is triceps skinfold thickness, X2 is thigh circumference, and X3 is midarm circumference. The data

are in the file bodyfat.jmp. In this example, MLR is applied for prediction and estimation (#2 of the above list), to

predict body fat in people without having to use more time-consuming and expensive methods.

In order to practice and fully follow the example, it is recommended that you get and open the bodyfat.jmp

data and perform the operations as they are described in the text.

Select Analyze -> Fit Model and in the window enter Y in the Y box and all three X's in the Model Effects box. The model specification should look like the one in this picture. Personality should be Standard Least Squares. Choose the Minimal Report and click Run Model.

In the results window, click on the red triangle by Response Y and select Estimates -> Sequential Tests. Then select Correlation of Estimates.

Next, from the same drop-down menu at the red triangle, select Row Diagnostics -> Plot Residuals by Predicted. Then select Press, and later Durbin-Watson test. For this example, assume that the samples are in the temporal order in which they were obtained, so we can test for temporal independence of errors.

Now select Save Columns -> Studentized Residuals, Hats, Cook's D influence, and Mean Confidence interval.

Finally, right-click (Windows) or Ctrl-click (Mac) on the body of the Parameter Estimates table. In the drop-down menu that appears, sequentially select all the columns that were not already checked.

At this point almost all information needed to do a complete interpretation and evaluation of the MLR is displayed

in the results window or in the original data table.

1. $R^2$ and $R_a^2$. R-square is the coefficient of determination, and it represents the proportion of the variance in the response variable (body fat) that is explained by the three explanatory variables.

$$R^2 = 1 - \frac{SSE}{SSTotal}$$

This is a measure of how good the model is, but it has the problem that it always increases as more variables or parameters are added to the model. Unless we add a variable whose sample values are perfectly correlated with other variables already in the model, even the addition of superfluous variables will make $R^2$ increase. On the other hand, $R_a^2$ is "adjusted" for the loss of degrees of freedom in the error as more variables are added to the model. Thus, $R_a^2$ will decrease when we add variables that do not explain enough additional variance in Y to justify the loss of degrees of freedom of the error.

$$R_a^2 = 1 - \frac{MSE}{SSTotal/(n-1)}$$

2. The Analysis of Variance table shows the sums of squares, degrees of freedom and mean squares for the whole

model, residual or error, and total. The F ratio tests the Ho that the full model is no better than just a simple mean

to describe the data. A probability value lower than 0.05 (or your preferred critical level) leads to a rejection of the

hypothesis and opens the possibility of determining which parameters are significantly different from zero, or in

other words, what variables explain a significant part of the variance in Y.

3. The Parameter Estimates table is critical for MLR. The second column contains the values of the estimated

parameters or partial regression coefficients. Each coefficient represents the expected change in Y for each unit

of change in the corresponding X, with all other variables remaining constant. These values of the partial

regression coefficients depend on the units used for the variables in the model. Therefore, they are not directly

comparable.

4. The standard error for each estimate is given in this column. Recall that the estimated partial regression

coefficients are linear combinations of normal random variables. Thus, they are normal random variables

themselves. The printed standard errors are used to test whether the estimated parameters are significantly

different from zero, and to construct confidence intervals for their expected values.

5. The t-ratio is the estimated parameter divided by its standard error. It tests the Ho: parameter=0. Note that things get more interesting at this point: in the previous table the Ho: all parameters=0 was rejected, yet in this table not a single parameter is significantly different from zero. What is happening?!

6. The confidence intervals for the parameters are calculated by adding and subtracting from the estimated value

the product of the standard error and the t value for P=0.05 (two-tailed).

7. The standardized betas or estimated standardized partial regression coefficients are the coefficients obtained

from regressing standardized Y on the standardized X’s. Typically we use bi’ to represent them. Standardization

is obtained by subtracting the mean and dividing by the standard deviation. It is not actually necessary to run the

new regression, because

$$b_i' = b_i \frac{s_{X_i}}{s_Y}$$

where $b_i$ is the coefficient for $X_i$ in the regular MLR, $s_{X_i}$ is the standard deviation of $X_i$, and $s_Y$ is the standard

deviation of the response variable. The standardized partial regression coefficients can be used to compare the

magnitude of the effects of different predictors on the response variable. The value of the b’ for a predictor X is

the change in Y, in standard deviations of Y, associated with an increase of X equal to one standard deviation of

X.

8. The VIF is the variance inflation factor. This is a statistic used to assess multicollinearity (correlation among the

predictors), one of the main problems of MLR in observational studies. Each predictor has an associated VIF.

The variance inflation factor of Xi is the reciprocal of 1 minus the proportion of variance of Xi explained by the rest

of the X’s.

$$VIF_i = \frac{1}{1 - R_i^2}$$

$R_i^2$ is the proportion of variance of $X_i$ explained by the other X's. The VIF gets its name from the fact that it is the

factor by which the variance of each estimated coefficient increases due to the collinearity among X’s. That’s

right. Collinearity (or correlations) among the X variables increases the variance of the b’s without necessarily

changing the overall R2 of the model. This is behind the apparent paradox described in point 5, where it was

determined that no parameter is significantly different from zero based on the type III SS, but the model is highly

significant.

9. The effects test shows the sum of squares, df and F test for each variable in the equation. Once again, these

tests do not seem to reject the Ho: parameter=0 for any of the b’s. These tests are constructed with the Type III

sum of squares, which is the default for JMP. A detailed introduction to types of SS is given below. At this point

suffice it to say that type III SS only considers that part of the variation in Y that is exclusively explained by the

factor under consideration.

10. The PRESS statistic is the prediction error sum of squares, a statistic that is similar to the SSE. Models with

smaller PRESS are better. PRESS is calculated as the sum of squares of the errors when the error for each

observation is calculated with a model obtained while holding the observation out of the data set (Jackknifing).

PRESS is used for model building or variable selection.

$$PRESS_p = \sum_{i=1}^{n} \left(Y_i - \hat{Y}_{i(i)}\right)^2$$

The subscript p indicates that there are p variables in the model, and i(i) indicates that the predicted value for observation i was obtained without observation i in the data set.

11. Correlation of estimates. The fact that the b’s (we use b’s as short for “estimated partial regression coefficients”)

have correlations once again emphasizes their random nature. The correlation between pairs of estimated

coefficients also relates to the problem of collinearity. Usually, when there is a high correlation between two

parameters, each parameter will have a high variance. Intuitively, this means that minor random changes in the

sample data might be accommodated by the model by large associated changes in the two b’s. The b’s vary

widely from sample to sample, but the overall model fit is equally good across samples!

12. The Sequential Tests are based on the Type I SS. These are calculated according to the order in which the variables were entered in the model. Thus, variables entered first get "full credit" for any previously unexplained variance in Y that they can explain. Later variables only get a chance to explain the variance in Y not already explained by earlier X's. The sum of the type I SS equals the SS of the regression (whole model). Type I SS are useful to understand the

relationships among variables in the model and to test for effects when there is a predetermined order in which

variables should be entered. For example, suppose that you are developing a method to estimate plant mass in

a crop or an herbaceous native plant community. The method has to be quick and inexpensive. Because you are

interested in prediction, you are not concerned with the variance of b’s. Canopy height is a very quick and

inexpensive non-destructive measure, so you would include it in all models first and use its type I SS to assess

its explanatory power.

13. Durbin-Watson statistic. This is a test of first-order autocorrelation in the residuals. It is assumed that the data

are ordered by time or spatial position on a transect. If the data have no time sequence or position on a transect,

this statistic is meaningless. A small P value (say less than 0.01) indicates that there is a significant temporal or

spatial autocorrelation in the errors, which violates the assumption of independence.
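For readers who want to check the JMP output by hand, the quantities in notes 1, 7, 8, 10 and 13 can be sketched in Python with NumPy. This is only an illustration under stated assumptions: the data are simulated (not the Body Fat data), the function names `fit` and `diagnostics` are made up for this sketch, and the Durbin-Watson statistic is computed with its standard definition (sum of squared successive differences of the residuals divided by SSE), which these notes do not spell out. The P value that JMP reports for Durbin-Watson is not reproduced here.

```python
import numpy as np

def fit(X_vars, y):
    """Least-squares fit with intercept; returns design matrix, coefficients, residuals."""
    X = np.column_stack([np.ones(len(y)), X_vars])
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    return X, b, y - X @ b

def diagnostics(X_vars, y):
    n, p = X_vars.shape
    X, b, e = fit(X_vars, y)
    sse = e @ e
    ss_total = np.sum((y - y.mean()) ** 2)

    # Note 1: R2 and adjusted R2
    r2 = 1 - sse / ss_total
    r2_adj = 1 - (sse / (n - p - 1)) / (ss_total / (n - 1))

    # Note 7: standardized coefficients b_i' = b_i * s_Xi / s_Y
    b_std = b[1:] * X_vars.std(axis=0, ddof=1) / y.std(ddof=1)

    # Note 8: VIF_i = 1 / (1 - R_i^2), regressing each X_i on the other X's
    vif = np.empty(p)
    for i in range(p):
        xi = X_vars[:, i]
        _, _, e_i = fit(np.delete(X_vars, i, axis=1), xi)
        vif[i] = np.sum((xi - xi.mean()) ** 2) / (e_i @ e_i)

    # Note 10: PRESS, refitting the model without observation i each time
    press = 0.0
    for i in range(n):
        keep = np.arange(n) != i
        _, b_i, _ = fit(X_vars[keep], y[keep])
        press += (y[i] - X[i] @ b_i) ** 2

    # Note 13: Durbin-Watson statistic (rows assumed to be in time or transect order)
    dw = np.sum(np.diff(e) ** 2) / sse

    return {"b": b, "r2": r2, "r2_adj": r2_adj, "b_std": b_std,
            "vif": vif, "press": press, "sse": sse, "dw": dw}

# Hypothetical data: three predictors, the first two strongly correlated.
rng = np.random.default_rng(3)
n = 40
x1 = rng.normal(size=n)
x2 = 0.9 * x1 + np.sqrt(1 - 0.9**2) * rng.normal(size=n)
x3 = rng.normal(size=n)
X_vars = np.column_stack([x1, x2, x3])
y = 1.0 + 0.5 * x1 + 0.5 * x2 + 0.2 * x3 + rng.normal(size=n)

result = diagnostics(X_vars, y)
for name, value in result.items():
    print(name, np.round(value, 3))
```

Two checks are worth making on any such output: PRESS can never be smaller than SSE, and the standardized coefficients from the formula should match those obtained by actually regressing standardized Y on the standardized X's.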

6:5 Four Types of Sum of Squares.

When X variables are not orthogonal, which is usually the case when data do not come from designed

experiments, it is not possible to assign a unique portion of the total sum of squares to each variable. In order to

understand the explanatory power and importance of each X, 4 different types of sum of squares are used.

Thus, understanding and interpreting the partition of SS performed in MLR and ANOVA requires that one

understand the different types of SS, particularly types I and III.

SAS defines four types of sum of squares (SAS Institute, Inc. 1990; SAS/STAT User's Guide). In the vast

majority of analyses, the relevant SS are Type I and Type III. I mention all the types for completeness and

understanding. This discussion applies to any linear model, including all ANOVA models.

In order to understand the differences among the concepts of different types of SS, it is necessary to

consider the potential sources of differences in their values.

1. First, the simplest situation, where all SS are equal in value, is a designed

experiment where all effects are orthogonal, all treatment combinations are

observed (no missing cells or combinations), and all cells have the same number

of observations (balanced experiment). I=II=III=IV.

2. Second, the next level of complexity is when not all effects are orthogonal, but

all cells are represented and have the same number of observations. I≠II=III=IV.

3. Third, when cell sizes are not equal and effects are not orthogonal, but all cells

are represented I≠II≠III=IV.

4. If in addition to the conditions in case 3 there are missing cells, I≠II≠III≠IV.

Type I SS are the sequential sums of squares. They depend on the order in which effects (or variables) are

included in the model. The order is determined by the order in which effects are specified in the MODEL

statement in SAS, or the order in which they are entered into the Model Effects in the Fit Model platform of JMP.

The Type I SS for any effect is the sum of squares of the Y variable explained by the effect that is not explained

by any of the effects previously entered in the model. Thus, it is completely dependent on the order in which

effects are entered, except when effects are orthogonal to each other. The sum of the Type I SS equals the

sum of squares for the whole model (that is, SSRegression = sum of the Type I SS). Type I SS and Type III SS

are always the same for the last effect in the model.

Type II and III SS are the partial sums of squares for an effect. This partial SS is the SS explained by the

effect under consideration that is not explained by any of the other effects or variables. The use of F tests based

on type II and III SS is conservative in the sense that it completely ignores the variation in the response

variable that can be explained by more than one effect. The sum of the type II/III SS can be anything between 0

and the total SS of the model or regression.

In MLR (in AGR206) we deal mostly with continuous variables (no grouping variables), so there are no cells

nor possibility for unequal cell sizes. Thus, typically type II and type III SS will be the same. In general, type I

and II/III are the most commonly used SS.
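The difference between sequential (type I) and partial (type III) SS for continuous predictors can be illustrated with a small Python/NumPy sketch. The data are simulated and hypothetical, and the helper names `sse`, `type1_ss` and `type3_ss` are made up for this example; each SS is obtained simply as a difference between the error SS of two nested models.

```python
import numpy as np

def sse(X_vars, y):
    """Error SS of a least-squares fit with intercept (X_vars may have zero columns)."""
    X = np.column_stack([np.ones(len(y)), X_vars])
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    return np.sum((y - X @ b) ** 2)

def type1_ss(X_vars, y, order):
    """Sequential SS: reduction in SSE as each variable enters in the given order."""
    out = []
    previous = sse(X_vars[:, :0], y)           # intercept-only model: SSE = SSTotal
    for k in range(1, len(order) + 1):
        current = sse(X_vars[:, order[:k]], y)
        out.append(previous - current)
        previous = current
    return np.array(out)

def type3_ss(X_vars, y):
    """Partial SS: increase in SSE when each variable is dropped from the full model."""
    full = sse(X_vars, y)
    p = X_vars.shape[1]
    return np.array([sse(np.delete(X_vars, j, axis=1), y) - full for j in range(p)])

# Hypothetical data with two correlated (non-orthogonal) predictors.
rng = np.random.default_rng(6)
n = 60
x1 = rng.normal(size=n)
x2 = 0.8 * x1 + 0.6 * rng.normal(size=n)
y = 1.0 + x1 + x2 + rng.normal(size=n)
X_vars = np.column_stack([x1, x2])

ss_regression = sse(X_vars[:, :0], y) - sse(X_vars, y)
t1_order_12 = type1_ss(X_vars, y, [0, 1])      # SS for x1, then x2 given x1
t1_order_21 = type1_ss(X_vars, y, [1, 0])      # SS for x2, then x1 given x2
t3 = type3_ss(X_vars, y)                       # SS for x1 and x2, each given the other

print("SSRegression:", round(float(ss_regression), 3))
print("Type I  (x1, x2|x1):", np.round(t1_order_12, 3))
print("Type I  (x2, x1|x2):", np.round(t1_order_21, 3))
print("Type III (x1, x2):  ", np.round(t3, 3))
```

Whatever the order, the type I SS add up to SSRegression, and the type I SS of the variable entered last equals its type III SS; the type III SS, on the other hand, need not add up to anything in particular.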

Figure 3. Schematic representation of the different types of sum of squares

relevant for multiple linear regression. This is figure 7.1 from Neter et al., (1996).

Figure 3 illustrates the partitioning of the total SS using type I SS for the regression of Y on two X's as the

X's are entered into the model in different order. A semi-standard notation is used where commas separate

effects or variables included in the model, and bars separate the variable or variables in question from those

already in the model. Thus, SSE(X1) is the SS of the error when X1 is in the model, SSR(X1, X2) is the SS of the

regression when both variables are in the model, and SSR(X2|X1) is the increment in the SSR (and thus the

reduction in SSE) caused by including X2 after X1.
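Written out for the two-variable case of Figure 3, and using only the notation just defined, the type I partition for the order X1 then X2 is as follows (a restatement for reference, not an additional result):

$$SSR(X_2 \mid X_1) = SSR(X_1, X_2) - SSR(X_1) = SSE(X_1) - SSE(X_1, X_2)$$

$$SSTotal = SSR(X_1) + SSR(X_2 \mid X_1) + SSE(X_1, X_2)$$

Reversing the order of entry gives $SSTotal = SSR(X_2) + SSR(X_1 \mid X_2) + SSE(X_1, X_2)$, with the same error term but, in general, different individual pieces.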

Usually, the type III SS for a variable will be smaller than its type I SS when it is entered first. However,

this is not the case for "suppressor" variables, which have a larger type III than type I SS. This fact still escapes

my intuition and imagination. An excellent explanation for the different types of sum of squares can be found in

the General Linear Models sections of StatSoft’s electronic textbook

(http://www.statsoft.com/textbook/stathome.html).

The concept of extra sum of squares, particularly type III SS, allows the construction of general linear tests for individual variables or groups of variables. For example, one may want to test whether the regression coefficients for X1 and X3 are zero in a model with 5 independent variables,

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + \beta_4 X_4 + \beta_5 X_5 + \varepsilon$$

The F test would be:

$$F = \frac{SSR(X_1, X_3 \mid X_2, X_4, X_5)/2}{SSE(X_1, X_2, X_3, X_4, X_5)/(n-6)}$$
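As a rough illustration of this general linear test, the extra sum of squares can be computed as the difference in SSE between the reduced model (without X1 and X3) and the full model. The sketch below uses Python/NumPy with simulated, hypothetical data in which the true coefficients of X1 and X3 are zero; the helper `sse` is a made-up name, and column indices are 0-based, so X1 and X3 are columns 0 and 2.

```python
import numpy as np

def sse(X_vars, y):
    """Error SS of a least-squares fit with intercept."""
    X = np.column_stack([np.ones(len(y)), X_vars])
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    return np.sum((y - X @ b) ** 2)

# Hypothetical data: five predictors, X1 and X3 have no real effect.
rng = np.random.default_rng(7)
n = 50
X_vars = rng.normal(size=(n, 5))
y = 2.0 + X_vars @ np.array([0.0, 1.0, 0.0, -1.0, 0.5]) + rng.normal(size=n)

sse_full = sse(X_vars, y)                      # SSE(X1, X2, X3, X4, X5)
sse_reduced = sse(X_vars[:, [1, 3, 4]], y)     # SSE(X2, X4, X5)
extra_ss = sse_reduced - sse_full              # SSR(X1, X3 | X2, X4, X5)

df_num = 2                                     # two coefficients tested
df_den = n - 6                                 # n minus the 6 parameters of the full model
F = (extra_ss / df_num) / (sse_full / df_den)
print("extra SS =", round(float(extra_ss), 3), " F =", round(float(F), 3))
```

The resulting F would be compared with an F distribution with 2 and n-6 degrees of freedom; that lookup is left out here to keep the sketch free of extra dependencies.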

6:6 Partial correlations and plots.

When many explanatory variables are present, as in MLR, it is difficult to visually assess the relationship

between Y and any one of the X's by looking at the scatterplots, because the effects of the variable may be

masked by the effects of the other variables. In this case, it is necessary to use the concept of partial

regression, correlation, and plots.

The partial correlation between Y and X is defined as the correlation between these two variables when all

other variables are controlled for. The partial correlation and regression may reveal unexpected relationships.

This concept is illustrated in Figure 4. Because the predictors exhibit a high positive correlation and X2 has a

strong negative effect on Y2, the effect of X1 on Y2 is reversed and appears to be negative, while in fact it is

positive.

The partial correlation between a response variable Y and one predictor out of many is calculated in the

Multivariate platform of JMP.

[Scatterplot matrix of X1, X2 and Y2]

Correlations

| Variable | X1 | X2 | Y2 |
| --- | --- | --- | --- |
| X1 | 1.0000 | 0.8868 | -0.4870 |
| X2 | 0.8868 | 1.0000 | -0.6721 |
| Y2 | -0.4870 | -0.6721 | 1.0000 |

Partial Corr (partialled with respect to all other variables)

| Variable | X1 | X2 | Y2 |
| --- | --- | --- | --- |
| X1 | • | 0.8651 | 0.3185 |
| X2 | 0.8651 | • | -0.5951 |
| Y2 | 0.3185 | -0.5951 | • |

Figure 4. Effects of correlations among the predictors on the apparent simple correlations

with the response variable. These correlations and partial correlations are based on a random

sample from a data set where X1 and X2 follow a bivariate normal distribution with correlation

equal to 0.9. The true model relating Y to the predictors is: Y = 0.3 X1 - 0.7 X2 + ε, where ε has

a normal distribution with variance equal to 0.25.

The partial correlation between two variables, say Y and X1 for the Body Fat example, is the correlation

between Y and X1 after correction for the linear relationships between Y and the other X’s and X1 and the other

X’s. To understand the meaning of the partial correlation intuitively, consider how it can be calculated. First,

regress Y on the other X’s, in this example X2 and X3. Save the residuals and call them eY(X2,X3). Second,

regress X1 on X2 and X3. Save the residuals and call them eX1(X2,X3). Finally, correlate eY(X2,X3) and

eX1(X2,X3). The results from JMP are presented below.

Partial correlation in the Body Fat example.

The errors obtained in the first two steps can be used to assess the linearity and strength of the relationship

between Y and X1, controlling for the other variables. Partial regression plots represent the marginal effects of

each X variable on Y, given that all other X’s are in the model. In the case of Y and X1 in the example above,

we plot eY(X2, X3) vs. eX1(X2, X3).

Partial regression plot of Y and X1

Figure 6-5. Partial regression of residuals in Y vs. residuals in X1. No assumptions are violated.

The partial regression is simply the regression of the residuals of Y on the residuals of X1. The scatter plot

should be inspected as a regular residual plot, looking out for non-linearity, heterogeneity of variance, and

outliers. The relationship appears to be linear with homogeneous variance over levels of X1, and without

outliers.
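The three-step recipe for the partial correlation (regress Y on the other X's, regress X1 on the other X's, correlate the two sets of residuals) can be sketched in Python/NumPy. Since the Body Fat data are not reproduced in these notes, the sketch simulates data from the model described in the caption of Figure 4 (correlation 0.9 between X1 and X2, Y = 0.3 X1 - 0.7 X2 + error with variance 0.25); the sample size, seed and the helper name `residuals` are arbitrary assumptions, so the numbers will not match the figure exactly.

```python
import numpy as np

def residuals(target, X_vars):
    """Residuals from regressing target on X_vars (with intercept)."""
    X = np.column_stack([np.ones(len(target)), X_vars])
    b, *_ = np.linalg.lstsq(X, target, rcond=None)
    return target - X @ b

# Simulated data following the model in the caption of Figure 4.
rng = np.random.default_rng(8)
n = 2000
cov = np.array([[1.0, 0.9], [0.9, 1.0]])
X = rng.multivariate_normal(mean=[0.0, 0.0], cov=cov, size=n)
x1, x2 = X[:, 0], X[:, 1]
y = 0.3 * x1 - 0.7 * x2 + rng.normal(scale=0.5, size=n)   # error variance 0.25

simple_r = np.corrcoef(y, x1)[0, 1]           # apparent (simple) correlation

e_y = residuals(y, x2)                        # step 1: Y adjusted for X2
e_x1 = residuals(x1, x2)                      # step 2: X1 adjusted for X2
partial_r = np.corrcoef(e_y, e_x1)[0, 1]      # step 3: correlate the residuals

# Slope of the partial regression (residuals of Y on residuals of X1).
partial_slope = (e_x1 @ e_y) / (e_x1 @ e_x1)

print("simple r(Y, X1)  =", round(float(simple_r), 3))
print("partial r(Y, X1) =", round(float(partial_r), 3))
print("partial slope    =", round(float(partial_slope), 3))
```

With these settings the simple correlation comes out negative while the partial correlation is positive, which is the reversal described for Figure 4. The slope of the partial regression also coincides with the coefficient of X1 in the full regression of Y on X1 and X2.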

6:7 Generalized MLR.

6:7.1 Linear, and intrinsically linear models.

Linearity refers to the parameters. Thus, a model is linear if it can be expressed as a linear combination of

parameters. The model can involve any functions of X's as long as these functions do not involve parameters

whose true values are unknown.

As a result, not all linear models represent a hyperplane in the variable space.

$$Y_i = \beta_0 + \beta_1 f_1(X_{i1}) + \dots + \beta_p f_p(X_{ip}) + \varepsilon_i \qquad \text{(generic linear model)}$$

For example, the first function could be sin[3X+ln(2+0.75X)]. In particular, powers of X are used as these

functions, creating polynomials of any degree desired.

Intrinsically linear models are models that seem not to be linear but that by rearranging terms or changing

variables can be expressed as in the generic equation above.

6:7.2 Polynomial regression.

Polynomial regression is a special case where the functions are powers of X. More complex and general

models include interactions among variables. These interactions can be incorporated simply as new variables

resulting from the product of the values of the original variables interacting. By doing a substitution of variables

one can see models with interactions as regular linear models. For example, a polynomial on X of order 3 can

be expressed as a regular linear model.

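$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_1^2 + \beta_3 X_1^3 + \varepsilon$$

$$X_1^2 = X_2, \qquad X_1^3 = X_3$$

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + \varepsilon$$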
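The substitution of variables can be tried directly: build the columns X, X squared and X cubed and fit them as an ordinary MLR. The Python/NumPy sketch below uses simulated, hypothetical data; the true coefficients and noise level are arbitrary choices made for illustration.

```python
import numpy as np

# Hypothetical data from a cubic relationship plus noise.
rng = np.random.default_rng(9)
x = np.linspace(-2, 2, 40)
y = 1.0 + 0.5 * x - 2.0 * x**2 + 0.7 * x**3 + rng.normal(scale=0.3, size=x.size)

# Substitution of variables: X2 = X1^2 and X3 = X1^3 become ordinary columns.
X = np.column_stack([np.ones_like(x), x, x**2, x**3])
b, *_ = np.linalg.lstsq(X, y, rcond=None)     # b0, b1, b2, b3

print("estimated coefficients:", np.round(b, 3))
```

The same coefficients are returned by a dedicated polynomial fitting routine such as `np.polyfit(x, y, 3)` (which lists them from the highest power down), confirming that polynomial regression is just MLR on transformed columns.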

Likewise, the interaction between two different variables can be transformed into a new variable and

approached as regular MLR. An interaction between two variables, say X1 and X2, means that the effect of

either one on Y depends on the level of the other. For example, let Y=2X1X2. An increase in the value of X2 by

one unit will make Y change by 2X1. When X1=1 the effect of the unit change in X2 is an increase of Y by 2

units. When X1=3 the effect of the unit change in X2 is an increase of Y by 6 units.
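The Y=2X1X2 example can be checked numerically by adding the product X1X2 as a new column and fitting a regular MLR. This Python/NumPy sketch uses simulated, hypothetical data (the ranges of X1 and X2 and the small noise level are arbitrary assumptions), and `effect_of_x2` is a made-up helper name.

```python
import numpy as np

# Hypothetical data generated from Y = 2*X1*X2 plus a little noise.
rng = np.random.default_rng(10)
n = 100
x1 = rng.uniform(0, 4, size=n)
x2 = rng.uniform(0, 4, size=n)
y = 2.0 * x1 * x2 + rng.normal(scale=0.1, size=n)

# The interaction enters the model as one more column: X3 = X1 * X2.
X = np.column_stack([np.ones(n), x1, x2, x1 * x2])
b, *_ = np.linalg.lstsq(X, y, rcond=None)     # b0, b1, b2, b3

def effect_of_x2(x1_level):
    """Estimated change in Y per unit increase in X2 at a given level of X1."""
    return b[2] + b[3] * x1_level

print("effect of X2 when X1=1:", round(float(effect_of_x2(1.0)), 2))
print("effect of X2 when X1=3:", round(float(effect_of_x2(3.0)), 2))
```

The estimated effect of a unit change in X2 is close to 2 units of Y when X1=1 and close to 6 units when X1=3, matching the arithmetic in the text.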

NOTE: the concept and intuition of statistical interaction is essential. Two variables have an interaction when

the effect of one of them on the response depends on the level of the other one. In the clover example used for

homework 2, there was an interaction between group and days. This means that the effect of temperature

depended on the age of the plants. When plants were very young, temperature groups did not differ in plant

size. After plants had a chance to grow for a while, the average sizes became different among groups. The

same fact can be stated by saying that the effect of time on size of plants (growth rate) depended on the group.
