Solving the Correspondence Problem in Feature Based Stereo Vision


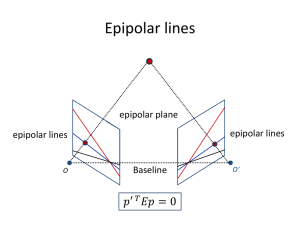

Correspondence Problem in Stereo Vision
Machine Vision 140.429
Andre Van Der Walt: 01019368
Prenath Samaratunga: 00213934

Introduction

The search for the correct match of a point is called the correspondence problem, and it is one of the central and most difficult parts of the stereo problem. Several algorithms have been published to compute the disparity between images, such as the correlation method, the correspondence method, and the phase difference method. If a single point can be located in both stereo images then, given that the relative orientation between the cameras is known, its three-dimensional world coordinates can be computed. For a given pair of stereo images and known orientation parameters of the cameras, corresponding points lie on the epipolar lines.

Definition of problem: Find the correspondence between two (or more) images, understood as determining where the same physical entities are depicted in the different images in question. See Figure 1. The correspondence problem is also known as tracking, registration and feature matching, to mention a few of the other commonly used phrases.

Figure 1: The general correspondence problem.

Solving the correspondence problem

We discuss two types of solution to the correspondence problem in stereo vision: area-based stereo vision and feature-based (edge-detected) stereo vision. There are many other solutions to the problem, but these are the most commonly used.

Area based Stereo Vision solution

Figure 2. Two similar images with the area of similarity chosen (right) and the epipolar line drawn (left). These are the images referred to below.

The area-based stereo vision solution requires that similarity between locations be defined such that the reliability of a match can be evaluated, and that a similarity measure can be computed at each chosen location in the image. The questions to be answered are:
• Where in the image may correspondence analysis be carried out?
• What is an appropriate measure of similarity?
• How are corresponding locations found?

1. Where may correspondence analysis be carried out?

Homogeneous regions are less likely to produce a reliable result. Local inhomogeneity of the similarity criterion provides a basis for reliability analysis.

2. What measures of similarity to use?

Appropriate measures of similarity are based on texture, grey level, or colour. Each criterion is evaluated over a local neighbourhood in order to suppress noise artefacts. The extent of the neighbourhood depends on the average size of objects in the image, which in turn affects the accuracy of the result. The use of colour requires a norm on a 3-dimensional feature space.

A practical approach to this problem is to find features in each of the images, and then measure how similar the neighbourhood of a given feature in one image is to the neighbourhoods of features in the other. This requires a measure of similarity. A typical similarity measure between image patches is correlation. Note that this is very similar to treating one patch as a kernel and convolving the other image with it. The correlation between two image patches, P1 and P2, can be obtained as follows:
1. Arrange the elements of P1 and P2 into vectors x1 and x2, each with $n$ elements.
2. Calculate the mean of each vector, x1 and x2, i.e.: $\mu_i = \frac{1}{n} \sum_{k=1}^{n} x_{i,k}$ for $i = 1, 2$.
3. Calculate the variance of each vector, x1 and x2, var1 and var2, i.e.: $\mathrm{var}_i = \frac{1}{n} \sum_{k=1}^{n} (x_{i,k} - \mu_i)^2$.
4. Calculate the covariance, cov, between the two vectors, x1 and x2, i.e.: $\mathrm{cov} = \frac{1}{n} \sum_{k=1}^{n} (x_{1,k} - \mu_1)(x_{2,k} - \mu_2)$.
5. Then the correlation, $\rho$, is given by: $\rho = \frac{\mathrm{cov}}{\sqrt{\mathrm{var}_1 \, \mathrm{var}_2}}$.

An enhancement to the basic approach (find features in both images and compare them all to each other) is to constrain the search space by using epipolar line constraints. In other words, only some features in one image can match a given feature in the other. There are two main reasons for this: firstly, to limit the number of computations required.
Secondly, this is a way to incorporate prior knowledge or assumptions about how the images are formed.

The Epipolar Constraint

Any location in the image shows the reflection of objects in the scene that lie on a line from the image location through the lens. The projection of all points on this line onto any other image is a line, so the search for a corresponding location in a second image is restricted to a well-defined line in that image (the epipolar line). The origins of the two camera co-ordinate systems and an arbitrary point in 3-D space form an epipolar plane. Any point in this plane is projected onto the epipolar lines in the two images, which are given by the intersection of this plane with the image planes.

For each point of the left image, compute the similarity measure at every position on the corresponding epipolar line. If the measure is expressed as a distance D between the two neighbourhoods, the best match is the position at which D is minimal.

Summary of Area-based Stereo Vision

Area-based stereo vision methods:
• solve the correspondence problem at any point in the image.
• require a similarity measure that can be evaluated everywhere in the image.
• may produce unreliable results if the features of the similarity measure do not vary much over large regions (in such cases a feature-based solution is preferable).
• disregard the fact that grey levels are for the most part an image feature and not a surface feature.

Constraints:
• Epipolar constraint (strong condition, must hold).
• Similarity constraint (weak condition, may hold).
• Continuity constraint (weak condition, may hold).

Feature-Based Stereo Vision Solution

Area-Based Stereo Vision (ABSV) sometimes fails because similarity computations are unreliable if similarity features are weakly pronounced.

Feature-Based Stereo Vision (FBSV):
• computes locations where distinct features are discernible.
• solves the correspondence problem at those locations only.
• FBSV is often faster than ABSV: fewer locations have to be processed in correspondence analysis (which needs O(N^2) computation time).
• The solution to the correspondence problem does not depend on illumination, as object features are searched for.
• Conjugate pairs are found only if the features are present in both images.
• The number of locations where depth may be computed is smaller than in ABSV.
• Accuracy of depth computation may be better because feature locations may be computed with sub-pixel accuracy.

Features

Features are evaluated at those edges of the image which are object-dependent.
• Edge detection
• Feature extraction
• Differentiation between scene-dependent, illumination-dependent and view-dependent edges

Edge Features:
• location of the edge
• strength of the edge
• orientation of the edge

Edge neighbourhood Features:
• colour at the edge
• texture at the edge
• brightness at the edge

Feature Extraction = Edge Detection

Search for the exact location of the edge: zero crossing of the second derivative.

Getting Edge Attributes

Zero-Crossings with Sub-Pixel Accuracy

Sub-Pixel Accuracy Using an Edge Model

Sub-pixel accuracy from interpolated zero crossings suffers from sensitivity to noise in the image. Linear interpolations at different edge locations interfere with each other.

Edge Model

Solving the Correspondence Problem in Feature Based Stereo Vision

Advantage: only a few candidates need to be matched.
Disadvantage: no support from disparity information in the immediate neighbourhood.

Constraints in Feature Based Stereo Vision

Several constraints limit the possible choices of conjugate pairs:
1. Constraints based on the image acquisition geometry
2. Constraints based on properties of the imaged objects
3. Assumptions on the continuity of disparities and figures
4. Upper limits on the disparity and its change
5. Assumptions on the order of projected points in the two images

Some of the constraints are strong conditions (i.e., they must always hold); others are weak conditions (i.e., there are cases where they may not hold). It is the responsibility of the developer or researcher to choose constraints that are appropriate for a given problem.

Geometric Constraints
• Epipolar constraint.
• Geometric similarity of features.
• Unique, unambiguous mapping between corresponding locations.

Problems

Similarity features depend on the result of the edge detection, which itself depends on image content, noise and blurring. Uniqueness of the mapping is not always given. Except for the epipolar constraint, geometric constraints pose weak conditions on the problem.

Object-Based Constraints: Similarity of Features
• Edge types must match.
• Occlusion and highlight edges may not be used. This requires edge classification, which itself requires depth computation and surface reconstruction (a bootstrapping problem).
• The object at the edge must be the same in the two images (same colour, texture, ...). This requires investigating features around the edges.

Object-Based Constraints: Disparity

If depth varies slowly, so does the disparity (continuity of disparity).

Disparity limit: The minimum distance of an object from the camera gives rise to an upper limit on the disparity, because disparity is inversely proportional to depth. For example, for a rectified, parallel-axis camera pair with focal length $f$ and baseline $b$, a point at depth $Z$ has disparity $d = f b / Z$, so $d_{\max} = f b / Z_{\min}$.

Local Disparity Limit

The local disparity limit as a constraint requires pre-processing by an area-based stereo vision method (e.g., block matching on a 16x16 matrix):
• The result after filtering is used as an estimate of the local disparity.
• The actual disparity may vary by a pre-specified amount from the block matching disparity.

Order of Conjugate Pairs

The constraint on the order of conjugate pairs indicates that points ordered A, B, C in the left image have the same order in the right image.
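The disparity limits and the ordering constraint can each be phrased as a simple test on candidate conjugate pairs. The following is a minimal sketch, not the method of the original notes. It assumes rectified images, so that a candidate pair on one pair of epipolar lines is just two x coordinates, and it assumes the sign convention disparity = x_left - x_right >= 0. All function and parameter names (`within_global_limit`, `within_local_limit`, `preserves_order`, `d_max`, `d_estimate`, `tolerance`) are hypothetical.

```python
from typing import List, Tuple

# One candidate conjugate pair on a pair of epipolar lines: (x_left, x_right).
# Assumption: rectified images, disparity defined as x_left - x_right.
Match = Tuple[float, float]


def within_global_limit(match: Match, d_max: float) -> bool:
    """Global disparity limit: the disparity must lie in [0, d_max]."""
    x_left, x_right = match
    return 0.0 <= x_left - x_right <= d_max


def within_local_limit(match: Match, d_estimate: float, tolerance: float) -> bool:
    """Local disparity limit: the disparity may differ from a block-matching
    estimate d_estimate by at most a pre-specified tolerance."""
    x_left, x_right = match
    return abs((x_left - x_right) - d_estimate) <= tolerance


def preserves_order(matches: List[Match]) -> bool:
    """Ordering constraint: points ordered A, B, C in the left image
    must appear in the same order in the right image."""
    ordered = sorted(matches)  # sort by x_left
    rights = [x_right for _, x_right in ordered]
    return all(a < b for a, b in zip(rights, rights[1:]))


if __name__ == "__main__":
    candidates = [(10.0, 7.0), (20.0, 16.5), (30.0, 28.0)]
    print(all(within_global_limit(m, d_max=5.0) for m in candidates))
    print(within_local_limit(candidates[0], d_estimate=2.5, tolerance=1.0))
    print(preserves_order(candidates))
```

Because the disparity and ordering constraints are weak conditions, a real matcher would more plausibly use these tests to rank or prune candidates than to reject a pairing outright.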
Stereo Analysis based on ZC Vectors

for each pair of epipolar lines do
  repeat until each edge location is part of a conjugate pair or no pairings are possible:
  • compute zero crossings (zc) at various resolutions.
  • create a disparity histogram of possible disparities.
  • apply the global disparity limit for given regions.
  • compute a local disparity histogram for this region.
  • compute disparities for each edge point in the region.
  • remove edge locations that are part of a conjugate pair.

Computation of Zero-Crossings

Disparities after First Match

Global Disparity Histogram

Local Disparity Histogram

Summary of Feature-based Stereo Vision

Feature-based stereo vision, as compared to area-based stereo vision:
• enables the computation of conjugate pairs with sub-pixel accuracy.
• results in a sparse map of depths (possibly requiring depth interpolation).
• may include object-dependent constraints in the solution of the correspondence problem.
• computes disparities faster and more reliably, as it works on fewer locations for finding conjugate pairs.