Slides


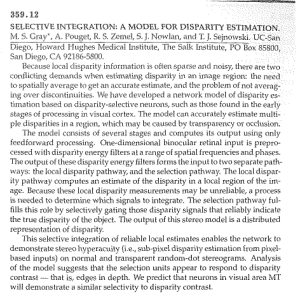

Epipolar lines
[Figure: camera centers O and O′ joined by the baseline; the epipolar plane through a scene point meets each image plane in an epipolar line. Corresponding points satisfy $p'^T E p = 0$.]

Rectification
• Rectification: rotation and scaling of each camera's coordinate frame that brings the two image planes parallel to the baseline, so that the epipolar lines become horizontal and corresponding epipolar lines lie at the same height
• Rectification is achieved by applying a homography to each of the two images
[Figure: homographies $H_l$ and $H_r$ applied to the images of cameras O and O′, which share the baseline.] After rectification the epipolar constraint reads
$$q'^T H_l^{-T} E H_r^{-1} q = 0$$

Cyclopean coordinates
• In a rectified stereo rig with baseline of length $b$, we place the origin at the midpoint between the camera centers
• A point $(X, Y, Z)$ is projected to:
  – Left image: $x_l = \frac{f(X - b/2)}{Z}$, $y_l = \frac{fY}{Z}$
  – Right image: $x_r = \frac{f(X + b/2)}{Z}$, $y_r = \frac{fY}{Z}$
• Cyclopean coordinates:
$$X = \frac{b(x_r + x_l)}{2(x_r - x_l)}, \qquad Y = \frac{b(y_r + y_l)}{2(x_r - x_l)}, \qquad Z = \frac{fb}{x_r - x_l}$$

Disparity
$$x_r - x_l = \frac{fb}{Z}$$
• Disparity is inversely proportional to depth
• Constant disparity ⟺ constant depth
• Larger baseline: more stable reconstruction of depth (but more occlusions, and correspondence is harder)
(Note that disparity is defined here for a rectified rig, in the cyclopean coordinate frame.)

Random dot stereogram
• Depth can be perceived from a pair of random-dot images (Julesz)
• Stereo perception can therefore be based solely on local, low-level information (the images contain no recognizable shapes)

Moving random dots

Compared elements
• Pixel intensities
• Pixel color
• Small window (e.g. 3 × 3 or 5 × 5), often using normalized correlation to compensate for gain differences
• Features and edges (less common)
• Mini segments

Dynamic programming
• Each pair of epipolar lines is compared independently
• Local cost: sum of a unary term and a binary term
  – Unary term: cost of a single match
  – Binary term: cost of a change of disparity (occlusion)
• Analogous to string matching ('diff' in Unix)

String matching
• Swing → String
• Cost: #substitutions + #insertions + #deletions
[Figure: matching grid from Start to End, with "String" (S t r i n g) along one axis and "Swing" (S w i n g) along the other, and the resulting alignment of the two words]

Dynamic Programming
• Shortest path in a grid
• Diagonals: constant disparity
• Moving along the diagonal: pay the unary cost (cost of the pixel match)
• Moving sideways: pay the binary cost, i.e. a disparity change (occlusion, right or left)
• The cost prefers fronto-parallel planes; a penalty is paid for tilted planes
$$T_{i,j} = \min\left(T_{i-1,j} + C_{(i-1,j)\to(i,j)},\; T_{i-1,j-1} + C_{(i-1,j-1)\to(i,j)},\; T_{i,j-1} + C_{(i,j-1)\to(i,j)}\right)$$
Complexity? Each of the $n \times m$ grid cells is filled in constant time from its three predecessors, so $O(nm)$ per pair of epipolar lines.

Probability interpretation: Viterbi algorithm
• Markov chain
• States: discrete set of disparities
$$P(d_1, \dots, d_n) = P_1(d_1) \prod_{i=2}^{n} P_i(d_i)\, P_{i-1,i}(d_{i-1}, d_i)$$
• Log probabilities: product ⟹ sum
$$-\log P(d_1, \dots, d_n) = -\log P_1(d_1) - \sum_{i=2}^{n} \left(\log P_i(d_i) + \log P_{i-1,i}(d_{i-1}, d_i)\right)$$
• Maximum likelihood: minimize the sum of negative logs
• Viterbi algorithm: equivalent to a shortest path

Dynamic Programming: Pros and Cons
• Advantages:
  – Simple, efficient
  – Achieves the global optimum (for each epipolar line)
  – Generally works well
• Disadvantages:
  – Works separately on each epipolar line; does not enforce smoothness across epipolar lines
  – Prefers fronto-parallel planes
  – Too local?
    (considers only immediate neighbors)

Markov Random Field
• Graph $G = (V, E)$. In our case the graph is a 4-connected grid representing one image
• States: disparities
• Minimize an energy of the form
$$E(\mathcal{D}) = \sum_{(p,q) \in E} V_{p,q}(d_p, d_q) + \sum_{p \in V} D_p(d_p)$$
• The terms are interpreted as negative log probabilities

Iterated Conditional Modes (ICM)
• Initialize the states (= disparities) of every pixel
• Repeatedly update each pixel to the most likely disparity given the values assigned to its neighbors:
$$d_p \leftarrow \arg\min_{d_p} \sum_{q \in \mathcal{N}(p)} V_{p,q}(d_p, d_q) + D_p(d_p)$$
• Markov blanket: given its immediate neighbors, the state of a pixel is independent of all other pixels
• Similar to Gauss-Seidel iterations
• Slow convergence to an (often bad) local minimum

Graph cuts: expansion moves
• Assume $D(x)$ is non-negative and $V(x, y)$ is a metric:
  – $V(x, x) = 0$
  – $V(x, y) = V(y, x)$
  – $V(x, y) \le V(x, z) + V(z, y)$
• We can then apply larger, semi-global moves using minimum s-t cuts
• Converges faster than ICM, to a better (local) minimum

α-Expansion
• In any one round, an expansion move allows each pixel to either
  – change its state to α, or
  – keep its previous state
• Each round is implemented via max-flow/min-cut
• One iteration: apply expansion moves sequentially with all possible disparity values α
• Repeat until convergence
• Every round finds the globally optimal solution over one expansion move
• The energy is non-increasing between rounds
• At convergence, the energy is optimal with respect to all expansion moves, and within a constant factor of the global optimum:
$$E(\mathcal{D}_{\text{expansion}}) \le 2c\, E(\mathcal{D}^*), \qquad c = \frac{\max_{\alpha \ne \beta \in \mathcal{D}} V(\alpha, \beta)}{\min_{\alpha \ne \beta \in \mathcal{D}} V(\alpha, \beta)}$$

α-Expansion (1D example)
[Figure sequence: two neighboring pixels p and q with current disparities $d_p$ and $d_q$, and the graph built for an α-expansion move. The terms paid by each cut:]
• Both p and q switch to α: $D_p(\alpha)$ and $D_q(\alpha)$, with $V_{pq}(\alpha, \alpha) = 0$
• Both keep their states: $D_p(d_p)$ and $D_q(d_q)$. But what about $V_{pq}(d_p, d_q)$? It must be paid as well, so the graph includes an edge carrying it
• Only q switches to α: $D_p(d_p)$, $D_q(\alpha)$ and $V_{pq}(d_p, \alpha)$
• Only p switches to α: $D_p(\alpha)$, $D_q(d_q)$ and $V_{pq}(\alpha, d_q)$
• A cut through $V_{pq}(d_p, \alpha)$, $V_{pq}(\alpha, d_q)$ and $V_{pq}(d_p, d_q)$ together cannot be obtained, due to the triangle inequality:
$$V_{pq}(\alpha, d_q) \le V_{pq}(d_p, d_q) + V_{pq}(d_p, \alpha)$$

Common Metrics
• Potts model: $V(x, y) = \begin{cases} 0 & x = y \\ 1 & x \ne y \end{cases}$
• $V(x, y) = |x - y|$
• $V(x, y) = \|x - y\|_2$
• Truncated $\ell_1$: $V(x, y) = \begin{cases} |x - y| & |x - y| < T \\ T & \text{otherwise} \end{cases}$
• Truncated squared difference is not a metric

Reconstruction with graph-cuts
[Figure panels: Original | Result | Ground truth]

A different application: detect skyline
• Input: one image, oriented with the sky above
• Objective: find the skyline in the image
• Graph: grid
• Two states: sky, ground
• Unary (data) term:
  – State = sky: low if the pixel is blue, otherwise high
  – State = ground: high if the pixel is blue, otherwise low
• Binary term for vertical connections:
  – If the state of a node is sky, the node above should also be sky (set the cost to infinity if not)
  – If the state of a node is ground, the node below should also be ground
• Solve with an expansion move. This is a binary (two-state) problem, so a graph cut finds the global optimum in a single expansion move
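The cyclopean-coordinate formulas from the stereo slides can be checked numerically. This Python sketch uses hypothetical helper names (`project`, `cyclopean`) and assumes an ideal, noise-free rectified rig. It projects a point with the slides' sign convention, $x_l = f(X - b/2)/Z$ and $x_r = f(X + b/2)/Z$, and then recovers $(X, Y, Z)$ from the two image points.

```python
from typing import Tuple


def project(X: float, Y: float, Z: float, f: float, b: float) -> Tuple[float, float, float, float]:
    """Project a 3D point into a rectified rig whose origin is the baseline midpoint.

    Follows the slides' convention x_l = f(X - b/2)/Z, x_r = f(X + b/2)/Z.
    Returns (x_l, y_l, x_r, y_r).
    """
    x_l = f * (X - b / 2) / Z
    x_r = f * (X + b / 2) / Z
    y = f * Y / Z  # rectified rig: the point lies on the same row in both images
    return x_l, y, x_r, y


def cyclopean(x_l: float, y_l: float, x_r: float, y_r: float,
              f: float, b: float) -> Tuple[float, float, float]:
    """Recover (X, Y, Z) from one correspondence with the cyclopean formulas."""
    d = x_r - x_l  # disparity, equal to f*b/Z
    if d <= 0:
        raise ValueError("expected positive disparity for a point in front of the rig")
    X = b * (x_r + x_l) / (2 * d)
    Y = b * (y_r + y_l) / (2 * d)
    Z = f * b / d
    return X, Y, Z


if __name__ == "__main__":
    pts = project(0.3, -0.2, 4.0, f=500.0, b=0.1)
    print(cyclopean(*pts, f=500.0, b=0.1))  # approximately (0.3, -0.2, 4.0)
```

Halving $Z$ doubles the disparity $x_r - x_l = fb/Z$, which is the inverse proportionality stated on the Disparity slide.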
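For the small-window comparison listed under "Compared elements", a minimal normalized cross-correlation can be written as follows. Windows are passed as flat lists (a 3 × 3 patch becomes 9 values). The function name `ncc` is hypothetical, and subtracting each window's mean, which also cancels a brightness offset in addition to the gain, is our choice rather than something specified on the slides.

```python
import math
from typing import Sequence


def ncc(a: Sequence[float], b: Sequence[float]) -> float:
    """Zero-mean normalized cross-correlation of two equal-size windows given as flat lists.

    Returns a score in [-1, 1]; it is unchanged if b is replaced by g * b + o with g > 0.
    """
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("windows must be non-empty and of equal size")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if den == 0:
        return 0.0  # a constant (textureless) window has no correlation to measure
    return num / den
```

A gain of 2 plus an offset of 10 leaves the score at 1.0, whereas a plain sum of squared differences between the same two windows would be large.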
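The "Swing → String" string-matching example can be reproduced with the classic edit-distance table. In this sketch (`edit_distance` is a hypothetical helper name) substitutions, insertions and deletions each cost 1, and every cell takes the minimum over the same three predecessors as the recurrence for $T_{i,j}$, so the run time is $O(nm)$.

```python
def edit_distance(a: str, b: str) -> int:
    """Minimum number of substitutions, insertions and deletions turning a into b."""
    n, m = len(a), len(b)
    # T[i][j]: cost of turning a[:i] into b[:j]
    T = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        T[i][0] = i  # delete the first i characters of a
    for j in range(1, m + 1):
        T[0][j] = j  # insert the first j characters of b
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            sub = 0 if a[i - 1] == b[j - 1] else 1
            T[i][j] = min(T[i - 1][j] + 1,        # delete a[i-1]
                          T[i][j - 1] + 1,        # insert b[j-1]
                          T[i - 1][j - 1] + sub)  # match or substitute
    return T[n][m]


if __name__ == "__main__":
    print(edit_distance("Swing", "String"))  # 2: substitute w -> t, insert r
```

In stereo, the diagonal move plays the role of "match or substitute" (unary cost), and the two sideways moves play the role of insertions and deletions (occlusions).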
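The Viterbi view of scanline matching can be sketched as a min-sum recursion over disparity states, with costs standing in for negative log probabilities. The costs here are assumptions for illustration, not the lecture's: the unary term is $|L[i] - R[i + d]|$ (following the slides' convention $x_r = x_l + d$), the binary term is $\lambda |d_{i-1} - d_i|$, and matches that fall off the right scanline get a large fixed cost. All names are hypothetical.

```python
from typing import List, Sequence


def scanline_viterbi(left: Sequence[float], right: Sequence[float], max_disp: int,
                     lam: float = 1.0, out_cost: float = 1e3) -> List[int]:
    """Min-sum Viterbi over disparities 0..max_disp for one pair of epipolar lines."""
    if len(left) == 0:
        return []
    labels = range(max_disp + 1)

    def unary(i: int, d: int) -> float:
        j = i + d  # x_r = x_l + d (positive-disparity convention of the slides)
        return abs(left[i] - right[j]) if j < len(right) else out_cost

    def binary(e: int, d: int) -> float:
        return lam * abs(e - d)  # penalty for a disparity change (occlusion)

    cost = [unary(0, d) for d in labels]  # best path cost ending at pixel 0 with disparity d
    back: List[List[int]] = []            # back[i - 1][d]: best predecessor of d at pixel i
    for i in range(1, len(left)):
        ptr = [min(labels, key=lambda e: cost[e] + binary(e, d)) for d in labels]
        cost = [cost[ptr[d]] + binary(ptr[d], d) + unary(i, d) for d in labels]
        back.append(ptr)

    # Backtrack from the cheapest final state.
    d = min(labels, key=lambda e: cost[e])
    path = [d]
    for ptr in reversed(back):
        d = ptr[d]
        path.append(d)
    return path[::-1]
```

Raising `lam` makes constant disparity (a fronto-parallel surface) cheaper relative to disparity changes, which is the bias noted in the pros and cons of dynamic programming.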
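The ICM procedure on a 4-connected grid can be sketched as below. Several choices here are ours, not the slides': unary costs come as a table `unary[y][x][k]`, the pairwise term is a Potts penalty `lam` between unequal neighbors, pixels start at their cheapest unary label, and a pixel changes only when the new label strictly lowers its local cost, so the total energy never increases. All names are hypothetical.

```python
from typing import List

Table = List[List[List[float]]]  # unary[y][x][k]: cost of label k at pixel (y, x)
Labels = List[List[int]]


def potts(a: int, b: int, lam: float) -> float:
    return 0.0 if a == b else lam


def energy(labels: Labels, unary: Table, lam: float) -> float:
    """Sum of unary terms plus the pairwise term over all 4-connected edges."""
    H, W = len(labels), len(labels[0])
    e = sum(unary[y][x][labels[y][x]] for y in range(H) for x in range(W))
    for y in range(H):
        for x in range(W):
            if x + 1 < W:
                e += potts(labels[y][x], labels[y][x + 1], lam)
            if y + 1 < H:
                e += potts(labels[y][x], labels[y + 1][x], lam)
    return e


def icm(unary: Table, lam: float, max_sweeps: int = 20) -> Labels:
    H, W, K = len(unary), len(unary[0]), len(unary[0][0])
    # Initialization: each pixel takes its cheapest unary label.
    labels = [[min(range(K), key=lambda k: unary[y][x][k]) for x in range(W)]
              for y in range(H)]
    for _ in range(max_sweeps):
        changed = False
        for y in range(H):
            for x in range(W):
                nbrs = [labels[v][u] for v, u in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                        if 0 <= v < H and 0 <= u < W]

                def local(k: int) -> float:
                    # Only the pixel's unary term and its edges to neighbors depend on k.
                    return unary[y][x][k] + sum(potts(k, n, lam) for n in nbrs)

                best = min(range(K), key=local)
                if local(best) < local(labels[y][x]):
                    labels[y][x] = best
                    changed = True
        if not changed:
            break
    return labels
```

On a toy 4 × 4 map with one noisy pixel, ICM removes the isolated label; in general, however, it stops at a local minimum of the energy, which is the weakness that expansion moves address.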
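The metric conditions required for expansion moves can be checked by brute force on a small label set, and the same enumeration gives the factor $2c$ in the α-expansion bound. In this sketch the labels $\{0, \dots, 9\}$ and the threshold `T = 3` are arbitrary illustration choices, and the truncated squared difference is taken as $\min((x - y)^2, T)$, one common form. Function names are hypothetical.

```python
from itertools import product
from typing import Callable, Sequence

Pairwise = Callable[[int, int], float]
T = 3  # truncation threshold (arbitrary, for illustration)


def potts(x: int, y: int) -> float:
    return 0 if x == y else 1


def l1(x: int, y: int) -> float:
    return abs(x - y)


def truncated_l1(x: int, y: int) -> float:
    return min(abs(x - y), T)


def truncated_sq(x: int, y: int) -> float:
    return min((x - y) ** 2, T)


def is_metric(V: Pairwise, labels: Sequence[int]) -> bool:
    """Check V(x, x) = 0, symmetry and the triangle inequality on all label triples."""
    for x, y, z in product(labels, repeat=3):
        if V(x, x) != 0 or V(x, y) != V(y, x) or V(x, y) > V(x, z) + V(z, y):
            return False
    return True


def expansion_factor(V: Pairwise, labels: Sequence[int]) -> float:
    """Return 2c, with c = max V(a, b) / min V(a, b) over a != b."""
    vals = [V(a, b) for a, b in product(labels, repeat=2) if a != b]
    return 2 * max(vals) / min(vals)


if __name__ == "__main__":
    for V in (potts, l1, truncated_l1, truncated_sq):
        print(V.__name__, is_metric(V, range(10)))
```

The truncated squared difference fails at $(0, 2)$: $\min(4, 3) = 3 > 1 + 1$. For the Potts model $c = 1$, so the expansion result is within a factor of 2 of the optimum; for truncated ℓ1 with these labels the factor is $2 \cdot 3 = 6$.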
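The skyline problem is small enough to solve with a plain minimum s-t cut. This sketch makes its own assumptions: "blueness" is an integer score from 0 to 10 per pixel, the sky cost is `10 - blue` and the ground cost is `blue`, the source side of the cut means sky, and max flow is computed with Edmonds-Karp (breadth-first augmenting paths) instead of a dedicated graph-cut library. The vertical rule becomes an infinite-capacity edge from each pixel to the pixel above it. The two binary rules on the slide forbid the same configuration (ground above sky) viewed from either end of a vertical edge, so one edge per pair suffices. All names are hypothetical.

```python
from collections import deque
from typing import Dict, List, Set

INF = float("inf")
Graph = Dict[int, Dict[int, float]]  # cap[u][v]: capacity of edge u -> v


def min_cut_source_side(cap: Graph, s: int, t: int) -> Set[int]:
    """Edmonds-Karp max flow; returns the source side of a minimum cut.

    `cap` is turned into the residual graph in place.
    """
    for u in list(cap):                       # add zero-capacity reverse edges
        for v in list(cap[u]):
            cap.setdefault(v, {}).setdefault(u, 0)
    while True:
        parent = {s: s}
        queue = deque([s])
        while queue and t not in parent:      # BFS for a shortest augmenting path
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            return set(parent)                # nodes reachable from s in the residual graph
        path, v = [], t
        while v != s:
            path.append((parent[v], v))
            v = parent[v]
        f = min(cap[u][v] for u, v in path)   # bottleneck capacity
        for u, v in path:
            cap[u][v] -= f
            cap[v][u] += f


def skyline(blue: List[List[int]]) -> List[int]:
    """Per column, the number of pixels labeled sky (counted from the top row)."""
    H, W = len(blue), len(blue[0])
    s, t = H * W, H * W + 1
    cap: Graph = {s: {}, t: {}}
    for y in range(H):
        for x in range(W):
            p = y * W + x
            cap.setdefault(p, {})
            cap[s][p] = blue[y][x]            # paid if p ends up ground: high when blue
            cap[p][t] = 10 - blue[y][x]       # paid if p ends up sky: low when blue
            if y > 0:
                cap[p][p - W] = INF           # p sky with the pixel above ground is forbidden
    sky = min_cut_source_side(cap, s, t)
    return [sum(1 for y in range(H) if y * W + x in sky) for x in range(W)]
```

For example, `skyline([[9, 9, 9], [9, 2, 9], [1, 1, 8], [1, 7, 2]])` should give `[2, 1, 3]`: the bluish value 7 at the bottom of the middle column stays ground, because labeling it sky would force every pixel above it to be sky as well.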