TESTING FOR THE SIGNIFICANCE OF A SUBSET OF

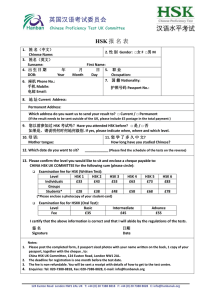


HETEROSCEDASTICITY

Regression of lnsalary on years of experience for professors (use DATA 3-11)

Original equation, with uncorrected HSK

. reg lnsalary years yearssq

      Source |       SS       df       MS              Number of obs =     222
-------------+------------------------------           F(  2,   219) =  126.58
       Model |  10.8438849     2  5.42194244           Prob > F      =  0.0000
    Residual |  9.38050436   219  .042833353           R-squared     =  0.5362
-------------+------------------------------           Adj R-squared =  0.5319
       Total |  20.2243892   221  .091513074           Root MSE      =  .20696

------------------------------------------------------------------------------
    lnsalary |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
-------------+----------------------------------------------------------------
       years |   .0438528   .0048287     9.08   0.000     .0343361    .0533696
     yearssq |  -.0006273   .0001209    -5.19   0.000    -.0008655   -.0003891
       _cons |   3.809365   .0413383    92.15   0.000     3.727894    3.890837
------------------------------------------------------------------------------

Formal Tests of HSK

Run these commands after the regression above:
To carry out White's test: estat imtest, white
To carry out a Breusch-Pagan test: estat hettest, normal (or use the iid or fstat option)
To carry out a specification test for non-linearity: estat ovtest (this is Ramsey's RESET specification error test)

. estat hettest, normal

Breusch-Pagan / Cook-Weisberg test for heteroskedasticity
         Ho: Constant variance
         Variables: fitted values of lnsalary

         chi2(1)      =    16.20
         Prob > chi2  =   0.0001

. estat imtest, white

White's test for Ho: homoskedasticity
         against Ha: unrestricted heteroskedasticity

         chi2(4)      =    20.00
         Prob > chi2  =   0.0005

Both tests reject the null hypothesis of constant variance at the 1% level.

. rvfplot

[Figure: residuals (vertical axis) plotted against fitted values (horizontal axis) after reg lnsalary years yearssq]

An important command to remember is rvfplot. Used after the regression (reg) command, it generates the graph above and gives a visual check for heteroscedasticity. The spread of the residuals changes across the fitted values, which is further evidence of heteroscedasticity.

Methods for Correcting for Heteroscedasticity

The easiest way to correct for HSK when its structure is unknown is to use the command:

. reg Y X1 X2, vce(robust)

This option leaves the OLS coefficient estimates unchanged and replaces the usual standard errors with Eicker-Huber-White heteroscedasticity-robust standard errors. Below is the result of this correction using the robust option.
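The two formal tests and the robust correction can be sketched in Python with NumPy to show what they compute. This is a minimal illustration under stated assumptions, not Stata's implementation. It uses simulated data rather than DATA 3-11, with experience measured in decades to keep the design matrix well conditioned. The helper names ols, chi2_sf, breusch_pagan, white_test and robust_se are hypothetical. Each helper works as follows:

- breusch_pagan follows the textbook normal-errors form of the statistic: half the explained sum of squares from regressing the scaled squared residuals on the fitted values.
- white_test computes n times R-squared from the auxiliary regression.
- robust_se applies the n/(n-k) small-sample factor that Stata's vce(robust) uses with regress.

```python
import math
import numpy as np

def ols(y, X):
    """Least-squares coefficients and residuals (X must already contain a constant column)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta, y - X @ beta

def chi2_sf(x, df):
    """Upper-tail chi-square probability; this sketch only handles df == 1 or even df."""
    if df == 1:
        return math.erfc(math.sqrt(x / 2.0))
    if df % 2 == 0:
        half = x / 2.0
        # P(chi2_df > x) = exp(-x/2) * sum_{k < df/2} (x/2)^k / k!
        return math.exp(-half) * sum(half**k / math.factorial(k) for k in range(df // 2))
    raise ValueError("only df == 1 or even df are supported in this sketch")

def breusch_pagan(u, z):
    """Breusch-Pagan/Cook-Weisberg test, normal-errors version: ESS/2 from regressing
    u^2 / (RSS/n) on a constant and z (here, the fitted values)."""
    n = len(u)
    g = u**2 / (u @ u / n)                      # squared residuals scaled by RSS/n
    Z = np.column_stack([np.ones(n), z])
    _, e = ols(g, Z)
    stat = (np.sum((g - g.mean())**2) - e @ e) / 2.0   # explained sum of squares / 2
    df = Z.shape[1] - 1
    return stat, df, chi2_sf(stat, df)

def white_test(u, X):
    """White's test: n * R^2 from regressing u^2 on the regressors (X without the constant),
    their squares and cross products, dropping exact duplicates."""
    n, k = X.shape
    cols = [X[:, i] for i in range(k)]
    cols += [X[:, i] * X[:, j] for i in range(k) for j in range(i, k)]
    kept = []
    for c in cols:
        if not any(np.allclose(c, d) for d in kept):   # e.g. years*years duplicates yearssq
            kept.append(c)
    Z = np.column_stack([np.ones(n)] + kept)
    g = u**2
    _, e = ols(g, Z)
    stat = n * (1.0 - (e @ e) / np.sum((g - g.mean())**2))
    df = len(kept)
    return stat, df, chi2_sf(stat, df)

def robust_se(X, u):
    """Eicker-Huber-White standard errors with the n/(n-k) factor (HC1)."""
    n, k = X.shape
    bread = np.linalg.inv(X.T @ X)
    meat = X.T @ (X * (u**2)[:, None])
    return np.sqrt(np.diag(bread @ meat @ bread * n / (n - k)))

# Usage on simulated data (NOT DATA 3-11): t is experience in decades, and the
# error standard deviation is made to grow with t.
rng = np.random.default_rng(0)
n = 222
t = rng.uniform(0.0, 4.0, n)
X = np.column_stack([np.ones(n), t, t**2])
y = 3.8 + 0.4 * t - 0.06 * t**2 + rng.normal(0.0, 0.05 + 0.05 * t)
beta, u = ols(y, X)
print("Breusch-Pagan:", breusch_pagan(u, X @ beta))
print("White:", white_test(u, X[:, 1:]))
print("OLS coefficients:", beta, "robust SEs:", robust_se(X, u))
```

The model enters experience both in levels and as a square, so the squared term of the first regressor duplicates the second. White's auxiliary regression is therefore left with four distinct regressors (levels, squares, cubes and fourth powers), which matches the chi2(4) reported by estat imtest. The chi2_sf helper also reproduces the reported p-values: 16.20 on 1 degree of freedom rounds to 0.0001, and 20.00 on 4 degrees of freedom rounds to 0.0005.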
. reg lnsalary years yearssq, vce(robust)

Linear regression                                      Number of obs =     222
                                                       F(  2,   219) =  216.87
                                                       Prob > F      =  0.0000
                                                       R-squared     =  0.5362
                                                       Root MSE      =  .20696

------------------------------------------------------------------------------
             |               Robust
    lnsalary |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
-------------+----------------------------------------------------------------
       years |   .0438528   .0043609    10.06   0.000     .0352582    .0524475
     yearssq |  -.0006273   .0001179    -5.32   0.000    -.0008597    -.000395
       _cons |   3.809365    .026119   145.85   0.000     3.757889    3.860842
------------------------------------------------------------------------------

. display e(r2_a)
.5319428

Since the robust option does not report the adjusted R-squared, an additional command is needed to get it: display e(r2_a). This yields an adjusted R-squared of 0.5319428.

However, using the vce(robust) option is not the same as using Generalized Least Squares (the Weighted Least Squares method). The robust option keeps the OLS coefficients and corrects only the standard errors. GLS, by contrast, reweights the observations, so it is preferred for a full correction of HSK. Here are the steps for Feasible Generalized Least Squares (FGLS) when the HSK structure is unknown:

1) Run the regression and predict uhat (the estimated residuals); call it u.
2) Generate the squared residuals, usq.
3) Run the regression of usq on the independent variables.
4) Predict the fitted values from this regression; call them v.
5) Rerun the original regression using 1/v as analytic weights ([aweight=1/v]). This is equivalent to multiplying each variable, including the constant, by 1/sqrt(v) (see class notes for how this method is derived).

Correcting for HSK: FGLS Method: General Method

. reg lnsalary years yearssq
(output identical to the original regression shown at the top of this section)

. predict u, residual
. gen usq=u^2
. reg usq years yearssq

      Source |       SS       df       MS              Number of obs =     222
-------------+------------------------------           F(  2,   219) =    8.84
       Model |  .080454658     2  .040227329           Prob > F      =  0.0002
    Residual |  .996377813   219   .00454967           R-squared     =  0.0747
-------------+------------------------------           Adj R-squared =  0.0663
       Total |  1.07683247   221  .004872545           Root MSE      =  .06745

------------------------------------------------------------------------------
         usq |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
-------------+----------------------------------------------------------------
       years |   .0060837   .0015737     3.87   0.000     .0029821    .0091853
     yearssq |  -.0001288   .0000394    -3.27   0.001    -.0002065   -.0000512
       _cons |   -.011086   .0134726    -0.82   0.411    -.0376386    .0154665
------------------------------------------------------------------------------

. predict v
(option xb assumed; fitted values)

. reg lnsalary years yearssq [aweight=1/v]
(sum of wgt is 1.9809e+04)

      Source |       SS       df       MS              Number of obs =     219
-------------+------------------------------           F(  2,   216) =  521.24
       Model |  12.4573794     2  6.22868971           Prob > F      =  0.0000
    Residual |  2.58113177   216  .011949684           R-squared     =  0.8284
-------------+------------------------------           Adj R-squared =  0.8268
       Total |  15.0385112   218  .068983996           Root MSE      =  .10931

------------------------------------------------------------------------------
    lnsalary |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
-------------+----------------------------------------------------------------
       years |   .0312545   .0025661    12.18   0.000     .0261968    .0363122
     yearssq |  -.0002426    .000063    -3.85   0.000    -.0003667   -.0001185
       _cons |   3.867963   .0114291   338.43   0.000     3.845436     3.89049
------------------------------------------------------------------------------

Alternatively, one can use the command vwls to correct for HSK:

. gen sqrtusq=sqrt(usq)
. vwls lnsalary years yearssq, sd(sqrtusq)

Variance-weighted least-squares regression           Number of obs   =       222
Goodness-of-fit chi2(219)  =   218.59                Model chi2(2)   =  15451.64
Prob > chi2                =   0.4951                Prob > chi2     =    0.0000

------------------------------------------------------------------------------
    lnsalary |      Coef.   Std. Err.      z    P>|z|     [95% Conf. Interval]
-------------+----------------------------------------------------------------
       years |   .0436123   .0006536    66.73   0.000     .0423313    .0448933
     yearssq |  -.0006114   .0000188   -32.44   0.000    -.0006483   -.0005744
       _cons |   3.807325   .0037622  1011.99   0.000     3.799951    3.814699
------------------------------------------------------------------------------

Notice that the coefficient estimates differ between these two approaches (for example, .0313 versus .0436 on years). In the vwls command above, sd(sqrtusq) gives each observation its own absolute residual as its standard deviation, rather than a fitted value from the auxiliary regression. The vwls command also does not report an adjusted R-squared. I would prefer to use the previous method (FGLS) in steps.

Estimation by FGLS under HSK disturbances

a) Breusch-Pagan Specification
1. Regress the original model, calculate uhat and uhatsq, then regress uhatsq on the known factors causing HSK. This is the auxiliary regression.
2. Type "predict yhat" to get the estimated (fitted) values of uhatsq.
3. The weight (w) used in FGLS estimation is the inverse of the square root of yhat (the fitted uhatsq). Problem: there is no guarantee that yhat will be positive, and if it is not, the square root cannot be taken. If this situation arises, treat negative values as positive (by taking the absolute value) and then take the square root.
4. Multiply each variable by the weight w, including the constant (the transformed constant is w itself).
5. Regress: reg Yw w X1w X2w, noconstant (suppressing the constant term), where Yw is the dependent variable multiplied by w, and X1w and X2w are the regressors multiplied by w.

b) Glejser Specification
1. Regress the original model, calculate uhat and absuhat (gen absuhat=abs(uhat)), then regress absuhat on the known factors causing HSK. This is the auxiliary regression.
2. Type "predict yhat2" to get the estimated (fitted) values of absuhat.
3. The weight (w) used in FGLS estimation is the inverse of yhat2 (the fitted absuhat). No square root is needed, because absuhat is already on the scale of a standard deviation. Problem: there is no guarantee that yhat2 will be positive. If this situation arises, treat negative values as positive (by taking the absolute value).
4. Multiply each variable by the weight w, including the constant.
5. Regress: reg Yw w X1w X2w, noconstant (suppressing the constant term), where Yw is the dependent variable multiplied by w = 1/yhat2.

c) Harvey-Godfrey Specification
1. Regress the original model, calculate uhat and the log of its square (gen lnuhatsq=log(uhat^2)), then regress lnuhatsq on the known factors causing HSK. This is the auxiliary regression.
2. Type "predict yhat3" to get the estimated (fitted) values of lnuhatsq.
3. The weight (w) used in FGLS estimation is the inverse of the square root of the antilog of yhat3, that is, w = 1/sqrt(exp(yhat3)). Taking the antilog means to "exponentiate." There is no problem of negative values, because exponentiation generates only positive values.
4. Multiply each variable by the weight w, including the constant.
5. Regress: reg Yw w X1w X2w, noconstant (suppressing the constant term), where Yw is the dependent variable multiplied by w.

All these methods are alternative ways of correcting for HSK.
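The three specifications differ only in the auxiliary regression and in how its fitted values are turned into the weight w. Below is a minimal Python/NumPy sketch of the two-step procedure. It rests on three assumptions:

- It uses simulated data rather than DATA 3-11.
- The variables thought to cause HSK are the model's own regressors.
- The helper names ols, fgls_weights and fgls are hypothetical.

It illustrates the textbook recipes, not any particular package's implementation.

```python
import numpy as np

def ols(y, X):
    """Least-squares coefficients and residuals."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta, y - X @ beta

def fgls_weights(u, Z, spec):
    """w = 1 / (estimated error standard deviation) from one auxiliary regression.
    Z holds the variables thought to cause HSK, including a constant column."""
    if spec == "breusch-pagan":
        g, _ = ols(u**2, Z)                 # auxiliary regression of uhat^2 on Z
        sd = np.sqrt(np.abs(Z @ g))         # abs() guards against negative fitted variances
    elif spec == "glejser":
        g, _ = ols(np.abs(u), Z)            # auxiliary regression of |uhat| on Z
        sd = np.abs(Z @ g)                  # fitted |uhat| is already a standard deviation
    elif spec == "harvey-godfrey":
        g, _ = ols(np.log(u**2), Z)         # auxiliary regression of ln(uhat^2) on Z
        sd = np.sqrt(np.exp(Z @ g))         # exp() is always positive
    else:
        raise ValueError(f"unknown specification: {spec}")
    return 1.0 / sd

def fgls(y, X, Z, spec):
    """Two-step FGLS: OLS residuals -> weights -> OLS on the w-transformed data.
    X already contains the constant column, which becomes the regressor w, so no
    separate intercept is added (the analogue of Stata's noconstant option)."""
    _, u = ols(y, X)
    w = fgls_weights(u, Z, spec)
    beta_w, _ = ols(y * w, X * w[:, None])
    return beta_w

# Usage on simulated data (NOT DATA 3-11): t is experience in decades, and the
# error standard deviation is made to grow with t.
rng = np.random.default_rng(1)
n = 222
t = rng.uniform(0.0, 4.0, n)
X = np.column_stack([np.ones(n), t, t**2])
y = 3.8 + 0.4 * t - 0.06 * t**2 + rng.normal(0.0, 0.05 + 0.05 * t)
for spec in ("breusch-pagan", "glejser", "harvey-godfrey"):
    print(spec, fgls(y, X, X, spec))
```

Multiplying y and every column of X by w and running OLS gives the same coefficients as weighted least squares with weights w squared. The "breusch-pagan" branch (without the abs() guard) therefore also corresponds to the general method's [aweight=1/v], where v is the fitted squared residual.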