14.1 Least Squares Method

The data in the motivating example can be modeled by

$$y_i = \beta_0 + \beta_1 x_i + \varepsilon_i, \quad i = 1, \ldots, 10.$$

Here $\beta_0$ is the basic quarterly sales, $\beta_1$ reflects the increase or decrease in quarterly sales per unit of student population (1000 students), and the $\varepsilon_i$'s are the unexpected variations or random errors. If $\beta_1$ is positive, quarterly sales increase as the student population increases. Conversely, a negative $\beta_1$ implies that more students might result in lower quarterly sales. Therefore, estimating $\beta_0$ and $\beta_1$ is crucial in determining the relationship between the student population and quarterly sales. The well-known method for estimating $\beta_0$ and $\beta_1$ is the least squares method, proposed by Gauss in the eighteenth century. For the general setting,

$$y_i = \beta_0 + \beta_1 x_i + \varepsilon_i, \quad i = 1, \ldots, n.$$

The least squares method finds the estimates of $\beta_0$ and $\beta_1$, denoted $b_0$ and $b_1$, that minimize the objective function

$$S(\beta_0, \beta_1) = \sum_{i=1}^{n} (y_i - \beta_0 - \beta_1 x_i)^2 = \sum_{i=1}^{n} \varepsilon_i^2.$$

That is, for any values $\tilde{b}_0$ and $\tilde{b}_1$, $S(\tilde{b}_0, \tilde{b}_1) \ge S(b_0, b_1)$. The following explains why the least squares method works from two points of view.

(a) Algebraic point of view:

Suppose $y_i = 60 + 5 x_i + \varepsilon_i$, $i = 1, \ldots, 10$, is the true model for the data in the motivating example. Also, we assume all the random errors $\varepsilon_i$ are very small.

Then, heuristically,

$$S(60, 5) = \sum_{i=1}^{10} (y_i - 60 - 5 x_i)^2 = \sum_{i=1}^{10} (60 + 5 x_i + \varepsilon_i - 60 - 5 x_i)^2 = \sum_{i=1}^{10} \varepsilon_i^2.$$

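However,

$$S(59, 5) = \sum_{i=1}^{10} (y_i - 59 - 5 x_i)^2 = \sum_{i=1}^{10} (60 + 5 x_i + \varepsilon_i - 59 - 5 x_i)^2 = \sum_{i=1}^{10} (1 + \varepsilon_i)^2 > \sum_{i=1}^{10} \varepsilon_i^2,$$

$$S(60, 4) = \sum_{i=1}^{10} (y_i - 60 - 4 x_i)^2 = \sum_{i=1}^{10} (60 + 5 x_i + \varepsilon_i - 60 - 4 x_i)^2 = \sum_{i=1}^{10} (x_i + \varepsilon_i)^2 > \sum_{i=1}^{10} \varepsilon_i^2,$$

and

$$S(59, 4) = \sum_{i=1}^{10} (y_i - 59 - 4 x_i)^2 = \sum_{i=1}^{10} (60 + 5 x_i + \varepsilon_i - 59 - 4 x_i)^2 = \sum_{i=1}^{10} (1 + x_i + \varepsilon_i)^2 > \sum_{i=1}^{10} \varepsilon_i^2.$$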

These four relations suggest that the objective function $S(\beta_0, \beta_1)$ attains its minimum when the parameter estimates equal their true counterparts.

That is, under small random errors (the usual situation in practice), the parameter estimates $b_0$ and $b_1$ should be quite close to the true values of the parameters, which are 60 and 5 in the example above.
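The heuristic comparison above can be checked numerically. The sketch below is illustrative only: the `x` values and the small errors `eps` are made-up numbers (not the motivating example's data), and `S` is a hypothetical helper that evaluates the objective function.

```python
# Hypothetical data: x values and small errors chosen only for illustration.
x = list(range(1, 11))
eps = [0.1, -0.2, 0.05, 0.15, -0.1, 0.2, -0.05, -0.15, 0.1, -0.1]

# Responses generated from the assumed true model y = 60 + 5x + eps.
y = [60 + 5 * xi + ei for xi, ei in zip(x, eps)]


def S(beta0, beta1):
    """Sum of squared deviations of y from the line beta0 + beta1 * x."""
    return sum((yi - beta0 - beta1 * xi) ** 2 for xi, yi in zip(x, y))


# Evaluate the objective at the four parameter pairs compared in the text.
candidates = [(60, 5), (59, 5), (60, 4), (59, 4)]
values = {c: S(*c) for c in candidates}
for c, v in values.items():
    print(c, round(v, 4))

# The pair with the smallest objective value among the four candidates.
best = min(values, key=values.get)
print("smallest S at", best)
```

With errors this small, the true parameter pair gives the smallest value of the four. This only compares four candidates; it does not by itself show that (60, 5) is the exact minimizer for these data.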

(b) Geometric point of view:

The least squares method finds the “best” line, that is, the line to which the data points $(x_1, y_1), (x_2, y_2), \ldots, (x_{10}, y_{10})$ are closest in the sense of the smallest sum of squared vertical distances.

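Now, we demonstrate the procedure to find $b_0$ and $b_1$. In calculus, the maximum or minimum of a function of two variables $f(x, y)$ can be found by first solving

$$\frac{\partial f(x, y)}{\partial x} = 0 \quad \text{and} \quad \frac{\partial f(x, y)}{\partial y} = 0,$$

and then checking whether the Hessian matrix (the matrix of second partial derivatives) is positive definite (a minimum) or negative definite (a maximum). Therefore, we need to find the solutions of the normal equations:

$$\frac{\partial S(\beta_0, \beta_1)}{\partial \beta_0} = 0 \quad \text{and} \quad \frac{\partial S(\beta_0, \beta_1)}{\partial \beta_1} = 0.$$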

It is quite complicated to find the solutions of the above equations directly.

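However, it is much easier if we let $\beta_0^* = \beta_0 + \beta_1 \bar{x}$, where $\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i$, and thus

$$
\begin{aligned}
S(\beta_0, \beta_1) &= \sum_{i=1}^{n} (y_i - \beta_0 - \beta_1 x_i)^2 \\
&= \sum_{i=1}^{n} \bigl(y_i - (\beta_0 + \beta_1 \bar{x}) - \beta_1 (x_i - \bar{x})\bigr)^2 \\
&= \sum_{i=1}^{n} \bigl(y_i - \beta_0^* - \beta_1 (x_i - \bar{x})\bigr)^2 = S^*(\beta_0^*, \beta_1).
\end{aligned}
$$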
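As a quick sanity check of this reparametrization, the sketch below evaluates both forms of the objective on a small made-up data set. The data, the trial values, and the helper names `S` and `S_star` are assumptions introduced only for illustration.

```python
# Hypothetical data, used only to check the algebraic identity numerically.
x = [1.0, 2.0, 4.0, 7.0]
y = [3.0, 2.5, 6.0, 8.0]
x_bar = sum(x) / len(x)


def S(beta0, beta1):
    """Original objective: sum of (y_i - beta0 - beta1 * x_i)^2."""
    return sum((yi - beta0 - beta1 * xi) ** 2 for xi, yi in zip(x, y))


def S_star(beta0_star, beta1):
    """Centered objective: sum of (y_i - beta0_star - beta1 * (x_i - x_bar))^2."""
    return sum((yi - beta0_star - beta1 * (xi - x_bar)) ** 2
               for xi, yi in zip(x, y))


beta0, beta1 = 1.5, 0.8  # arbitrary trial values
lhs = S(beta0, beta1)
rhs = S_star(beta0 + beta1 * x_bar, beta1)  # beta0* = beta0 + beta1 * x_bar

# The two values agree up to floating-point rounding.
print(abs(lhs - rhs) < 1e-12)
```

The identity holds for any trial values, so minimizing the centered form and converting back loses nothing.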

Then, we find $b_0^*$ and $b_1$ minimizing $S^*(\beta_0^*, \beta_1)$, and $b_0$ can be obtained from the equation $b_0 = b_0^* - b_1 \bar{x}$.

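$$\frac{\partial S^*(\beta_0^*, \beta_1)}{\partial \beta_0^*} = \frac{\partial}{\partial \beta_0^*} \left( \sum_{i=1}^{n} \bigl(y_i - \beta_0^* - \beta_1 (x_i - \bar{x})\bigr)^2 \right) = -2 \sum_{i=1}^{n} \bigl(y_i - \beta_0^* - \beta_1 (x_i - \bar{x})\bigr) = 0.$$

Since

$$\sum_{i=1}^{n} \beta_1 (x_i - \bar{x}) = \beta_1 \sum_{i=1}^{n} (x_i - \bar{x}) = \beta_1 \left( \sum_{i=1}^{n} x_i - n \bar{x} \right) = \beta_1 \left( \sum_{i=1}^{n} x_i - \sum_{i=1}^{n} x_i \right) = 0,$$

we have

$$-2 \sum_{i=1}^{n} \bigl(y_i - \beta_0^* - \beta_1 (x_i - \bar{x})\bigr) = -2 \sum_{i=1}^{n} (y_i - \beta_0^*) = -2 \left( \sum_{i=1}^{n} y_i - n \beta_0^* \right) = 0$$

$$\Longrightarrow \quad b_0^* = \frac{\sum_{i=1}^{n} y_i}{n} = \bar{y}.$$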

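$$\frac{\partial S^*(b_0^*, \beta_1)}{\partial \beta_1} = \frac{\partial}{\partial \beta_1} \left( \sum_{i=1}^{n} \bigl(y_i - \bar{y} - \beta_1 (x_i - \bar{x})\bigr)^2 \right) = -2 \sum_{i=1}^{n} \bigl(y_i - \bar{y} - \beta_1 (x_i - \bar{x})\bigr)(x_i - \bar{x}) = 0$$

$$\Longrightarrow \quad \sum_{i=1}^{n} (y_i - \bar{y})(x_i - \bar{x}) - \beta_1 \sum_{i=1}^{n} (x_i - \bar{x})^2 = 0$$

$$\Longrightarrow \quad b_1 = \frac{\sum_{i=1}^{n} (y_i - \bar{y})(x_i - \bar{x})}{\sum_{i=1}^{n} (x_i - \bar{x})^2} = \frac{s_{XY}}{s_{XX}},$$

where

$$s_{XX} = \sum_{i=1}^{n} (x_i - \bar{x})^2 = \sum_{i=1}^{n} x_i^2 - n \bar{x}^2, \qquad s_{XY} = \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y}) = \sum_{i=1}^{n} x_i y_i - n \bar{x} \bar{y}.$$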

Thus, $b_0 = b_0^* - b_1 \bar{x} = \bar{y} - b_1 \bar{x}$.
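The derivation above uses only the first-order conditions. As a sketch of the second-order (Hessian) check mentioned earlier, written in the centered parametrization and using only symbols already defined:

$$\frac{\partial^2 S^*}{\partial \beta_0^{*2}} = 2n, \qquad \frac{\partial^2 S^*}{\partial \beta_1^2} = 2 \sum_{i=1}^{n} (x_i - \bar{x})^2 = 2 s_{XX}, \qquad \frac{\partial^2 S^*}{\partial \beta_0^* \, \partial \beta_1} = 2 \sum_{i=1}^{n} (x_i - \bar{x}) = 0.$$

The Hessian is therefore the diagonal matrix with entries $2n$ and $2 s_{XX}$, which is positive definite whenever $s_{XX} > 0$, that is, whenever the $x_i$ are not all equal. Under that condition the stationary point $(b_0^*, b_1)$ is a minimum.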

The fitted regression equation (the fitted line) is

$$\hat{y} = b_0 + b_1 x = \bar{y} + \frac{s_{XY}}{s_{XX}} (x - \bar{x}).$$

The fitted value for the $i$th observation is $\hat{y}_i = b_0 + b_1 x_i$.

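In the motivating example,

$$\sum_{i=1}^{10} x_i = 140, \quad \sum_{i=1}^{10} y_i = 1300, \quad \sum_{i=1}^{10} x_i^2 = 2528, \quad \sum_{i=1}^{10} y_i^2 = 184730, \quad \bar{x} = 14, \quad \bar{y} = 130, \quad \sum_{i=1}^{10} x_i y_i = 21040.$$

Thus,

$$s_{XY} = 21040 - 10 \cdot 14 \cdot 130 = 2840 \quad \text{and} \quad s_{XX} = 2528 - 10 \cdot 14^2 = 568,$$

$$b_1 = \frac{s_{XY}}{s_{XX}} = \frac{2840}{568} = 5 \quad \text{and} \quad b_0 = \bar{y} - b_1 \bar{x} = 130 - 5 \cdot 14 = 60.$$

Therefore, $\hat{y} = 60 + 5x$ is the fitted regression equation.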

That is, an increase in the student population of 1000 is associated with an increase of $5000 in expected quarterly sales, i.e., quarterly sales are expected to increase by $5 per student.
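The arithmetic for the motivating example can be reproduced from the summary sums alone. The following is a minimal sketch; the function name `fit_from_sums` and its parameter names are hypothetical, introduced here for illustration.

```python
def fit_from_sums(n, sum_x, sum_y, sum_x2, sum_xy):
    """Return (b0, b1) computed from summary sums via b1 = s_XY / s_XX."""
    x_bar = sum_x / n
    y_bar = sum_y / n
    s_xx = sum_x2 - n * x_bar ** 2          # sum of squares of x about its mean
    s_xy = sum_xy - n * x_bar * y_bar       # sum of cross-products about the means
    b1 = s_xy / s_xx
    b0 = y_bar - b1 * x_bar
    return b0, b1


# Summary sums quoted in the text for the motivating example (n = 10).
b0, b1 = fit_from_sums(n=10, sum_x=140, sum_y=1300, sum_x2=2528, sum_xy=21040)
print(b0, b1)  # 60.0 5.0
```

Note that the sum of squared $y_i$ values is not needed for the estimates themselves.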

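Example 1:

| $x_i$ | $y_i$ |
|---|---|
| 0 | -2 |
| 2 | 0 |
| 2 | 2 |
| 5 | 1 |
| 5 | 3 |
| 9 | 1 |
| 9 | 0 |
| 9 | 0 |
| 9 | 1 |
| 10 | -1 |

Please fit the model $Y = \beta_0 + \beta_1 X + \varepsilon$.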

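[sol]:

$$\sum_{i=1}^{10} x_i = 60, \quad \sum_{i=1}^{10} y_i = 5, \quad \sum_{i=1}^{10} x_i^2 = 482, \quad \sum_{i=1}^{10} y_i^2 = 21, \quad \bar{x} = 6, \quad \bar{y} = 0.5, \quad \sum_{i=1}^{10} x_i y_i = 32.$$

Thus,

$$s_{XY} = 32 - 10 \cdot 6 \cdot 0.5 = 2 \quad \text{and} \quad s_{XX} = 482 - 10 \cdot 36 = 122,$$

$$b_1 = \frac{s_{XY}}{s_{XX}} = \frac{2}{122} = \frac{1}{61} \quad \text{and} \quad b_0 = \bar{y} - b_1 \bar{x} = 0.5 - \frac{6}{61} = \frac{49}{122}.$$

Therefore, $\hat{y} = \frac{49}{122} + \frac{x}{61}$ is the fitted regression equation.

The fitted value of the first observation is

$$\hat{y}_1 = \frac{49}{122} + \frac{x_1}{61} = \frac{49}{122} + \frac{0}{61} = \frac{49}{122}.$$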
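The fractions in this solution can be verified exactly. The sketch below uses Python's standard `fractions` module and the ten $(x_i, y_i)$ pairs as read from the Example 1 data, which reproduce the sums quoted in the solution; the variable names are illustrative.

```python
from fractions import Fraction

# (x_i, y_i) pairs as read from the Example 1 data.
data = [(0, -2), (2, 0), (2, 2), (5, 1), (5, 3),
        (9, 1), (9, 0), (9, 0), (9, 1), (10, -1)]

n = len(data)
x_bar = Fraction(sum(x for x, _ in data), n)
y_bar = Fraction(sum(y for _, y in data), n)

# Centered sums of squares and cross-products, kept as exact fractions.
s_xx = sum((x - x_bar) ** 2 for x, _ in data)
s_xy = sum((x - x_bar) * (y - y_bar) for x, y in data)

b1 = s_xy / s_xx
b0 = y_bar - b1 * x_bar
fitted_first = b0 + b1 * data[0][0]  # fitted value at x_1 = 0

print(b1, b0, fitted_first)  # 1/61 49/122 49/122
```

Exact rational arithmetic avoids any rounding, so the output can be compared directly with the hand computation.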

Note:

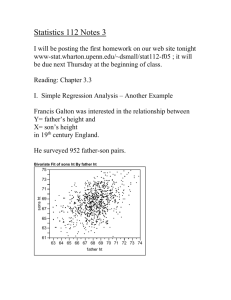

Sir Francis Galton (1822-1911) was responsible for the introduction of the word “regression”. Originally he used the term “reversion” in an unpublished address. He investigated the relationship between the child’s height (y) and parent’s height (x). The fitted regression equation he obtained was something like

$$\hat{y} = \bar{y} + \frac{2}{3} (x - \bar{x}).$$

Example 2:

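Suppose the model is $y_i = \beta_0 + \beta_1 x_i + \varepsilon_i$, $i = 1, \ldots, 20$, $\varepsilon_i \sim N(0, \sigma^2)$, and

$$\sum_{i=1}^{20} x_i = 1330, \quad \sum_{i=1}^{20} y_i = 1862.8, \quad \sum_{i=1}^{20} x_i^2 = 90662, \quad \sum_{i=1}^{20} y_i^2 = 173554.26, \quad \sum_{i=1}^{20} x_i y_i = 124206.9.$$

Find the least squares estimates and the fitted regression equation.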

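[solution:]

$$s_{XX} = \sum_{i=1}^{20} x_i^2 - 20 \bar{x}^2 = 90662 - 20 \left( \frac{1330}{20} \right)^2 = 2217,$$

$$s_{XY} = \sum_{i=1}^{20} x_i y_i - 20 \bar{x} \bar{y} = 124206.9 - 20 \cdot \frac{1330}{20} \cdot \frac{1862.8}{20} = 330.7.$$

Then, the least squares estimates are

$$b_1 = \frac{s_{XY}}{s_{XX}} = \frac{330.7}{2217} \approx 0.149, \qquad b_0 = \bar{y} - b_1 \bar{x} = \frac{1862.8}{20} - 0.149 \cdot \frac{1330}{20} \approx 83.232.$$

The fitted regression equation is

$$\hat{y} = 83.232 + 0.149 x.$$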
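The Example 2 computation can be reproduced from the given sums. The sketch below (variable names are illustrative) also shows how sensitive the intercept is to rounding: the value 83.232 results from rounding the slope to 0.149 before computing the intercept, whereas carrying the slope at full precision gives roughly 83.22.

```python
# Summary sums given in Example 2 (n = 20).
n = 20
sum_x, sum_y = 1330, 1862.8
sum_x2, sum_xy = 90662, 124206.9

x_bar = sum_x / n
y_bar = sum_y / n
s_xx = sum_x2 - n * x_bar ** 2
s_xy = sum_xy - n * x_bar * y_bar

b1 = s_xy / s_xx
b0_full = y_bar - b1 * x_bar                # slope kept at full precision
b0_rounded = y_bar - round(b1, 3) * x_bar   # slope rounded to three decimals first

print(round(s_xx, 1), round(s_xy, 1))
print(round(b1, 3), round(b0_full, 2), round(b0_rounded, 2))
```

Either intercept is defensible as long as the rounding convention is stated; the difference is about 0.01 here.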

Online Exercise:

Exercise 14.1.1
