Notes 15 - Wharton Statistics Department

advertisement

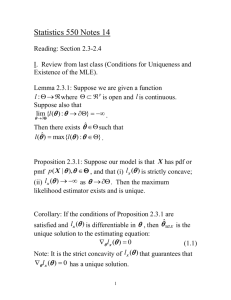

Statistics 550 Notes 15

Reading: Section 2.4, 3.1-3.2

I. Numerical Methods for Finding MLEs

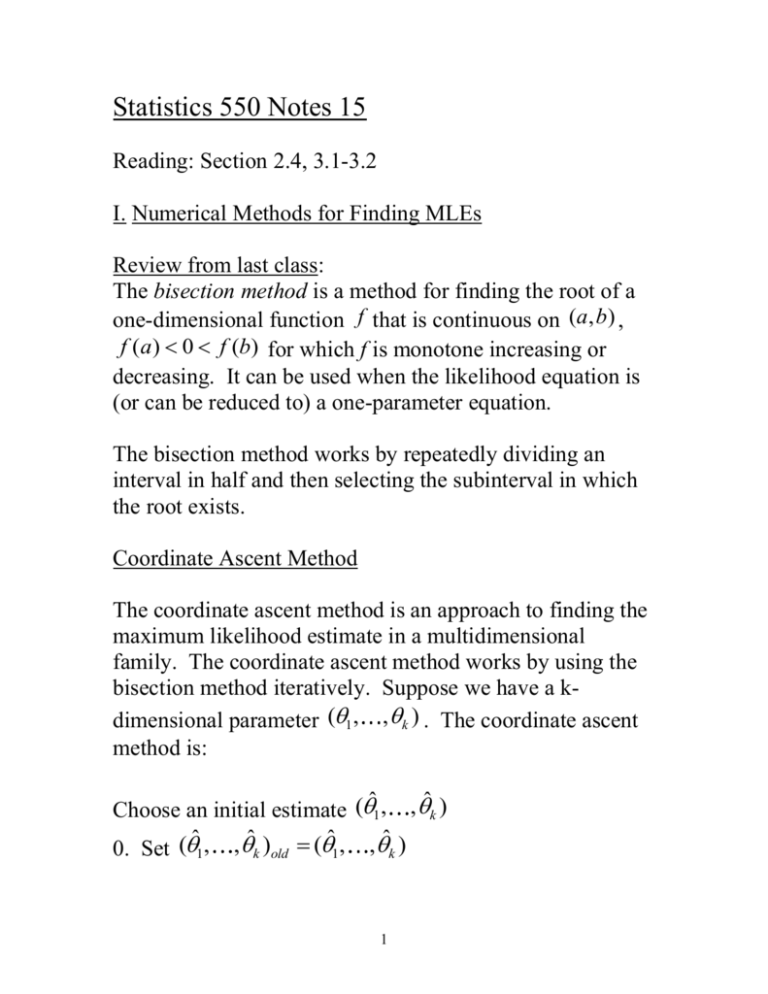

Review from last class:

The bisection method is a method for finding the root of a one-dimensional function $f$ that is continuous on $(a, b)$, satisfies $f(a) < 0 < f(b)$, and is monotone increasing (for a monotone decreasing $f$ the inequalities are reversed). It can be used when the likelihood equation is (or can be reduced to) a one-parameter equation.

The bisection method works by repeatedly dividing an

interval in half and then selecting the subinterval in which

the root exists.
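
The halving step can be sketched in a few lines of R. This is a minimal illustration, not code from the course: the helper name `bisect`, the tolerance, and the four data values are all assumptions made for the example. It solves the likelihood equation $n/\lambda - \sum x_i = 0$ for an exponential rate $\lambda$, whose root is known to be $1/\bar{x}$.

```r
# Minimal bisection sketch (hypothetical helper, not from the notes).
# Assumes f is continuous on [a, b] and f(a), f(b) have opposite signs.
bisect <- function(f, a, b, tol = 1e-8, maxit = 200) {
  fa <- f(a)
  fb <- f(b)
  if (fa * fb > 0) stop("f(a) and f(b) must have opposite signs")
  for (it in seq_len(maxit)) {
    mid <- (a + b) / 2
    fmid <- f(mid)
    # Stop when the midpoint is an exact root or the bracket is small enough
    if (fmid == 0 || (b - a) / 2 < tol) return(mid)
    if (fa * fmid < 0) {
      b <- mid            # sign change in [a, mid]: keep the left half
    } else {
      a <- mid            # otherwise keep the right half
      fa <- fmid
    }
  }
  (a + b) / 2
}

# Hypothetical data, used only to illustrate the call
x <- c(0.5, 1.2, 0.3, 2.0)
# Derivative of the exponential log likelihood in the rate lambda
score <- function(lambda) length(x) / lambda - sum(x)
lambda_hat <- bisect(score, 0.01, 10)
```

Because the closed-form answer $1/\bar{x}$ is available here, the result of `bisect` can be checked directly against `1 / mean(x)`.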

Coordinate Ascent Method

The coordinate ascent method is an approach to finding the

maximum likelihood estimate in a multidimensional

family. The coordinate ascent method works by using the

bisection method iteratively. Suppose we have a $k$-dimensional parameter $(\theta_1, \ldots, \theta_k)$. The coordinate ascent method is:

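Choose an initial estimate $(\hat{\theta}_1, \ldots, \hat{\theta}_k)$.

0. Set $(\hat{\theta}_1, \ldots, \hat{\theta}_k)_{old} = (\hat{\theta}_1, \ldots, \hat{\theta}_k)$.

1. Maximize $l_x(\theta_1, \hat{\theta}_2, \ldots, \hat{\theta}_k)$ over $\theta_1$ using the bisection method by solving $\frac{\partial}{\partial \theta_1} l_x(\theta_1, \hat{\theta}_2, \ldots, \hat{\theta}_k) = 0$ (assuming the log likelihood is differentiable). Reset $\hat{\theta}_1$ to the $\theta_1$ that maximizes $l_x(\theta_1, \hat{\theta}_2, \ldots, \hat{\theta}_k)$.

2. Maximize $l_x(\hat{\theta}_1, \theta_2, \hat{\theta}_3, \ldots, \hat{\theta}_k)$ over $\theta_2$ using the bisection method. Reset $\hat{\theta}_2$ to the $\theta_2$ that maximizes $l_x(\hat{\theta}_1, \theta_2, \hat{\theta}_3, \ldots, \hat{\theta}_k)$.

....

K. Maximize $l_x(\hat{\theta}_1, \hat{\theta}_2, \hat{\theta}_3, \ldots, \hat{\theta}_{k-1}, \theta_k)$ over $\theta_k$ using the bisection method. Reset $\hat{\theta}_k$ to the $\theta_k$ that maximizes $l_x(\hat{\theta}_1, \hat{\theta}_2, \hat{\theta}_3, \ldots, \hat{\theta}_{k-1}, \theta_k)$.

K+1. Stop if the distance between $(\hat{\theta}_1, \ldots, \hat{\theta}_k)_{old}$ and $(\hat{\theta}_1, \ldots, \hat{\theta}_k)$ is less than some tolerance $\epsilon$. Otherwise return to step 0.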

The coordinate ascent method converges to the maximum

likelihood estimate when the log likelihood function is

strictly concave on the parameter space. See Figure 2.4.1

in Bickel and Doksum.

Example: Beta Distribution

$$p(x \mid r, s) = \frac{\Gamma(r+s)}{\Gamma(r)\Gamma(s)} x^{r-1}(1-x)^{s-1} = \exp\{(r-1)\log x + (s-1)\log(1-x) - \log\Gamma(r) - \log\Gamma(s) + \log\Gamma(r+s)\}$$

for $0 < x < 1$ and $\Theta = \{(r, s) : 0 < r < \infty, 0 < s < \infty\}$.

This is a two parameter full exponential family and hence

the log likelihood is strictly concave.

We found the method of moments estimates in Homework

5.

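For $X_1, \ldots, X_n$ iid Beta($r, s$),

$$l_x(r, s) = (r-1)\sum_{i=1}^n \log X_i + (s-1)\sum_{i=1}^n \log(1 - X_i) - n\log\Gamma(r) - n\log\Gamma(s) + n\log\Gamma(r+s)$$

$$\frac{\partial l}{\partial r} = \sum_{i=1}^n \log X_i - n\frac{\partial \log\Gamma(r)}{\partial r} + n\frac{\partial \log\Gamma(r+s)}{\partial r}$$

$$\frac{\partial l}{\partial s} = \sum_{i=1}^n \log(1 - X_i) - n\frac{\partial \log\Gamma(s)}{\partial s} + n\frac{\partial \log\Gamma(r+s)}{\partial s}$$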
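
The derivatives of $\log\Gamma$ in these expressions are digamma functions. Writing $\psi(t) = \frac{d}{dt}\log\Gamma(t)$ (standard notation, introduced here only for illustration), the two likelihood equations can be restated as below. R exposes $\psi$ as `digamma()`.

```latex
\begin{align*}
\frac{\partial l}{\partial r} &= \sum_{i=1}^n \log X_i - n\,\psi(r) + n\,\psi(r+s) = 0, \\
\frac{\partial l}{\partial s} &= \sum_{i=1}^n \log(1 - X_i) - n\,\psi(s) + n\,\psi(r+s) = 0.
\end{align*}
```

Each equation is one-dimensional once the other parameter is held fixed, which is what lets a coordinate ascent step be carried out with a one-dimensional root search.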

For

X = (0.3184108, 0.3875947, 0.7411803, 0.4044642, 0.7240628, 0.7247060, 0.1091041, 0.1388588, 0.7347975, 0.5138287, 0.2683177, 0.4685777, 0.1746448, 0.2779592, 0.2876237, 0.5833377, 0.5847999, 0.2530112, 0.5018544, 0.5295680)

R code for finding the MLE:

# Code for beta distribution MLE
# xvec stores the data
# rhatcurr, shatcurr store current estimates of r and s

# Generate data from Beta(r=2,s=3) distribution
xvec=rbeta(20,2,3);

# Set low and high starting values for the bisection searches
rhatlow=.001;
rhathigh=20;
shatlow=.001;
shathigh=20;

# Use method of moments for starting values
rhatcurr=mean(xvec)*(mean(xvec)-mean(xvec^2))/(mean(xvec^2)-mean(xvec)^2);
shatcurr=((1-mean(xvec))*(mean(xvec)-mean(xvec^2)))/(mean(xvec^2)-mean(xvec)^2);
rhatiters=rhatcurr;
shatiters=shatcurr;

# Partial derivative of the log likelihood with respect to r
derivrfunc=function(r,s,xvec){
  n=length(xvec);
  sum(log(xvec))-n*digamma(r)+n*digamma(r+s);
}

# Partial derivative of the log likelihood with respect to s
derivsfunc=function(s,r,xvec){
  n=length(xvec);
  sum(log(1-xvec))-n*digamma(s)+n*digamma(r+s);
}

# Alternate one-dimensional root searches in r and s until
# the estimates move by less than toler
dist=1;
toler=.0001;
while(dist>toler){
  rhatnew=uniroot(derivrfunc,c(rhatlow,rhathigh),s=shatcurr,xvec=xvec)$root;
  shatnew=uniroot(derivsfunc,c(shatlow,shathigh),r=rhatnew,xvec=xvec)$root;
  dist=sqrt((rhatnew-rhatcurr)^2+(shatnew-shatcurr)^2);
  rhatcurr=rhatnew;
  shatcurr=shatnew;
  # Keep the history of iterates
  rhatiters=c(rhatiters,rhatcurr);
  shatiters=c(shatiters,shatcurr);
}

rhatmle=rhatcurr;
shatmle=shatcurr;

Newton’s Method

Newton's method is a numerical method for approximating solutions to equations. The method produces a sequence of values $\theta^{(0)}, \theta^{(1)}, \ldots$ that, under ideal conditions, converges to the MLE $\hat{\theta}_{MLE}$.

To motivate the method, we expand the derivative of the log likelihood around $\theta^{(j)}$:

$$0 = l'(\hat{\theta}_{MLE}) \approx l'(\theta^{(j)}) + (\hat{\theta}_{MLE} - \theta^{(j)})\, l''(\theta^{(j)})$$

Solving for $\hat{\theta}_{MLE}$ gives

$$\hat{\theta}_{MLE} \approx \theta^{(j)} - \frac{l'(\theta^{(j)})}{l''(\theta^{(j)})}$$

This suggests the following iterative scheme:

$$\theta^{(j+1)} = \theta^{(j)} - \frac{l'(\theta^{(j)})}{l''(\theta^{(j)})}.$$

Newton's method can be extended to more than one dimension (usually called Newton-Raphson):

$$\theta^{(j+1)} = \theta^{(j)} - \left[\ddot{l}(\theta^{(j)})\right]^{-1} \dot{l}(\theta^{(j)})$$

where $\dot{l}$ denotes the gradient vector of the log likelihood and $\ddot{l}$ denotes the Hessian.
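
As a rough sketch of the multidimensional update, the Newton-Raphson iteration can be applied to the Beta log likelihood using the 20 observations listed earlier in these notes. The function names `grad` and `hess`, the iteration cap, and the stopping threshold are assumptions made for this illustration. The Hessian entries use `trigamma()`, the derivative of `digamma()`.

```r
# Data listed earlier in these notes
xvec <- c(0.3184108, 0.3875947, 0.7411803, 0.4044642, 0.7240628,
          0.7247060, 0.1091041, 0.1388588, 0.7347975, 0.5138287,
          0.2683177, 0.4685777, 0.1746448, 0.2779592, 0.2876237,
          0.5833377, 0.5847999, 0.2530112, 0.5018544, 0.5295680)
n <- length(xvec)

# Gradient of the Beta log likelihood at p = (r, s)
grad <- function(p) {
  r <- p[1]; s <- p[2]
  c(sum(log(xvec))     - n * digamma(r) + n * digamma(r + s),
    sum(log(1 - xvec)) - n * digamma(s) + n * digamma(r + s))
}

# Hessian of the Beta log likelihood at p = (r, s)
hess <- function(p) {
  r <- p[1]; s <- p[2]
  t_rs <- n * trigamma(r + s)
  matrix(c(t_rs - n * trigamma(r), t_rs,
           t_rs, t_rs - n * trigamma(s)), nrow = 2)
}

# Method of moments starting values, as in the coordinate ascent code
m1 <- mean(xvec); m2 <- mean(xvec^2)
theta <- c(m1, 1 - m1) * (m1 - m2) / (m2 - m1^2)

# Newton-Raphson: theta <- theta - H^{-1} gradient
for (j in 1:50) {
  step <- solve(hess(theta), grad(theta))  # solves H %*% step = gradient
  theta <- theta - step
  if (sqrt(sum(step^2)) < 1e-10) break     # stop when the update is negligible
}
theta_mle <- theta   # c(rhat, shat)
```

Using `solve(H, g)` rather than forming the inverse Hessian explicitly is a common numerical choice; either form implements the same update.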

Comments on methods for finding the MLE:

1. The bisection method is guaranteed to converge if there

is a unique root in the interval being searched over but is

slower than Newton’s method.

2. Newton’s method:

A. The method does not work if $l''(\theta^{(j)}) = 0$.

B. The method does not always converge.

See attached pages from Numerical Recipes in C book.

3. For the coordinate ascent method and Newton’s method,

a good choice of starting values is often the method of

moments estimator.

4. When there are multiple roots to the likelihood equation,

the solution found by the bisection method, the coordinate

ascent method and Newton’s method depends on the

starting value. These algorithms might converge to a local

maximum (or a saddlepoint) rather than a global maximum.

5. The EM (Expectation/Maximization) algorithm (Section

2.4.4) is another approach to finding the MLE that is

particularly suitable when part of the data is missing.

Example of nonconcave likelihood:

MLE for Cauchy Distribution

Cauchy model:

$$p(x \mid \theta) = \frac{1}{\pi(1 + (x - \theta)^2)}, \quad -\infty < x < \infty$$

Suppose $X_1, X_2, X_3$ are iid Cauchy($\theta$) and we observe $X_1 = 0, X_2 = 1, X_3 = 10$.

Log likelihood is not concave and has two local maxima

between 0 and 10. There is also a local minimum.

The likelihood equation is

$$l_x'(\theta) = \sum_{i=1}^{3} \frac{2(x_i - \theta)}{1 + (x_i - \theta)^2} = 0$$

The local maximum (i.e., the solution to the likelihood

equation) that the bisection method finds depends on the

interval searched over.

R program to use bisection method

derivloglikfunc=function(theta,x1,x2,x3){

dloglikx1=2*(x1-theta)/(1+(x1-theta)^2);

dloglikx2=2*(x2-theta)/(1+(x2-theta)^2);

dloglikx3=2*(x3-theta)/(1+(x3-theta)^2);

dloglikx1+dloglikx2+dloglikx3;

}

When the starting points for the bisection method are $x_0 = 0, x_1 = 5$, the bisection method finds the MLE:

uniroot(derivloglikfunc,interval=c(0,5),x1=0,x2=1,x3=10);

$root

[1] 0.6092127

When the starting points for the bisection method are $x_0 = 0, x_1 = 10$, the bisection method finds a local maximum but not the MLE:

uniroot(derivloglikfunc,interval=c(0,10),x1=0,x2=1,x3=10);

$root

[1] 9.775498
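
To see why only one of the two reported roots is the MLE, the log likelihood itself can be evaluated at each. The sketch below is an illustration rather than part of the original program: the names `loglik`, `dloglik`, `theta_a`, and `theta_b` are assumptions, and the two root values are copied from the output above. Note also that R's `uniroot` is based on Brent's root-finding algorithm rather than pure bisection, so the root it returns for a given interval need not match the one a textbook bisection would reach.

```r
x <- c(0, 1, 10)

# Cauchy log likelihood, up to the additive constant -3 * log(pi)
loglik <- function(theta) -sum(log(1 + (x - theta)^2))

# Derivative of the log likelihood (the likelihood equation sets this to 0)
dloglik <- function(theta) sum(2 * (x - theta) / (1 + (x - theta)^2))

theta_a <- 0.6092127   # root reported for the interval (0, 5)
theta_b <- 9.775498    # root reported for the interval (0, 10)

# Both values should nearly satisfy the likelihood equation
c(dloglik(theta_a), dloglik(theta_b))

# The root with the larger log likelihood is the better candidate
c(loglik(theta_a), loglik(theta_b))

# Coarse grid search as an independent check on the global maximum
grid <- seq(-5, 15, by = 0.001)
theta_grid <- grid[which.max(sapply(grid, loglik))]
```

Comparing log likelihood values at every root found (or running a coarse grid search first) is a simple safeguard when the log likelihood is not concave.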

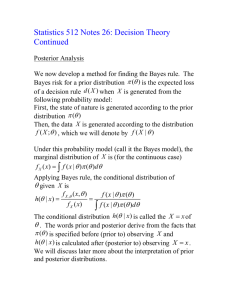

II. Bayes Procedures (Chapter 3.2)

In Chapter 3, we return to the theme of Section 1.3 which is

how to appraise and select among point estimators and

decision procedures. We now discuss how the Bayes

criteria for choosing decision procedures can be

implemented.

Review of the Bayes criteria:

Suppose a person's prior distribution about $\theta$ is $\pi(\theta)$ and the probability distribution for the data is that $X \mid \theta$ has probability density function (or probability mass function) $p(x \mid \theta)$.

This can be viewed as a model in which $\theta$ is a random variable and the joint pdf of $X, \theta$ is $\pi(\theta) p(x \mid \theta)$.

The Bayes risk of a decision procedure $\delta$ for a prior distribution $\pi(\theta)$, denoted by $r_\pi(\delta)$, is the expected value of the loss function over the joint distribution of $(X, \theta)$, which is the expected value of the risk function over the prior distribution of $\theta$:

$$r_\pi(\delta) = E_\pi[E[l(\theta, \delta(X)) \mid \theta]] = E_\pi[R(\theta, \delta)].$$

For a person with prior distribution $\pi(\theta)$, the decision procedure $\delta$ which minimizes $r_\pi(\delta)$ minimizes the expected loss and is the best procedure from this person's point of view. The decision procedure which minimizes the Bayes risk for a prior $\pi(\theta)$ is called the Bayes rule for the prior $\pi(\theta)$.

A nonsubjective interpretation of the Bayes criteria: The Bayes approach leads us to compare procedures on the basis of

$$r_\pi(\delta) = \sum_\theta R(\theta, \delta)\pi(\theta)$$

if $\theta$ is discrete with frequency function $\pi(\theta)$ or

$$r_\pi(\delta) = \int R(\theta, \delta)\pi(\theta)\,d\theta$$

if $\theta$ is continuous with density $\pi(\theta)$.

Such comparisons make sense even if we do not interpret $\pi(\theta)$ as a prior density or frequency, but only as a weight function that reflects the importance we place on doing well at the different possible values of $\theta$.

Computing Bayes estimators: In Chapter 1.3, we found the

Bayes decision procedure by computing the Bayes risk for

each decision procedure. This is usually an impossible

task. We now provide a constructive method for finding

the Bayes decision procedure.

Recall from Chapter 1.2 that the posterior distribution is the subjective probability distribution for the parameter after seeing the data $x$:

$$p(\theta \mid x) = \frac{p(x \mid \theta)\pi(\theta)}{\int p(x \mid \theta)\pi(\theta)\,d\theta}$$

The posterior risk of an action $a$ is the expected loss from taking action $a$ under the posterior distribution $p(\theta \mid x)$:

$$r(a \mid x) = E_{p(\theta \mid x)}[l(\theta, a)].$$

The following proposition shows that a decision procedure

which chooses the action that minimizes the posterior risk

for each sample x is a Bayes decision procedure.

Proposition 3.2.1: Suppose there exists a function $\delta^*(x)$ such that

$$r(\delta^*(x) \mid x) = \inf\{r(a \mid x) : a \in \mathcal{A}\} \tag{1.1}$$

where $\mathcal{A}$ denotes the action space, then $\delta^*(x)$ is a Bayes rule.

Proof: For any decision rule $\delta$, we have

$$r(\pi, \delta) = E[l(\theta, \delta(X))] = E[E[l(\theta, \delta(X)) \mid X]] \tag{1.2}$$

By (1.1),

$$E[l(\theta, \delta(X)) \mid X = x] = r(\delta(x) \mid x) \ge r(\delta^*(x) \mid x) = E[l(\theta, \delta^*(X)) \mid X = x]$$

Therefore,

$$E[l(\theta, \delta(X)) \mid X = x] \ge E[l(\theta, \delta^*(X)) \mid X = x]$$

and the result follows from (1.2).

Consider the oil-drilling example (Example 1.3.5) again.

Example 2: We are trying to decide whether to drill a

location for oil. There are two possible states of nature,

$\theta_1$ = location contains oil and $\theta_2$ = location doesn't contain oil. We are considering three actions, $a_1$ = drill for oil, $a_2$ = sell the location or $a_3$ = sell partial rights to the location. The following loss function is decided on:

| | $a_1$ (Drill) | $a_2$ (Sell) | $a_3$ (Partial rights) |
|---|---|---|---|
| $\theta_1$ (Oil) | 0 | 10 | 5 |
| $\theta_2$ (No oil) | 12 | 1 | 6 |

An experiment is conducted to obtain information about $\theta$ resulting in the random variable $X$ with possible values 0, 1 and frequency function $p(x, \theta)$ given by the following table:

| Rock formation $X$ | $x = 0$ | $x = 1$ |
|---|---|---|
| $\theta_1$ (Oil) | 0.3 | 0.7 |
| $\theta_2$ (No oil) | 0.6 | 0.4 |

Application to Example 1.3.5:

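Consider the prior $\pi(\theta_1) = 0.2, \pi(\theta_2) = 0.8$. Suppose we observe $x = 0$. Then the posterior distribution of $\theta$ is

$$p(\theta \mid x = 0) = \frac{p(x = 0 \mid \theta)\pi(\theta)}{\sum_{i=1}^{2} p(x = 0 \mid \theta_i)\pi(\theta_i)},$$

which equals $p(\theta_1 \mid x = 0) = \frac{1}{9}$, $p(\theta_2 \mid x = 0) = \frac{8}{9}$.

Thus, the posterior risks of the actions $a_1, a_2, a_3$ are

$$r(a_1 \mid 0) = \frac{1}{9} l(\theta_1, a_1) + \frac{8}{9} l(\theta_2, a_1) = 10.67$$

$$r(a_2 \mid 0) = 2, \quad r(a_3 \mid 0) = 5.89$$

Therefore, $a_2$ has the smallest posterior risk and if $\delta^*$ is the Bayes rule,

$$\delta^*(0) = a_2.$$

Similarly,

$$r(a_1 \mid 1) = 8.35, \quad r(a_2 \mid 1) = 3.74, \quad r(a_3 \mid 1) = 5.70$$

and we conclude that

$$\delta^*(1) = a_2.$$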
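
The posterior risk calculation above can be reproduced in a few lines of R. This is a sketch for checking the arithmetic; the object names (`loss`, `px`, `prior`, `posterior_risk`) are assumptions made for the illustration, and the numbers are the loss table, frequency table, and prior from the example.

```r
# Loss l(theta, a): rows are states of nature, columns are actions
loss <- matrix(c(0, 10, 5,
                 12, 1, 6), nrow = 2, byrow = TRUE,
               dimnames = list(c("oil", "no_oil"), c("a1", "a2", "a3")))

# Frequency function p(x | theta): rows are states, columns are x = 0, 1
px <- matrix(c(0.3, 0.7,
               0.6, 0.4), nrow = 2, byrow = TRUE,
             dimnames = list(c("oil", "no_oil"), c("x0", "x1")))

# Prior pi(theta)
prior <- c(oil = 0.2, no_oil = 0.8)

# Posterior risk of each action given the observed column of px
posterior_risk <- function(xcol) {
  post <- px[, xcol] * prior      # p(x | theta) * pi(theta)
  post <- post / sum(post)        # normalize to get p(theta | x)
  drop(post %*% loss)             # expected loss of a1, a2, a3
}

risk0 <- posterior_risk("x0")
risk1 <- posterior_risk("x1")

# The Bayes rule picks the action with the smallest posterior risk
bayes_action0 <- names(which.min(risk0))
bayes_action1 <- names(which.min(risk1))
```

Working with the loss table as a matrix means the posterior risk of every action is a single matrix product, which extends directly to more states or actions.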
