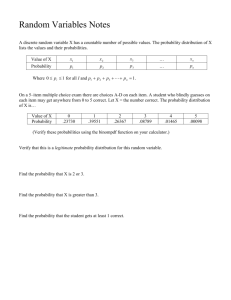

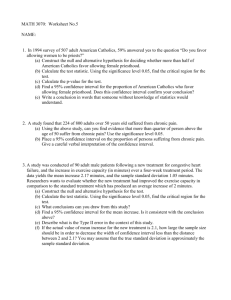

QMB 3250 - University of Florida
