CFA Level 2 Quantitative Analysis: Hypothesis Testing & Regression


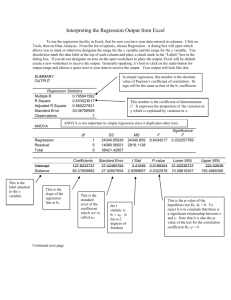

CFA Level 2 – Quantitative Analysis
Norman Cheung

Testing the Existence of a Significant Relationship between the Dependent Variable and the Independent Variable

1. Test on the Population Slope $\beta_1$

Hypotheses: $H_0: \beta_1 = 0$ (there is no relationship) versus $H_1: \beta_1 \neq 0$ (there is a relationship).

Test statistic, with $n-2$ degrees of freedom:

$$t = \frac{b_1 - \beta_1}{s_{b_1}} = \frac{b_1}{s_{b_1}} \quad \text{(under } H_0\text{)}$$

where $b_1$ is the sample slope and $s_{b_1}$ is the sample standard deviation (standard error) of the sample slope.

If the absolute value of the t-ratio is greater than the critical t-value, $H_0$ is rejected ($\beta_1$ is significantly different from zero) and evidence of a linear relationship is concluded.

2. Test on the Existence of a Significant Correlation $\rho$

Hypotheses: $H_0: \rho = 0$ (there is no relationship) versus $H_1: \rho \neq 0$ (there is a relationship).

Test statistic:

$$t = \frac{r\sqrt{n-2}}{\sqrt{1-r^2}}$$

where $r$ is the sample correlation coefficient and $n-2$ is the degrees of freedom.

If the absolute value of the t-ratio is greater than the critical t-value, $H_0$ is rejected (the correlation coefficient is significantly different from zero) and evidence of a linear relationship is concluded.

3. Test Using a Confidence Interval for $\beta_1$

Hypotheses: $H_0: \beta_1 = 0$ (there is no relationship) versus $H_1: \beta_1 \neq 0$ (there is a relationship).

Confidence interval:

$$b_1 \pm t_{n-2}\, s_{b_1}$$

where $t_{n-2}$ is the critical t-value with $n-2$ degrees of freedom. If the interval does not contain zero (both limits are above zero, or both are below zero), $H_0$ is rejected ($\beta_1$ is significantly different from zero) and evidence of a linear relationship is concluded.

P-VALUE APPROACH

The p-value approach involves calculating the value of the specified test statistic and the corresponding p-value from the sample data. The p-value is then compared with the chosen significance level $\alpha$. If the p-value is less than $\alpha$, reject $H_0$.

F-STATISTIC

The F-statistic is used to test the hypothesis that all the slope coefficients of the independent variables in a multiple regression model are simultaneously equal to zero. For a model with three independent variables, the F-statistic tests the null hypothesis $H_0: \beta_1 = \beta_2 = \beta_3 = 0$ (i.e., none of the slope coefficients is significant). The alternative is that at least one slope coefficient is not equal to zero.

If the F-statistic is greater than the critical F-value, $H_0$ is rejected, and the slope coefficients are significant as a group.

T-STATISTIC

The t-statistic is used to test the hypothesis that a single regression coefficient is equal to zero. That coefficient is either the slope of a simple linear model or any one slope of a multiple regression model. Note the difference between the two tests: the t-test examines one coefficient, while the F-test examines all slope coefficients jointly. Expressed mathematically, the t-statistic tests the null hypothesis $H_0: \beta_1 = 0$, with $n-(k+1)$ degrees of freedom ($n-2$ in a simple linear model). If the absolute value of the t-statistic is greater than the critical value, $H_0$ is rejected.

Interpretation of $R^2$ (“Goodness of Fit” or Coefficient of Determination)

$R^2$ indicates the percentage of the total variability of the dependent variable that can be explained by the regression model:

$$R^2 = \frac{SSR}{SST} = 1 - \frac{SSE}{SST}$$

INTERCEPT AND SLOPE COEFFICIENT

The intercept coefficient may be interpreted as the level of the dependent variable when the independent variable is zero. This interpretation assumes that the prediction occurs within the range of values of the independent variable used in developing the regression equation. The slope coefficient represents the change in the dependent variable for a one-unit change in the independent variable.

AUTOCORRELATION

Autocorrelation (serial correlation) occurs when the residuals of successive observations are correlated. It tends to occur when data are collected over a period of time, so a residual analysis is required to determine whether autocorrelation exists.

MULTICOLLINEARITY

Multicollinearity exists when there is a high degree of correlation among the independent variables in a regression model. Symptoms of high multicollinearity include:

1. A large $R^2$ but statistically insignificant regression coefficients.
2. Regression coefficients that change greatly in value when independent variables are dropped from or added to the equation.
3. The magnitude of one or more coefficients is unexpectedly large or small relative to expectations.
4. A coefficient appears to have a “wrong” sign (the sign is counterintuitive).

The Danger of Multicollinearity: the coefficients of the regression equation may be unstable. When multicollinearity exists in a model, it is difficult to determine which independent variables contribute significantly to the explanatory power of the model. The model is therefore less reliable for explaining which variables contribute to the forecast of the dependent variable.

STATISTICALLY SIGNIFICANT AND ECONOMICALLY SIGNIFICANT

Statistically significant: there is a relationship, say a linear relationship, between the dependent and independent variables.

Economically significant: the impact of the independent variable on the dependent variable is large in quantity. The regression coefficient (or the estimated change in the dependent variable) must be large enough to matter, say 100 or 200 rather than 0.0001 or 0.0002.

ANOVA (ANALYSIS OF VARIANCE) TABLE

In the table, $n$ is the number of observations and $k$ is the number of independent variables.

| Source of Variation | Degrees of Freedom (df) | Sum of Squares | Mean Square | F-Statistic |
|---|---|---|---|---|
| Regression (R) | $k$ | SSR | $MSR = SSR / k$ | $F = MSR / MSE$ |
| Sampling Error (E) | $n - (k+1)$ | SSE | $MSE = SSE / [n - (k+1)]$ | |
| Total (T) | $n - 1$ | SST | | |

Standard Error of the Regression Model (standard error of estimate):

$$SEE = \sqrt{MSE} = \sqrt{\frac{SSE}{n-(k+1)}}$$

More CFA info & materials can be retrieved from the following:
For visitors from Hong Kong: http://normancafe.uhome.net/StudyRoom.htm
For visitors outside Hong Kong: http://www.angelfire.com/nc3/normancafe/StudyRoom.htm
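The Test on Population Slope can be sketched in Python for a simple regression with one independent variable. This is a minimal illustration, not the author's method. The function names `fit_simple_regression` and `slope_t_test` are hypothetical and the data are made up. The critical value 3.182 is the standard two-tailed 5% t-table value for 3 degrees of freedom; it is supplied by hand rather than computed.

```python
import math


def fit_simple_regression(x, y):
    """Least-squares fit of y = b0 + b1 * x; returns (b0, b1, s_b1)."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with at least 3 observations")
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must not be constant")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    # SSE and the standard error of the regression use n - 2 degrees of freedom.
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    see = math.sqrt(sse / (n - 2))
    # Standard error (sample standard deviation) of the slope estimate, s_b1.
    s_b1 = see / math.sqrt(sxx)
    return b0, b1, s_b1


def slope_t_test(x, y, t_critical):
    """Two-tailed test of H0: beta1 = 0 against H1: beta1 != 0."""
    _, b1, s_b1 = fit_simple_regression(x, y)
    t = b1 / s_b1  # (b1 - 0) / s_b1 under H0
    return t, abs(t) > t_critical


if __name__ == "__main__":
    x = [1, 2, 3, 4, 5]  # hypothetical data
    y = [2, 4, 5, 4, 5]
    t, reject = slope_t_test(x, y, t_critical=3.182)  # 5% two-tailed, df = 3
    print(f"t = {t:.4f}, reject H0: {reject}")
```

With this data $b_1 = 0.6$, $s_{b_1} \approx 0.283$ and $t \approx 2.12$. That is below 3.182, so $H_0: \beta_1 = 0$ is not rejected at the 5% level.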
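For the Test on the Existence of a Significant Correlation, the statistic $t = r\sqrt{n-2}/\sqrt{1-r^2}$ can be computed directly from the sample correlation $r$. In a simple regression this t-value equals the slope t-ratio $b_1/s_{b_1}$, so the two tests reach the same conclusion. The function name `correlation_t_test` and the data are hypothetical, and the critical value is again taken from a t-table.

```python
import math


def correlation_t_test(x, y, t_critical):
    """Two-tailed test of H0: rho = 0 using the sample correlation r (df = n - 2)."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with at least 3 observations")
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)
    r = sxy / math.sqrt(sxx * syy)  # sample correlation coefficient
    if abs(r) >= 1:
        raise ValueError("perfect correlation: t is undefined")
    t = r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)
    return r, t, abs(t) > t_critical


if __name__ == "__main__":
    r, t, reject = correlation_t_test([1, 2, 3, 4, 5], [2, 4, 5, 4, 5], t_critical=3.182)
    print(f"r = {r:.4f}, t = {t:.4f}, reject H0: {reject}")
```

For this hypothetical data, $r \approx 0.775$ and $t \approx 2.12$, the same value as the slope t-ratio for these numbers.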
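The confidence-interval version of the slope test needs only $b_1$, $s_{b_1}$ and the critical $t_{n-2}$ value. This is a minimal sketch, assuming those inputs are already available (for instance from a regression printout). The function name `slope_confidence_interval` is hypothetical.

```python
def slope_confidence_interval(b1, s_b1, t_critical):
    """Return (lower, upper, reject) for the interval b1 +/- t_critical * s_b1.

    t_critical is the two-tailed critical t-value with n - 2 degrees of freedom.
    """
    half_width = t_critical * s_b1
    lower, upper = b1 - half_width, b1 + half_width
    # H0: beta1 = 0 is rejected only when zero lies outside the interval.
    reject = lower > 0 or upper < 0
    return lower, upper, reject


if __name__ == "__main__":
    print(slope_confidence_interval(0.6, 0.08 ** 0.5, 3.182))  # hypothetical inputs
    print(slope_confidence_interval(0.6, 0.1, 3.182))
```

The first call uses $b_1 = 0.6$, $s_{b_1} = \sqrt{0.08}$ and $t_3 = 3.182$. Its interval runs from roughly $-0.30$ to $1.50$, which contains zero, so $H_0$ is not rejected. The second call keeps $b_1$ but uses a hypothetical $s_{b_1} = 0.1$, giving about $0.28$ to $0.92$, which excludes zero, so $H_0$ is rejected.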
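The P-VALUE APPROACH needs the probability of a t-value at least as extreme as the one observed. Libraries such as SciPy provide this directly. To keep the sketch dependency-free, the version below instead integrates the Student's t density numerically with Simpson's rule. This is a swapped-in technique, adequate for illustration but not a production-grade distribution function. The function names are hypothetical.

```python
import math


def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p_value(t, df, steps=10_000):
    """P(|T| >= |t|), computed as 1 - 2 * (area under the density from 0 to |t|)."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    if steps % 2:  # Simpson's rule needs an even number of intervals
        steps += 1
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2  # Simpson weights 1, 4, 2, 4, ..., 4, 1
        total += weight * t_pdf(i * h, df)
    area = total * h / 3
    return max(0.0, 1.0 - 2.0 * area)


def reject_by_p_value(t, df, alpha=0.05):
    """Reject H0 when the two-tailed p-value is below the significance level alpha."""
    p = two_tailed_p_value(t, df)
    return p, p < alpha


if __name__ == "__main__":
    p, reject = reject_by_p_value(3 / math.sqrt(2), df=3)  # hypothetical t-ratio
    print(f"p = {p:.3f}, reject H0: {reject}")
```

Take a hypothetical slope t-ratio of about 2.12 with 3 degrees of freedom. The two-tailed p-value is about 0.124, which is greater than $\alpha = 0.05$, so $H_0$ is not rejected. This agrees with comparing the t-ratio to the 3.182 critical value.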
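The ANOVA table entries follow mechanically from SSR, SSE, $n$ and $k$. The sketch below computes the mean squares, the F-statistic, $R^2$ and the standard error of the regression model. The function name and the dictionary keys are hypothetical choices for illustration. The F-statistic still has to be compared with a critical $F(k, n-k-1)$ value from a table.

```python
import math


def anova_table(ssr, sse, n, k):
    """Regression ANOVA quantities for n observations and k independent variables."""
    df_regression = k
    df_error = n - (k + 1)
    if df_error <= 0:
        raise ValueError("need more observations than estimated coefficients")
    msr = ssr / df_regression  # mean square regression
    mse = sse / df_error       # mean square error
    sst = ssr + sse            # total sum of squares, with n - 1 df
    return {
        "df": (df_regression, df_error, n - 1),
        "MSR": msr,
        "MSE": mse,
        "F": msr / mse,        # compare with the critical F(k, n - k - 1)
        "R2": ssr / sst,       # share of total variation explained by the model
        "SEE": math.sqrt(mse), # standard error of the regression model
    }


if __name__ == "__main__":
    # Hypothetical sums of squares from a five-observation, one-variable regression.
    print(anova_table(ssr=3.6, sse=2.4, n=5, k=1))
```

These figures give $MSE = 0.8$, $F = 4.5$, $R^2 = 0.6$ and $SEE \approx 0.894$. In a one-variable regression, F equals the square of the slope t-ratio.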
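To make the INTERCEPT AND SLOPE COEFFICIENT interpretation concrete, the sketch below evaluates a fitted line. It also flags predictions that fall outside the range of the independent variable used to fit the line. The function name `predict`, the coefficients $b_0 = 2.2$ and $b_1 = 0.6$, and the sample range 1 to 5 are all hypothetical.

```python
def predict(b0, b1, x_sample, x_new):
    """Return (y_hat, in_range) for the fitted line y_hat = b0 + b1 * x_new.

    in_range is False when x_new lies outside the x values used to fit the line,
    i.e. the prediction is an extrapolation.
    """
    y_hat = b0 + b1 * x_new
    in_range = min(x_sample) <= x_new <= max(x_sample)
    return y_hat, in_range


if __name__ == "__main__":
    xs = [1, 2, 3, 4, 5]  # hypothetical x values used to fit the line
    for x_new in (0, 4, 5):
        print(x_new, predict(2.2, 0.6, xs, x_new))
```

Predicting at $x = 0$ returns the intercept 2.2 but is flagged as out of range. Reading the intercept as the level of the dependent variable at zero would therefore be an extrapolation for this sample. Moving from $x = 4$ to $x = 5$ changes the prediction by exactly $b_1 = 0.6$.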
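The residual analysis mentioned under AUTOCORRELATION can start with the lag-1 autocorrelation of the residuals, taken in time order. This is one simple diagnostic chosen for illustration, not a formal test from these notes. Values well away from zero suggest that successive residuals are related. The function name and residual series are hypothetical.

```python
def lag1_autocorrelation(residuals):
    """Sample correlation between each residual e_t and the preceding residual e_(t-1)."""
    n = len(residuals)
    if n < 3:
        raise ValueError("need at least three residuals")
    mean = sum(residuals) / n
    dev = [e - mean for e in residuals]
    numerator = sum(dev[t] * dev[t - 1] for t in range(1, n))
    denominator = sum(d * d for d in dev)
    return numerator / denominator


if __name__ == "__main__":
    print(lag1_autocorrelation([1, -1, 1, -1, 1, -1]))  # alternating signs
    print(lag1_autocorrelation([1, 1, 1, -1, -1, -1]))  # runs of the same sign
```

The alternating series gives about -0.83, which indicates negative autocorrelation. The series that stays positive and then stays negative gives 0.5, which indicates positive autocorrelation.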