We are only going to deal with the linear regression model

advertisement

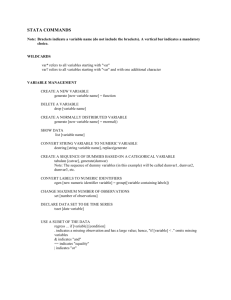

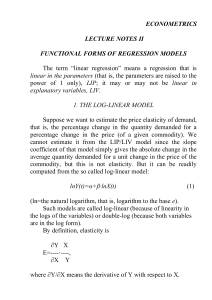

Yale University Social Science Statistical Laboratory Introduction to Regression and basic data Analysis A StatLab Workshop. We are only going to deal with the linear regression model The simple (or bivariate) LRM model is designed to study the relationship between a pair of variables that appear in a data set. The multiple LRM is designed to study the relationship between one variable and several of other variables. In both cases, the sample is considered a random sample from some population. The two variables, X and Y, are two measured outcomes for each observation in the data set. For example, lets say that we had data on the prices of homes on sale and the actual number of sales of homes: Price(thousands of $) Sales of new homes X y 160 126 180 103 200 82 220 75 240 82 260 40 280 20 And we want to know the relationship between X and Y. Well, what does our data look like? y 126 20 160 280 x 1 Yale University Social Science Statistical Laboratory We need to specify the population regression function, the model we specify to study the relationship between X and Y. This is written in any number of ways, but we will specify it as: where Y is an observed random variable (also called the endogenous variable, the lefthand side variable). X is an observed non-random or conditioning variable (also called the exogenous or right-hand side variable). is an unknown population parameter, known as the constant or intercept term. is an unknown population parameter, known as the coefficient or slope parameter. u is is an unobserved random variable, known as the disturbance or error term. Once we have specified our model, we can accomplish 2 things: Estimation: How do we get a "good" estimates of and ? What assumptions about the PRF make a given estimator a good one? Inference: What can we infer about and how do we form confidence intervals for them. from sample information? That is, and and/or test hypotheses about The answer to these questions depends upon the assumptions that the linear regression model makes about the variables. The Ordinary Least Squres (OLS) regression procedure will compute the values of the parameters and (the intercept and slope) that best fit the observations. We want to fit a straight line through the data, from our example above, that would look like this: 2 Yale University Social Science Statistical Laboratory y Fitted values y 126 20 160 280 x Obviously, no straight line can exactly run through all of the points. The vertical distance between each observation and the line that fits “best”—the regression line—is called the error. The OLS procedure calculates our parameter values by minimizing the sum of the squared errors for all observations. Why OLS? It is considered the most reliable method of estimating linear relationships between economic variables. It can be used in a variety of environments, but can only be used when it meets the following assumptions: Insert Assumptions: 3 Yale University Social Science Statistical Laboratory Regression (Best Fit) Line So, now that we know the assumptions of the OLS model, how do we estimate and ? The Ordinary Least Squares estimates of and of and and are defined as the particular values that minimize the sum of squares for the sample data. The best fit line associated with the n points (x1, y1), (x2, y2), . . . , (xn, yn) has the form y = mx + b where slope m n( xy) ( x)( y ) n ( x 2 ) ( x ) 2 int ercept b y m( x) n So we can take our data from above and substitute in to find our parameters: Sum Price(thousands of $) Sales of new homes x y 160 126 180 103 200 82 220 75 240 82 260 40 280 20 1540 528 slope m n( xy) ( x)( y ) int ercept b n ( x ) ( x ) 2 2 xy 20,160 18,540 16,400 16,500 19,680 10,400 5,600 107280 x2 25,600 32,400 40,000 48,400 57,600 67,600 78,400 350000 7(107280) (1540) * (528) 62160 0.79286 78400 7(350000) (1540) 2 y m( x) 528 0.79286(1540) 249.8571 n 7 4 Yale University Social Science Statistical Laboratory Thus our least squares line is y = 0.79286x + 249.857 Now let’s do this in a much easier manner in SPSS: Interpreting data: Let’s take a look at the regression diagnostics from our example above: I. Interpreting log models The log-log model: Yi 1 X iB 2 e ui Is this linear in the parameters? How do we estimate this? rewrite : ln Yi B2 ln X i u i Where a=lnB1 Slope coefficient B2 measures the elasticity of Y with respect to X, that is, the percentage change in Y for a given percentage change in X The model assumes that the elasticity coefficent between Y and X, B2 remains constant throughout.—the change in lnY per unit change in lnX (the elasticity B2) remains the same no matter at which lnX we measure the elasticity. demand 10 1 1 9 price 5 Yale University Social Science Statistical Laboratory lndemand 2.30259 0 0 2.19722 lnprice The log-linear model: ln Yt 1 2 t ut B2 measures the constant proportional change or relative change in Y for a given absolute change in the value of the regressor: B2=relative change in regressand / absolute change in regressor If we multiply the relative change in Y by 100, will then get the percentage change in Y for an absolute change in X, the regressor. gnp 420819 191857 17889 28798 var4 6 Yale University Social Science Statistical Laboratory lngnp 12.95 12.1645 17889 28798 var4 The lin-log model: Now interested in finding the absolute change in Y for a percent change in X Yi 1 2 ln X i ui =Change in Y / relative change in X The absolute change in Y is equal to B2 times the relative change in X—if the latter is multiplied by 100, then it gives the absolute change in Y for a percentage change in X 7