Statistics 2014, Fall 2001


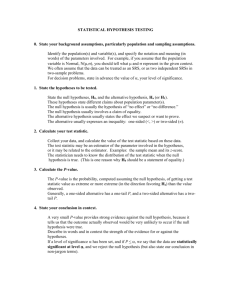

Chapter 4: Decision Making for a Single Sample

Definition: Inferential statistics is the branch of statistics concerned with inferring the characteristics of populations (i.e., parameter values) based on the information contained in sample data sets.

There are two branches of statistical inference: 1) estimation of parameters and 2) testing hypotheses about the values of parameters. We will consider estimation first.

Defn: A point estimate of a parameter $\theta$ is a specific numerical value, $\hat{\theta}$, of a statistic, $\hat{\Theta}$, based on the data obtained from a sample. A particular statistic, such as $\hat{\Theta}$, used to provide a point estimate of a parameter is called an estimator.

Note: $\hat{\Theta}$ is a function of $X_1, X_2, \ldots, X_n$, the elements of a random sample, and is thus a random variable, with its own sampling distribution.

Note: Nothing is said in the definition about the “goodness” of the estimate. Any statistic could be used to estimate a parameter. For a particular parameter $\theta$, some statistics provide better estimates than others. We need to examine the statistical properties of each estimator to decide which one gives the best estimate for a parameter.

Defn: The point estimator $\hat{\Theta}$ is said to be an unbiased estimator of the parameter $\theta$ if $E(\hat{\Theta}) = \theta$. If the estimator is not unbiased, then the difference $B = E(\hat{\Theta}) - \theta$ is called the bias of the estimator $\hat{\Theta}$.

Example: Assume that we have a random sample (r.s.) $X_1, X_2, \ldots, X_n$ from a distribution with unknown mean $\mu$. We want to find an unbiased estimator of $\mu$. From the linearity property of expectation, we have

$$E(\bar{X}) = E\left(\frac{1}{n}\sum_{i=1}^{n} X_i\right) = \frac{1}{n}\sum_{i=1}^{n} E(X_i) = \frac{1}{n}\sum_{i=1}^{n} \mu = \mu.$$

Hence for any distribution, the sample mean is an unbiased estimator of the distribution mean.

Example: Same situation, but now we want to find an unbiased estimator of the variance, $\sigma^2$, of the distribution.
Also from the linearity property of expectation, we have

$$
\begin{aligned}
E(S^2) &= E\left[\frac{1}{n-1}\sum_{i=1}^{n}\left(X_i - \bar{X}\right)^2\right]
        = \frac{1}{n-1}\,E\left[\sum_{i=1}^{n}\left(X_i^2 + \bar{X}^2 - 2\bar{X}X_i\right)\right] \\
       &= \frac{1}{n-1}\,E\left[\sum_{i=1}^{n} X_i^2 - n\bar{X}^2\right]
        = \frac{1}{n-1}\left[\sum_{i=1}^{n} E\left(X_i^2\right) - nE\left(\bar{X}^2\right)\right].
\end{aligned}
$$

But $E(X_i^2) = \mu^2 + \sigma^2$, and $E(\bar{X}^2) = \mu^2 + \sigma^2/n$. Hence

$$E(S^2) = \frac{1}{n-1}\left[n\left(\mu^2 + \sigma^2\right) - n\left(\mu^2 + \frac{\sigma^2}{n}\right)\right] = \sigma^2.$$

Hence for any distribution, the sample variance is an unbiased estimator of the distribution variance.

Sometimes there are several unbiased estimators of a given parameter. For example, each member of the sample is also an unbiased estimator of the distribution mean. We want to decide which of them provides the best estimate, based on the characteristics of the sampling distributions of the estimators.

Suppose that we have selected a r.s. $X_1, X_2, \ldots, X_n$ from a distribution that is characterized by an unknown parameter $\theta$. Suppose that we have two statistics $\hat{\Theta}_1$ and $\hat{\Theta}_2$, both of which are unbiased estimators of $\theta$. Which estimator should we use? Since we want our particular estimate to be close to the true value of $\theta$, we want to use an estimator whose sampling distribution has small variance.

Defn: In the class of all unbiased estimators of a parameter $\theta$, that estimator whose sampling distribution has the smallest variance is called the minimum variance unbiased estimator (MVUE) for $\theta$.

Defn: The mean square error of an estimator $\hat{\Theta}$ of a parameter $\theta$ is $MSE(\hat{\Theta}) = E\left[(\hat{\Theta} - \theta)^2\right]$.

Note: We can also write the MSE as $MSE(\hat{\Theta}) = V(\hat{\Theta}) + B^2$. If we are talking about the class of unbiased estimators of $\theta$, then $B = 0$, and $MSE(\hat{\Theta}) = V(\hat{\Theta})$.

Defn: If we have two possible estimators, $\hat{\Theta}_1$ and $\hat{\Theta}_2$, of $\theta$, the relative efficiency of $\hat{\Theta}_2$ to $\hat{\Theta}_1$ is given by

$$\frac{MSE(\hat{\Theta}_1)}{MSE(\hat{\Theta}_2)}.$$

Example: We have a r.s. $X_1, X_2, \ldots, X_n$ from a distribution with unknown mean $\mu$ and standard deviation $\sigma$. We could estimate the distribution mean using the sample mean, or using a single member, say $X_1$, of the sample. Which statistic has the better relative efficiency? We have $MSE(\bar{X}) = V(\bar{X}) + B^2 = \sigma^2/n$, since the sample mean is unbiased. We also have $MSE(X_1) = V(X_1) + B^2 = \sigma^2$, since $X_1$ is also unbiased.
Hence the relative efficiency of $\bar{X}$ to $X_1$ is $n$, so for $n \ge 2$ the sample mean is a more efficient estimator of $\mu$ than a single element of the sample.

Defn: The standard error of an estimator $\hat{\Theta}$ of a parameter $\theta$ is just the square root of the variance of the sampling distribution of $\hat{\Theta}$: $S.E.(\hat{\Theta}) = \sqrt{V(\hat{\Theta})}$.

Examples of parameters, together with best estimators and estimates, are given in the table below.

| Unknown parameter | Estimator | Point estimate |
|---|---|---|
| $\mu$ | $\bar{X}$ | $\bar{x}$ |
| $\sigma^2$ | $S^2 = \frac{1}{n-1}\sum_{i=1}^{n}\left(X_i - \bar{X}\right)^2$ | $s^2$ |
| $p$ | $\hat{P} = X/n$ | $\hat{p}$ |
| $\mu_1 - \mu_2$ | $\bar{X}_1 - \bar{X}_2$ | $\bar{x}_1 - \bar{x}_2$ |
| $p_1 - p_2$ | $\hat{P}_1 - \hat{P}_2$ | $\hat{p}_1 - \hat{p}_2$ |

Examples: p. 135, Exercises 4-2, 4-10

Hypothesis Testing

Often, instead of estimating the value of a parameter based on sample data, we simply want to decide whether we believe a specific assertion about the value of the parameter.

Definition: A hypothesis is a statement about the value of a population parameter.

Examples:
1) Nothing outlasts the Energizer.
2) More doctors recommend Tylenol for the relief of headache pain than any other pain reliever.

Hypotheses are tested in pairs, to decide which of the two statements is more believable.

The Null Hypothesis, $H_0$

This hypothesis usually represents the state of no change or no difference, from the researcher’s point of view. Often the null hypothesis is a statement of current belief about the value of the parameter; the researcher doubts the null hypothesis and wants to disprove it. Symbolically, this hypothesis is never a strict inequality.

The Alternative Hypothesis, $H_a$

This statement is what the researcher is attempting to prove. It usually is the negation of the null hypothesis. Symbolically, this hypothesis is always a strict inequality. This hypothesis can take one of three forms for a parameter $\theta$ and a given number $\theta_0$:
1) $H_a: \theta > \theta_0$
2) $H_a: \theta < \theta_0$
3) $H_a: \theta \ne \theta_0$

Example: Let $p_T$ be the proportion of all doctors who recommend Tylenol, and let $p_A$ be the proportion of all doctors who recommend alternatives to Tylenol. We want to test the following two hypotheses against each other: $H_0: p_T \le p_A$ vs.
$H_a: p_T > p_A$

Whenever incomplete information, such as that from a sample, is used to make an inference about the value of a population parameter, there is the risk of making a mistake. In a hypothesis testing situation, there are two possible mistakes that could be made.

Type I Error

This type of error occurs when our test leads us to reject the null hypothesis when, in fact, the null hypothesis is true. The probability of making a Type I error is denoted by the Greek letter $\alpha$, and is called the significance level of the test. In scientific research, a Type I error is usually considered to be more serious. This error is made when the researcher concludes that she has proved what she wanted to prove, but this conclusion is mistaken.

Type II Error

This type of error occurs when our test leads us to fail to reject the null hypothesis when, in fact, the null hypothesis is false. The probability of making a Type II error is denoted by the Greek letter $\beta$. This error is made when the researcher concludes that the data do not give sufficient evidence to support the researcher’s original conjecture, but in fact the conjecture is true. Later research may then provide sufficient evidence to validate the researcher’s conjecture.

Possible Results of a Hypothesis Test

| Decision | $H_0$ true | $H_a$ true |
|---|---|---|
| Reject $H_0$ | Type I error | Correct decision |
| Fail to reject $H_0$ | Correct decision | Type II error |

Before conducting a hypothesis test, the researcher decides on an acceptable level of risk for committing a Type I error (i.e., chooses a value for $\alpha$). Most commonly, $\alpha = 0.05$. If a Type I error is deemed to have more serious consequences, then the researcher may choose a smaller value for $\alpha$, such as 0.01 or 0.001. The researcher also chooses the amount of evidence to collect (sample size or sizes).

Test Statistic

The researcher summarizes the information contained in the simple random sample(s) in the form of a test statistic, a random variable whose value is calculated from the sample data.
The value of this statistic will tell the researcher whether to reject $H_0$ or to fail to reject $H_0$. The test statistic must be chosen so that its probability distribution under the null hypothesis is known.

Rejection Region (or Critical Region)

The rejection region is that range of possible values of the test statistic such that, if the actual value falls in this region, the researcher will reject $H_0$ and conclude that $H_a$ is true. The boundary point(s) of this region is (are) called the critical value(s) of the test.

The form of the rejection region depends on the form of the alternative hypothesis:
1) If the alternative hypothesis has the form $H_a: \theta > \theta_0$, then the rejection region is a right-hand tail of the distribution of the test statistic, with area $\alpha$.
2) If the alternative hypothesis has the form $H_a: \theta < \theta_0$, then the rejection region is a left-hand tail of the distribution of the test statistic, with area $\alpha$.
3) If the alternative hypothesis has the form $H_a: \theta \ne \theta_0$, then the rejection region is the union of a right-hand tail and a left-hand tail of the distribution of the test statistic, each having area $\alpha/2$.

Note: You may be wondering why we don’t simply always choose a very small value for the significance level of the test, so that we will have a very slim chance of making a Type I error. The reason is that, for a given level of evidence (sample size(s)), if we make the probability of a Type I error smaller, we will automatically increase the probability of making a Type II error. Our goal is to make both probabilities as small as possible.

Usually, the consequences of making a Type I error are more serious than the consequences of making a Type II error. Hence, we want to control $\alpha$. It is important, however, to make both $\alpha$ and $\beta$ as small as possible. We do this by choosing an appropriate sample size(s). For a given chosen value of $\alpha$, if we make our sample size(s) larger, we will decrease the probability of making a Type II error.
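The link between the form of the alternative hypothesis, the critical value(s), and the two error probabilities can be checked numerically. The sketch below assumes a z-test for a mean with known population standard deviation; that choice is an assumption made here for illustration, not a test specified in these notes. The function names `critical_values` and `type_ii_error` and all numerical inputs are hypothetical. Only Python's standard library (`statistics.NormalDist`) is used.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution (assumed test-statistic law under H0)


def critical_values(alpha, alternative):
    """Return the critical value(s) of a z-test as a tuple.

    alternative is "greater" (Ha: theta > theta0), "less" (Ha: theta < theta0),
    or "two-sided" (Ha: theta != theta0).
    """
    if alternative == "greater":
        return (Z.inv_cdf(1 - alpha),)   # right-hand tail with area alpha
    if alternative == "less":
        return (Z.inv_cdf(alpha),)       # left-hand tail with area alpha
    if alternative == "two-sided":
        z = Z.inv_cdf(1 - alpha / 2)     # each tail has area alpha / 2
        return (-z, z)
    raise ValueError("alternative must be 'greater', 'less', or 'two-sided'")


def type_ii_error(alpha, n, d):
    """Probability of a Type II error for the right-tailed z-test.

    d > 0 is the amount by which the true mean exceeds the hypothesized mean,
    measured in population standard deviations (hypothetical input).
    """
    z_crit = Z.inv_cdf(1 - alpha)
    # Under Ha the test statistic is normal with mean d * sqrt(n) and sd 1,
    # so beta is the chance that it still falls below the critical value.
    return Z.cdf(z_crit - d * n ** 0.5)


if __name__ == "__main__":
    for alt in ("greater", "less", "two-sided"):
        print(alt, [round(c, 3) for c in critical_values(0.05, alt)])
    # For fixed n, a smaller alpha gives a larger beta;
    # for fixed alpha, a larger n gives a smaller beta.
    for alpha in (0.05, 0.01, 0.001):
        for n in (10, 40):
            print(alpha, n, round(type_ii_error(alpha, n, d=0.5), 3))
```

Running the script prints the critical values for each form of the alternative and a small grid of Type II error probabilities, which makes the trade-off described in the note visible for these assumed inputs.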
Steps in Statistical Hypothesis Testing

The following steps must appear in each statistical hypothesis test. The first four steps are the set-up of the test. These steps are completed before the researcher chooses a sample(s) and collects data.

Step 1: State the null hypothesis, $H_0$, and the alternative hypothesis, $H_a$. The alternative hypothesis represents what the researcher is trying to prove. The null hypothesis represents the negation of what the researcher is trying to prove. (In a criminal trial in the American justice system, the null hypothesis is that the defendant is innocent; the alternative is that the defendant is guilty. Either the jury rejects the null hypothesis if they find that the prosecution has presented convincing evidence, or the jury fails to reject the null hypothesis if they find that the prosecution has not presented convincing evidence.)

Step 2: State the size(s) of the sample(s). This represents the amount of evidence that is being used to make a decision. State the significance level, $\alpha$, for the test. The significance level is the probability of making a Type I error. A Type I error is a decision in favor of the alternative hypothesis when, in fact, the null hypothesis is true. A Type II error is a decision to fail to reject the null hypothesis when, in fact, the null hypothesis is false.

Step 3: State the test statistic that will be used to conduct the hypothesis test. The following statement should appear in this step: “The test statistic is _________ , which under $H_0$ has a _____________ probability distribution (with ____ degrees of freedom).”

Step 4: Find the critical value for the test. This value represents the cutoff point for the test statistic. If the value of the test statistic computed from the sample data is beyond the critical value, the decision will be made to reject the null hypothesis in favor of the alternative hypothesis.

Step 5: Calculate the value of the test statistic, using the sample data.
(We may also compute a p-value, and make our decision based on a comparison of the p-value with our chosen level of significance.)

Step 6: Decide, based on a comparison of the calculated value of the test statistic and the critical value of the test, whether to reject the null hypothesis in favor of the alternative. If the decision is to reject $H_0$, the statement of the conclusion should read as follows: “We reject $H_0$ at the (value of $\alpha$) level of significance. There is sufficient evidence to conclude that (statement of the alternative hypothesis).” If the decision is to fail to reject $H_0$, the statement of the conclusion should read as follows: “We fail to reject $H_0$ at the (value of $\alpha$) level of significance. There is not sufficient evidence to conclude that (statement of the alternative hypothesis).”

Power and Sample Sizes

We want to minimize the probability of making an error in our conclusion. The only way to reduce both $\alpha$ and $\beta$ simultaneously is to increase the sample size(s). We want to choose a sample size that will optimize the power of the test.

Defn: The power, $1 - \beta$, of a hypothesis test is the probability of rejecting the null hypothesis when in fact the null hypothesis is false. I.e., the power of the test is the probability of correctly rejecting a false null hypothesis.

Power is a measure of the sensitivity of a test. By sensitivity we mean the ability to detect effects, or differences, when they are actually present. If a nonzero difference $d$ between the true parameter value and the hypothesized value $\theta_0$ exists, then

$$\text{Power}(d) = 1 - P(\text{Type II error} \mid d).$$

We decide what size difference $d$ we wish to be able to detect, in units of the population standard deviation, and we decide on a value of $\text{Power}(d)$; this number is between 0 and 1, preferably greater than 0.5. We also decide on the Type I error rate, $\alpha$. Knowing these three quantities, we can find the necessary sample size from a chart of Operating Characteristic Curves for the test.
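As a numerical counterpart to reading a chart, the following sketch computes the power and the required sample size directly from the normal distribution. It assumes a right-tailed z-test for a mean with known population standard deviation; this is an assumption made for illustration, since the notes refer only to Operating Characteristic Curves and do not fix a particular test. The function names `power` and `required_sample_size` and the example inputs are hypothetical.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def power(d, n, alpha):
    """Power(d) = 1 - P(Type II error | d) for a right-tailed z-test.

    d is the difference to detect, in units of the population
    standard deviation (assumed known); n is the sample size.
    """
    z_crit = Z.inv_cdf(1 - alpha)
    # Under the alternative the test statistic has mean d * sqrt(n), sd 1.
    beta = Z.cdf(z_crit - d * math.sqrt(n))
    return 1 - beta


def required_sample_size(d, target_power, alpha):
    """Smallest n whose power to detect d is at least target_power.

    Solves z_crit - d * sqrt(n) <= -z_beta for n, where z_beta is the
    standard normal quantile of target_power.
    """
    if d <= 0:
        raise ValueError("d must be positive for a right-tailed test")
    z_alpha = Z.inv_cdf(1 - alpha)
    z_beta = Z.inv_cdf(target_power)
    return math.ceil(((z_alpha + z_beta) / d) ** 2)


if __name__ == "__main__":
    # Hypothetical planning values: detect half a standard deviation
    # with power 0.90 at significance level 0.05.
    n = required_sample_size(d=0.5, target_power=0.90, alpha=0.05)
    print("n =", n, "power =", round(power(0.5, n, 0.05), 4))
```

The three planning quantities named above (the difference $d$, the desired power, and $\alpha$) are exactly the inputs of `required_sample_size`; other tests, such as a t-test with unknown variance, would need a different distribution under the alternative.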