Notes 20 - Wharton Statistics Department


Statistics 550 Notes 20

Reading: Section 4.1-4.2

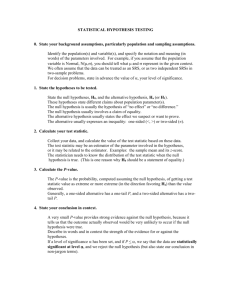

I. Hypothesis Testing Basic Setup (Chapter 4.1)

Review

Motivating Example: A graphologist claims to be able to distinguish the writing of a schizophrenic person from that of a nonschizophrenic person. The graphologist is given a set of 10 folders, each containing handwriting samples of two persons, one nonschizophrenic and the other schizophrenic. In an experiment, the graphologist made 6 correct identifications. Is there strong evidence that the graphologist identifies the writing of schizophrenics better than a person who was randomly guessing would?

Probability model: Let $p$ be the probability that the graphologist successfully identifies the writing of a randomly chosen schizophrenic vs. nonschizophrenic person. A reasonable model is that the 10 trials are iid Bernoulli with probability of success $p$.

Hypotheses:

$H_0: p = 0.5$

$H_1: p > 0.5$

The alternative/research hypothesis $H_1$ should be the hypothesis we're trying to establish.


Test Statistic: A statistic $W(X_1, \ldots, X_n)$ that is used to decide whether to accept or reject $H_0$.

In the motivating example, a natural test statistic is $W(X_1, \ldots, X_{10}) = \sum_{i=1}^{10} X_i$, the number of successful identifications of schizophrenics the graphologist makes. The observed value of this test statistic is 6.

Critical region: Region of values $C$ of the test statistic for which we reject the null hypothesis, e.g., $C = \{6, 7, 8, 9, 10\}$.

Errors in Hypothesis Testing:

| Decision | True state of nature: $H_0$ is true | True state of nature: $H_1$ is true |
| --- | --- | --- |
| Reject $H_0$ | Type I error | Correct decision |
| Accept (retain) $H_0$ | Correct decision | Type II error |

The best critical region would make the probability of a Type I error small when $H_0$ is true and the probability of a Type II error small when $H_1$ is true. But in general there is a tradeoff between these two types of errors.

Size of a test: We say that a test with test statistic $W(X_1, \ldots, X_n)$ and critical region $C$ is of size $\alpha$ if

$$\max_{\theta \in \Theta_0} P_\theta[W(X_1, \ldots, X_n) \in C] = \alpha .$$

The size of the test is the maximum probability of making a Type I error when the null hypothesis is true, where the maximum is taken over all $\theta$ that are part of the null hypothesis $\Theta_0$ (for the motivating example, the null hypothesis has only one value of $\theta$ in it). A test with size $\alpha$ is said to be a test of (significance) level $\alpha$.

Suppose we use a critical region $C = \{6, 7, 8, 9, 10\}$ with the test statistic $W(X_1, \ldots, X_{10}) = \sum_{i=1}^{10} X_i$ for the motivating example. The size of the test is $P_{p=0.5}(Y \ge 6) = 0.377$, where $Y$ has a binomial distribution with $n = 10$ and probability $p = 0.5$.
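The size calculation above can be checked numerically. The following is a minimal sketch using only the standard library; the helper names `binom_pmf` and `rejection_prob` are illustrative and do not come from the notes.

```python
from math import comb

def binom_pmf(k, n, p):
    """P(Y = k) for Y ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p)**(n - k)

def rejection_prob(region, n, p):
    """P_p(Y in region): probability that the test statistic falls in the critical region."""
    return sum(binom_pmf(k, n, p) for k in region)

# Size of the test with critical region {6, ..., 10} under H0: p = 0.5.
size = rejection_prob(range(6, 11), n=10, p=0.5)
print(round(size, 3))  # 0.377
```

Because the null hypothesis here contains a single value of $p$, the maximum in the definition of size reduces to this one probability.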

Power: The power of a test at an alternative $\theta \in \Theta_1$ is the probability of making a correct decision when $\theta$ is the true parameter (i.e., the probability of not making a Type II error when $\theta$ is the true parameter).

The power of the test with test statistic $W(X_1, \ldots, X_{10}) = \sum_{i=1}^{10} X_i$ and critical region $C = \{6, 7, 8, 9, 10\}$ at $p = 0.6$ is $P_{p=0.6}(Y \ge 6) = 0.633$ and at $p = 0.7$ is $P_{p=0.7}(Y \ge 6) = 0.850$, where $Y$ has a binomial distribution with $n = 10$ and probability $p$. The power depends on the specific parameter in the alternative hypothesis that is being considered.

Power function: $\beta_C(\theta) = P_\theta[W(X_1, \ldots, X_n) \in C]$, $\theta \in \Theta_1$.
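To see how the power varies over the alternative, the power function for the motivating example can be tabulated. This is a sketch under the binomial model stated earlier; the function name `power` and the grid of $p$ values are choices made here for illustration.

```python
from math import comb

def power(p, region=range(6, 11), n=10):
    """P_p(Y in region) for Y ~ Binomial(n, p), i.e. the probability of rejecting H0."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in region)

# Evaluate at the null value and at several alternatives p > 0.5.
for p in (0.5, 0.6, 0.7, 0.8, 0.9):
    print(p, round(power(p), 3))
```

At $p = 0.5$ the function returns the size of the test, and it increases as $p$ moves further into the alternative.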


Neyman-Pearson paradigm: Set the size of the test to be at

most some small level, typically 0.10, 0.05 or 0.01 (most

commonly 0.05) in order to protect against Type I errors.

Then among tests that have this size, choose the one that

has the “best” power function. In Chapter 4.2, we will

define more precisely what we mean by “best” power

function and derive optimal tests for certain situations.

For the test statistic $W(X_1, \ldots, X_{10}) = \sum_{i=1}^{10} X_i$, the critical region $C = \{6, 7, 8, 9, 10\}$ has a size of 0.377; this gives too high a probability of Type I error. The critical region $C = \{8, 9, 10\}$ has a size of 0.0547, which makes the probability of a Type I error reasonably small. Using $C = \{8, 9, 10\}$, we retain the null hypothesis for the actual experiment, for which $W$ was equal to 6.

P-value: For a test statistic $W(X_1, \ldots, X_n)$, consider a family of critical regions $\{C_\gamma : \gamma \in \Gamma\}$, each with a different size. For the observed value of the test statistic $W_{obs}$ from the sample, consider the subset of critical regions for which we would reject the null hypothesis, $\{C_\gamma : W_{obs} \in C_\gamma\}$. The p-value is the minimum size of the tests in this subset,

$$\text{p-value} = \min_{\{C_\gamma : W_{obs} \in C_\gamma\}} \text{Size(test with critical region } C_\gamma) .$$

The p-value is the minimum significance level for which we would still reject the null hypothesis.


The p-value is a measure of how much evidence there is against the null hypothesis.

Consider the family of critical regions $C_i = \{\sum_{j=1}^{10} X_j \ge i\}$ for the motivating example. Since the graphologist made 6 correct identifications, we reject the null hypothesis for critical regions $C_i$, $i \le 6$. The minimum size of the critical regions $C_i$, $i \le 6$, is attained at $i = 6$ and equals 0.377. The p-value is thus 0.377.
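The definition of the p-value as a minimum over critical regions can be followed literally in code. The sketch below assumes the one-sided family of regions "number of successes at least $i$" from the motivating example; the names `size` and `p_value` are illustrative.

```python
from math import comb

N = 10  # number of folders in the experiment

def size(i, p0=0.5):
    """Size of the critical region {sum of X >= i} under H0: p = p0."""
    return sum(comb(N, k) * p0**k * (1 - p0)**(N - k) for k in range(i, N + 1))

def p_value(w_obs):
    """Minimum size among the regions that contain w_obs, i.e. those with i <= w_obs."""
    return min(size(i) for i in range(0, w_obs + 1))

print(round(p_value(6), 3))  # 0.377
```

Since the size decreases as $i$ grows, the minimum is always attained at $i = W_{obs}$, which is why the p-value reduces to $P_{p=0.5}(Y \ge W_{obs})$.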

Scale of evidence

| p-value | Evidence |
| --- | --- |
| < 0.01 | very strong evidence against the null hypothesis |
| 0.01-0.05 | strong evidence against the null hypothesis |
| 0.05-0.10 | weak evidence against the null hypothesis |
| > 0.1 | little or no evidence against the null hypothesis |

Warnings:

(1) A large p-value is not strong evidence in favor of $H_0$. A large p-value can occur for two reasons: (i) $H_0$ is true or (ii) $H_0$ is false but the test has low power at the true alternative.

(2) Do not confuse the p-value with $P(H_0 \mid \text{Data})$. The p-value is not the probability that the null hypothesis is true.

II. Testing simple versus simple hypotheses: Bayes procedures

Consider testing a simple null hypothesis $H_0: \theta = \theta_0$ versus a simple alternative hypothesis $H_1: \theta = \theta_1$, i.e., under the null hypothesis $X \sim P(X \mid \theta_0) = P_0$ and under the alternative hypothesis $X \sim P(X \mid \theta_1) = P_1$.

Example 1: $X_1, \ldots, X_n$ iid $N(\mu, 1)$. $H_0: \mu = 0$, $H_1: \mu = 1$.

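Example 2: $X$ has one of the following two distributions:

| $x$ | 0 | 1 | 2 | 3 | 4 |
| --- | --- | --- | --- | --- | --- |
| $P(X = x)$ under $P_0$ | 0.1 | 0.1 | 0.1 | 0.2 | 0.5 |
| $P(X = x)$ under $P_1$ | 0.3 | 0.3 | 0.2 | 0.1 | 0.1 |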

Bayes procedures: Consider 0-1 loss, i.e., the loss is 1 if we choose the incorrect hypothesis and 0 if we choose the correct hypothesis. Let the prior probabilities be $\pi(\theta_0)$ on $\theta_0$ and $\pi(\theta_1)$ on $\theta_1$. The posterior probability for $\theta_0$ is

$$P(\theta_0 \mid X) = \frac{P(X \mid \theta_0)\,\pi(\theta_0)}{P(X \mid \theta_0)\,\pi(\theta_0) + P(X \mid \theta_1)\,\pi(\theta_1)} .$$

The posterior risk for 0-1 loss is minimized by choosing the hypothesis with higher posterior probability.


Thus, the Bayes rule is to choose $H_0$ (equivalently $\theta_0$) if $P(X \mid \theta_0)\,\pi(\theta_0) > P(X \mid \theta_1)\,\pi(\theta_1)$ and choose $H_1$ (equivalently $\theta_1$) otherwise.

For $\pi(\theta_0) = \pi(\theta_1) = \frac{1}{2}$, the Bayes rule is to choose $H_0$ if $P(X \mid \theta_0) > P(X \mid \theta_1)$ and choose $H_1$ otherwise.

Note that the Bayes risk for the prior $\pi(\theta_0) = \pi(\theta_1) = \frac{1}{2}$ of a test is 0.5*P(Type I error)+0.5*P(Type II error). Thus, the Bayes procedure for the prior $\pi(\theta_0) = \pi(\theta_1) = \frac{1}{2}$ minimizes the sum of the probability of a Type I error and the probability of a Type II error.
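The claim that the equal-prior Bayes rule minimizes the sum of the two error probabilities can be checked by brute force on the two distributions of Example 2. This is only an illustrative sketch: it enumerates the 32 nonrandomized tests on $x = 0, \ldots, 4$, and the names `error_sum`, `bayes`, and `best` are introduced here.

```python
from itertools import product

P0 = [0.1, 0.1, 0.1, 0.2, 0.5]  # P(X = x | H0), x = 0..4
P1 = [0.3, 0.3, 0.2, 0.1, 0.1]  # P(X = x | H1), x = 0..4

def error_sum(reject):
    """P(Type I error) + P(Type II error) for a test that rejects H0 when reject[x] is True."""
    type1 = sum(p for p, r in zip(P0, reject) if r)       # reject although H0 holds
    type2 = sum(p for p, r in zip(P1, reject) if not r)   # accept although H1 holds
    return type1 + type2

# Equal-prior Bayes rule: choose H0 when P0(x) > P1(x), otherwise choose H1 (reject H0).
bayes = tuple(p0 <= p1 for p0, p1 in zip(P0, P1))

# Smallest error sum over all nonrandomized tests.
best = min(error_sum(r) for r in product([False, True], repeat=5))

print(bayes, round(error_sum(bayes), 3), round(best, 3))
```

The Bayes rule rejects $H_0$ for $x \in \{0, 1, 2\}$, giving a Type I error probability of 0.3 and a Type II error probability of 0.2; no other nonrandomized test has a smaller sum.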

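Example 1 continued: Suppose $(X_1, \ldots, X_5) = (1.1064, 1.1568, -0.1602, 1.0343, -0.1079)$, $\bar{X} = 0.6059$. Then

$$P(X \mid \mu = 0) = \left(\frac{1}{\sqrt{2\pi}}\right)^5 \exp\left(-\frac{\sum_{i=1}^5 (X_i - 0)^2}{2}\right) = 0.001613$$

$$P(X \mid \mu = 1) = \left(\frac{1}{\sqrt{2\pi}}\right)^5 \exp\left(-\frac{\sum_{i=1}^5 (X_i - 1)^2}{2}\right) = 0.002739$$

We choose $H_0: \mu = 0$ if $P(X \mid \mu = 0)\,\pi(\mu = 0) > P(X \mid \mu = 1)\,\pi(\mu = 1)$, or equivalently

$$\frac{P(X \mid \mu = 0)\,\pi(\mu = 0)}{P(X \mid \mu = 1)\,\pi(\mu = 1)} > 1,$$

which for this data is $\frac{0.001613\,\pi(\mu = 0)}{0.002739\,\pi(\mu = 1)} > 1$. Writing $\pi(\mu = 1) = 1 - \pi(\mu = 0)$, we have that we choose $H_0: \mu = 0$ for priors with $\pi(\mu = 0) > 0.6294$ and $H_1: \mu = 1$ for priors with $\pi(\mu = 0) < 0.6294$.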
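The two likelihoods and the prior threshold for this data set can be reproduced with a short script. This is a sketch, not part of the original notes; `likelihood` and `threshold` are names chosen here, and the threshold is obtained by solving $\text{lik}_0 \, \pi_0 > \text{lik}_1 (1 - \pi_0)$ for $\pi_0$.

```python
from math import exp, pi, sqrt

x = [1.1064, 1.1568, -0.1602, 1.0343, -0.1079]

def likelihood(data, mu):
    """Joint density of iid N(mu, 1) observations evaluated at the data."""
    n = len(data)
    return (1 / sqrt(2 * pi)) ** n * exp(-sum((xi - mu) ** 2 for xi in data) / 2)

lik0 = likelihood(x, 0.0)
lik1 = likelihood(x, 1.0)

# H0 is chosen when lik0 * pi0 > lik1 * (1 - pi0), i.e. when pi0 > lik1 / (lik0 + lik1).
threshold = lik1 / (lik0 + lik1)

print(round(lik0, 6), round(lik1, 6), round(threshold, 3))
```

The likelihoods agree with the values 0.001613 and 0.002739 in the text, and the threshold comes out at about 0.629; the last digit can differ slightly from 0.6294 depending on whether the likelihoods are rounded before dividing.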

III. Neyman-Pearson Lemma (Section 4.2)

In the Neyman-Pearson paradigm, the hypotheses are not treated symmetrically: Fix $0 < \alpha < 1$. Among tests having level (Type I error probability) $\alpha$, find the one that has the “best” power function.

For simple vs. simple hypotheses, the best power function means the best power at $H_1: \theta = \theta_1$. Such a test is called the most powerful level $\alpha$ test.

The Neyman-Pearson lemma provides us with a most powerful level $\alpha$ test for simple vs. simple hypotheses.

Analogy: To fill up a bookshelf with books at the least cost, we should start by picking the book with the largest width per dollar and continue. Similarly, to find a most powerful level $\alpha$ test, we should start by including in the critical region those sample points that are most likely under the alternative relative to the null hypothesis and continue.

Define the likelihood ratio statistic by

$$L(X, \theta_0, \theta_1) = \frac{p(X \mid \theta_1)}{p(X \mid \theta_0)},$$

where $p(x \mid \theta)$ is the probability mass function or probability density function of the data $X$.

The statistic $L$ takes on the value $\infty$ when $p(X \mid \theta_1) > 0$, $p(X \mid \theta_0) = 0$ and by convention equals 0 when both $p(X \mid \theta_1) = 0$ and $p(X \mid \theta_0) = 0$.

We can describe a test by a test function $\phi(x)$. When $\phi(x) = 1$, we always reject $H_0$. When $\phi(x) = c$, $0 < c < 1$, we conduct a Bernoulli trial and reject with probability $c$ (thus we allow for randomized tests). When $\phi(x) = 0$, we always accept $H_0$.

We call $\phi_k$ a likelihood ratio test if

$$\phi_k(x) = \begin{cases} 1 & \text{if } L(x, \theta_0, \theta_1) > k \\ c & \text{if } L(x, \theta_0, \theta_1) = k \\ 0 & \text{if } L(x, \theta_0, \theta_1) < k \end{cases}$$

Theorem 4.2.1 (Neyman-Pearson Lemma): Consider testing $H_0: \theta = \theta_0$ ($P(X \mid \theta_0) = P_0$) vs. $H_1: \theta = \theta_1$ ($P(X \mid \theta_1) = P_1$).

(a) If $\alpha > 0$ and $\phi_k$ is a size $\alpha$ likelihood ratio test, then $\phi_k$ is a most powerful level $\alpha$ test.

(b) For each $0 \le \alpha \le 1$, there exists a most powerful size $\alpha$ likelihood ratio test.

(c) If $\phi$ is a most powerful level $\alpha$ test, then it must be a level $\alpha$ likelihood ratio test except perhaps on a set $A$ satisfying $P_0(X \in A) = P_1(X \in A) = 0$.

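Example 1: $X_1, \ldots, X_n$ iid $N(\mu, 1)$. $H_0: \mu = 0$, $H_1: \mu = 1$.

The likelihood ratio statistic is

$$L(X, \theta_0, \theta_1) = \frac{\prod_{i=1}^n \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{1}{2}(x_i - 1)^2\right)}{\prod_{i=1}^n \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{1}{2} x_i^2\right)} = \exp\left(\sum_{i=1}^n \left(x_i - \frac{1}{2}\right)\right) = \exp\left(-\frac{n}{2} + \sum_{i=1}^n x_i\right).$$

Rejecting the null hypothesis for large values of $L(X, \theta_0, \theta_1)$ is equivalent to rejecting the null hypothesis for large values of $\sum_{i=1}^n X_i$.

What should the cutoff be? The distribution of $\sum_{i=1}^n X_i$ under the null hypothesis is $N(0, n)$, so the most powerful level $\alpha$ test rejects for $\frac{\sum_{i=1}^n X_i}{\sqrt{n}} \ge \Phi^{-1}(1 - \alpha)$, where $\Phi$ is the CDF of a standard normal.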
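As an illustration of this cutoff, the sketch below applies the test to the five observations used earlier in these notes, with $\alpha = 0.05$ chosen here as an assumption. The function names `mp_test` and `power_at_mu1` are illustrative; the power formula uses the fact that $\sum X_i / \sqrt{n} \sim N(\sqrt{n}, 1)$ when $\mu = 1$.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal, mean 0 and standard deviation 1

def mp_test(data, alpha):
    """Test of mu = 0 vs mu = 1 for iid N(mu, 1) data.

    Rejects when sum(x) / sqrt(n) >= Phi^{-1}(1 - alpha).
    Returns (reject, statistic, cutoff).
    """
    n = len(data)
    statistic = sum(data) / sqrt(n)
    cutoff = STD_NORMAL.inv_cdf(1 - alpha)
    return statistic >= cutoff, statistic, cutoff

def power_at_mu1(n, alpha):
    """Power at mu = 1, where the standardized sum is N(sqrt(n), 1)."""
    cutoff = STD_NORMAL.inv_cdf(1 - alpha)
    return 1 - STD_NORMAL.cdf(cutoff - sqrt(n))

x = [1.1064, 1.1568, -0.1602, 1.0343, -0.1079]
reject, stat, cutoff = mp_test(x, alpha=0.05)
print(reject, round(stat, 3), round(cutoff, 3))  # False 1.355 1.645
```

For this sample the standardized sum falls below the cutoff, so the level 0.05 test retains $H_0$.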


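Example 2:

| $x$ | 0 | 1 | 2 | 3 | 4 |
| --- | --- | --- | --- | --- | --- |
| $P(X = x)$ under $P_0$ | 0.1 | 0.1 | 0.1 | 0.2 | 0.5 |
| $P(X = x)$ under $P_1$ | 0.3 | 0.3 | 0.2 | 0.1 | 0.1 |
| $L(x, \theta_0, \theta_1)$ | 3 | 3 | 2 | 0.5 | 0.2 |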

The most powerful level 0.2 test rejects if and only if $X = 0$ or $1$.

There are multiple most powerful level 0.1 tests, e.g., 1) reject the null hypothesis if and only if $X = 0$; 2) reject the null hypothesis if and only if $X = 1$; 3) flip a coin to decide whether to reject the null hypothesis when $X = 0$ or $X = 1$.
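The size and power of these tests can be verified directly from the two distributions. In the sketch below each test is written as a test function giving the rejection probability at each $x$; the helper name `size_and_power` and the dictionary labels are illustrative.

```python
P0 = [0.1, 0.1, 0.1, 0.2, 0.5]  # P(X = x | H0), x = 0..4
P1 = [0.3, 0.3, 0.2, 0.1, 0.1]  # P(X = x | H1), x = 0..4

def size_and_power(phi):
    """phi[x] is the probability of rejecting H0 when X = x."""
    size = sum(f * p for f, p in zip(phi, P0))   # expected rejection probability under H0
    power = sum(f * p for f, p in zip(phi, P1))  # expected rejection probability under H1
    return size, power

tests = {
    "reject iff X in {0, 1}": [1, 1, 0, 0, 0],
    "reject iff X = 0": [1, 0, 0, 0, 0],
    "reject iff X = 1": [0, 1, 0, 0, 0],
    "coin flip when X in {0, 1}": [0.5, 0.5, 0, 0, 0],
}
for name, phi in tests.items():
    size, power = size_and_power(phi)
    print(f"{name}: size={size:.2f}, power={power:.2f}")
```

The first test has size 0.2 and power 0.6; the other three all have size 0.1 and power 0.3, which is why the most powerful level 0.1 test is not unique.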

Proof of Neyman-Pearson Lemma:

(a) We prove the result here for continuous random

variables X. The proof for discrete random variables

follows by replacing integrals with sums.

Let $\phi^*$ be the test function of any other level $\alpha$ test besides $\phi_k$. Because $\phi^*$ is level $\alpha$, $E_{P_0} \phi^* \le \alpha$. We want to show that $\int \phi_k(x)\,dP_1(x) \ge \int \phi^*(x)\,dP_1(x)$.

We examine $\int (\phi_k(x) - \phi^*(x))(p_1(x) - k p_0(x))\,dx$ and show that $\int (\phi_k(x) - \phi^*(x))(p_1(x) - k p_0(x))\,dx \ge 0$. From this, we conclude that

$$\int (\phi_k(x) - \phi^*(x))\,p_1(x)\,dx \ge k \int (\phi_k(x) - \phi^*(x))\,p_0(x)\,dx .$$

The latter integral is $\ge 0$ because

$$\int \phi_k(x)\,p_0(x)\,dx = \alpha, \qquad \int \phi^*(x)\,p_0(x)\,dx \le \alpha .$$

Hence, we conclude that $\int (\phi_k(x) - \phi^*(x))\,p_1(x)\,dx \ge 0$ as desired.

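To show that $\int (\phi_k(x) - \phi^*(x))(p_1(x) - k p_0(x))\,dx \ge 0$, let

$S^+ = \{x : \phi_k(x) > \phi^*(x)\}$

$S^- = \{x : \phi_k(x) < \phi^*(x)\}$

$S^0 = \{x : \phi_k(x) = \phi^*(x)\}$

Suppose $x \in S^+$. This implies $\phi_k(x) > 0$, which implies that $p_1(x) \ge k p_0(x)$. Thus,

$$\int_{S^+} (\phi_k(x) - \phi^*(x))(p_1(x) - k p_0(x))\,dx \ge 0 .$$

Similarly, for $x \in S^-$ we have $\phi_k(x) < 1$, which implies $p_1(x) \le k p_0(x)$, so

$$\int_{S^-} (\phi_k(x) - \phi^*(x))(p_1(x) - k p_0(x))\,dx \ge 0 \quad \text{and} \quad \int_{S^0} (\phi_k(x) - \phi^*(x))(p_1(x) - k p_0(x))\,dx = 0$$

(since $\phi_k(x) - \phi^*(x) = 0$ for $x \in S^0$).

Thus, $\int (\phi_k(x) - \phi^*(x))(p_1(x) - k p_0(x))\,dx \ge 0$ and this shows that $\int (\phi_k(x) - \phi^*(x))\,p_1(x)\,dx \ge 0$ as argued above.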


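(b) Let $\alpha(c) = P_0[p_1(x) > c\,p_0(x)] = 1 - F_0(c)$, where $F_0$ is the cdf of $\frac{p_1(x)}{p_0(x)}$ under $P_0$. By the properties of CDFs, $\alpha(c)$ is nonincreasing in $c$ and right continuous.

By the right continuity of $\alpha(c)$, there exists $c_0$ such that $\alpha(c_0) \le \alpha \le \alpha(c_0-)$. So define

$$\phi(x) = \begin{cases} 1 & \text{if } \frac{p_1(x)}{p_0(x)} > c_0 \\ \frac{\alpha - \alpha(c_0)}{\alpha(c_0-) - \alpha(c_0)} & \text{if } \frac{p_1(x)}{p_0(x)} = c_0 \\ 0 & \text{if } \frac{p_1(x)}{p_0(x)} < c_0 \end{cases}$$

Then,

$$E_{P_0} \phi(x) = P_0\left(\frac{p_1(x)}{p_0(x)} > c_0\right) + \frac{\alpha - \alpha(c_0)}{\alpha(c_0-) - \alpha(c_0)} P_0\left(\frac{p_1(x)}{p_0(x)} = c_0\right) = \alpha(c_0) + \frac{\alpha - \alpha(c_0)}{\alpha(c_0-) - \alpha(c_0)} [\alpha(c_0-) - \alpha(c_0)] = \alpha .$$

So we can take $k$ to be $c_0$.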
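The construction in part (b) can be carried out mechanically for a discrete distribution. The following sketch uses the two distributions of Example 2 and exact fractions; the function name `size_alpha_lrt` is hypothetical, and the code assumes $p_0(x) > 0$ for every $x$ so that the likelihood ratio is always finite.

```python
from fractions import Fraction as F

P0 = [F(1, 10), F(1, 10), F(1, 10), F(2, 10), F(5, 10)]  # P(X = x | H0), x = 0..4
P1 = [F(3, 10), F(3, 10), F(2, 10), F(1, 10), F(1, 10)]  # P(X = x | H1), x = 0..4

def size_alpha_lrt(alpha):
    """Return (c0, gamma) for a size-alpha likelihood ratio test.

    The test rejects when L > c0 and rejects with probability gamma when L = c0.
    """
    ratios = [p1 / p0 for p0, p1 in zip(P0, P1)]
    for c0 in sorted(set(ratios), reverse=True):
        above = sum(p0 for p0, r in zip(P0, ratios) if r > c0)  # alpha(c0)
        at = sum(p0 for p0, r in zip(P0, ratios) if r == c0)    # alpha(c0-) - alpha(c0)
        if above <= alpha <= above + at:
            return c0, (alpha - above) / at
    raise ValueError("alpha must lie in [0, 1]")

c0, gamma = size_alpha_lrt(F(1, 20))
print(c0, gamma)  # 3 1/4
```

For $\alpha = 0.05$ this gives $c_0 = 3$ with randomization probability $1/4$: the test rejects with probability 0.25 when $X = 0$ or $X = 1$, so its size is $0.25 \times 0.2 = 0.05$.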

(c) Let $\phi^*$ be the test function for any most powerful level $\alpha$ test. By parts (a) and (b), a likelihood ratio test $\phi_k$ with size $\alpha$ can be found that is most powerful. Since $\phi^*$ and $\phi_k$ are both most powerful, it follows that

$$\int (\phi_k(x) - \phi^*(x))\,p_1(x)\,dx = 0 \qquad (1.1)$$

Following the proof in part (a), (1.1) implies that

$$\int (\phi_k(x) - \phi^*(x))(p_1(x) - k p_0(x))\,dx = 0,$$

which can be the case if and only if $\phi^*(x) = \phi_k(x) = 0$ when $p_1(x) < k p_0(x)$ (i.e., $L(X, \theta_0, \theta_1) < k$) and $\phi^*(x) = \phi_k(x) = 1$ when $p_1(x) > k p_0(x)$ (i.e., $L(X, \theta_0, \theta_1) > k$), except on a set $A$ satisfying $P_0(X \in A) = P_1(X \in A) = 0$.
