Quantitative Data Analysis: Inferential Statistics

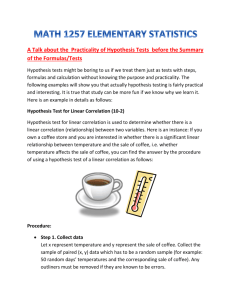

EDUC 7741 Quantitative data analysis-inferential statistics Paris

The purpose of inferential statistics is to make inferences from a sample to a larger group (the population). Descriptive measures computed from the sample (mean, standard deviation) are called statistics. The corresponding descriptive measures of the population (mu, sigma) are called parameters.

Parametric statistical methods (those tied to the normal distribution) are based on three assumptions: normality of the distribution, homogeneity of variance in the parent population, and data measured on at least an interval scale. See Creswell, page 230, fig. 8.2.

NOTE: Nonparametric methods must be used if any of these assumptions are likely to be violated. More about nonparametrics later.

Parameters are not computed; rather, they are “inferred” from the information gathered from the statistics of the sample.

Inferential statistics involves the testing of hypotheses. Hypotheses are statements about one or more population parameters. If the hypothesis is consistent with the sample data, the hypothesis is accepted (retained) as a viable value for the parameter. If the sample data are not consistent with the hypothesis, the hypothesis is rejected, meaning that the hypothesized value is not a viable value for the population parameter.

To make an inference (accept or reject a hypothesis) about a value, the probability of occurrence of a particular value of a statistic (mean, standard deviation) must be considered. That probability is based on the sampling distribution of the statistic.

Sampling distribution: the distribution of the values of a statistic (mean, standard deviation) across all possible samples of a given size.

Significance level (alpha level): a criterion used in making a decision about a hypothesis. It is established prior to testing the hypothesis.
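To make the ideas of a sampling distribution and an alpha level more concrete, the following is a minimal simulation sketch, not part of the original notes. It assumes a hypothetical normal population (mean 100, standard deviation 15), a sample size of 25, and an alpha level of .05; all of these values are illustrative assumptions. It approximates the sampling distribution of the mean by drawing many random samples rather than all possible samples, then counts how often a sample mean lands in the extreme 5% of that distribution even though the hypothesis about the population mean is true.

```python
import random
import statistics

random.seed(7741)  # fixed seed so the sketch is repeatable

# Hypothetical population and design values (assumptions for illustration only).
POP_MEAN = 100.0
POP_SD = 15.0
SAMPLE_SIZE = 25
NUM_SAMPLES = 2000
ALPHA = 0.05


def draw_sample_mean():
    """Draw one random sample from the population and return its mean."""
    sample = [random.gauss(POP_MEAN, POP_SD) for _ in range(SAMPLE_SIZE)]
    return statistics.mean(sample)


# Approximate the sampling distribution of the mean with many random samples.
sample_means = [draw_sample_mean() for _ in range(NUM_SAMPLES)]

# Standard deviation of the sampling distribution when sigma is known.
standard_error = POP_SD / SAMPLE_SIZE ** 0.5

# Two-tailed critical z value for the chosen alpha on the standard normal curve.
z_critical = statistics.NormalDist().inv_cdf(1 - ALPHA / 2)

# Count sample means that fall in the rejection region although the
# hypothesized population mean is actually true.
rejections = sum(
    1 for m in sample_means
    if abs((m - POP_MEAN) / standard_error) > z_critical
)
rejection_rate = rejections / NUM_SAMPLES

print(f"Mean of sample means: {statistics.mean(sample_means):.2f}")
print(f"SD of sample means:   {statistics.stdev(sample_means):.2f}")
print(f"Theoretical SE:       {standard_error:.2f}")
print(f"Critical z:           {z_critical:.3f}")
print(f"Rejection rate:       {rejection_rate:.3f}")
```

Under these assumptions, the sample means should cluster around the population mean with a spread close to the theoretical standard error, and the share of samples falling in the rejection region should be close to the chosen alpha level.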
Many researchers today simply report the actual significance level of their statistical test. The significance level is the probability that the statistic would appear by chance if the hypothesis is true. If the observed probability is less than this level, the statistic is unlikely to have occurred by chance and the hypothesis is rejected. Common levels are .05, .01, and occasionally .10.

In examining these probabilities, we use the standard normal distribution, which has a mean of 0 and a standard deviation of 1.0. The total area under the curve is 100%. See the example on page 242, fig. 8.5.

Hypothesis testing

Types of statistical tests

t-test of significance: used to test the hypothesis that two groups are or are not equal on a particular parameter, or that the population correlation coefficient is zero.

F-test of significance: used to test the hypothesis that two or more population means are equal. Most frequently used with more than two groups.

ANOVAs

One-way: one independent variable with multiple levels (for example, four experimental treatments).

Two-way: two independent variables with multiple levels. Tests for the interaction as well as for the treatments.

Factorial ANOVA: more than two variables.

ANCOVA (analysis of covariance): used when a covariate is used to statistically adjust the group means on the dependent variable.

CORRELATIONAL DESIGNS

Correlation: a measure of the relationship between two variables. It measures how the two variables “covary” with respect to one another, or how changes in one variable compare with changes in the other. Are high scores on one variable associated with high scores on the other? Are high scores on one variable associated with low scores on the other?

Correlation works with sets of ordered pairs from a group of individuals. Each individual has two scores, one on each of two separate measures.

Correlation coefficient: an index of the extent of the relationship between the two variables. It can have values from -1.00 to +1.00.
A value of -1 means a perfect negative correlation, +1 means a perfect positive correlation, and 0 means no linear relationship between the variables. Correlations are reported using the notation r = followed by the value. Scattergrams illustrate the relationship graphically.

Uses of correlation: not used to identify cause and effect. Most often used to predict. The stronger the relationship between the variables (i.e., the stronger the correlation), the greater the accuracy of prediction.

Types

Pearson product-moment: both variables are on interval scales, like the score example just given.

Spearman rank order: both variables are on ordinal scales. Example: performance on a midterm and a final, each ranked from first to last.

Point biserial: one variable is on an interval scale and the other is dichotomous. Example: the relationship between gender and scores on the GRE verbal section.

Biserial: one variable is on an interval scale and the other is an artificial dichotomy on an ordinal scale (artificial because there is an underlying continuous distribution). Example: the relationship between scores on a midterm exam and a rating of stress level (?).

Coefficient of contingency (phi coefficient): between two variables on a nominal scale. Example: the relationship between gender and graduation from college (coded 0, 1 for gender and 0, 1 for graduation). It examines frequencies of occurrence: the numbers of males who graduated and did not graduate, and the numbers of females who graduated and did not graduate.
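As a rough illustration of how several of these coefficients relate to one another, here is a minimal Python sketch that is not part of the original notes. It assumes small, made-up data sets (the scores and the 0/1 codes below are hypothetical), and the function names are illustrative. It computes the Pearson coefficient directly, the Spearman coefficient as a Pearson correlation on ranks, and the point-biserial and phi coefficients as Pearson correlations in which one or both variables are coded 0/1. The biserial coefficient is left out because it needs an additional normal-curve adjustment.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation for two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def average_ranks(values):
    """Rank values from 1 (smallest); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman rank-order correlation: Pearson r computed on the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


# Hypothetical data for eight individuals (made up for illustration).
midterm = [70, 82, 65, 90, 75, 88, 60, 95]
final = [72, 85, 60, 95, 70, 80, 65, 91]
gender = [0, 0, 0, 0, 1, 1, 1, 1]      # dichotomous, coded 0/1
graduated = [0, 0, 0, 1, 1, 1, 1, 0]   # dichotomous, coded 0/1

print(f"Pearson r (midterm, final):     {pearson_r(midterm, final):.2f}")
print(f"Spearman rho (midterm, final):  {spearman_rho(midterm, final):.2f}")
print(f"Point biserial (gender, final): {pearson_r(gender, final):.2f}")
print(f"Phi (gender, graduated):        {pearson_r(gender, graduated):.2f}")
```

For the hypothetical gender and graduation codes above, the 2 x 2 frequency table has cells 3, 1, 1, 3, and the phi coefficient works out to 0.50, which matches the hand formula (ad - bc) / sqrt((a + b)(c + d)(a + c)(b + d)) = (9 - 1) / 16.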