6.1_6.4 - Chris Bilder`s

advertisement

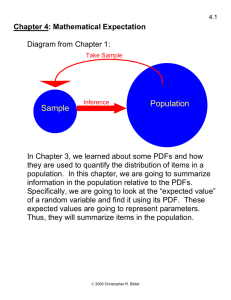

6.1 Chapter 6: Multiple Regression I 6.1 Multiple regression models 3-D Algebra Review Plot Y = 1 + 2x1 - 3x2 Y X1 1 0 3 1 -2 0 0 1 X2 0 0 1 1 The connected (Y, X1, X2) triples form a plane: 2012 Christopher R. Bilder 6.2 From above: From the X1 and X2 plane level (at Y=-3): 2012 Christopher R. Bilder 6.3 Simple linear regression One predictor variable is used to estimate one dependent variable. Take Sample Sample Inference Population Ŷi b0 b1Xi Yi 0 1Xi i Population Linear Regression Model Y Yi i=0+0 i i 1X i+ 1X i Observed Value i= Random Error YX 0 1X i E(Y)=0 + 1X X Observed Value 2012 Christopher R. Bilder 6.4 Sample Linear Regression Model Y i YYi i= 0b+0 1Xb i+ 1X ei i Unsampled Value YŶi bˆ00 bˆ11X Xi X Sampled Value 2012 Christopher R. Bilder 6.5 Multiple linear regression Two or more predictor variables are used to estimate 1 dependent variable. Take sample Sample Ŷi b0 b1Xi1 b2 Xi2 Inference Population bp 1Xi,p 1 Yi 0 1Xi1 2 Xi2 p1Xi,p1 i Notes: 1) i~independent N(0,2) 2) 0, 1, …,p-1 are parameters with corresponding estimates of b0, b1, …, bp-1 3) Xi1,…,Xi,p-1 are known constants (see Section 2.11 when the X’s are random variables – mostly everything stays the same) 4) The second subscript on Xik denotes the kth predictor variable. 5) i=1,…n Now suppose there are only 2 predictor variables: 2012 Christopher R. Bilder 6.6 2012 Christopher R. Bilder 6.7 Notes: 1) Often when we want to just refer to the first, second,… predictor variables, the i subscript is dropped from Xij. Thus, predictor variable #1 is X1, predictor variable #2 is X2,… 2) Yi=0+1Xi1+…+p-1Xi,p-1+i is called a “first-order” model since the model is linear in the predictor (independent, explanatory) variables. 3) There are p – 1 predictor variables and p parameters. 4) The coefficient on the kth predictor variable is k. This measures the effect Xk has on Y with the remaining variables in the model held constant. 5) Qualitative variables (variables not measured on a numerical scale) can be used in the multiple regression model. For example, let Xik=1 to denote female, Xik=0 to denote male. More will be done with qualitative variables in Chapter 8. 6) Polynomial regression models contain higher than first order terms in the model. For example, Yi 0 1Xi1 2 Xi12 i . More will be done with polynomial regression models in Chapter 7. 7) Transformed variables can be used just as in simple linear regression. For example, log(Yi) can be taken to be the response variable. More will be done with transformed variables in Chapter 7. 8) If the effect of one predictor variable on the response variable depends on another predictor variable, interactions between predictor variables can be included in the multiple regression model. For 2012 Christopher R. Bilder 6.8 example, Yi 0 1Xi1 2 Xi2 3 Xi1Xi2 i . More will be done with interactions in Chapter 7. 9) The word “linear” in multiple linear regression is in reference to the parameters. For example, Yi 0 1Xi1 12 Xi2 i is not a multiple LINEAR regression model. 2012 Christopher R. Bilder 6.9 6.2 General linear regression model in matrix terms Note that the Yi=0+1Xi1+…+p-1Xi,p-1+i for i=1,…,n can be written as: Y1=0+1X11+…+p-1X1,p-1+1 Y2=0+1X21+…+p-1X2,p-1+2 Y3=0+1X31+…+p-1X3,p-1+3 Yn=0+1Xn1+…+p-1Xn,p-1+n Let 1 X11 Y1 1 X Y 21 Y 2 , X= Y 1 Xn1 n X12 X22 Xn2 X1,p 1 0 1 X2,p 1 1 , and 2 Xn,p 1 p 1 n Then Y = X + , which is Y1 Y 2= Yn 1 X11 1 X 21 1 Xn1 X12 X22 Xn2 X1,p 1 0 1 X2,p 1 1 2 Xn,p 1 p 1 n Remember that has mean 0 and covariance matrix 2012 Christopher R. Bilder 6.10 2 0 0 2 0 0 2I 2 0 0 since the i are independent. Note that E(Y) = E(X + ) = E(X) = X since E() = 0 Question: What is Cov(Y)? 2012 Christopher R. Bilder 6.11 6.3 Estimation of regression coefficients (j’s) Parameter estimates are found using the least squares method. From Chapter 1: The least squares method tries to find the b0 and b1 such that SSE = (Y- Ŷ )2 = (residual)2 is minimized. Formulas for b0 and b1 are derived using calculus. For multiple regression, the least squares method minimizes SSE = (Y- Ŷ )2 again; however, Ŷ is now Ŷi b0 b1Xi1 b2 Xi2 ... bp 1Xi,p 1 As shown in Section 5.10, the least squares estimators are b ( X X )1 X Y . This holds true for multiple regression with X1,p 1 1 X11 X12 b0 1 X b X X 21 22 2,p 1 1 X and b Xn,p 1 1 Xn1 Xn2 bp 1 Properties of the least squares estimators in simple linear regression (such as: unbiased estimators and minimum variance among unbiased estimators) hold true for multiple linear regression. 2012 Christopher R. Bilder 6.12 6.4 Fitted values and residuals Ŷ1 Let Yˆ . Then Yˆ Xb since Ŷ n Ŷ1 1 X11 X1,p 1 b0 X2,p 1 b1 Yˆ 2 1 X21 1 X X b n1 n,p 1 p 1 Ŷn b0 b1X11 bp 1X1,p 1 b b X b X 0 1 21 p 1 2,p 1 b b X b X 0 1 n1 p 1 n,p 1 Also, e1 Y1 Ŷ1 e Y ˆ 2 2 Y2 e en Yn Ŷn ˆ Xb X(XX )1 X ' Y ΗY where H=X(XX)-1X Hat matrix: Y is the hat matrix 2012 Christopher R. Bilder 6.13 We will use this in Chapter 10 to measure the influence of observations on the estimated regression line. Covariance matrix of the residuals: Cov(e) = (I-H) and Cov(e ) MSE (I H) 2 2012 Christopher R. Bilder