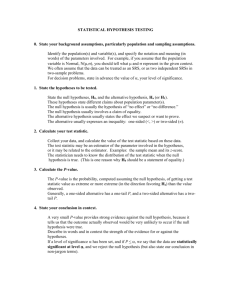

SAMPLING DISTRIBUTIONS PART I: ESTIMATING A POPULATION


Confidence Intervals

In statistics, we often prefer to construct an interval estimate of the unknown population parameter. An interval estimate proposes a range of values to account for the inherent uncertainty in any estimation process. The most common type of interval estimate is the confidence interval, which employs an interval formula that, a priori, contains the true parameter with high probability. Unfortunately, once a particular interval is calculated from sample data, we never know whether it is among those that contain the true parameter or those that do not.

From our knowledge of the Central Limit Theorem and the standard normal distribution (Z), we know that the following probability statement is approximately true for large enough $n$:

$$P\left(-z_{\alpha/2} \le \frac{\bar{X} - \mu}{\sigma/\sqrt{n}} \le z_{\alpha/2}\right) = 1 - \alpha$$

where the “cutoff” point $z_{\alpha/2}$ is chosen so that $\alpha/2$ area is nestled in the upper tail.

[Figure: standard normal (Z) density over the Z-values; the area in each tail, below $-z_{\alpha/2}$ and above $z_{\alpha/2}$, is exactly $\alpha/2$.]

Q: How would you compute the z-value needed here?

A: Take the given $\alpha$ and compute $z_{\alpha/2}$ with either (1) =NORMSINV(1-α/2) or (2) =NORMINV(1-α/2, 0, 1).

The preceding probability statement can be rearranged to state

$$P\left(\bar{X} - z_{\alpha/2}\frac{\sigma}{\sqrt{n}} \le \mu \le \bar{X} + z_{\alpha/2}\frac{\sigma}{\sqrt{n}}\right) = 1 - \alpha.$$

This says the formula $\bar{X} \pm z_{\alpha/2}\,\sigma/\sqrt{n}$ will “work” (contain the true mean $\mu$) $100(1-\alpha)\%$ of the time in the long run. If we replace $\bar{X}$ with an observed sample mean $\bar{x}$, then we get a sample interval

$$\bar{x} \pm z_{\alpha/2}\frac{\sigma}{\sqrt{n}},$$

which is called a $100(1-\alpha)\%$ confidence interval for the mean. Observe that we still do not know if the computed interval is one of the instances where the formula “worked.” If the underlying population we draw from is normally distributed, the confidence interval is said to be an exact $100(1-\alpha)\%$ confidence interval. Otherwise we call it an approximate $100(1-\alpha)\%$ confidence interval. The probability $\alpha$ is assumed to be small, typically .05 or less. This is the probability that the formula fails in the long run.
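As a rough illustration of the known-σ interval, the following Python sketch mirrors the NORMSINV calculation using only the standard library. The function name `z_interval` and the inputs in the example call (sample mean 50, σ = 10, n = 100) are assumptions made purely for illustration; they do not come from these notes.

```python
from math import sqrt
from statistics import NormalDist


def z_interval(xbar, sigma, n, alpha=0.05):
    """Return (lower, upper) for xbar +/- z_{alpha/2} * sigma / sqrt(n)."""
    # Plays the same role as Excel's NORMSINV(1 - alpha/2).
    z = NormalDist().inv_cdf(1 - alpha / 2)
    half_width = z * sigma / sqrt(n)
    return xbar - half_width, xbar + half_width


# Hypothetical inputs: sample mean 50, known sigma 10, n = 100 observations.
lower, upper = z_interval(xbar=50.0, sigma=10.0, n=100, alpha=0.05)
print(f"95% CI: ({lower:.2f}, {upper:.2f})")
```

Lowering `alpha` widens the interval, which is the price paid for a formula that fails less often in the long run.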
The confidence interval formula stated earlier is not very useful unless $\sigma$ (or equivalently $\sigma^2$) is known. Soon we’ll see how to replace $\sigma^2$ with a sample estimate, which creates only minor changes in the formula.

Example. Assuming $\sigma^2 = 4900$, construct a 95% confidence interval for the mean repair cost in the preceding car repair example. Recall that the sample mean was $\bar{x} = \$215.66$.

Solution.

Estimating an Unknown Population Variance

In the vast majority of real-life data situations, you will not know the population variance $\sigma^2$. The formulas for confidence intervals developed earlier do not apply “as is.” There are a number of ways to handle the problem, but most focus on using an estimate of the true population variance $\sigma^2$. Given a random sample $(X_1, X_2, \ldots, X_n)$ from a large population, the most common estimate of the population variance is the sample variance, denoted by $s^2$, and given by the formula

$$s^2 = \frac{1}{n-1}\sum_{i=1}^{n}(x_i - \bar{x})^2.$$

This value is computed in Excel with the VAR(:) function. The argument (:) references the spreadsheet cells containing the sample data. The sample standard deviation is $s$ (the square root of the sample variance), and it is given by the Excel function STDEV(:). Alternatively, $s$ can be calculated by taking the square root of VAR(:).

Confidence Intervals with an Unknown Population Variance: The t Distribution

In our earlier confidence interval formulas, we assumed that the standard deviation $\sigma$ was known (see the hail dent analysis). This allowed us to use z-values from a standard normal and compute confidence intervals. If we do not know $\sigma$, it is tempting to simply insert the sample estimate $s$ in its place. Fortunately, we can do this with only minor modifications. However, this requires an understanding of a new distribution called the t distribution. If a random sample is drawn from a normal distribution, the quantity

$$\frac{\bar{X} - \mu}{s/\sqrt{n}}$$

follows a t distribution with $n-1$ degrees of freedom (df).
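A small Python sketch of these quantities may help. It uses `statistics.variance` and `statistics.stdev`, which divide by n - 1 in the same way as the sample variance formula; the data values and the assumed population mean `mu` are invented purely for illustration.

```python
from math import sqrt
from statistics import mean, stdev, variance

# Hypothetical sample, invented only to exercise the formulas.
sample = [2, 4, 4, 4, 5, 5, 7, 9]
n = len(sample)

xbar = mean(sample)
s2 = variance(sample)   # sample variance, divisor n - 1 (like Excel's VAR)
s = stdev(sample)       # sample standard deviation (like Excel's STDEV)

# Ratio (xbar - mu) / (s / sqrt(n)) for an assumed population mean mu.
mu = 4.0
t_ratio = (xbar - mu) / (s / sqrt(n))

print(f"xbar={xbar}, s^2={s2:.4f}, s={s:.4f}, df={n - 1}, t={t_ratio:.4f}")
```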
Note that the denominator has an $s$ instead of a $\sigma$. The “degrees of freedom” is a parameter that you do not need to estimate since it depends directly on the sample size $n$. The t distribution is symmetric about 0 and looks a lot like the normal distribution (especially as the degrees of freedom increase). The general shape for various degrees of freedom is depicted below.

[Figure: t distribution vs. z distribution, showing t with 10 df, t with 20 df, and t with df > 30 (almost normal), all centered at 0.]

To calculate probabilities for the t distribution, we use the TDIST function in Excel. The value p = TDIST(x, df, 1) is the area (= probability) above a nonnegative value x from a t distribution with df degrees of freedom. The value p = TDIST(x, df, 2) is the combined area above x and below -x.

[Figure: p = TDIST(x, df, 1) is the area above x; p = TDIST(x, df, 2) is the combined area below -x and above x.]

To convert a probability into a t-value, we use the TINV function. In Excel, TINV(p, df) is the value x such that p/2 is the area above x in a t distribution with df degrees of freedom.

[Figure: AREA = p/2 above x = TINV(p, df).]

One must remember that TINV splits the given probability (p) in half when calculating the x value.

Confidence Intervals using the t Distribution

If we continue to assume that the population we are sampling from is normal (or approximately normal), then we can construct a $100(1-\alpha)\%$ confidence interval (CI) for the mean using the formula

$$\bar{x} \pm t_{\alpha/2,\,df}\,\frac{s}{\sqrt{n}}, \qquad df = n - 1.$$

Example. (Brent Pope, SMU MBA Class 46P). Airco has inspected 81 armature hubs and measured their roughness. The data are provided in Hubs.xls. Calculate a 95% confidence interval (CI) for the mean roughness. Calculate a 98% confidence interval (CI) for the mean roughness.

Example. (Tamir Ayad, SMU MBA Class P43P) A new production process is being tested to reduce the number of “large” particles (2 microns and higher) that contaminate silicon wafers. A random sample of n = 81 wafers is drawn and the large contaminating particles counted.
The following sample statistics were computed: $\bar{x} = 1.88$, $s^2 = 2.71$. Construct a 99% interval for the mean of the new process.

Note: When there is sufficient data, you should use the HISTOGRAM function in DATA ANALYSIS (under TOOLS) to see if the data appear to be roughly normally distributed when using the t distribution. Double click on HISTOGRAM and fill out your options (select “Chart Output” to get the visual display or histogram).

Assignment 2

Central Limit Theorem Problems

1. Book, 7.24 (Use CLT for n ≥ 30) NOTE: On part (d), simply determine if the sample size in part (c) is adequate. What would you recommend with respect to the sample size n = 45?
2. Book, 7.25 (Use CLT for n ≥ 30)

Confidence Interval Problems

3. Book, 8.17 (Remember, if you use s for σ, use t!)
4. Book, 8.21
5. Book, 8.22

Hypothesis Testing

Hypothesis testing concerns assessing the truth of stated conjectures using formal statistical methods. The stated conjecture may be a widely held belief, a “status quo” position, or simply an opinion regarding the true state of things. In many cases, the stated conjecture is advanced even though it is believed to be false. This is because in hypothesis testing, the stated conjecture, called the null hypothesis, can be disproved, but it cannot be proved. However, by disproving the null hypothesis, one proves that the contrary is true. The contrary of the null hypothesis is called the alternative hypothesis.

To make this concrete, we will draw an analogy with the American legal system. When someone is brought to trial on criminal charges, it is because the prosecution (or more accurately, the state) believes a crime has been committed. But it is insufficient for the prosecution to simply declare a crime has been committed; instead, the prosecution must prove its case. This is why a person is presumed innocent until the prosecution convinces a jury, beyond a reasonable doubt, that they are guilty.
Observe that proving a defendant’s guilt is equivalent to disproving a defendant’s innocence.

In hypothesis testing, the null hypothesis is on trial. Like the defendant in a criminal case, the null hypothesis is presumed innocent (or correct) until we, as statistical prosecutors, disprove it using sample data. If we are successful in disproving the null hypothesis, then we have equivalently shown that the alternative hypothesis is true. In both court cases and statistics, we win some cases, we lose some cases, and sometimes we arrive at the wrong conclusion.

In a criminal case, we are typically more fearful of putting an innocent person in jail than letting a guilty person go free, and this is why we insist the jury be convinced of a person’s guilt beyond a reasonable doubt. This is a fairly rigorous standard, but it is meant to ensure that those who are pronounced guilty really are guilty. In hypothesis testing, we adopt a similar philosophy regarding the standard needed to disprove the null hypothesis. The sample data is used to compute a test statistic, whose distribution is known (at least approximately). We then reject the null hypothesis if the computed value of this test statistic falls in a region that is very improbable if the null hypothesis is true but very likely if it is false. In other words, the data needs to offer substantial evidence that the null hypothesis is false before we reject it.

Some of the most common (and useful) hypothesis tests concern population means. Here are the null ($H_0$) and alternative ($H_A$) hypotheses for three standard tests. The names will make more sense later.

1. The “Two-Tailed” Test. $H_0: \mu = a$ (Null), $H_A: \mu \ne a$ (Alternative)
2. The “One-Tailed” Test to the Left. $H_0: \mu \ge a$, $H_A: \mu < a$ or $H_0: \mu = a$, $H_A: \mu < a$
3. The “One-Tailed” Test to the Right. $H_0: \mu \le a$, $H_A: \mu > a$ or $H_0: \mu = a$, $H_A: \mu > a$

Example: Confiscating Scallops from Illegal Fishing

Here’s a summary of the story published by Arnold Barnett in Interfaces, Vol. 25, March-April, 1995.
In an effort to prevent over-fishing of local scallop populations, US law requires the average [1] meat per scallop to weigh at least 1/36 of a pound in a fishing vessel’s catch. One such vessel, arriving in Massachusetts with 11,000 bags of fresh scallops, was tested for compliance by the US Fisheries and Wildlife Service (FWS). The FWS took a “large scoop” from each of 18 “randomly selected” bags and computed the scoop’s average scallop meat. Each scoop’s average was then divided by the 1/36 of a pound US requirement so that a fraction of the standard could be reported. These 18 fractions are given below.

Average Meat per Scallop in the 18 Scoops (measured as a fraction of the US requirement of 1/36 lb)

.93 .88 .85 .91 .91 .84 .89 .98 .87
.91 .92 .99 .90 1.14 .98 1.06 .88 .93

What sort of evidence does this sample provide that the fishing outfit has violated the law? In this case, the FWS should take as its null hypothesis “The mean weight is at least 1/36 of a pound,” versus the alternative hypothesis “The mean weight is less than 1/36 of a pound.” This is because the FWS may suspect that the law has been violated, but they need to prove that this is the case. The FWS assumes compliance as its null hypothesis (“innocent”), but if the null hypothesis is disproved, then the fishing outfit is out of compliance (“guilty”). In short, $H_0$ is compliance with the law, $H_A$ is violation of the law.

Since the data are reported in fractions of the US minimum requirement (1/36 of a pound), we choose to state the null and alternative hypotheses in terms of these fractions. In the formal language of hypothesis testing, we would write:

$$H_0: \mu \ge 1 \qquad H_A: \mu < 1$$

This is a one-tailed test to the left.

[1] Here, the overall catch’s average is its population mean. Of course, calculating this mean is impossible because there are too many scallops.

The Test Statistic

The test statistic is a formula based on the sample data whose distribution is known under the null hypothesis.
Certain values of the test statistic support the null hypothesis, others appear to contradict it. For the three tests described above, the test statistic most commonly used is

$$\frac{\bar{X} - \mu_0}{s/\sqrt{n}},$$

whose components are defined below.

| Symbol | General Meaning | Value in this problem |
|---|---|---|
| $\bar{X}$ | The sample mean of the random sample | .931 |
| $\mu_0$ | The conjectured value of $\mu$ under $H_0$ | 1 |
| $s$ | The sample standard deviation | .075 |
| $n$ | The sample size | 18 |

People often abuse the term test statistic and refer to the value one obtains for a particular sample as the “test statistic.” Technically speaking, one should say this is the value of the test statistic or the computed test statistic. Even statisticians abuse this terminology.

If the null hypothesis is true, then, under suitable conditions (namely, the sample consists of independent draws from a normal distribution), $(\bar{X} - \mu_0)/(s/\sqrt{n})$ follows a t distribution with 17 degrees of freedom. This distributional result should look familiar to you from our work with confidence intervals.

If the value of this test statistic is “not too negative,” then the null hypothesis is at least plausible. In the language of statistics, we would “fail to reject” the null hypothesis. But if the value is “too negative” (in a sense refined later), then the null hypothesis becomes indefensible, and so one should conclude that it is false. In the language of statistics, when the value of the t-statistic becomes too negative we “reject” the null hypothesis and conclude that the alternative hypothesis is true.

The Level of the Test (α) and the Critical Values

So what values of the test statistic are “so negative” that they demand rejection of the null hypothesis? In other words, what values of $(\bar{X} - \mu_0)/(s/\sqrt{n})$ lead us to reject the null hypothesis? The answer depends on you and your tolerance for making a mistake. Let the Greek letter alpha ($\alpha$) be a small probability that represents your tolerance for rejecting the null hypothesis when it is actually true.
Using the jury analogy, this is your tolerance for convicting an innocent person. Your tolerance for making a mistake clearly depends on the situation. You might tolerate a 1 in 10 chance of falsely convicting someone of jaywalking because the consequences are minimal (a small fine), but you would probably demand a higher standard (say 1 in 100 or 1 in 1000) if the charge is murder. Typical values for $\alpha$ include .10, .05, .01 and .001.

For your selected value of $\alpha$, determine a region of values for $(\bar{X} - \mu_0)/(s/\sqrt{n})$ that has probability $\alpha$ under the null hypothesis and is least supportive of the null hypothesis (and thus most supportive of the alternative hypothesis). In the context of the scallop example, this means values of $(\bar{X} - \mu_0)/(s/\sqrt{n})$ that fall in the leftmost “tail” of a t distribution with 17 degrees of freedom. For $\alpha = .05$ (the default value used by most people), the values that are least supportive of the null hypothesis are those to the left of $-t_{.05,17} = -1.74$ (see picture below). Note that these values are unlikely if the null hypothesis is true but much more likely if the alternative hypothesis is true.

[Figure: t distribution with 17 degrees of freedom; the rejection region for α = .05 is the left tail of area .05 below the critical value $-t_{.05,17} = -1.74$, where $t_{.05,17}$ = TINV(.10,17).]

In the language of hypothesis testing, the value of $\alpha$ is called the level of significance or simply the “level” of the test. The value -1.74 is called the critical value. Note that we calculate its magnitude in Excel as TINV(.10,17), not TINV(.05,17), because Excel automatically splits the probability in half. Values to the left of the critical value constitute the rejection region.

In our example, the value of the test statistic computed from the random sample of 18 bags is

$$t = \frac{.931667 - 1}{.075323/\sqrt{18}} = -3.849.$$

This value is far to the left of the critical value $-t_{.05,17} = -1.74$, and thus it does not support the null hypothesis at level $\alpha = .05$. This value does support the alternative hypothesis that the true mean should be smaller.
We would therefore reject the null hypothesis and conclude that the average scallop weight (for the boat) is less than that allowed by US law. In the formal language of statistics:

“At level $\alpha = .05$, there is sufficient sample information to reject the null hypothesis that the mean scallop weight for the catch is at least 1/36 of a pound. We therefore conclude that the mean scallop weight for the catch is less than 1/36 of a pound.”

If we had been unable to reject the null hypothesis, then the word “sufficient” would be replaced by the word “insufficient” and we would not be able to conclude that the boat’s catch violated the law.

Example. Suppose you manufacture plastic medical parts that are intended to have a mean thickness of 15 mm. You want to test the null hypothesis $\mu = 15$ against the alternative $\mu \ne 15$. You collect a sample of n = 81 observations and calculate the following sample statistics: $\bar{x} = 14.87$, $s^2 = .25$. Conduct an appropriate hypothesis test at level $\alpha = .05$ to see if your parts have a mean thickness of 15 mm.

Here, the opposing hypotheses are

$$H_0: \mu = 15 \qquad H_A: \mu \ne 15$$

In this test, we again use the test statistic $(\bar{X} - 15)/(s/\sqrt{n})$. If the null hypothesis is true, we would rarely expect to see values of the test statistic that are “very negative” or “very positive” (i.e., in either “tail”). Using $\alpha = .05$, this translates into two critical values: $-t_{\alpha/2,80} = -1.990$ and $t_{\alpha/2,80} = 1.990$ (=TINV(.05,80)). The situation is pictured below.

[Figure: t distribution with 80 degrees of freedom; each tail has area α/2 = .025, below $-t_{.025,80} = -1.99$ and above $t_{.025,80} = 1.99$ = TINV(.05,80).]

The rejection region for $\alpha = .05$ consists of two intervals, $(-\infty, -1.99)$ and $(1.99, \infty)$. Notice that we have divided the small probability $\alpha = .05$ in half in our picture because we have two directions that do not support our null hypothesis and $\alpha = .05$ must accommodate both directions. However, we do not split this value when using TINV for a two-tailed test. This is because the TINV function splits the value of $\alpha$ automatically. In our example, $t_{.025,80}$ = TINV(.05,80).
When doing one-tailed tests, one must remember to anticipate this split. The value of our test statistic using the sample data is

$$t = \frac{14.87 - 15}{\sqrt{.25}/\sqrt{81}} = -2.34.$$

This value falls in the portion of the rejection region below -1.99. This leads us to reject the null hypothesis and conclude that $\mu \ne 15$. In formal statistical language:

“At level $\alpha = .05$, there is sufficient sample information to reject that the mean is 15 mm (and thus conclude that the mean is not 15).”

P-Values

In each of the previous examples, we could have started with a smaller value of $\alpha$ and still rejected the null hypothesis. How small could $\alpha$ have been? The smallest value of $\alpha$ that still allows you to reject the null hypothesis for the computed value of your test statistic is called the p-value for the hypothesis test. Very informally, it is sometimes described as the “probability that the null hypothesis is true,” but that description is not accurate. Technically speaking, it is the probability of observing a value of the test statistic at least as extreme as the one computed, given that the null hypothesis is true. Since this involves calculating a probability, you can use the TDIST function applied to the value of your test statistic to calculate the p-value.

Observe that the p-value is always between 0 and 1. As the p-value approaches 0, the data offers less support for the null hypothesis and therefore more support for the alternative hypothesis. How small should your p-value be before you decide the null hypothesis is false and the alternative is true? That’s up to you. Typical threshold values are .05, .01, and .001. Some people prefer to simply report the p-value and let the audience decide. The important thing to remember is this: the smaller the p-value, the more the data contradicts the null hypothesis and supports the alternative hypothesis.

Example: Scallop Confiscation (revisited). What is the p-value for the test conducted in the scallop problem?

Solution. Start by drawing a picture.
What is the smallest value of $\alpha$ that still allows us to reject the null hypothesis given the current value of the test statistic t = -3.849? After a little discussion, you will realize the p-value in the scallop example is the area to the left of the value of the test statistic. Remember the test statistic follows a t distribution with 17 degrees of freedom. Because the t distribution is symmetric, this p-value is also the area to the right of the positive value t = 3.849, namely TDIST(3.849,17,1) = .000643.

Example. What is the p-value for the medical part problem?

Solution. Start by drawing a picture. What is the smallest value of $\alpha$ that still allows us to reject the null hypothesis? Recall the value of the test statistic was -2.34 and the test was a two-tailed test. (Answer: TDIST(2.34,80,2) = .02177)

Example (Tamir Ayad, SMU Class P43P) A new production process is being considered to reduce the number of “large” particles (2 microns and higher) that contaminate silicon wafers. The old process was known to produce an average of 3.01 such particles per wafer. The new process was tested using a sample of n = 81 observations. The following sample statistics were computed: $\bar{x} = 1.88$, $s^2 = 2.71$. Is the new process better? What is the p-value for your test?

Solution. Start by drawing a picture.

All hypothesis tests in this course work essentially the same way. In summary:

1. State your null and alternative hypotheses.
2. Determine the appropriate test, test statistic, and critical value(s) for the given level of significance ($\alpha$).
3. Compute a value for your test statistic based on the sample data.
4. Depending on the value of the test statistic, either “reject” or “fail to reject” the null hypothesis at the given level of $\alpha$.
5. Compute the p-value for your test if required.
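The five steps can be sketched in Python for the scallop data. Excel's TDIST and TINV have no counterpart in the Python standard library, so this sketch approximates the t distribution numerically (Simpson's rule for tail areas, bisection for the critical value). The helper names `t_pdf`, `t_upper_tail`, and `t_critical` are hypothetical, and in practice a statistics library would normally do this work.

```python
from math import exp, lgamma, log, pi, sqrt
from statistics import mean, stdev


def t_pdf(x, df):
    """Density of the t distribution with df degrees of freedom."""
    log_c = lgamma((df + 1) / 2) - lgamma(df / 2) - 0.5 * log(df * pi)
    return exp(log_c - (df + 1) / 2 * log(1 + x * x / df))


def t_upper_tail(x, df, steps=2000):
    """Area above x; for x >= 0 this plays the role of TDIST(x, df, 1)."""
    if x == 0:
        return 0.5
    h = x / steps  # Simpson's rule on [0, x]; steps must be even
    total = t_pdf(0, df) + t_pdf(x, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - total * h / 3


def t_critical(alpha, df):
    """Positive value whose upper-tail area is alpha (alpha < .5), by bisection."""
    lo, hi = 0.0, 100.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_upper_tail(mid, df) > alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Step 1: H0: mu >= 1 versus HA: mu < 1 (one-tailed test to the left).
scoops = [.93, .88, .85, .91, .91, .84, .89, .98, .87,
          .91, .92, .99, .90, 1.14, .98, 1.06, .88, .93]
mu0, alpha = 1.0, 0.05
n = len(scoops)
df = n - 1

# Step 2: critical value for a left-tailed test at level alpha.
critical = -t_critical(alpha, df)

# Step 3: value of the test statistic.
t_stat = (mean(scoops) - mu0) / (stdev(scoops) / sqrt(n))

# Step 4: reject H0 when the statistic falls to the left of the critical value.
reject = t_stat < critical

# Step 5: p-value = area to the left of t_stat, by symmetry the area above -t_stat.
p_value = t_upper_tail(-t_stat, df)

print(f"t = {t_stat:.3f}, critical value = {critical:.2f}, "
      f"reject H0: {reject}, p-value = {p_value:.6f}")
```

The printed statistic, critical value, and p-value should agree, up to rounding, with the -3.849, -1.74, and .000643 reported in the notes.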
Assumptions for the t Test

There is an important assumption underlying the t test: the underlying population we draw our random sample from is normally distributed (or approximately normally distributed). This is the same assumption used for constructing confidence intervals based on the t-statistic.

The normality assumption is probably safe in our scallop case since we are actually dealing with 18 averages (one for each scoop), and we know from the CLT that averages are approximately normally distributed for large enough sample sizes. However, the averages do not all have the same number of observations. Could this create problems? Could the FWS come up with a better statistical procedure for testing compliance? Could the scoops have been selected in a nonrandom fashion?

Hypothesis Testing: Hints for Testing a Population Mean

Remember that if you want to prove something, it must be your alternative hypothesis ($H_A$). If you fail to reject (“there is insufficient information at level alpha to reject the null hypothesis”), you have not proven $H_0$ is true. By analogy, a defendant who is found not guilty is not necessarily innocent of all charges.

Here are a few tips for identifying the null and alternative hypotheses in word problems. They are a little cynical, but then so are word problems. In the real world, it’s typically easier to identify the two hypotheses.

Tip #1: For tests of a population mean, $H_A$ will always be of the form (1) $\mu < \mu_0$, (2) $\mu > \mu_0$, or (3) $\mu \ne \mu_0$ (equivalently, $\mu < \mu_0$ or $\mu > \mu_0$). English statements like “less than,” “greater than,” and “not equal to” therefore identify alternative hypotheses.

Tip #2: For testing a population mean, the corresponding null hypotheses are of the form (1) $H_0: \mu \ge \mu_0$, (2) $H_0: \mu \le \mu_0$, or (3) $H_0: \mu = \mu_0$. Thus English statements like “at least,” “no more than,” etc., must be statements regarding the null hypothesis.
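However, for actual testing purposes, a null hypothesis of the form $H_0: \mu \ge \mu_0$ will be replaced by the test $H_0: \mu = \mu_0$ (with alternative $H_A: \mu < \mu_0$). Similarly, a null hypothesis of the form $H_0: \mu \le \mu_0$ will be replaced by the test $H_0: \mu = \mu_0$ (with alternative $H_A: \mu > \mu_0$).

There are basically three tests. Each uses the test statistic

$$t = \frac{\bar{x} - \mu_0}{s/\sqrt{n}} \sim t_{n-1\,\text{df}}$$

| | One-tailed to the left | One-tailed to the right | Two-tailed |
|---|---|---|---|
| Null | $H_0: \mu = \mu_0$ | $H_0: \mu = \mu_0$ | $H_0: \mu = \mu_0$ |
| Alternative | $H_A: \mu < \mu_0$ | $H_A: \mu > \mu_0$ | $H_A: \mu \ne \mu_0$ |
| Reject $H_0$ if | $t < -t_{\alpha,\,n-1\,\text{df}}$ | $t > t_{\alpha,\,n-1\,\text{df}}$ | $t < -t_{\alpha/2,\,n-1\,\text{df}}$ or $t > t_{\alpha/2,\,n-1\,\text{df}}$ |

Assignment #3

1. Book, 9.27
2. Book, 9.29
3. Book, 9.31
4. Book, 9.32
5. Book, 9.33
6. Book, 9.34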