Solution

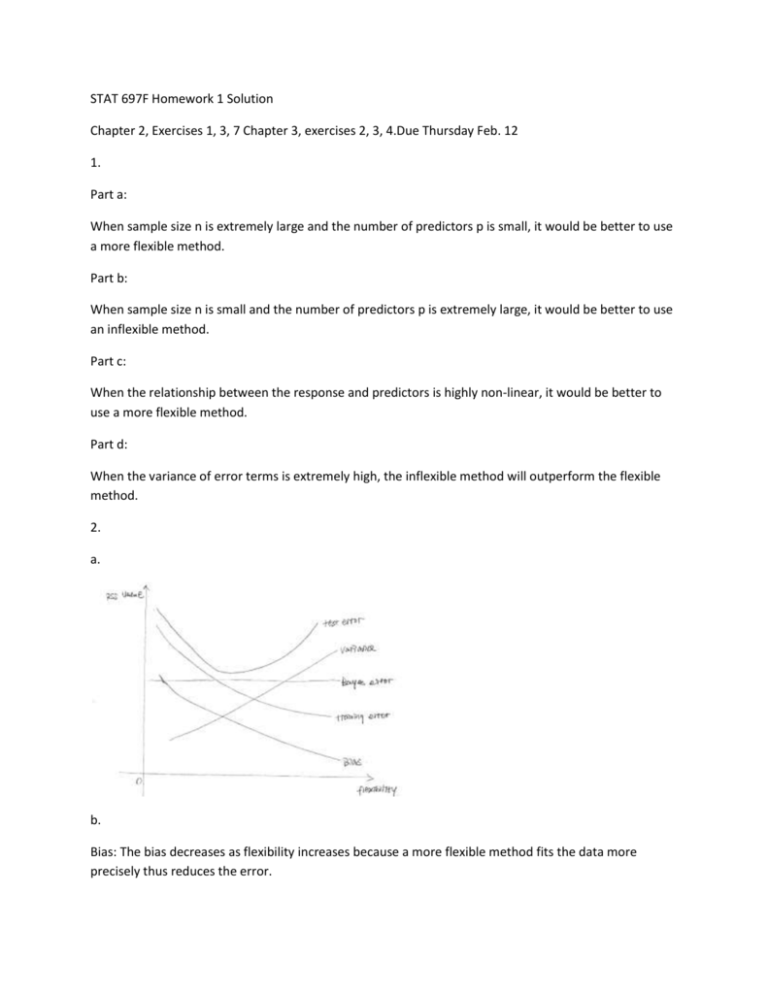

STAT 697F Homework 1 Solution

Chapter 2, Exercises 1, 3, 7; Chapter 3, Exercises 2, 3, 4. Due Thursday Feb. 12.

1. Part a: When the sample size n is extremely large and the number of predictors p is small, a more flexible method is the better choice.

   Part b: When the sample size n is small and the number of predictors p is extremely large, an inflexible method is the better choice.

   Part c: When the relationship between the response and the predictors is highly non-linear, a more flexible method is the better choice.

   Part d: When the variance of the error terms is extremely high, an inflexible method will outperform a flexible one, because a flexible method would fit the noise.

2. a.

   b. Bias: The bias decreases as flexibility increases, because a more flexible method can follow the true relationship more closely and so has less approximation error.

   Variance: The variance increases as flexibility increases, because a more flexible method tracks the training data more closely and tends to overfit it.

   Test error: The test error is U-shaped because of the bias-variance trade-off: it falls while the bias drops faster than the variance grows, then rises once the variance dominates.

   Training error: The training error decreases as flexibility increases, because the model adapts more and more closely to the training data.

   Bayes error: This is the irreducible error, so it does not change with flexibility.

3. Part a: The distances to the six observations are 3, 2, 3.16, 2.24, 1.41, and 1.73.

   Part b: When K is 1, we look only at the closest observation (observation 5, at distance 1.41), which is Green, so the prediction is Green.

   Part c: When K is 3, the three closest observations are 2, 5, and 6. Two of them are Red, so the majority vote is Red.

   Part d: A small value of K is the better choice in this case: as K decreases, the decision boundary becomes more flexible and can come closer to the highly non-linear true decision boundary.

4. The KNN classifier handles a qualitative response variable. When the response variable is quantitative, we need to use KNN regression instead.

5. a. i. False. ii. False. iii. True. iv. False.

   b. Plugging in x1 = 4 and x2 = 110, the prediction is 137.1.

   c. False. The magnitude of a coefficient does not by itself indicate the importance of the variable.

6. a. The cubic regression would give the lower training RSS.

   b. The cubic regression would give the higher test RSS if the true relationship between X and Y is linear, since it overfits the data.

   c. The cubic regression would give the lower training RSS. As the model becomes more complex, the training error decreases regardless of what the true relationship is.

   d. It is hard to say, since we don't know the true relationship.
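
The training-RSS claim in Problem 6 (parts a and c) can be checked numerically. The following is a minimal sketch, not part of the original solution: it assumes a simulated data set with a linear true relationship, and the coefficients, noise level, sample size, seed, and the helper name `training_rss` are all illustrative choices. Because the linear model is a special case of the cubic model, the least-squares cubic fit can never have a larger training RSS.

```python
import numpy as np


def training_rss(x, y, degree):
    """Fit a polynomial of the given degree by least squares; return its training RSS."""
    coefs = np.polyfit(x, y, degree)
    residuals = y - np.polyval(coefs, x)
    return float(np.sum(residuals ** 2))


# Simulated data (assumption): the true relationship is linear with Gaussian noise.
rng = np.random.default_rng(0)  # arbitrary seed for reproducibility
n = 100
x = rng.uniform(-2.0, 2.0, size=n)
y = 1.0 + 2.0 * x + rng.normal(scale=1.0, size=n)

rss_linear = training_rss(x, y, degree=1)
rss_cubic = training_rss(x, y, degree=3)

print(f"training RSS, linear fit: {rss_linear:.3f}")
print(f"training RSS, cubic fit:  {rss_cubic:.3f}")
```

The same comparison on fresh test data is not guaranteed to go either way for a single sample, which is why parts b and d depend on the true relationship.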