Sums of Gamma Random Variables

advertisement

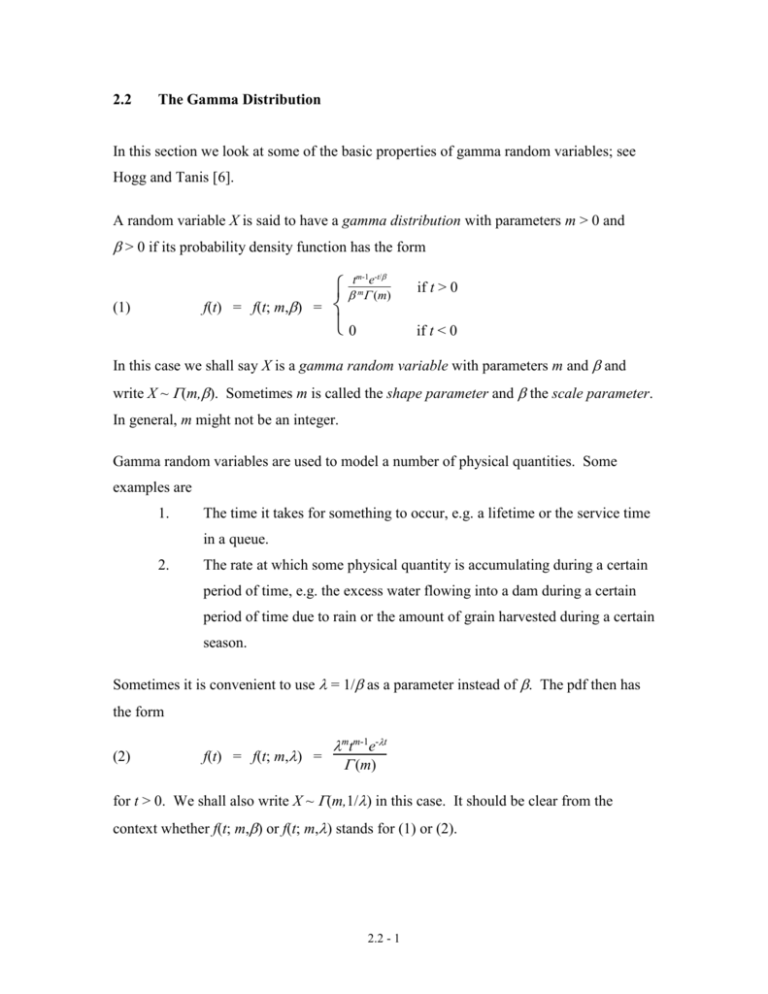

2.2 The Gamma Distribution

In this section we look at some of the basic properties of gamma random variables; see Hogg and Tanis [6].

A random variable $X$ is said to have a gamma distribution with parameters $m > 0$ and $\beta > 0$ if its probability density function has the form

$$f(t) = f(t; m, \beta) = \begin{cases} \dfrac{t^{m-1} e^{-t/\beta}}{\beta^m \, \Gamma(m)} & \text{if } t > 0 \\[1ex] 0 & \text{if } t < 0 \end{cases} \tag{1}$$

In this case we shall say $X$ is a gamma random variable with parameters $m$ and $\beta$ and write $X \sim \Gamma(m, \beta)$. Sometimes $m$ is called the shape parameter and $\beta$ the scale parameter. In general, $m$ might not be an integer.
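The density (1) can be evaluated directly in code. The following is a minimal sketch using only the Python standard library; the function name `gamma_pdf` and the argument name `scale` (for the scale parameter) are illustrative choices, not notation from the text. It works with logarithms so that large shape values do not overflow, and it returns 0 at $t = 0$ as a convention of this sketch, since (1) leaves that point unspecified.

```python
import math

def gamma_pdf(t, m, scale):
    """Evaluate the density (1) with shape m > 0 and scale > 0."""
    if m <= 0 or scale <= 0:
        raise ValueError("m and scale must be positive")
    if t <= 0:
        # (1) gives 0 for t < 0; returning 0 at t = 0 is an assumption of this sketch.
        return 0.0
    # Work with logarithms so that large m does not overflow math.gamma.
    log_f = (m - 1) * math.log(t) - t / scale - m * math.log(scale) - math.lgamma(m)
    return math.exp(log_f)

# For m = 1 the density reduces to the exponential density exp(-t/scale)/scale.
print(gamma_pdf(2.0, 1.0, 2.0), math.exp(-1.0) / 2.0)
```

The shape parameter need not be an integer, so a call such as `gamma_pdf(2.0, 2.5, 3.0)` is equally valid.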

Gamma random variables are used to model a number of physical quantities. Some examples are

1. The time it takes for something to occur, e.g. a lifetime or the service time in a queue.

2. The rate at which some physical quantity is accumulating during a certain period of time, e.g. the excess water flowing into a dam during a certain period of time due to rain or the amount of grain harvested during a certain season.

Sometimes it is convenient to use $\lambda = 1/\beta$ as a parameter instead of $\beta$. The pdf then has the form

$$f(t) = f(t; m, \lambda) = \frac{\lambda^m t^{m-1} e^{-\lambda t}}{\Gamma(m)} \tag{2}$$

for $t > 0$. We shall also write $X \sim \Gamma(m, 1/\lambda)$ in this case. It should be clear from the context whether $f(t; m, \beta)$ or $f(t; m, \lambda)$ stands for (1) or (2).
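As a quick illustration that (1) and (2) describe the same density, the sketch below evaluates both forms at a few points. The function names `pdf_scale` and `pdf_rate` and the sample values are hypothetical and chosen only for this example.

```python
import math

def pdf_scale(t, m, scale):
    """Form (1) for t > 0, written with the scale parameter."""
    return t ** (m - 1) * math.exp(-t / scale) / (scale ** m * math.gamma(m))

def pdf_rate(t, m, rate):
    """Form (2) for t > 0, written with rate = 1/scale."""
    return rate ** m * t ** (m - 1) * math.exp(-rate * t) / math.gamma(m)

# The two forms agree when rate = 1/scale (here scale = 4 and rate = 0.25).
for t in (0.5, 2.0, 7.0):
    print(t, pdf_scale(t, 2.5, 4.0), pdf_rate(t, 2.5, 0.25))
```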


Proposition 1. If $m > 0$ and $\beta > 0$ then

$$\int_0^\infty \frac{t^{m-1} e^{-t/\beta}}{\beta^m \, \Gamma(m)} \, dt = 1 \tag{3}$$

which confirms that $f(t)$ defined by (1) is a valid density function.

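Proof. With the substitution $u = t/\beta$,

$$\int_0^\infty \frac{t^{m-1} e^{-t/\beta}}{\beta^m \, \Gamma(m)} \, dt = \int_0^\infty \frac{u^{m-1} e^{-u}}{\Gamma(m)} \, du = 1 \quad \text{since} \quad \Gamma(m) = \int_0^\infty u^{m-1} e^{-u} \, du.$$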
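Formula (3) can be checked numerically. The sketch below is one way to do so, assuming a simple midpoint rule on a truncated interval; the helper names `midpoint_integral` and `total_mass`, the cutoff, and the step count are all choices made for this illustration rather than anything prescribed by the text. It is intended for shape values $m \ge 1$, where the integrand is bounded near 0.

```python
import math

def gamma_pdf(t, m, scale):
    """Density (1) for t > 0."""
    return t ** (m - 1) * math.exp(-t / scale) / (scale ** m * math.gamma(m))

def midpoint_integral(g, a, b, n=20000):
    """Composite midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (k + 0.5) * h) for k in range(n))

def total_mass(m, scale):
    """Approximate the integral in (3), truncated where the tail is negligible."""
    upper = scale * (m + 60.0)  # assumed cutoff; adequate for moderate m
    return midpoint_integral(lambda t: gamma_pdf(t, m, scale), 0.0, upper)

print(total_mass(2.5, 3.0))  # should be close to 1
```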

Proposition 2. If $X$ has a gamma distribution with parameters $m$ and $\beta$, then the mean of $X$ is

$$\mu_X = E(X) = \int_0^\infty t \, \frac{t^{m-1} e^{-t/\beta}}{\beta^m \, \Gamma(m)} \, dt = m\beta \tag{4}$$

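Proof.

$$\int_0^\infty t \, \frac{t^{m-1} e^{-t/\beta}}{\beta^m \, \Gamma(m)} \, dt = \int_0^\infty \frac{t^{m} e^{-t/\beta}}{\beta^m \, \Gamma(m)} \, dt = m\beta \int_0^\infty \frac{t^{m} e^{-t/\beta}}{\beta^{m+1} \, \Gamma(m+1)} \, dt = m\beta \int_0^\infty f(t; m+1, \beta) \, dt = m\beta$$

where we have used $\Gamma(m+1) = m\,\Gamma(m)$ and (3).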

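Proposition 3. If $X$ has a gamma distribution with parameters $m$ and $\beta$, then the expected value of $X^2$ is

$$E(X^2) = \int_0^\infty t^2 \, \frac{t^{m-1} e^{-t/\beta}}{\beta^m \, \Gamma(m)} \, dt = m(m+1)\beta^2 \tag{5}$$

The variance of $X$ is

$$(\sigma_X)^2 = E((X - \mu_X)^2) = m\beta^2 \tag{6}$$

The standard deviation of $X$ is

$$\sigma_X = \beta\sqrt{m} \tag{7}$$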

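Proof.

$$\int_0^\infty t^2 \, \frac{t^{m-1} e^{-t/\beta}}{\beta^m \, \Gamma(m)} \, dt = \int_0^\infty \frac{t^{m+1} e^{-t/\beta}}{\beta^m \, \Gamma(m)} \, dt = m(m+1)\beta^2 \int_0^\infty \frac{t^{m+1} e^{-t/\beta}}{\beta^{m+2} \, \Gamma(m+2)} \, dt = m(m+1)\beta^2 \int_0^\infty f(t; m+2, \beta) \, dt = m(m+1)\beta^2$$

where we have used $\Gamma(m+2) = m(m+1)\,\Gamma(m)$ and (3). This proves (5). Since $E((X - \mu_X)^2) = E(X^2) - \mu_X^2$, the formula (6) follows from (4) and (5). (7) follows from (6).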
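The moment formulas (4) through (7) can be compared against numerical integration. The following is a rough sketch under the same assumptions as a basic midpoint rule on a truncated interval; `raw_moment` is a hypothetical helper name, and the shape 2.5 and scale 3 are arbitrary sample values.

```python
import math

def gamma_pdf(t, m, scale):
    """Density (1) for t > 0."""
    return t ** (m - 1) * math.exp(-t / scale) / (scale ** m * math.gamma(m))

def raw_moment(k, m, scale, n=20000):
    """Midpoint-rule approximation of E(X^k) for X with density (1)."""
    upper = scale * (m + k + 60.0)  # assumed cutoff; the tail beyond is negligible
    h = upper / n
    total = 0.0
    for j in range(n):
        t = (j + 0.5) * h
        total += t ** k * gamma_pdf(t, m, scale)
    return h * total

m, scale = 2.5, 3.0
mean = raw_moment(1, m, scale)
second = raw_moment(2, m, scale)
variance = second - mean ** 2

print(mean, m * scale)                            # compare with (4)
print(second, m * (m + 1) * scale ** 2)           # compare with (5)
print(variance, m * scale ** 2)                   # compare with (6)
print(math.sqrt(variance), scale * math.sqrt(m))  # compare with (7)
```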

Proposition 4. If $f(t)$ is given by (1) then for $t > 0$ one has

$$f'(t) = \frac{[(m-1)\beta - t] \, t^{m-2} e^{-t/\beta}}{\beta^{m+1} \, \Gamma(m)} \tag{8}$$

(9) $f(t)$ has a single local maximum at $t = (m-1)\beta$ if $m > 1$.

(10) $f(t)$ is strictly decreasing for $t > 0$ if $m \le 1$.

Proof. (8) is a straightforward computation and (9) and (10) follow from (8).
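To make (8) and (9) concrete, the sketch below codes the derivative formula and compares it with a central finite difference of the density. The names `gamma_pdf_derivative` and `mode`, the step size, and the sample parameters are assumptions made for this illustration.

```python
import math

def gamma_pdf(t, m, scale):
    """Density (1); zero for t <= 0 in this sketch."""
    if t <= 0:
        return 0.0
    return t ** (m - 1) * math.exp(-t / scale) / (scale ** m * math.gamma(m))

def gamma_pdf_derivative(t, m, scale):
    """Formula (8), valid for t > 0."""
    numerator = ((m - 1) * scale - t) * t ** (m - 2) * math.exp(-t / scale)
    return numerator / (scale ** (m + 1) * math.gamma(m))

def mode(m, scale):
    """Location of the local maximum given by (9); requires m > 1."""
    if m <= 1:
        raise ValueError("the density has no interior maximum when m <= 1")
    return (m - 1) * scale

m, scale, t, step = 3.2, 1.5, 1.7, 1e-5
finite_difference = (gamma_pdf(t + step, m, scale) - gamma_pdf(t - step, m, scale)) / (2 * step)
print(finite_difference, gamma_pdf_derivative(t, m, scale))  # the two should agree closely
print(mode(m, scale))  # the derivative (8) vanishes at this point
```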

Proposition 5. Assume $X$ has a gamma distribution with parameters $m$ and $\beta$ and let $Y = cX$ for some positive number $c$. Then $Y$ has a gamma distribution with parameters $m$ and $c\beta$.

Proof. If $f(t)$ given by (1) is the density function of $X$ then the density function of $Y$ is

$$\frac{1}{c} f\!\left(\frac{t}{c}\right) = \begin{cases} \dfrac{t^{m-1} e^{-t/(c\beta)}}{(c\beta)^m \, \Gamma(m)} & \text{if } t > 0 \\[1ex] 0 & \text{if } t < 0 \end{cases}$$

which is equal to $f(t; m, c\beta)$.
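The scaling rule in the proof can be spot-checked numerically. In the sketch below, `scaled_density` is a hypothetical helper that applies the change-of-variables rule for $Y = cX$, and the result is compared with the gamma density whose scale parameter has been multiplied by $c$. The sample values are arbitrary.

```python
import math

def gamma_pdf(t, m, scale):
    """Density (1); zero for t <= 0 in this sketch."""
    if t <= 0:
        return 0.0
    return t ** (m - 1) * math.exp(-t / scale) / (scale ** m * math.gamma(m))

def scaled_density(t, c, m, scale):
    """Density of Y = cX by the change-of-variables rule (1/c) f(t/c)."""
    return gamma_pdf(t / c, m, scale) / c

c, m, scale = 2.5, 3.0, 1.2
for t in (0.5, 4.0, 10.0):
    # Left: density of cX. Right: gamma density with scale multiplied by c.
    print(t, scaled_density(t, c, m, scale), gamma_pdf(t, m, c * scale))
```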


Proposition 5. If $X$ and $Y$ are independent gamma random variables and $X$ has parameters $m$ and $\beta$ and $Y$ has parameters $q$ and $\beta$, then $X + Y$ is a gamma random variable with parameters $m + q$ and $\beta$.

Proof. We first show that

$$\int_0^1 u^{m-1} (1-u)^{q-1} \, du = B(m, q) \tag{11}$$

where

$$B(m, q) = \frac{\Gamma(m)\,\Gamma(q)}{\Gamma(m+q)} = 2 \int_0^{\pi/2} \cos^{2m-1}(t) \, \sin^{2q-1}(t) \, dt \tag{12}$$

is the beta function. To see this first note that

$$\Gamma(m)\,\Gamma(q) = \int_0^\infty \int_0^\infty t^{m-1} s^{q-1} e^{-(t+s)} \, ds \, dt.$$

Make the change of variables $r = t + s$ and $u = t/(t+s)$. Then $t = ru$ and $s = r(1-u)$ and $ds\,dt = r\,du\,dr$ and the first quadrant in the $st$-plane gets mapped onto the strip $\{(r,u): 0 < r < \infty,\ 0 < u < 1\}$. So

$$\Gamma(m)\,\Gamma(q) = \int_0^\infty \int_0^1 (ru)^{m-1} (r(1-u))^{q-1} e^{-r} \, r \, du \, dr = \Gamma(m+q) \int_0^1 u^{m-1} (1-u)^{q-1} \, du$$

and (11) follows. Next we show that

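$$\frac{t^{m-1}}{\Gamma(m)} * \frac{t^{q-1}}{\Gamma(q)} = \frac{t^{m+q-1}}{\Gamma(m+q)} \tag{13}$$

To see this note that $t^{m-1} * t^{q-1} = \int_0^t s^{m-1} (t-s)^{q-1} \, ds$. Make the change of variables $s = tu$. We get

$$t^{m-1} * t^{q-1} = \int_0^1 (tu)^{m-1} (t - tu)^{q-1} \, t \, du = t^{m+q-1} \int_0^1 u^{m-1} (1-u)^{q-1} \, du = t^{m+q-1} \, \frac{\Gamma(m)\,\Gamma(q)}{\Gamma(m+q)}$$

and (13) follows. It follows from (11) and (13) that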

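$$f(t; m, \beta) * f(t; q, \beta) = \frac{t^{m-1} e^{-t/\beta}}{\beta^m \, \Gamma(m)} * \frac{t^{q-1} e^{-t/\beta}}{\beta^q \, \Gamma(q)} = \frac{t^{m+q-1} e^{-t/\beta}}{\beta^{m+q} \, \Gamma(m+q)} = f(t; m+q, \beta)$$

and the proposition follows.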
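The convolution identity at the end of the proof can be checked numerically. The sketch below approximates the convolution integral of two gamma densities with a common scale parameter by a midpoint rule and compares it with the gamma density whose shape is the sum of the two shapes. The helper name `convolved_density`, the step count, and the sample shapes 2 and 3.5 are assumptions of this example; shapes of at least 1 are used so the integrand stays bounded at the endpoints.

```python
import math

def gamma_pdf(t, m, scale):
    """Density (1); zero for t <= 0 in this sketch."""
    if t <= 0:
        return 0.0
    return t ** (m - 1) * math.exp(-t / scale) / (scale ** m * math.gamma(m))

def convolved_density(t, m, q, scale, n=2000):
    """Midpoint-rule approximation of the convolution of the
    densities with shapes m and q over 0 < s < t."""
    if t <= 0:
        return 0.0
    h = t / n
    total = 0.0
    for k in range(n):
        s = (k + 0.5) * h
        total += gamma_pdf(s, m, scale) * gamma_pdf(t - s, q, scale)
    return h * total

m, q, scale = 2.0, 3.5, 1.5
for t in (1.0, 5.0, 12.0):
    # Left: numerical convolution. Right: gamma density with shape m + q.
    print(t, convolved_density(t, m, q, scale), gamma_pdf(t, m + q, scale))
```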


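Proposition 6. If $X$ has a gamma distribution with parameters $m$ and $\beta = 1/\lambda$, then the Laplace transform $L(s)$ and moment generating function $M(r)$ of $X$ are given by

$$L(s) = \frac{1}{(1 + \beta s)^m} = \frac{\lambda^m}{(\lambda + s)^m} \tag{14}$$

$$M(r) = \frac{1}{(1 - \beta r)^m} = \frac{\lambda^m}{(\lambda - r)^m} \tag{15}$$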

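Proof. One has

$$L(s) = [\Gamma(m)]^{-1} \int_0^\infty e^{-st} \, \beta^{-m} t^{m-1} e^{-t/\beta} \, dt = [\Gamma(m)]^{-1} \int_0^\infty \beta^{-m} t^{m-1} e^{-(s + 1/\beta)t} \, dt$$

If one makes the change of variables $u = (s + 1/\beta)t$ one obtains

$$L(s) = [\Gamma(m)]^{-1} \int_0^\infty \beta^{-m} \left(\frac{u}{s + 1/\beta}\right)^{m-1} e^{-u} \, \frac{1}{s + 1/\beta} \, du = \left(\frac{1}{1 + \beta s}\right)^{m} [\Gamma(m)]^{-1} \int_0^\infty u^{m-1} e^{-u} \, du = \frac{1}{(1 + \beta s)^m}$$

This proves (14). (15) follows from (14) and the fact that $M(r) = L(-r)$.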
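Formulas (14) and (15) can be compared with a direct numerical evaluation of the transform integral. The sketch below is one possible check, assuming a midpoint rule on a truncated interval; the names `laplace_numeric`, `laplace_closed`, and `mgf_closed` are illustrative, and the integral is only attempted where it converges, that is, where $s$ plus the reciprocal of the scale parameter is positive.

```python
import math

def gamma_pdf(t, m, scale):
    """Density (1) for t > 0."""
    return t ** (m - 1) * math.exp(-t / scale) / (scale ** m * math.gamma(m))

def laplace_numeric(s, m, scale, n=20000):
    """Midpoint-rule approximation of the integral of exp(-s t) f(t) over t > 0."""
    decay = s + 1.0 / scale
    if decay <= 0:
        raise ValueError("the integral converges only for s > -1/scale")
    upper = (m + 60.0) / decay  # assumed cutoff; the tail beyond is negligible
    h = upper / n
    total = 0.0
    for k in range(n):
        t = (k + 0.5) * h
        total += math.exp(-s * t) * gamma_pdf(t, m, scale)
    return h * total

def laplace_closed(s, m, scale):
    """Closed form (14)."""
    return (1.0 + scale * s) ** (-m)

def mgf_closed(r, m, scale):
    """Closed form (15), obtained from M(r) = L(-r); requires r < 1/scale."""
    return laplace_closed(-r, m, scale)

print(laplace_numeric(0.7, 2.5, 3.0), laplace_closed(0.7, 2.5, 3.0))
print(laplace_numeric(-0.1, 2.5, 3.0), mgf_closed(0.1, 2.5, 3.0))
```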

Let

$$G(t) = G(t; m, \beta) = \int_0^t f(s; m, \beta) \, ds = \int_0^t \frac{s^{m-1} e^{-s/\beta}}{\beta^m \, \Gamma(m)} \, ds \tag{16}$$

be the cumulative distribution function of the gamma random variable $X \sim \Gamma(m, \beta)$ and let

$$H(t) = H(t; m, \beta) = 1 - G(t) = \int_t^\infty \frac{s^{m-1} e^{-s/\beta}}{\beta^m \, \Gamma(m)} \, ds \tag{17}$$

be the complementary distribution function (or survival function). Let

$$\Gamma_m(t) = \int_t^\infty s^{m-1} e^{-s} \, ds \tag{18}$$

be the upper incomplete gamma function and

$$\gamma_m(t) = \int_0^t s^{m-1} e^{-s} \, ds \tag{19}$$

be the lower incomplete gamma function.


Proposition 7.

$$G(t; m, \beta) = \frac{\gamma_m(t/\beta)}{\Gamma(m)} \tag{20}$$

$$H(t; m, \beta) = \frac{\Gamma_m(t/\beta)}{\Gamma(m)} \tag{21}$$

Proof. (20) follows by making the change of variables $u = s/\beta$ in (16) and (21) follows by making the change of variables $u = s/\beta$ in (17).
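Formulas (20) and (21) reduce the distribution function to incomplete gamma functions, which the Python standard library does not provide. The sketch below is one possible way to evaluate them, assuming the standard power series for the lower incomplete gamma function (19), namely $x^m e^{-x} \sum_{k \ge 0} x^k / (m(m+1)\cdots(m+k))$. The function names, tolerance, and term limit are choices made for this example. The series is adequate for moderate values of the argument; far in the upper tail, computing the survival function as one minus the distribution function loses precision.

```python
import math

def lower_incomplete_gamma(m, x, tol=1e-15, max_terms=10000):
    """Series evaluation of (19): x^m e^{-x} * sum_k x^k / (m (m+1) ... (m+k))."""
    if x <= 0:
        return 0.0
    term = 1.0 / m
    total = term
    for k in range(1, max_terms):
        term *= x / (m + k)
        total += term
        if term < tol * total:
            break
    return x ** m * math.exp(-x) * total

def gamma_cdf(t, m, scale):
    """Distribution function via (20)."""
    return lower_incomplete_gamma(m, t / scale) / math.gamma(m)

def gamma_survival(t, m, scale):
    """Survival function via (21), using the fact that (18) and (19) sum to the gamma function."""
    return 1.0 - gamma_cdf(t, m, scale)

# For m = 1 the distribution is exponential, so G(t) = 1 - exp(-t/scale).
print(gamma_cdf(3.0, 1.0, 2.0), 1.0 - math.exp(-1.5))
# For m = 2 the survival function is exp(-x) (1 + x) with x = t/scale.
print(gamma_survival(3.0, 2.0, 2.0), math.exp(-1.5) * 2.5)
```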
