Lecture Difference of Means Hypothesis Tests

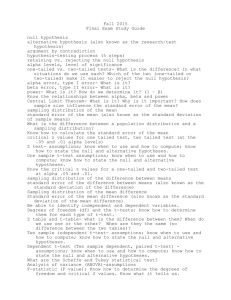

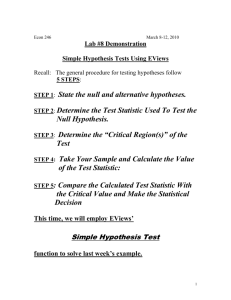

Difference of Means/Proportions Hypothesis Tests

Difference of Means/Proportions Hypothesis Test – an inferential statistical approach used with a two-category (binary) nominal independent variable and an interval/ratio dependent variable.

A common bivariate inferential statistical approach is the group of comparison of means hypothesis tests. Students often have difficulty understanding the application of difference of means hypothesis tests because of:
a) the conflation of difference of means tests and hypothesis testing; difference of means tests are a form of hypothesis testing, but not the only one,
b) the seemingly wide variety of confusing choices to be made to arrive at the correct test, and
c) the complexity of the equations for the denominator (variance) and degrees of freedom for some forms of the test statistic.

However, in practice it is not so difficult to understand how difference of means tests fit with other forms of hypothesis testing, the choices to be made are more limited than in the hypothetical world of statistics classes and textbooks, and the equations need only be understood conceptually; they need not be memorized or even understood in fine detail.

z-test –
a) an inferential test statistic based on the standard normal distribution, which is only valid in cases of known population variance/standard deviation and sample size greater than 50.
b) the test statistic that is generally used in teaching statistics but almost never in practice.

t-test –
a) an inferential test statistic based on the t-distribution, which is more robust than the z-test because it does not require a known population variance/standard deviation or a sample size greater than 50.
b) like the t-distribution that it is derived from, as the sample size increases (that is, as the number of degrees of freedom increases), the t-test more and more closely approximates the z-test.
c) the test statistic that is almost always used in practice in hypothesis testing for the difference of means and other types of hypothesis testing.
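The convergence of the t-test toward the z-test can be checked numerically. The sketch below is only an illustration, not part of the lecture: it uses the Python standard library, and the helper names `t_pdf` and `two_tailed_area`, the use of Simpson's rule, and the chosen degrees of freedom are all assumptions made for the example. It computes the two-tailed area beyond ±1.96 under the t-distribution for increasing degrees of freedom and compares it with the same area under the standard normal distribution.

```python
import math
from statistics import NormalDist


def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_tailed_area(t_value, df, steps=2000):
    """P(|T| > |t_value|), integrating the density with Simpson's rule.

    steps must be even; 2000 is an arbitrary choice for this sketch.
    """
    upper = abs(t_value)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = 2 * (h / 3) * total  # area between -|t| and +|t| by symmetry
    return 1 - central


# Two-tailed area beyond 1.96 under the standard normal (about 0.05).
z_area = 2 * (1 - NormalDist().cdf(1.96))

for df in (5, 10, 30, 50, 100, 1000):
    print(f"df={df:5d}  t-area beyond 1.96 = {two_tailed_area(1.96, df):.4f}")
print(f"normal    z-area beyond 1.96 = {z_area:.4f}")
```

With few degrees of freedom the area in the tails is noticeably larger than 5%, which is why the t critical value exceeds 1.96 in small samples; as the degrees of freedom grow, the area shrinks toward the normal value.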
One-tailed Test (One-sided, One-Alternative, Directional) –
a) an inferential test in which the hypotheses are directional, that is, the alternative hypothesis must state either that the mean being tested is greater than the null mean or less than the null mean, and the test statistic value must fall in the tail of the specified direction in order to reject the null hypothesis.
b) in the case of the “greater than” alternative hypothesis, the null hypothesis will be rejected when the value of the test statistic is sufficiently positive, and in the case of the “less than” alternative hypothesis, the null hypothesis will be rejected when the value of the test statistic is sufficiently negative.
c) the test is one-tailed because a result in only one tail of the normal distribution or bell curve will result in rejecting the null hypothesis.
d) for the common 95% confidence level one-tailed test, the critical value of the t-test (in large samples) is 1.645, that is, 5% of the observations fall in the relevant tail of the distribution.

Two-tailed Test (Two-sided, Two-Alternative, Non-directional) –
a) an inferential test in which the hypotheses are not directional but merely based on difference, that is, the null hypothesis states that the means are equal and the alternative hypothesis states that they are not equal.
b) the null hypothesis will be rejected when the value of the test statistic is either sufficiently negative or sufficiently positive.
c) the test is two-tailed because a result in either tail of the normal distribution or bell curve will result in rejecting the null hypothesis.
d) for the common 95% confidence level two-tailed test, the critical value of the t-test (in large samples) is 1.96, that is, 2.5% of the observations fall in each tail of the distribution.
e) in practice, two-tailed tests are more common than one-tailed tests.

One-sample t-test of a Sample Mean/Proportion –
a) an inferential test of the null hypothesis that the population mean/proportion is equal to a specified value μ0, based on a single sample mean/proportion.
b) the difference of the sample mean/proportion from a hypothesized null mean/proportion in standard error units.

$$t = \frac{\bar{X} - \mu_0}{se} \qquad t = \frac{p - \pi_0}{se} \qquad df = n - 1$$

The equation for the one-sample difference of means should look familiar, because it is very similar to the z-score or t-score formula, which is the difference of an individual value from the sample mean in standard deviation units.

$$t = \frac{X - \bar{X}}{sd}$$

Calculating the 95% One-Sample Two-Tailed Difference of Proportions Test for the Rasmussen Poll

1. n = 1,500; p = 0.47
2. H0: π = 0.50; HA: π ≠ 0.50
3. $se = \sqrt{\dfrac{p(q)}{n}} = \sqrt{\dfrac{0.47(0.53)}{1{,}500}} = \sqrt{0.000166} = 0.01289$
4. α = .05
5. $Z_{\alpha/2} = Z_{.05/2} = Z_{.025} = 1.96$
6. $t = \dfrac{p - \pi_0}{se} = \dfrac{0.47 - 0.50}{0.01289} = \dfrac{-0.03}{0.01289} = -2.3274$
7. (|Z| = 2.3274) > (1.96 = Z.025): Reject H0: π = 0.50

So, based on the Rasmussen Poll and our own calculations, we can conclude with 95% confidence that the percentage of likely voters approving of President Obama’s performance differs from 50%; because the sample proportion is below 0.50, it is less than 50%.

Paired Two-sample t-test of a Sample Mean/Proportion (t-test of a Mean of a Difference) –
a) an inferential test of the null hypothesis that the population means/proportions of paired observations are equal.
b) generally these pairings must be “natural”, such as spouses or two test scores from the same student (repeated measures), but observations that have similar characteristics on variables other than the variables of interest can also be analyzed as “matched” pairs.
c) computationally it is simplified by calculating the difference between each pair of observations, calculating the mean and standard deviation of the differences, and then conducting a One-sample t-test (see above) in which the null hypothesis is that the difference is equal to zero.

$$t = \frac{\bar{X}_D - \mu_0}{se} \qquad t = \frac{p_D - \pi_0}{se} \qquad df = n - 1$$

Here the subscript D marks the mean/proportion of the pairwise differences, se is the standard error of those differences, and n is the number of pairs.

Independent (Unpaired) Two-sample t-test of a Sample Mean/Proportion –
a) an inferential test of the null hypothesis that the population means/proportions of two groups or samples are equal.
b) although the term is “independent samples”, that does not mean that the samples must be drawn separately; men and women in a single sample could be considered two independent samples that could be compared using this test.

$$t = \frac{\bar{X}_a - \bar{X}_b}{se} \qquad t = \frac{p_a - p_b}{se}$$

Equal Population Variance – if the population variances/standard deviations of the two groups are “assumed” to be equal, then the pooled or common variance can be used according to the following wickedly intimidating but mathematically not that complicated equation:

$$se = \sqrt{\frac{(n_a - 1)s_a^2 + (n_b - 1)s_b^2}{(n_a - 1) + (n_b - 1)}}\;\sqrt{\frac{n_a + n_b}{n_a n_b}} \qquad df = (n_a - 1) + (n_b - 1)$$

where the two samples are symbolized by the subscripts a and b and the variance is symbolized as $s^2$ (the convention is to symbolize the two samples as 1 and 2, but those subscripts are too easily confused with the squared term of the variance symbol).

Unequal Population Variance – if the population variances/standard deviations of the two groups are not “assumed” to be equal, then the combined variance must be used according to the following simpler and still mathematically not that complicated equation. It generally results in a larger standard error than the equation used under the equal variance assumption, and it comes with a much more complicated equation for the degrees of freedom (the Welch-Satterthwaite equation):

$$se = \sqrt{\frac{s_a^2}{n_a} + \frac{s_b^2}{n_b}} \qquad df = \frac{\left(\dfrac{s_a^2}{n_a} + \dfrac{s_b^2}{n_b}\right)^2}{\dfrac{(s_a^2/n_a)^2}{n_a - 1} + \dfrac{(s_b^2/n_b)^2}{n_b - 1}}$$

Test of the Assumption of Equal Population Variance –
a) the simplest method for deciding whether equal variance of the two populations can be assumed is an F-test, in which the variances of the two samples/groups are calculated and then the ratio of the two variances is taken by dividing the larger variance by the smaller variance.

$$F = \frac{s^2_{larger}}{s^2_{smaller}} \qquad df = (n_{larger} - 1,\; n_{smaller} - 1)$$

If the two sample variances are equal, then the F statistic will be equal to 1; the more different the sample variances, the larger the F statistic.
b) the F-test is not robust and is not generally used, but it is a simple illustration of the concept of the test of equal population variances.
c) when statistical packages estimate difference of means tests, they generally produce test statistics for both the equal variance and the unequal variance assumptions, along with at least one robust test statistic (like Levene’s) for determining the validity of the assumption, with the null hypothesis being equal variances.

Confidence Intervals and Difference of Means Tests – are two different approaches to inferential statistics, the former an example of parameter estimation and the latter an example of hypothesis testing, yet they are also closely related. If we calculated a mean for a group and then conducted a single-sample difference of means test comparing that mean to some hypothesized null population mean, and the test were statistically significant, then we would expect the hypothesized null population mean to fall outside the 95% confidence interval of the sample mean. Similarly, if we calculated the 95% confidence intervals around the means for two groups and found that the two confidence intervals did not overlap, we could also expect that a 95% confidence level, independent-sample, two-tailed difference of means test for the two groups would be statistically significant. The converse does not hold: overlapping intervals do not rule out a statistically significant difference.

The two independent sample difference of means test is the exact equivalent of a One-Way Analysis of Variance (ANOVA) in which the independent variable is binary, that is, it has only two possible values (when equal variances are assumed, the ANOVA F statistic equals the square of the t statistic). ANOVA is more flexible than the difference of means test because it can accommodate an independent variable with more than two categories.
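The equivalence between the independent two-sample test and a one-way ANOVA with a binary independent variable can be illustrated with a short calculation. The sketch below is a minimal example under stated assumptions: the two data lists are made-up numbers, the function names `pooled_t` and `one_way_f` are hypothetical, and the t statistic uses the equal-variance (pooled) standard error. Under those assumptions the ANOVA F statistic should equal the square of the t statistic.

```python
import math
from statistics import mean, variance


def pooled_t(a, b):
    """Equal-variance two-sample t statistic and its degrees of freedom."""
    n_a, n_b = len(a), len(b)
    s2_a, s2_b = variance(a), variance(b)  # sample variances (n - 1 denominator)
    df = (n_a - 1) + (n_b - 1)
    pooled_var = ((n_a - 1) * s2_a + (n_b - 1) * s2_b) / df
    se = math.sqrt(pooled_var) * math.sqrt((n_a + n_b) / (n_a * n_b))
    return (mean(a) - mean(b)) / se, df


def one_way_f(groups):
    """One-way ANOVA F statistic for a list of groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical data for two groups (invented for this illustration).
group_a = [12.1, 14.3, 11.8, 15.2, 13.7, 12.9]
group_b = [10.4, 11.9, 9.8, 12.5, 10.9]

t_stat, df = pooled_t(group_a, group_b)
f_stat = one_way_f([group_a, group_b])

print(f"t = {t_stat:.4f} with df = {df}")
print(f"t squared = {t_stat ** 2:.4f}")
print(f"ANOVA F   = {f_stat:.4f}")
```

The within-group mean square in the ANOVA is the same quantity as the pooled variance in the t-test, which is why the two statistics agree; with the unequal-variance standard error the match would no longer be exact.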