Hypothesis Testing

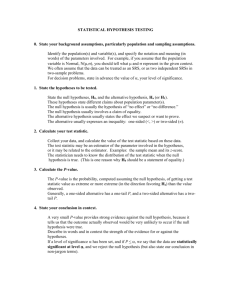

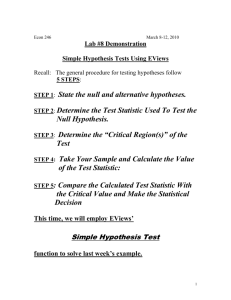

Psy 1191 Intro to Research Methods Dr. Launius
Psy 1191 Statistical Methods Workshop: Hypothesis Testing

Introduction:
Hypothesis testing is one of the most important concepts in statistics. This is how we decide whether:
- Effects actually occurred.
- Treatments have effects.
- Groups differ from each other.
- One variable predicts another.

There are complicated definitions and descriptions of the process. They may confuse you, and the logic will seem backward. Written in plain English, however, the idea behind hypothesis testing is simple, so we will write it out before introducing complex terms.

You have an idea that something happened. Your guess, or hypothesis, about what happened might be:
- Groups are different from each other.
- Some treatment has an effect on an outcome measure.
- One variable predicts another variable.

There are two possibilities:
1. Nothing happened. We call this the Null Hypothesis (H0).
2. Something happened. We call this the Alternative Hypothesis (H1).

That's it! When you test a statistical hypothesis, you are trying to see whether something happened, compared against the possibility that nothing happened. Let's elaborate on these simple ideas.

The Null Hypothesis (H0):
Null = Nothing = Zero = Nada = Bupkus = Zilch
- You didn't find an effect.
- You didn't find the groups to be different.
- Your treatment doesn't seem to help anybody.
- Your variables are not related.

Statistical Things to Know:
You test your sample statistic against the value based on the null hypothesis sampling distribution. If your sample statistic and the null hypothesis value are close, you conclude that they are not different: you did not find an effect in your study.

How do we test hypotheses?
This is the counterintuitive part, the logic that seems backward. We try to disprove the Null Hypothesis; that is, we try to disprove that nothing happened. If we disprove that nothing happened, then we conclude that SOMETHING HAPPENED. Isn't that counterintuitive? To show that we have an effect, that our treatment works, or that our groups differ, we try to disprove that our treatment didn't work or that our groups don't differ. You have to learn this logic!

The Simple Steps of Hypothesis Testing:
1. You come up with your hypothesis (for example, college students sleep less than other folks).
2. You generate a sample (pick a set of college students).
3. You calculate your summary statistics (for example, the mean and standard deviation of the number of hours that college students sleep per night).
4. You determine the statistical test that will compare your summary statistic against the value determined by your null hypothesis. (You would use the single sample t-test for college students' sleep.)
5. You calculate the test statistic using your summary statistics. The formula for the test statistic is different for each type of test, but the basic concept is the same: you calculate how far your sample is from the null hypothesis, taking into account that sample values of a statistic vary by chance when smaller samples are taken from a larger population. The standard error tells us how much they vary.
6. You derive the appropriate sampling distribution, or refer to one already listed in the tables in your statistics book. Your computer program can also give you this information.
7. You choose the cutoff value on your sampling distribution that tells you that your sample statistic is very far from the null hypothesis and thus not likely if the null hypothesis is true. The proportion of the sampling distribution beyond this cutoff is called our alpha level or significance level (more about alpha later).
8. You decide whether to reject the null hypothesis or fail to reject it. You do this by comparing your test statistic to the cutoff value.
9. You draw your conclusion. If you reject the null hypothesis, you say that your result is statistically significant. This means that a result this far from the null hypothesis would be unlikely to happen by luck or chance alone. If you fail to reject the null, you conclude that you did not find an effect or difference in this study.

From Wadsworth Publishing: http://www.wadsworth.com/psychology_d/templates/student_resources/workshops/res_methd/

Those are the basic steps. Now, let's attach some numbers to them and see the whole process of testing a hypothesis.
1) H1: College students sleep less than most folks. H0: College students sleep the same as or more than most folks.
2) You select a random sample of 20 college students from your campus and ask them how many hours they sleep a night.
3) Summary statistics for hours of sleep per night: Mean = 6.5, SD = 2.5.
4) You are testing a single sample. This requires a single sample t-test.
5) You calculate the test statistic. Let's say that most folks sleep 8 hours a night. Our test statistic is:
$$t = \frac{M - \mu}{SD/\sqrt{n-1}} = \frac{6.5 - 8}{2.5/\sqrt{19}} = \frac{-1.5}{0.574}$$
where M is the sample mean, μ = 8 is the value under the null hypothesis, n = 20, and SD is computed with n in the denominator, so SD/√(n - 1) is the estimated standard error of the mean. This gives us a sample t-value of -2.613.
6) Derive the appropriate sampling distribution for the null hypothesis: the t-distribution with df = n - 1 = 19.
7) Choose the cutoff value on the sampling distribution that tells us our sample statistic is very far from the null hypothesis. If college students sleep the same amount as everyone else, we would expect a test statistic of t = 0. Let's say we want a cutoff where only 5% of the samples would have a test statistic that is lower (alpha = .05, one-tailed). Our cutoff value for this null hypothesis sampling distribution is -1.729.
8) Compare your test statistic (-2.613) to the cutoff value on the null sampling distribution. See drawing of sampling distribution.
9) You draw your own conclusion. What is your conclusion? Do you reject or fail to reject the null hypothesis?

Error:
You can make an error or two when you test hypotheses.
You might say things are different when they are not, or you may miss a relationship that really exists. These are called Type I and Type II errors, respectively.

Power:
How about finding real differences or relationships? You want that power! Power is an easy idea in the statistical world: you want to correctly reject a false Null Hypothesis. Power is the probability of correctly rejecting a false Null Hypothesis. It means the chance of finding what you are looking for, when it is really there!

Going to the Doctor as a Metaphor for Significance Testing, Errors and Power:
In statistics, you are usually looking for some result such as:
- A difference between means (as with a t-test or ANOVA).
- A difference between proportions (as with a chi-square).
- A relationship (as with a correlation or multiple regression).

Think of it this way. You go to the doctor because you want to know if you are sick. This is like looking for a statistical difference. However, there are several possible results. You might be sick or you might not be sick. Independent of this, the doctor may say you are sick or may tell you that you are not sick. This leads to four outcomes: two are correct and two are wrong. The one we are interested in is when you are really sick and the doctor says you are sick.

The previous situation is like a statistical test. You look for a difference, just as you go to the doctor to see if you are sick. Really being sick corresponds to the case where H0 (the Null Hypothesis) is false. The statistical test is like the doctor. It might say there is a difference when there is one (good, what we want). The probability of this outcome is statistical power.

Power and Statistical Errors:
Many experts recommend that you aim for a power of .80.
This means that you have an 80% chance of finding a difference when one really exists.
- You don't want to miss a real difference or correlation. (Bad: missing a difference is called a Type II error, with probability equal to Beta.) Power is equal to 1 - Beta.
- The test might say there is a difference when there is not one. (Bad: this is called a Type I error, whose probability equals your alpha level: .05 or .01.)

Depending on conditions, you may have a good or bad chance of finding the desired result. To increase power you can:
- Try to increase the effect size or the strength of the relationship.
- Decrease experimental error.
- Use a higher alpha level (say .05 as compared to .01). Note that this increases power but also increases the Type I error rate.
- Increase sample size.
- Use matched samples or covariance techniques.

Bottom Line:
You get the data. You calculate the summary statistics. You calculate the test statistic. You look it up. If the probability (p) of getting a test statistic this extreme when the null hypothesis is true is less than .05 or .01, you have significance. You reject the Null Hypothesis and conclude that something happened.

REMEMBER THIS:
Big test statistic value (absolute value) = Small p
Small p = Significance
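As a rough illustration of the Bottom Line, and of what alpha and power mean, here is a minimal Python sketch that uses only the standard library. It is not the workshop's own procedure. The helper names are hypothetical. The t-distribution probabilities come from numerically integrating the t density with Simpson's rule, which stands in for the table lookup. The t statistic is computed the same way as in the sleep example. The simulation part assumes, purely for illustration, that sleep hours are normally distributed with a population SD of 2.5, and that the true student mean is 6.5 hours when H0 is false.

```python
import math
import random

# All helper names below are hypothetical; only the math and random modules are used.

def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def t_cdf(x, df, steps=2000):
    """P(T <= x): 0.5 plus the signed integral of the density from 0 to x (Simpson's rule)."""
    h = x / steps  # negative when x < 0, which makes the integral negative as needed
    total = t_pdf(0.0, df) + t_pdf(x, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 + total * h / 3


def lower_cutoff(alpha, df):
    """Critical t for a lower-tailed test: alpha of the null distribution lies below it."""
    lo, hi = -50.0, 0.0
    for _ in range(50):  # bisection on the CDF
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def t_statistic(mean, sd, n, mu0):
    """Single-sample t, assuming sd was computed with n in the denominator,
    so sd / sqrt(n - 1) is the estimated standard error (as in the worked example)."""
    return (mean - mu0) / (sd / math.sqrt(n - 1))


def rejection_rate(true_mean, true_sd, n, mu0, cutoff, reps=10000, seed=1):
    """Share of simulated normal samples for which the lower-tailed test rejects H0."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(reps):
        xs = [rng.gauss(true_mean, true_sd) for _ in range(n)]
        m = sum(xs) / n
        sd_n = math.sqrt(sum((x - m) ** 2 for x in xs) / n)  # n in the denominator
        if t_statistic(m, sd_n, n, mu0) < cutoff:
            rejections += 1
    return rejections / reps


# The sleep example: n = 20, M = 6.5, SD = 2.5, null value 8 hours, alpha = .05 (one-tailed).
ALPHA = 0.05
n, mean, sd, mu0 = 20, 6.5, 2.5, 8.0
df = n - 1

t = t_statistic(mean, sd, n, mu0)
cutoff = lower_cutoff(ALPHA, df)
p = t_cdf(t, df)      # one-tailed p: chance of a t this low if H0 is true
reject = t < cutoff   # same decision as p < ALPHA
print(f"t = {t:.3f}, cutoff = {cutoff:.3f}, p = {p:.4f}, reject H0: {reject}")

# Hypothetical simulation: normal sleep hours with population SD 2.5.
type1 = rejection_rate(mu0, sd, n, mu0, cutoff)   # H0 true: should land near ALPHA
power = rejection_rate(mean, sd, n, mu0, cutoff)  # H0 false (true mean assumed 6.5)
print(f"simulated Type I error rate = {type1:.3f}, simulated power = {power:.3f}")
```

The printed t comes out as -2.615 rather than -2.613 only because the worked example rounds the standard error to .574. The cutoff should be close to the table value of -1.729. When the data are simulated with H0 true, the rejection rate should sit close to alpha (.05), which is the Type I error rate. When they are simulated under the assumed true mean of 6.5, the rejection rate estimates power for this hypothetical scenario.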