Rothman KJ, Greenland S, Lash TL. Chapter 10: Precision and

advertisement

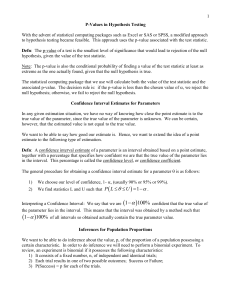

Rothman KJ, Greenland S, Lash TL. Chapter 10: Precision and statistics in epidemiologic studies. Modern Epidemiology: 2008.

- Sampling error = random error due to the process of selecting specific study subjects. All epidemiologic studies are viewed as a figurative sample of the possible people who could have been included in the study.
- A measure of random variation is the variance of the process, i.e., the mean squared deviation from the mean (its square root is the standard deviation).
- The statistical precision of a measurement or process is often taken to be the inverse of the variance. Precision is the opposite of random error.
- A study has improved statistical efficiency if it can estimate the same quantity with higher precision.

Significance and hypothesis testing
- Null hypothesis: formulated as a hypothesis of no association between two variables in a superpopulation; it is the usual test hypothesis.
- If p ≥ alpha, that does not mean there is no difference between the two study groups. Describing the study groups themselves does not require statistical inference.
- If p ≥ alpha, that does not mean there is no difference between the groups of the superpopulation. It means only that one cannot reject the null hypothesis that the superpopulation groups do not differ.
- Conversely, p < 0.05 does not necessarily mean there is a true difference in the superpopulation. The statistical model may be wrong, bias may exist, or a type I error may have occurred.
- Upper one-tailed P-value: the probability that the test statistic will be greater than or equal to its observed value, assuming that the test hypothesis is correct and the statistical model is valid.
- Lower one-tailed P-value: the probability that the test statistic will be less than or equal to its observed value, under the same assumptions.
- Two-tailed P-value: twice the smaller of the upper and lower one-tailed P-values. The two-tailed P-value is not a true probability, since it can exceed 1.
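The tail definitions above can be checked numerically. The following is a minimal Python sketch, not the chapter's own procedure. It assumes the test statistic is a binomial count Y of positives among N trials. The data (5 positives in 10 trials, test hypothesis π = 0.5) and the function names are hypothetical. The example shows that with discrete data, doubling the smaller tail can produce a two-tailed "P-value" above 1.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """P(Y = k) for Y ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def one_tailed_p_values(y: int, n: int, p0: float) -> tuple[float, float]:
    """Upper P(Y >= y) and lower P(Y <= y) under the test hypothesis pi = p0."""
    upper = sum(binom_pmf(k, n, p0) for k in range(y, n + 1))
    lower = sum(binom_pmf(k, n, p0) for k in range(0, y + 1))
    return upper, lower


def two_tailed_p_value(y: int, n: int, p0: float) -> float:
    """Twice the smaller one-tailed P-value (deliberately not capped at 1)."""
    upper, lower = one_tailed_p_values(y, n, p0)
    return 2 * min(upper, lower)


# Hypothetical data: 5 positives in 10 trials, test hypothesis pi = 0.5.
upper, lower = one_tailed_p_values(5, 10, 0.5)
print(upper, lower)                     # 0.623046875 0.623046875
print(two_tailed_p_value(5, 10, 0.5))   # 1.24609375
```

Both tails include the observed value Y = 5, so together they overlap and their sum exceeds 1. Statistical packages may define two-tailed exact P-values differently, so their output need not match this doubling rule.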
Misinterpretations of P-values
- Small P-values indicate that one or more of the test assumptions are wrong. The null hypothesis is typically the assumption taken to be invalid.
- P-values do not represent probabilities of test hypotheses. The probability of the test hypothesis given the data is $P(H_0 \mid \text{data}) = P(\text{data} \mid H_0)\,P(H_0)/P(\text{data})$. It requires a prior probability $P(H_0)$ and is generally not equal to the P-value.
- The P-value is not the probability of the observed data under the test hypothesis. That quantity is the likelihood of the test hypothesis, and it can be calculated by modeling the probability distribution giving rise to the data. It is usually much smaller than the P-value, because the P-value also includes the probabilities of more extreme values of the test statistic.
- The P-value is not the probability that the data would show as strong an association as observed. The P-value refers to values of the test statistic. The statistic may be quite large for a weak association if the variance of the estimate is small.

Choice of alpha
- Type I error = falsely rejecting the null hypothesis when it is true; alpha is the probability of a type I error. Type II error = failing to reject the null hypothesis when it is false; beta is the probability of a type II error, and power = 1 - beta. The four possible outcomes form a 2x2 table of decision (reject / do not reject H0) versus truth (H0 true / H0 false).
- Alpha is a cut-off intended to represent the maximum acceptable probability of a type I error. It is a fixed cut-off used to force a qualitative decision about the rejection of a hypothesis. Its origins are somewhat arbitrary: the chi-squared tables from which critical values could be read included values at the 5% level of significance.
- The P-value is not the probability of making a type I error. The P-value is $P(|T| \ge t \mid H_0)$, where $t$ is the observed value of the statistic. The probability of making a type I error is $P(|T| \ge t^* \mid H_0)$, where $t^*$ is the fixed critical value. For a standard normal statistic at alpha = 0.05, $t^* = 1.96$ and P(type I error) = 0.05, by definition.
- The probability of a type I error must account for the way in which the hypothesis came to be rejected, especially with respect to previous comparisons. Suppose P(type I error) = alpha = 0.05 for each of k independent comparisons of true null hypotheses. The chance of making no type I error at all is then $(1-\alpha)^k$, and the chance of making one or more type I errors is its complement, $1-(1-\alpha)^k$. By the time 4 comparisons have been performed, there is almost a 20% chance ($1 - 0.95^4 \approx 0.185$) that one or more comparisons will falsely reject the null hypothesis.

Appropriateness of statistical testing
- Type I and type II errors arise when investigators dichotomize study results into the categories “significant” and “non-significant”. This is unnecessary and degrades study information.
- Statistical significance testing has roots in industrial and agricultural decision-making. For public health, however, making a qualitative decision on the basis of a single study is inappropriate. Meta-analyses often show that “non-significant” findings may represent a real effect. Epidemiologic knowledge is an accretion of previous findings.
- Using statistical significance as the primary basis for inference is misleading. The confidence interval of an imprecise study shows readily that the data are compatible with a wide range of hypotheses, only one of which is the null hypothesis.
- A small P-value may be obtained with a small effect, while a large P-value may be obtained with a large effect. Yet in standard significance testing, the latter is often offered as evidence against a large effect.
- Also, different studies may appear to confirm or refute an association on the basis of statistical hypothesis testing, when the data in both instances are maximally compatible with the same association.
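Two points above can be checked in a few lines: the multiple-comparisons arithmetic under "Choice of alpha", and the way a P-value mixes effect size with precision. This Python sketch makes two assumptions. The first part assumes k independent tests of true null hypotheses. In the second part, the two "studies" are hypothetical ratio estimates with assumed standard errors, analyzed with a Wald test on the log scale. Function names are illustrative only.

```python
from math import log
from statistics import NormalDist


def prob_any_type_i_error(alpha: float, k: int) -> float:
    """P(one or more type I errors) over k independent tests of true nulls."""
    return 1 - (1 - alpha) ** k


for k in (1, 2, 4, 10):
    print(k, round(prob_any_type_i_error(0.05, k), 4))
# 1 0.05
# 2 0.0975
# 4 0.1855
# 10 0.4013


def wald_p(ln_ratio: float, se: float) -> float:
    """Two-tailed Wald P-value for the null hypothesis ln(ratio) = 0."""
    z = ln_ratio / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical: a large, precise study of a small effect and a
# small, imprecise study of a large effect.
p_small_effect = wald_p(log(1.1), 0.03)   # RR = 1.1, SE(ln RR) = 0.03
p_large_effect = wald_p(log(3.0), 0.70)   # RR = 3.0, SE(ln RR) = 0.70
print(p_small_effect < 0.05, p_large_effect > 0.05)  # True True
```

The second study has the larger P-value even though its estimate is farther from the null. Only its confidence interval reveals how wide the range of compatible effects is.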
Confidence intervals
- Compute P-values for a broad range of possible test hypotheses. The interval of parameter values for which the P-value exceeds alpha is the range of values compatible with the data. Here "compatible" means that the data offer insufficient evidence to reject the hypothesized value.
- The confidence level of a CI is 1 - alpha.
- Under the Wald approximation, take an estimate $\hat\theta$ with estimated standard error $\widehat{SE}(\hat\theta)$. The statistic $T = (\hat\theta - \theta)/\widehat{SE}(\hat\theta)$ is approximately standard normal, so $1-\alpha = P(-t^* \le T \le t^*)$ and the limits are $\hat\theta \pm t^*\,\widehat{SE}(\hat\theta)$.
- We say that intervals constructed this way will, over unlimited repetitions of the study, contain the true value of the parameter with a frequency no less than their stated confidence level.
- We can also say that the confidence interval contains the values of the population parameter for which the difference between the parameter and the observed estimate is not statistically significant at the alpha level (Cox DR, Hinkley DV. Theoretical Statistics. Chapman & Hall, 1974. Pp. 214, 225, 233.).
- Unfortunately, repeated sampling is seldom realized. (Neither are the probability models.)
- The P-value for a parameter value falling outside the 1 - alpha confidence interval will be less than alpha.
- A common misinterpretation holds that all values inside the confidence interval are equally probable. However, a given confidence interval is only one of many nested intervals. Values inside narrower intervals (those with higher alpha, i.e., lower confidence) are more compatible with the data. See the P-value function.

Likelihood intervals
- The likelihood of a parameter value given the observed data is the probability of obtaining the observed data if the true parameter equals that value: $L(\theta \mid \text{data}) = P(\text{data} \mid \theta)$. A parameter value with a higher likelihood is said to have more support from the data.
- Most models have a maximum-likelihood estimate (MLE), the parameter value with the highest likelihood.
- The likelihood ratio is $LR(\theta) = L(\theta \mid \text{data}) / L(\hat\theta_{MLE} \mid \text{data})$.
- The collection of values with LR > 1/7 relative to the MLE is said to comprise a likelihood interval. The 1/7 value corresponds roughly to a 95% confidence interval. Other likelihood intervals may be constructed at different LRs.

Bayesian intervals
- Suppose one can specify the probability of a parameter independently of the data (a prior probability). The likelihood of that parameter given the data may then be used to compute a posterior probability, i.e., the probability of the parameter given the data. Bayesian methods are rationally coherent procedures for estimating such probabilities, where "rationally coherent" refers to adherence to the laws of probability.

Greenland S, Rothman KJ. Chapter 13: Fundamentals of epidemiologic data analysis. Modern Epidemiology: 2008.
- Test statistics can be directional (e.g., Z-values, T-values) or non-directional (e.g., chi-squared values). Non-directional tests reflect the absolute distance of the actual observations from the observations expected under the test hypothesis. By convention, we usually treat all tests as non-directional, and take special efforts to calculate two-tailed P-values from directional tests to facilitate this.
- The median-unbiased estimate is the parameter value for which the test statistic would have equal probability of being above and below its observed value over repetitions of the study (i.e., the upper and lower one-tailed P-values are equal). It is the parameter value at the peak of the two-tailed P-value function.
- Probability distribution = a model or function that gives the probability of each possible value of the test statistic.
- Binomial distribution:
  - The probability of one particular sequence of N trials containing k positives is $\pi^k(1-\pi)^{N-k}$. The k positives can be arranged in $\binom{N}{k}$ ways, so $P(Y = k \mid \pi) = \frac{N!}{k!\,(N-k)!}\,\pi^k(1-\pi)^{N-k}$.
  - Guess and check to obtain the median-unbiased estimate. Also check various parameter values until two are found whose two-tailed P-values equal alpha; these are the confidence limits.
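The guess-and-check search can be automated. For fixed data, the upper one-tailed P-value rises as π increases and the lower one-tailed P-value falls, so a root-finder works. This minimal Python sketch uses bisection, which is our choice; the chapter does not prescribe a root-finder. The limits it finds are the exact limits usually called Clopper-Pearson limits. The function names and example data are hypothetical.

```python
from math import comb
from typing import Callable


def upper_tail(y: int, n: int, p: float) -> float:
    """P(Y >= y | pi = p); increases with p."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(y, n + 1))


def lower_tail(y: int, n: int, p: float) -> float:
    """P(Y <= y | pi = p); decreases with p."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(0, y + 1))


def bisect_increasing(f: Callable[[float], float], target: float,
                      lo: float = 0.0, hi: float = 1.0, iters: int = 100) -> float:
    """Find p in [lo, hi] with f(p) = target, assuming f increases with p."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def exact_binomial(y: int, n: int, alpha: float = 0.05) -> tuple[float, float, float]:
    """Median-unbiased estimate and exact 1 - alpha limits for a binomial proportion."""
    # Median-unbiased estimate: upper and lower one-tailed P-values are equal.
    mue = bisect_increasing(lambda p: upper_tail(y, n, p) - lower_tail(y, n, p), 0.0)
    # Each limit is where the two-tailed P-value (twice the smaller tail) equals alpha.
    lower = 0.0 if y == 0 else bisect_increasing(lambda p: upper_tail(y, n, p), alpha / 2)
    upper = 1.0 if y == n else bisect_increasing(lambda p: -lower_tail(y, n, p), -alpha / 2)
    return mue, lower, upper


# Hypothetical data: 5 positives in 10 trials.
print(exact_binomial(5, 10))  # estimate ≈ 0.5, limits ≈ 0.187 and 0.813
```

Bisection only needs the monotonicity of each tail in π. Any bracketing root-finder would give the same limits.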
Calculating the exact median-unbiased estimate and confidence limits in this way requires guessing and checking.

Approximate statistics: the score method
- Simplifies the calculation of estimates and confidence intervals by assuming the test statistic is approximately normally distributed, with a standard error determined by the underlying probability model.
- If the test statistic is based on Y, the number of positives, then $\chi_{score} = (Y - N\pi)/\sqrt{\mathrm{Var}(Y)}$, where $\mathrm{Var}(Y) = N\pi(1-\pi)$ under the test value $\pi$. $\chi_{score}$ is approximately normally distributed with a mean of 0 and an SD of 1.
- P-values may be obtained from the standard normal distribution.
- CIs may be estimated by finding the Z-value $Z^*$ corresponding to 1 - alpha/2 and then calculating $\chi_{score}$ for different values of $\pi$. The values of $\pi$ for which $\chi_{score} = \pm Z^*$ are the confidence limits. One cannot simply substitute $Z^*$ for $\chi_{score}$ and rearrange for $\pi$, because the variance in the denominator also depends on $\pi$. The limits must instead be found iteratively (or by solving the resulting quadratic in $\pi$).
- The median-unbiased estimate occurs approximately where $\chi_{score} = 0$. Solving for $\pi$ gives $\hat\pi = Y/N$, which also happens to be the MLE of the proportion.
- The score method is approximately valid if $N\pi$ and $N(1-\pi)$ are both greater than 5.

Approximate statistics: the Wald method
- The Wald method simplifies the calculation of CIs by replacing the score SD with an unchanging value: the SD with $\pi$ evaluated at its point estimate, denoted $\hat\pi$.
- $\chi_{Wald} = (Y - N\pi)/\sqrt{N\hat\pi(1-\hat\pi)}$.

Likelihood-based methods
- $L(\pi \mid Y)$ can be calculated from the binomial equation.
- $LR(\pi) = L(\pi \mid Y)/L(\hat\pi \mid Y)$.
- The deviance statistic is $\chi^2_{LR} = -2\ln LR(\pi)$. It is approximately chi-squared distributed with one degree of freedom. Confidence limits are the values of $\pi$ at which $\chi^2_{LR}$ equals the critical chi-squared value.
- For alpha = 0.05, the critical chi-squared value with one degree of freedom is 3.84. Solving $-2\ln LR(\pi) = 3.84$ gives $LR(\pi) = \exp(-1.92) \approx 0.147$, which is about 1/7.
Thus, the 95% confidence interval calculated by the likelihood method is approximately the likelihood interval bounded by LR = 1/7.

Likelihoods in Bayesian analysis
- The posterior odds of $\pi_1$ vs $\pi_2$ equal the likelihood ratio $L(\pi_1 \mid Y)/L(\pi_2 \mid Y)$ multiplied by the prior odds of $\pi_1$ vs $\pi_2$.

Criteria for choosing a test statistic
- Confidence validity.
- Efficiency.
- Ease or availability of computation.

Adjustments to P-values
- Continuity correction: intended to err on the side of over-coverage for approximate statistics. It brings the approximate P-value closer to the exact P-value.
- Mid-P-values: the lower mid-P-value is the probability, under the test hypothesis, that the test statistic is less than its observed value, plus half the probability that it equals its observed value. The upper mid-P-value substitutes "greater than" for "less than". Mid-P-values emphasize efficiency: some risk of moderate undercoverage is accepted if worthwhile precision gains can be obtained.

Greenland S, Rothman KJ. Chapter 14: Introduction to categorical statistics. Modern Epidemiology: 2008.

Person-time data: large-sample methods
- Single study group: specify a Poisson probability model for the case count A over person-time T, so that $P(A = a) = e^{-IT}(IT)^a/a!$. The variance of a Poisson-distributed variable equals its mean. The MLE of I is $\hat I = A/T$. The ratio measure of association is the SMR = A/E, and it may be estimated as though E were known with certainty.
- E = the expected count. Use the Wald or score methods to calculate a test statistic with $\sqrt{E}$ as the standard deviation. The CI may be calculated using a Wald approximation.
- Two study groups: use a two-Poisson model, where $P(A_1 = a_1, A_0 = a_0) = P(A_1 = a_1)\,P(A_0 = a_0)$. The MLEs of $I_1$ and $I_0$, and of the incidence rate ratio (IR) and difference (ID), are straightforward. Calculate $E_1 = M_1 T_1/T$ (with $T = T_1 + T_0$). This is the expected number of exposed cases under the null hypothesis, given the total number of cases $M_1$. Then compare $A_1$ with $E_1$; a formula for the null variance is provided. The score method may be applied to obtain P-values, and the Wald method to obtain CIs.
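The large-sample person-time calculations can be sketched in Python as below. The sketch rests on three assumptions. First, the single-group score statistic uses $\sqrt{E}$ as the SD, as described above. Second, for two groups, the null variance of $A_1$ given $M_1$ is taken as the binomial variance $M_1 (T_1/T)(T_0/T)$; this follows from conditioning on $M_1$ under the null. Third, the Wald SE of ln(IR) is taken as $\sqrt{1/A_1 + 1/A_0}$. Function names and data are hypothetical.

```python
from math import exp, sqrt
from statistics import NormalDist

_N = NormalDist()


def smr_score_test(a: int, e: float) -> tuple[float, float, float]:
    """Single group: SMR = A/E and score statistic (A - E)/sqrt(E), treating E as known."""
    chi = (a - e) / sqrt(e)
    return a / e, chi, 2 * (1 - _N.cdf(abs(chi)))


def two_group_person_time(a1: int, t1: float, a0: int, t0: float,
                          alpha: float = 0.05) -> dict:
    """Score test and Wald CI for the incidence rate ratio (large-sample methods)."""
    m1, t = a1 + a0, t1 + t0
    e1 = m1 * t1 / t                      # expected exposed cases under H0, given M1
    v1 = m1 * t1 * t0 / t**2              # null (binomial) variance of A1 given M1
    chi = (a1 - e1) / sqrt(v1)            # score statistic
    z = _N.inv_cdf(1 - alpha / 2)
    ir = (a1 / t1) / (a0 / t0)            # MLE of the incidence rate ratio
    se_ln_ir = sqrt(1 / a1 + 1 / a0)      # Wald SE of ln(IR)
    return {
        "ID": a1 / t1 - a0 / t0,          # MLE of the incidence rate difference
        "IR": ir,
        "chi_score": chi,
        "p_value": 2 * (1 - _N.cdf(abs(chi))),
        "IR_CI": (ir * exp(-z * se_ln_ir), ir * exp(z * se_ln_ir)),
    }


# Hypothetical data: 30 cases in 1000 exposed person-years, 15 in 1000 unexposed.
print(two_group_person_time(30, 1000.0, 15, 1000.0))
print(smr_score_test(20, 10.0))
```

The score test uses the variance computed under the null, while the CI uses a Wald SE evaluated at the estimate. This mirrors the division of labor above: score P-values, Wald limits.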
Pure count data: large-sample methods
- Single study group: use the binomial equation. The MLE is straightforward ($\hat R = A/N$). The null hypothesis is A = E. The test statistic was provided in the previous chapter.
- Two study groups: use a two-binomial model. MLEs for measures of association and occurrence are straightforward. Calculate $E_1 = M_1 N_1/N$ under the null condition that $R_1 = R_0$. Base the test on $(A_1 - E_1)/SD(A_1)$, with the SD computed under the null hypothesis. Wald SDs for estimating CIs for the RR, OR, and RD are provided.

Person-time data: small-sample methods
- Single study group: the expected count is $IT = IR \times E$. Compute P-values for IR directly from the Poisson distribution.
- Two study groups: condition on $M_1$, the observed total number of cases. Then use the binomial probability model for the number of exposed cases, $A_1$, given $M_1$. The binomial parameter is a simple function of the incidence rate ratio and the observed person-time: $\pi = IR\,T_1/(IR\,T_1 + T_0)$. Solve for the estimates and limits of $\pi$, then convert them back to IR.

Pure count data: small-sample methods
- Single study group: use the binomial probability distribution.
- Two study groups: condition on all margins, i.e., the total numbers of cases, noncases, exposed, and unexposed. The probability that $A_1 = a_1$ under the null is then given by the hypergeometric distribution. The fixed-margins assumption is a holdover from experiments; in observational studies, the margins are not truly fixed. The noncentral hypergeometric distribution may be used to estimate the OR directly. The value of the OR that maximizes $P(A_1 = a_1)$ is the conditional MLE (CMLE).

Greenland S, Rothman KJ. Chapter 15: Introduction to stratified analysis. Modern Epidemiology: 2008.

Steps in stratified analysis
- Examine stratum-specific estimates.
- If heterogeneity is present, report stratum-specific estimates.
- If the data are reasonably consistent with homogeneity, obtain a single summary estimate. If this summary and its confidence limits are negligibly altered by ignoring a stratification variable, one may un-stratify on that variable.
- Obtain a P-value for the null hypothesis of no association in any stratum.
- Effect-measure modification differs from confounding: it is a finding to be reported, rather than a bias to be avoided.

Estimating a homogeneous measure
- Pooled estimates are weighted averages of stratum-specific measures. Pooling differs from standardization. Standardization applies external weights to occurrence measures without assuming homogeneity, whereas pooling applies data-driven weights to measures of association under the homogeneity assumption. Pooling is designed to assign weights that reflect the amount of information in each stratum.
- Direct pooling = precision weighting (Woolf method), but it requires large numbers in each cell.
- ML methods multiply the data probabilities for each stratum together to produce a total data probability, which is then maximized. The ML estimator of a pooled association has minimum large-sample variance among approximately unbiased estimators, and it is the optimal large-sample estimator. Mantel-Haenszel (M-H) estimators are easy to calculate and nearly as accurate as ML estimators.
- Only conditional-likelihood methods and M-H methods remain approximately valid with sparse data. They require only that the total number of subjects contributing to the estimates at each exposure-disease combination be adequate. Unconditional likelihood methods, by contrast, require that the binomial denominators in each stratum be large, where "large" is approximately 10 cases or more for odds-ratio analyses.
- Only exact methods have no sample-size requirements.
- Why use unconditional MLE at all? Conditional MLE is computationally demanding, and the unconditional and conditional methods produce nearly equal estimates in larger samples. Also, for certain quantities only the unconditional method is theoretically justified.
- M-H calculations are provided for the ID, IR, RD, and RR, along with the variances required to calculate CIs using the Wald method. A formula is provided to check whether the M-H "large-sample" criteria are met.
- P-values for the stratified null hypothesis test the overall departure of the data from the null value of no association.
- Testing homogeneity: $\chi^2_{Wald} = \sum_i (\hat U_i - \hat U)^2/\hat V_i$. Here $\hat U_i$ is the MLE of the measure of association within stratum i, $\hat U$ is the MLE of the common (pooled) measure across strata, and $\hat V_i$ is the estimated variance of $\hat U_i$. For ratio measures, U should be the logarithm of the ratio. Substituting the ln of an M-H rate ratio or odds ratio for the ln of the MLE is not theoretically correct, but it usually makes little difference. Do not use an M-H estimate of an ID, RD, or RR in place of an MLE; this will invalidate $\chi^2_{Wald}$.
- The analysis of matched-pair cohort and case-control data is an extension of M-H methods. It can be shown that McNemar's test is a simplified form of the M-H score statistic.
- Survival analysis accounts for loss to follow-up (LTFU) and competing risks by analyzing within time intervals so short that LTFU and competing risks do not occur within them.

Rassen JA, Brookhart MA, Glynn RJ, Mittleman MA, Schneeweiss S. Instrumental variables I: Instrumental variables exploit natural variation in nonexperimental data to estimate causal relationships. Journal of Clinical Epidemiology, 62(12): 2010.
- Non-experimental methods of causal inference must rely on an assumption of no unmeasured confounding.
- In an RCT, we would flip a coin to determine how two patients with MIs should be treated in the emergency room. But coin flipping may not be ethical here. Suppose, though, that we could observe something about these patients, other than their health status, that could in retrospect separate them into two effectively random groups.
- We are looking for a “natural experiment” in the data: a happenstance occurrence whose randomness can be exploited to perform a retrospective, nonexperimental “trial”.
- The marker for this occurrence is called an instrumental variable (IV).
- Instrumental variable = a variable in non-experimental data that can be thought to mimic the coin toss in a randomized trial.

Three assumptions
1. Strength: the IV predicts the actual treatment received. This can be verified from the data.
2. Independence: the IV must be independent of the outcome except through treatment. E.g., this is violated if PPP is a proxy for lower quality of care; perhaps a physician's PPP is determined by unawareness of alternative therapies! Unverifiable.
3. Exclusion: there must be no associations arising from common causes of the instrument and the outcome; otherwise conditioning would result in collider bias. E.g., this is violated by doctor shopping, where patients at higher risk choose physicians with certain PPPs. Unverifiable.

- The marginal subject = one whose treatment is determined by the IV, i.e., one who "complies" with the randomization that the IV mimics.

Examples
- Difference in distance to a hospital with a catheterization lab versus one without.
- Insurance benefit status as an IV for drug adherence.

Physician prescribing preference (PPP)
- Prescribing varies more between physicians than within physicians.
- When presented with a patient who could benefit equally from either drug, the physician's underlying preference will govern the choice of drug.
- Suppose preference shows natural variation, and patients choose their doctors without knowledge of that preference (or of factors associated with it). Then PPP can be substituted for randomization to the study drug.
- For a dichotomous IV, one can simply compare outcomes between the groups defined by the IV. However, this estimate is biased towards the null because of non-marginal patients, for whom PPP or differential distance to a catheterization lab was not the factor that determined treatment.

Non-marginal patients
- Rescale by the association between the IV (e.g., PPP) and the treatment.
- E.g., RD = RD(IV to outcome) / RD(IV to exposure).
- The numerator is the ITT estimate.
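The rescaling step can be sketched in a few lines of Python. The function name and all numbers are hypothetical. The ratio is the estimator described above (often called the Wald IV estimator), and the sketch assumes a binary instrument that satisfies the three assumptions.

```python
def iv_ratio_rd(risk_iv1: float, risk_iv0: float,
                p_treated_iv1: float, p_treated_iv0: float) -> float:
    """RD(IV to outcome) / RD(IV to exposure) for a binary instrument."""
    itt_rd = risk_iv1 - risk_iv0                 # numerator: ITT-type risk difference
    first_stage = p_treated_iv1 - p_treated_iv0  # how strongly the IV predicts treatment
    if abs(first_stage) < 1e-12:
        raise ValueError("the instrument does not predict treatment (assumption 1 fails)")
    return itt_rd / first_stage


# Hypothetical PPP example: patients of physicians who prefer drug A (IV = 1)
# vs drug B (IV = 0); 70% vs 30% actually receive drug A.
print(round(iv_ratio_rd(0.10, 0.12, 0.70, 0.30), 3))  # -0.05
```

With perfect compliance the denominator is 1, and the estimate equals the ITT risk difference. The weaker the instrument, the more the ITT estimate is inflated and the less precise the result.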
Analysis by modeling
- Two-stage least squares (2SLS)
  - Stage 1: predict the expected value of treatment from the instrument.
  - Stage 2: model the outcome as a function of the predicted treatment.
- This replaces the confounded treatment with a prediction of the treatment that would have occurred if allocation were random.
- Confounding then becomes an issue of crossover.
- Stage 2 may incorporate covariates, which relaxes assumption 2.

Sussman JB, Hayward A. An IV for the RCT: using instrumental variables to adjust for treatment contamination in randomized controlled trials. BMJ, 340(2): 2010.
- As-treated = all patients analyzed according to the treatment received. Per-protocol = a patient is included in the analysis only if they followed the assigned protocol; otherwise they are removed.
- As-treated and per-protocol analyses are biased, because adherence is related to other factors that affect the outcome.
- ITT is recommended: all subjects are analyzed as they were randomized. But ITT answers the question "How much do study participants benefit from being assigned to a treatment group?" instead of "What are the risks and benefits of receiving a treatment?"
- So the estimate of early side effects might be unbiased, but the estimate of later benefits may be biased towards the null. Clinical decision-making needs estimates of the benefits of being treated.
- IV analysis is designed to learn from natural experiments in which an unbiased "instrument" makes the exposure of interest more or less likely, but has no other effect, direct or indirect, on the outcome.

Contamination-adjusted ITT (CA-ITT)
- Randomization is treated as an IV.
- The ITT effect is adjusted by the percentage of assigned participants who ultimately receive the treatment.
- CA-ITT can complement ITT by producing a better estimate of the benefits and harms of receiving a treatment.
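A small simulated trial in plain Python can illustrate the 2SLS steps and the CA-ITT idea together. Everything in the simulation is hypothetical:
- a constant treatment effect of 2.0;
- an unmeasured prognostic factor `u`;
- 80% uptake in the treatment arm;
- contamination in the control arm among patients with high `u`.

Randomization is the instrument. With a single binary instrument and no covariates, 2SLS reduces to the ITT effect divided by the difference in treatment uptake between arms, which is the CA-ITT estimate.

```python
import random


def ols_fit(x: list[float], y: list[float]) -> tuple[float, float]:
    """Intercept and slope of the least-squares line of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def group_mean(values: list[float], groups: list[int], g: int) -> float:
    """Mean of values among records whose group label equals g."""
    selected = [v for v, gi in zip(values, groups) if gi == g]
    return sum(selected) / len(selected)


rng = random.Random(2010)
true_effect = 2.0
z, x, y = [], [], []
for _ in range(20_000):
    u = rng.gauss(0, 1)                       # unmeasured prognostic factor
    zi = 1 if rng.random() < 0.5 else 0       # randomized assignment (the instrument)
    if zi == 1:
        xi = 1 if rng.random() < 0.8 else 0   # 20% of the treatment arm never take it
    else:
        xi = 1 if u > 1.0 else 0              # high-u controls obtain treatment anyway
    yi = true_effect * xi + 1.5 * u + rng.gauss(0, 1)
    z.append(zi)
    x.append(xi)
    y.append(yi)

itt = group_mean(y, z, 1) - group_mean(y, z, 0)          # biased towards the null
as_treated = group_mean(y, x, 1) - group_mean(y, x, 0)   # confounded by u
uptake = group_mean(x, z, 1) - group_mean(x, z, 0)
ca_itt = itt / uptake                                    # ITT rescaled by uptake

a, b = ols_fit(z, x)                  # stage 1: treatment on the instrument
x_hat = [a + b * zi for zi in z]
_, tsls = ols_fit(x_hat, y)           # stage 2: outcome on predicted treatment

print(f"ITT {itt:.2f}, as-treated {as_treated:.2f}, CA-ITT {ca_itt:.2f}, 2SLS {tsls:.2f}")
```

In this simulation, ITT falls well below the true effect and the as-treated contrast lies above it. CA-ITT and 2SLS agree and land near 2.0. Standard errors from a naive second-stage regression are not valid, because they ignore the fact that `x_hat` was itself estimated; dedicated IV routines correct for this.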