Handout 12: One-Factor Analysis of Variance

advertisement

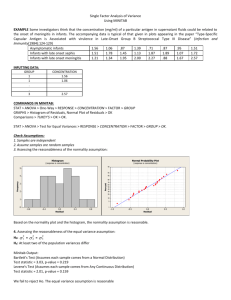

One-Factor Analysis of Variance

This procedure is used when one is interested in comparing more than two population means. It is a generalization of the pooled t-test.

Example: A researcher wants to compare three different methods for teaching reading.
Factor (explanatory variable): method of teaching. This factor has three levels.
Response variable: reading score.

Example: A researcher wants to compare four different programs designed to help people lose weight.
Factor: program. This factor has four levels.
Response variable: weight loss.

Hypothesis Test for One-Factor Analysis of Variance

$H_0: \mu_1 = \mu_2 = \cdots = \mu_k$
$H_a$: at least two of the population means differ

Test statistic: $F = \dfrac{MST}{MSE}$ (the mean square for treatments divided by the mean square for error)

Rejection region: reject $H_0$ if $F > F_{\alpha,\,df_1,\,df_2}$, where $df_1 = k - 1$ and $df_2 = N - k$, with k = number of levels of the factor and N = total number of observations.

ASSUMPTIONS:
1. Each of the k population distributions is normal.
2. The population variances are equal.
3. The observations in the sample from any particular one of the k populations are independent of one another.
4. The k random samples are selected independently of one another.

Logic Behind Analysis of Variance

Suppose that we wish to compare the mean hourly wages of three ethnic groups, based on samples of five workers selected from each group.

Table 1
| Group | Worker 1 | Worker 2 | Worker 3 | Worker 4 | Worker 5 | Sample mean |
|---|---|---|---|---|---|---|
| 1 | 5.90 | 5.92 | 5.91 | 5.89 | 5.88 | 5.90 |
| 2 | 5.51 | 5.50 | 5.50 | 5.49 | 5.50 | 5.50 |
| 3 | 5.01 | 5.00 | 4.99 | 4.98 | 5.02 | 5.00 |

Do the data in Table 1 present sufficient evidence to indicate differences among the three population means? An inspection of the data shows very little variation within each sample, whereas the variability among the sample means is much larger. Because the variability among the sample means is so large compared with the within-sample variation, we might conclude that the corresponding population means are different.
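To make the between-sample versus within-sample comparison concrete, here is a minimal Python sketch (standard library only) that computes the sums of squares, mean squares and F statistic for the Table 1 wages. The helper name `one_way_anova` is hypothetical, not a library API; it simply follows the usual definitions MST = SST/(k - 1) and MSE = SSE/(N - k).

```python
# Minimal one-way ANOVA sketch (standard library only) applied to Table 1.
from statistics import fmean


def one_way_anova(groups):
    """Return (SST, SSE, MST, MSE, F) for a list of samples.

    SST is the between-sample (treatment) sum of squares and
    SSE is the within-sample (error) sum of squares.
    """
    k = len(groups)                          # number of factor levels
    n_total = sum(len(g) for g in groups)    # N, total number of observations
    grand_mean = fmean(x for g in groups for x in g)

    # Between-sample variation: spread of the sample means around the grand mean.
    sst = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-sample variation: spread of each observation around its own sample mean.
    sse = sum((x - fmean(g)) ** 2 for g in groups for x in g)

    mst = sst / (k - 1)          # df1 = k - 1
    mse = sse / (n_total - k)    # df2 = N - k
    return sst, sse, mst, mse, mst / mse


table1 = [
    [5.90, 5.92, 5.91, 5.89, 5.88],  # group 1
    [5.51, 5.50, 5.50, 5.49, 5.50],  # group 2
    [5.01, 5.00, 4.99, 4.98, 5.02],  # group 3
]

sst, sse, mst, mse, f_stat = one_way_anova(table1)
print(f"SST = {sst:.4f}, SSE = {sse:.4f}, F = {f_stat:.1f}")
```

For Table 1 the F statistic comes out at roughly 5,545, far beyond the tabled critical value $F_{.05,2,12} \approx 3.89$, which matches the visual impression that the sample means differ far more than the observations within a sample do.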
Table 2
| Group | Worker 1 | Worker 2 | Worker 3 | Worker 4 | Worker 5 | Sample mean |
|---|---|---|---|---|---|---|
| 1 | 5.90 | 4.42 | 7.51 | 7.89 | 3.78 | 5.90 |
| 2 | 6.31 | 3.54 | 4.73 | 7.20 | 5.72 | 5.50 |
| 3 | 4.52 | 6.93 | 4.48 | 5.55 | 3.52 | 5.00 |

Table 2 illustrates a situation in which the sample means are the same as in Table 1 but the variability within each sample is much larger. In contrast to the data in Table 1, the between-sample variation is small relative to the within-sample variability, so we would be less likely to conclude from these data that the corresponding population means differ.

WHY NOT RUN MULTIPLE t-TESTS?

The problem with running multiple t-tests is that the probability of falsely rejecting $H_0$ in at least one of the tests increases as the number of t-tests increases. Thus, although the probability of a Type I error may be fixed at $\alpha = .05$ for each individual test, the probability of falsely rejecting $H_0$ in at least one of those tests is larger than .05.

Tukey’s Multiple Comparison Procedure

If we reject $H_0: \mu_1 = \mu_2 = \cdots = \mu_k$, we can perform all pairwise comparisons to locate where the differences are. One procedure often used for this purpose is Tukey’s multiple comparison procedure, which, when doing all pairwise comparisons, controls the experimentwise error rate.

In doing multiple comparisons, we need to consider the probability of falsely rejecting at least one of the null hypotheses. When all the null hypotheses are true, the probability of falsely rejecting at least one of them is denoted by $\alpha_E$ and is called the experimentwise (family) error rate. The Tukey intervals are constructed using a simultaneous confidence level (say 95%). That is, if the procedure were used repeatedly on many different data sets, then in the long run only 5% of the time would at least one of the intervals fail to include the value it is estimating.

Homogeneous Experimental Units

In using a one-factor ANOVA design, one should try to sample experimental units from a homogeneous population.
If the experimental units are not homogeneous with respect to characteristics that may affect the response variable, the within-sample variation can be large, making it difficult to detect differences among the treatment means. One drawback, though, is that sampling from a homogeneous population limits the inferences that can be drawn: the results of the study may generalize only to that homogeneous population. A randomized block design, which will be studied later in this course, is a useful design when the experimental units are not homogeneous.

Example

In a nutrition experiment, an investigator studied the effects of different rations on the growth of young rats. Forty rats from the same inbred strain were divided at random into four groups of ten and used for the experiment. A different ration was fed to each group and, after a specified length of time, the increase in growth of each rat was measured (in grams). Analyze the data. Use $\alpha = .05$.

| Ration | Increase in growth (grams), ten rats per ration |
|---|---|
| A | 10, 6, 8, 6, 12, 9, 11, 5, 9, 6 |
| B | 13, 9, 15, 10, 14, 8, 13, 10, 17, 8 |
| C | 12, 10, 16, 12, 13, 10, 11, 9, 15, 9 |
| D | 15, 21, 13, 18, 15, 20, 10, 19, 12, 22 |

Assessing the reasonableness of the ANOVA assumptions:

1. Assessing the reasonableness of the normality assumption:
[Figure: Normal probability plot of the residuals (responses are A, B, C, D); horizontal axis: Residual, vertical axis: Percent.]
Based on the normality plot, the normality assumption is reasonable.

2. Assessing the reasonableness of the equal variance assumption:
$H_0: \sigma_A^2 = \sigma_B^2 = \sigma_C^2 = \sigma_D^2$

| Test | Test statistic | P-value |
|---|---|---|
| Bartlett's test | 3.51 | 0.319 |
| Levene's test | 2.73 | 0.058 |

Based on the p-values (both greater than $\alpha = .05$), we fail to reject $H_0$. The equal variance assumption is reasonable.

3. From examining the way the study was conducted (the rats were divided at random into four separate groups), we can say that Assumptions 3 and 4 are satisfied.
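As a cross-check on the equal-variance output, the sketch below recomputes Bartlett's statistic and a Levene-type statistic for the ration data in plain Python. It assumes that the Levene statistic reported above is the median-centered (Brown-Forsythe) variant; with that choice the hand calculation reproduces 2.73, and Bartlett's formula reproduces 3.51. The function names are hypothetical helpers, and p-values are not computed because the standard library has no chi-square or F distribution.

```python
# Sketch: Bartlett's and Levene's (median-centered) statistics for the ration data.
from math import log
from statistics import fmean, median, variance

rations = {
    "A": [10, 6, 8, 6, 12, 9, 11, 5, 9, 6],
    "B": [13, 9, 15, 10, 14, 8, 13, 10, 17, 8],
    "C": [12, 10, 16, 12, 13, 10, 11, 9, 15, 9],
    "D": [15, 21, 13, 18, 15, 20, 10, 19, 12, 22],
}


def bartlett_statistic(groups):
    """Bartlett's chi-square statistic (k - 1 df) for H0: equal variances."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Pooled variance: the MSE of the one-way ANOVA.
    pooled = sum((len(g) - 1) * variance(g) for g in groups) / (n_total - k)
    numerator = (n_total - k) * log(pooled) - sum(
        (len(g) - 1) * log(variance(g)) for g in groups
    )
    correction = 1 + (
        sum(1 / (len(g) - 1) for g in groups) - 1 / (n_total - k)
    ) / (3 * (k - 1))
    return numerator / correction


def levene_median_statistic(groups):
    """Levene-type F statistic: a one-way ANOVA F computed on the absolute
    deviations of each observation from its group median (Brown-Forsythe)."""
    devs = [[abs(x - median(g)) for x in g] for g in groups]
    k = len(devs)
    n_total = sum(len(d) for d in devs)
    grand = fmean(z for d in devs for z in d)
    between = sum(len(d) * (fmean(d) - grand) ** 2 for d in devs) / (k - 1)
    within = sum((z - fmean(d)) ** 2 for d in devs for z in d) / (n_total - k)
    return between / within


groups = list(rations.values())
for name, g in rations.items():
    print(f"Ration {name}: mean = {fmean(g):.2f}, variance = {variance(g):.2f}")
print(f"Bartlett statistic = {bartlett_statistic(groups):.2f}")
print(f"Levene (median) statistic = {levene_median_statistic(groups):.2f}")
```

Bartlett's value of about 3.51 is well below the 5% chi-square critical value with 3 degrees of freedom (about 7.81), which agrees with the reported p-value of 0.319.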
Since the assumptions are reasonable, we can perform the analysis of variance.

One-way ANOVA: A, B, C, D

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Factor | 3 | 348.68 | 116.23 | 12.22 | 0.000 |
| Error | 36 | 342.30 | 9.51 | | |
| Total | 39 | 690.98 | | | |

S = 3.084   R-Sq = 50.46%   R-Sq(adj) = 46.33%

The P-value of 0.000 is for $H_0: \mu_A = \mu_B = \mu_C = \mu_D$. Since it is less than $\alpha = .05$, we reject $H_0$ and conclude that at least two of the ration means differ.

Tukey 95% Simultaneous Confidence Intervals, All Pairwise Comparisons

| Comparison | Lower | Center | Upper |
|---|---|---|---|
| B minus A | -0.215 | 3.500 | 7.215 |
| C minus A | -0.215 | 3.500 | 7.215 |
| D minus A | 4.585 | 8.300 | 12.015 |
| C minus B | -3.715 | 0.000 | 3.715 |
| D minus B | 1.085 | 4.800 | 8.515 |
| D minus C | 1.085 | 4.800 | 8.515 |

The intervals for D minus A, D minus B and D minus C do not contain 0, so the mean growth for ration D differs from that of each of the other rations. The intervals for B minus A, C minus A and C minus B contain 0, so those pairs do not differ significantly.

What if the normality assumption is not reasonable?

The Kruskal-Wallis test, a rank-based nonparametric procedure, can be used to compare more than two populations when it is not reasonable to assume that each sample comes from a normal population distribution. This test is easily performed in Minitab.

What if the equal variance assumption is not reasonable?

When the equal variance assumption is not reasonable, it may be possible to transform the values of the response variable so that the necessary conditions for ANOVA are satisfied on the transformed scale. An analysis of variance is then done on the transformed data.
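The ANOVA table and the Tukey intervals for the ration data can be cross-checked with a short standard-library sketch. The critical value `Q_CRIT = 3.81` is an assumption: an approximate studentized-range value q(.05; 4, 36) interpolated from a printed table, since Python's standard library cannot compute it. With equal sample sizes n, each Tukey interval is taken as (mean_b - mean_a) plus or minus q * sqrt(MSE / n).

```python
# Sketch: one-way ANOVA table and Tukey intervals for the ration data.
from itertools import combinations
from math import sqrt
from statistics import fmean

rations = {
    "A": [10, 6, 8, 6, 12, 9, 11, 5, 9, 6],
    "B": [13, 9, 15, 10, 14, 8, 13, 10, 17, 8],
    "C": [12, 10, 16, 12, 13, 10, 11, 9, 15, 9],
    "D": [15, 21, 13, 18, 15, 20, 10, 19, 12, 22],
}
# ASSUMPTION: approximate studentized-range critical value q(0.05; k=4, df=36),
# interpolated from a printed table; it is not computed here.
Q_CRIT = 3.81

groups = list(rations.values())
k = len(groups)                           # number of rations (factor levels)
n_total = sum(len(g) for g in groups)     # N = 40 rats
grand_mean = fmean(x for g in groups for x in g)

# Sums of squares, degrees of freedom and mean squares for the ANOVA table.
ss_factor = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
ss_error = sum((x - fmean(g)) ** 2 for g in groups for x in g)
df_factor, df_error = k - 1, n_total - k
ms_factor = ss_factor / df_factor
ms_error = ss_error / df_error
f_stat = ms_factor / ms_error
r_sq = ss_factor / (ss_factor + ss_error)

print(f"Factor  DF={df_factor}  SS={ss_factor:.2f}  MS={ms_factor:.2f}  F={f_stat:.2f}")
print(f"Error   DF={df_error}  SS={ss_error:.2f}  MS={ms_error:.2f}")
print(f"S = {sqrt(ms_error):.3f}  R-Sq = {100 * r_sq:.2f}%")

# Tukey intervals for equal group sizes n: (mean_b - mean_a) +/- q * sqrt(MSE / n).
n = len(groups[0])                        # each ration has n = 10 rats
half_width = Q_CRIT * sqrt(ms_error / n)
means = {name: fmean(g) for name, g in rations.items()}

intervals = {}
for a, b in combinations(rations, 2):     # (A, B), (A, C), ..., (C, D)
    center = means[b] - means[a]          # "a subtracted from b", as in the Minitab output
    lower, upper = center - half_width, center + half_width
    intervals[(a, b)] = (lower, center, upper)
    verdict = "differ" if lower > 0 or upper < 0 else "no significant difference"
    print(f"{b} - {a}: ({lower:.3f}, {center:.3f}, {upper:.3f})  {verdict}")
```

With these inputs the interval half-width is about 3.715, so the sketch's intervals agree with the Minitab intervals to three decimals; a different tabled q would shift every endpoint by the same amount.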