PROBLEM SET 2 – DUE: OCTOBER 24


ARE/ECN 240C Time Series Analysis

Fall 2002

Professor Òscar Jordà

Economics, U.C. Davis

PROBLEM SET 2 – SOLUTIONS

Instructions

Part I – Analytical Questions

Problem 1: Consider the AR(2) process

$$y_t = 2.5 + 1.1\,y_{t-1} - 0.18\,y_{t-2} + \varepsilon_t; \qquad \varepsilon_t \sim \text{i.i.d.}(0,1)$$

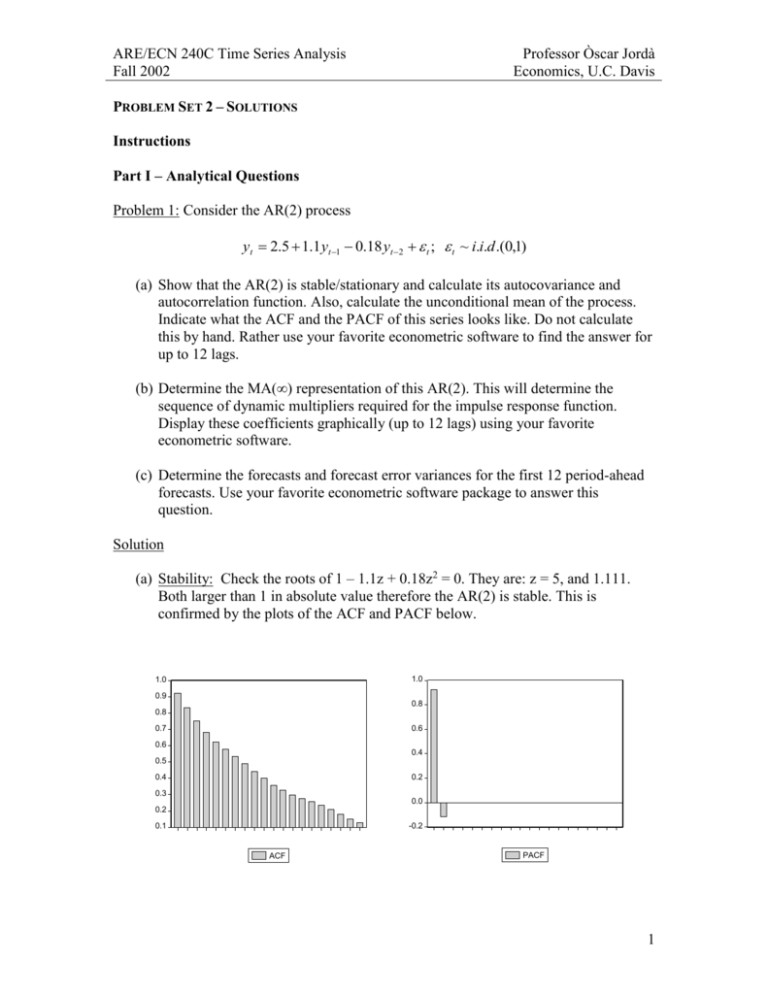

(a) Show that the AR(2) is stable/stationary and calculate its autocovariance and

autocorrelation function. Also, calculate the unconditional mean of the process.

Indicate what the ACF and the PACF of this series look like. Do not calculate

this by hand. Rather use your favorite econometric software to find the answer for

up to 12 lags.

(b) Determine the MA(∞) representation of this AR(2). This will determine the

sequence of dynamic multipliers required for the impulse response function.

Display these coefficients graphically (up to 12 lags) using your favorite

econometric software.

(c) Determine the forecasts and forecast error variances for the first 12 period-ahead

forecasts. Use your favorite econometric software package to answer this

question.

Solution

(a) Stability: Check the roots of $1 - 1.1z + 0.18z^2 = 0$. They are $z = 5$ and $z \approx 1.111$.

Both are larger than 1 in absolute value, so the AR(2) is stable. This is

consistent with the plots of the ACF and PACF below.

[Figure: two panels, "ACF" and "PACF", of the AR(2) process]


(b)

[Figure: "IRF - MA(inf)", the impulse response coefficients of the MA(∞) representation]

(c) Given $y_t$ and $y_{t-1}$, the h-period-ahead forecast is calculated by iterating the AR(2) recursion forward, with all future shocks set to their mean of zero. The forecast error variance follows from the MA(∞) representation: writing $\psi_j$ for the MA(∞) coefficients, the FEV at horizon h is the variance of the residuals times the sum of the first h squared coefficients, $\sigma^2 \sum_{j=0}^{h-1} \psi_j^2$.
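The calculations requested in Problem 1 can be sketched numerically as follows. This is a minimal illustration in Python with NumPy, not the software used for the original plots; the sign of the constant 2.5 is an assumption (the extracted equation lost its operators), and the helper names `ma_weights` and `forecasts` are hypothetical.

```python
import numpy as np

# AR(2): y_t = c + phi1*y_{t-1} + phi2*y_{t-2} + eps_t, Var(eps_t) = sigma2.
# The sign of c is assumed; phi2 = -0.18 matches the polynomial 1 - 1.1z + 0.18z^2.
c, phi1, phi2, sigma2 = 2.5, 1.1, -0.18, 1.0

# Roots of 1 - phi1*z - phi2*z^2 = 0 (np.roots takes the highest power first).
roots = np.roots([-phi2, -phi1, 1.0])

# Unconditional mean of a stationary AR(2).
mu = c / (1.0 - phi1 - phi2)

def ma_weights(phi1, phi2, n):
    """Return psi_0..psi_{n-1} from psi_j = phi1*psi_{j-1} + phi2*psi_{j-2}."""
    psi = np.zeros(n)
    psi[0] = 1.0
    if n > 1:
        psi[1] = phi1
    for j in range(2, n):
        psi[j] = phi1 * psi[j - 1] + phi2 * psi[j - 2]
    return psi

psi = ma_weights(phi1, phi2, 13)          # impulse responses, lags 0..12

# Autocorrelations: rho_1 = phi1/(1 - phi2), then the same recursion as psi.
acf = np.zeros(13)
acf[0], acf[1] = 1.0, phi1 / (1.0 - phi2)
for j in range(2, 13):
    acf[j] = phi1 * acf[j - 1] + phi2 * acf[j - 2]

# Forecast error variance at horizons 1..12: sigma2 * sum_{j<h} psi_j^2.
fev = sigma2 * np.cumsum(psi[:12] ** 2)

def forecasts(y_t, y_tm1, h):
    """Iterate the AR(2) forward h periods with future shocks set to zero."""
    path = [y_tm1, y_t]
    for _ in range(h):
        path.append(c + phi1 * path[-1] + phi2 * path[-2])
    return np.array(path[2:])
```

Calling `forecasts(y_t, y_tm1, 12)` with the last two observations gives the 12 point forecasts, and `fev` gives the matching forecast error variances.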

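Problem 2: If

$$y_t = x_t + z_t$$

$$x_t = \mu + \varepsilon_t + \theta\,\varepsilon_{t-1} \quad \text{with} \quad \varepsilon_t \overset{i.i.d.}{\sim} D(0, \sigma^2)$$

$$z_t = \rho\, z_{t-1} + u_t \quad \text{with} \quad u_t \overset{i.i.d.}{\sim} D(0, \sigma_u^2)$$

with $\varepsilon$ and $u$ independent of each other. Then: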

(a) What is the process for yt?

(b) Give conditions to ensure yt is covariance stationary and invertible.

(c) Find the long-horizon forecast for yt and its variance.

Solution

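(a) Notice:

$$(1-\rho L)\,y_t = (1-\rho L)\,\mu + (1-\rho L)\,\varepsilon_t + \theta(1-\rho L)\,\varepsilon_{t-1} + u_t$$

or

$$y_t = \mu(1-\rho) + \rho\, y_{t-1} + (\varepsilon_t + u_t) + (\theta-\rho)\,\varepsilon_{t-1} - \theta\rho\,\varepsilon_{t-2}$$

which is an ARMA(1,2).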


(b) Stationarity only depends on $\rho$, so the usual condition applies here: $|\rho| < 1$. For invertibility, we need to ensure that the roots of $1 + (\theta-\rho)z - \theta\rho z^2 = 0$ are outside the unit circle. Of course, the roots for this problem are trivial since the polynomial factors as $(1+\theta z)(1-\rho z)$, giving $z = -1/\theta$ and $z = 1/\rho$. Since stationarity requires that $|\rho| < 1$ already, we only need $|\theta| < 1$.

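(c) The long-horizon forecast for a stationary model is the unconditional mean, which for this problem is just $\mu$. The long-run forecast error variance is also easy to calculate since it is the unconditional variance of $y$. This variance can be calculated as follows. Notice that:

$$y_t = \mu + \frac{\varepsilon_t + u_t}{1-\rho L} + \frac{(\theta-\rho)\,\varepsilon_{t-1}}{1-\rho L} - \frac{\theta\rho\,\varepsilon_{t-2}}{1-\rho L}.$$

Noting that the $\varepsilon$ are serially independent and that $\varepsilon$ and $u$ are independent, then

$$V(y_t) = \frac{\sigma^2 + \sigma_u^2}{1-\rho^2} + \frac{(\theta-\rho)^2\sigma^2}{1-\rho^2} + \frac{\theta^2\rho^2\sigma^2}{1-\rho^2}$$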
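As a cross-check on the long-run variance in Problem 2, one can work from the decomposition $y_t = x_t + z_t$ directly. This is a sketch under assumed notation, since the symbols are not legible in the extracted source: $x_t$ is taken to be an MA(1) with coefficient $\theta$ and shock variance $\sigma^2$, and $z_t$ an AR(1) with coefficient $\rho$ and shock variance $\sigma_u^2$. Because $\varepsilon$ and $u$ are independent, the two component variances add:

```latex
V(y_t) = V(x_t) + V(z_t) = (1+\theta^2)\,\sigma^2 + \frac{\sigma_u^2}{1-\rho^2}
```

This route avoids having to track the covariances among the lagged $\varepsilon$ terms, which share the common filter $1/(1-\rho L)$ and are therefore correlated with one another whenever $\rho \neq 0$.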

Problem 3: Suppose

$$y_t = \rho\, y_{t-1} + s_t\,\varepsilon_t, \qquad \varepsilon_t \sim N(0, \sigma^2), \qquad s_t = \exp(\rho\, y_{t-1})$$

(a) Derive the conditional log-likelihood for y0 = 0.

Solution: Omitting constants,

$$L(\rho) = -\frac{1}{2}\sum_{t=1}^{T}\log\left(s_t^2\sigma^2\right) - \frac{1}{2}\sum_{t=1}^{T}\frac{(y_t - \rho\, y_{t-1})^2}{s_t^2\sigma^2}$$

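(b) Derive the score. Assume $\sigma^2$ is known. Solution:

$$\frac{\partial L}{\partial\rho} = -\frac{1}{2}\sum_{t=1}^{T}\frac{2 s_t' s_t \sigma^2}{s_t^2\sigma^2} - \frac{1}{2}\sum_{t=1}^{T}\frac{-2y_{t-1}(y_t-\rho y_{t-1})\,s_t^2\sigma^2 - (y_t-\rho y_{t-1})^2\, 2 s_t s_t'\sigma^2}{\sigma^4 s_t^4}$$

Noting that $s_t' = y_{t-1}s_t$, the expression of the score simplifies to

$$\frac{\partial L}{\partial\rho} = -\sum_{t=1}^{T} y_{t-1} + \sum_{t=1}^{T}\frac{(y_t-\rho y_{t-1})\,y_{t-1}}{\sigma^2 s_t^2} + \sum_{t=1}^{T}\frac{(y_t-\rho y_{t-1})^2\,y_{t-1}}{\sigma^2 s_t^2}$$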

(c) Derive the estimator of the information matrix. Assume $\sigma^2$ is known.


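Solution: After some tedious algebra,

$$H = \frac{\partial^2 L}{\partial\rho^2} = -\sum_{t=1}^{T}\frac{y_{t-1}^2}{\sigma^2 s_t^2} - 4\sum_{t=1}^{T}\frac{(y_t-\rho y_{t-1})\,y_{t-1}^2}{\sigma^2 s_t^2} - 2\sum_{t=1}^{T}\frac{(y_t-\rho y_{t-1})^2\,y_{t-1}^2}{\sigma^2 s_t^2}$$

Note that since $\sigma^2$ is assumed known, we have not needed to derive the score with respect to $\sigma^2$ nor the cross products of the information matrix, which in this case boils down to a second derivative.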

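(d) Derive the LM test for $H_0: \rho = 0$. Assume $\sigma^2$ is known.

Solution: Under the null, $s_t = 1$ and $\rho = 0$. Therefore the information matrix estimate of $-E(H)$ is

$$-\frac{\partial^2 L}{\partial\rho^2} = \sum_{t=1}^{T}\frac{y_{t-1}^2}{\sigma^2} + 4\sum_{t=1}^{T}\frac{y_t\,y_{t-1}^2}{\sigma^2} + 2\sum_{t=1}^{T}\frac{y_t^2\,y_{t-1}^2}{\sigma^2}$$

Note that the score evaluated at the null is

$$-\sum_{t=1}^{T} y_{t-1} + \sum_{t=1}^{T}\frac{y_t\,y_{t-1}}{\sigma^2} + \sum_{t=1}^{T}\frac{y_t^2\,y_{t-1}}{\sigma^2}$$

so that the statistic is:

$$LM = \frac{\left(-\sum y_{t-1} + \sum \dfrac{y_t\,y_{t-1}}{\sigma^2} + \sum \dfrac{y_t^2\,y_{t-1}}{\sigma^2}\right)^2}{\sum \dfrac{y_{t-1}^2}{\sigma^2} + 4\sum \dfrac{y_t\,y_{t-1}^2}{\sigma^2} + 2\sum \dfrac{y_t^2\,y_{t-1}^2}{\sigma^2}} \sim \chi^2_1$$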

(e) Would it matter if $\sigma^2$ were unknown?

Solution:

If $\sigma^2$ were unknown then we would have a vector of scores and a 2 by 2 Hessian matrix. Although $\sigma^2$ may appear not identified, this is not the case since the expression for $s_t$ does not contain a constant term.


Problem 4: Suppose

$$y_t = f(x_t, \beta, \gamma) + \varepsilon_t \quad \text{with} \quad f(x_t, \beta, \gamma) = \beta\ln(x_t + \gamma)$$

Describe an easy approach to testing the hypothesis $H_0: \gamma = 0$ and show how you would conduct the test.

Solution

There are many ways of answering this question, obviously. I have chosen the option that

would be most easily implemented in any computer software that runs linear regression.

Note:

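$$\left.\frac{\partial f}{\partial\gamma}\right|_{H_0} = \left.\frac{\beta}{x_t+\gamma}\right|_{H_0} = \frac{\beta}{x_t}; \qquad \left.\frac{\partial f}{\partial\beta}\right|_{H_0} = \left.\ln(x_t+\gamma)\right|_{H_0} = \ln x_t$$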

Therefore, the test can be performed as follows:

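Step 1: Estimate the regression $y_t = \beta\ln x_t + \varepsilon_t$ and save the residuals $\hat\varepsilon_t$ for the next step.

Step 2: Use the residuals $\hat\varepsilon_t$ as the dependent variable in the regression $\hat\varepsilon_t = \delta_1\ln x_t + \delta_2\dfrac{1}{x_t} + u_t$. The $TR^2$ of this regression will be distributed as a $\chi^2_1$.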
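The two steps above can be sketched with ordinary least squares in NumPy. This is an illustration only: the data are simulated under the null with hypothetical values (`beta_true`, the range of `x`), the symbols $\beta$, $\gamma$ follow the reading $f = \beta\ln(x_t+\gamma)$, and the uncentered $R^2$ is used because neither regression includes a constant (an assumption about how $TR^2$ is meant here).

```python
import numpy as np

rng = np.random.default_rng(0)
T = 500
x = rng.uniform(1.0, 5.0, size=T)                # hypothetical positive regressor
beta_true = 2.0                                  # hypothetical parameter value
y = beta_true * np.log(x) + rng.normal(size=T)   # generated under H0: gamma = 0

def ols(X, y):
    """Least-squares coefficients and residuals for y = X b + e."""
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    return b, y - X @ b

# Step 1: restricted regression of y on ln(x); keep the residuals.
X1 = np.log(x)[:, None]
b1, e_hat = ols(X1, y)

# Step 2: regress the residuals on both gradient terms, ln(x) and 1/x.
X2 = np.column_stack([np.log(x), 1.0 / x])
_, u_hat = ols(X2, e_hat)

# Uncentered R^2 of the auxiliary regression, then the LM statistic T*R^2.
r2 = 1.0 - (u_hat @ u_hat) / (e_hat @ e_hat)
lm_stat = T * r2
```

The value of `lm_stat` would be compared with a $\chi^2_1$ critical value (3.84 at the 5% level).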

Problem 5: Consider the following stationary data generation process for a random

variable yt

$$y_t = \rho\, y_{t-1} + e_t, \qquad e_t \sim N(0,1) \ \text{i.i.d.}$$

with $|\rho| < 1$, and $y_0 \sim N\left(0, 1/(1-\rho^2)\right)$.

(a) Obtain the population mean, variance, autocovariances and autocorrelations.

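$$E(y_t) = E\left(\sum_{i=0}^{t-1}\rho^i e_{t-i}\right) = 0$$

$$\sigma_y^2 = E\left(\rho^2 y_{t-1}^2 + 2\rho\, y_{t-1}e_t + e_t^2\right) = \rho^2\sigma_y^2 + 1 = \frac{1}{1-\rho^2}$$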


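since $\{y_t\}$ is stationary. Further, notice that $E(e_t^4) = 3$. Noting only the nonzero terms:

$$E(y_t^4) = \rho^4 E(y_{t-1}^4) + 6\rho^2 E(y_{t-1}^2 e_t^2) + E(e_t^4) = \frac{3 + 6\rho^2\sigma_y^2}{1-\rho^4}$$

Finally,

$$COV(y_t, y_{t-1}) = E(y_t\, y_{t-1}) = \rho E(y_{t-1}^2) + E(y_{t-1}e_t) = \rho\,\sigma_y^2$$

$$E(y_t\, y_{t-k}) = \rho\, E(y_t\, y_{t-k+1})$$

so that, iterating, the k-th autocovariance is $\rho^k\sigma_y^2$ and the k-th autocorrelation is $\rho^k$.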

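(b) Derive

(i) $E\left[T^{-1}\sum_{t=2}^{T} y_{t-1}e_t\right] = T^{-1}\sum_{t=2}^{T} E(y_{t-1}e_t) = 0$

(ii) $E\left[T^{-1}\sum_{t=1}^{T} y_t^2\right] = T^{-1}\sum_{t=1}^{T} E(y_t^2) = T^{-1}\sum_{t=1}^{T}\sigma_y^2 = \sigma_y^2 = \dfrac{1}{1-\rho^2}$

(iii) $E\left[T^{-1}\sum_{t=2}^{T} y_t\,y_{t-1}\right] = T^{-1}\sum_{t=2}^{T} E(y_t\,y_{t-1}) = T^{-1}\sum_{t=2}^{T}\rho\,\sigma_y^2 = \dfrac{T-1}{T}\,\dfrac{\rho}{1-\rho^2}$

(iv) Note: $E(y_t^4) = \dfrac{3 + 6\rho^2\sigma_y^2}{1-\rho^4}$. Derive

$$V\left(T^{-1}\sum_{t=1}^{T} y_t^2\right) = E\left[\left(T^{-1}\sum_{t=1}^{T} y_t^2 - \sigma_y^2\right)^2\right] = T^{-2}\sum_{t=1}^{T}\sum_{s=1}^{T} E(y_t^2 y_s^2) - \sigma_y^4$$

$$= T^{-2}\sum_{t=1}^{T} E(y_t^4) + 2T^{-2}\sum_{t=1}^{T}\sum_{s=1}^{t-1} E(y_t^2 y_s^2) - \sigma_y^4$$

$$= 3T^{-1}\sigma_y^2\sigma_y^2 + 2T^{-2}\sum_{t=1}^{T}\sum_{s=1}^{t-1}\left(1 + 2\rho^{2(t-s)}\right)\sigma_y^4 - \sigma_y^4$$

$$= \sigma_y^4\left[\frac{3}{T} + \frac{2}{T^2}\,\frac{T(T-1)}{2} + \frac{4}{T^2}\sum_{t=1}^{T}\frac{\rho^2\left(1-\rho^{2(t-1)}\right)}{1-\rho^2} - 1\right]$$

$$= \sigma_y^4\left[3T^{-1} - T^{-1} + 4T^{-1}\rho^2(1-\rho^2)^{-1} + O(T^{-2})\right]$$

$$\approx 2T^{-1}\sigma_y^4\,\frac{1+\rho^2}{1-\rho^2}$$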


(c) Derive the limiting distribution of the sample mean. Hint: {yt} is not i.i.d. but it is

ergodic for the mean.

Since yt is stationary, the only difficulty in the derivation of the distribution of the

sample mean is that {yt} is not an i.i.d. process, although it is ergodic. A general

strong law of large numbers and a central limit theorem can be applied to such

processes so that the sample mean converges a.s. to the population mean, which is

zero, and is normally distributed around zero (i.e. using the central limit theorem for

martingale difference sequences we discussed in class). However, the problem can

also be solved from first principles as follows:

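$$E(\bar y) = E\left(T^{-1}\sum_{t=1}^{T} y_t\right) = T^{-1}\sum_{t=1}^{T} E(y_t) = 0;$$

$$V(\bar y) = E\left[\left(T^{-1}\sum_{t=1}^{T} y_t\right)^2\right] = T^{-2}\sum_{t=1}^{T}\sum_{s=1}^{T} E(y_t\,y_s)$$

$$= \sigma_y^2 T^{-2}\sum_{t=1}^{T}\sum_{s=1}^{T}\rho^{|t-s|} = \sigma_y^2 T^{-1}\left[1 + 2\sum_{j=1}^{T-1}\left(1-\frac{j}{T}\right)\rho^j\right] = T^{-1}\sigma_y^2\,\frac{1+\rho}{1-\rho} + O(T^{-2})$$

As $T\to\infty$, $V(\bar y)\to 0$ so $\operatorname{plim}_{T\to\infty}\bar y = 0$. However

$$V(\sqrt{T}\,\bar y) \to \sigma_y^2\,\frac{1+\rho}{1-\rho} = \frac{1}{(1-\rho)^2}$$

Next, $\sqrt{T}\,\bar y$ is a linear function of independent, normally distributed components $\{e_t\}$, with a finite, non-zero variance in large samples, so that:

$$\sqrt{T}\,\bar y \xrightarrow{d} N\left(0, \frac{1}{(1-\rho)^2}\right)$$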

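(d) Obtain the limiting distribution of the least squares estimator of $\rho$. Hint: calculate $\sqrt{T}(\hat\rho - \rho)$ and then derive its distribution.

Using the above results and Slutsky’s theorem:

$$\operatorname{plim}_{T\to\infty}(\hat\rho - \rho) = \frac{\operatorname{plim}_{T\to\infty} T^{-1}\sum_{t=2}^{T} y_{t-1}e_t}{\operatorname{plim}_{T\to\infty} T^{-1}\sum_{t=2}^{T} y_{t-1}^2} = \frac{0}{\sigma_y^2} = 0$$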


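Next, from Mann-Wald

$$\frac{1}{\sqrt{T}}\sum_{t=2}^{T} y_{t-1}e_t \xrightarrow{d} N\left(0, \frac{1}{1-\rho^2}\right)$$

Using Cramér’s theorem, we thus have

$$\sqrt{T}(\hat\rho - \rho) \xrightarrow{d} N\left(0, 1-\rho^2\right)$$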
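The limiting variance $1-\rho^2$ can be checked with a small Monte Carlo experiment. The sketch below is illustrative only: the values of `rho`, `T`, and `reps` are arbitrary choices, and the symbol $\rho$ for the AR(1) coefficient is the notation assumed here.

```python
import numpy as np

def simulate_ar1(rho, T, rng):
    """Simulate y_0..y_T with y_0 drawn from the stationary N(0, 1/(1-rho^2))."""
    y = np.empty(T + 1)
    y[0] = rng.normal(scale=np.sqrt(1.0 / (1.0 - rho**2)))
    e = rng.normal(size=T)
    for t in range(1, T + 1):
        y[t] = rho * y[t - 1] + e[t - 1]
    return y

def ols_rho(y):
    """Least squares estimate of rho in y_t = rho*y_{t-1} + e_t (no constant)."""
    return (y[1:] @ y[:-1]) / (y[:-1] @ y[:-1])

rng = np.random.default_rng(42)
rho, T, reps = 0.5, 400, 2000

# Collect sqrt(T)*(rho_hat - rho) across replications.
z = np.array([
    np.sqrt(T) * (ols_rho(simulate_ar1(rho, T, rng)) - rho)
    for _ in range(reps)
])

mc_var = z.var()              # Monte Carlo variance of sqrt(T)*(rho_hat - rho)
asy_var = 1.0 - rho**2        # asymptotic variance from the derivation
```

With these settings `mc_var` should be close to `asy_var`, up to simulation noise and a small finite-sample bias.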

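Problem 6: Consider the AR(1) model of the previous exercise but suppose instead that $e_t \sim N(0, \sigma^2)$. The conditional density for observation t is therefore

$$\log f(y_t\mid y_{t-1};\rho,\sigma^2) = -\frac{1}{2}\log(2\pi) - \frac{1}{2}\log(\sigma^2) - \frac{(y_t - \rho\, y_{t-1})^2}{2\sigma^2}$$

Let $(\hat\rho, \hat\sigma^2)$ be the unrestricted MLE estimate of $\theta = (\rho, \sigma^2)'$ and let $(\tilde\rho, \tilde\sigma^2)$ be the restricted MLE estimate subject to the constraint $R\rho = c$, where R and c are known constants. Also, let

$$\hat\Sigma = \begin{pmatrix} \dfrac{1}{\hat\sigma^2}\dfrac{1}{T}\sum_{t=2}^{T} y_{t-1}^2 & 0 \\ 0 & \dfrac{1}{2(\hat\sigma^2)^2} \end{pmatrix}; \qquad \tilde\Sigma = \begin{pmatrix} \dfrac{1}{\tilde\sigma^2}\dfrac{1}{T}\sum_{t=2}^{T} y_{t-1}^2 & 0 \\ 0 & \dfrac{1}{2(\tilde\sigma^2)^2} \end{pmatrix}$$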

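(a) Verify that $\hat\rho$ minimizes the sum of squared residuals so that it is the same as the OLS estimator.

This problem is a lot simpler if we proceed with the conditional likelihood, which is

$$L(\theta) = -\frac{T-1}{2}\log(2\pi) - \frac{T-1}{2}\log(\sigma^2) - \sum_{t=2}^{T}\frac{(y_t - \rho\, y_{t-1})^2}{2\sigma^2}$$

and can be viewed as a QMLE problem. The first order conditions are,

$$\frac{\partial L}{\partial\rho} = \frac{\sum_{t=2}^{T}(y_t - \rho\, y_{t-1})\,y_{t-1}}{\sigma^2} = 0 \quad\Rightarrow\quad \hat\rho = \frac{\sum_{t=2}^{T} y_t\,y_{t-1}}{\sum_{t=2}^{T} y_{t-1}^2},$$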


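which is the OLS estimator.

(b) Verify that $\tilde\rho$ minimizes the sum of squared residuals subject to $R\rho = c$ so that it is the restricted LS estimator.

The restricted likelihood is very simple and takes the form,

$$\tilde L(\theta) = -\frac{T-1}{2}\log(2\pi) - \frac{T-1}{2}\log(\sigma^2) - \sum_{t=2}^{T}\frac{(y_t - \rho\, y_{t-1})^2}{2\sigma^2} - \frac{\lambda}{\sigma^2}(R\rho - c)$$

where the Lagrange multiplier $\lambda$ is rescaled by $\sigma^2$ for convenience and without loss of generality. The first order conditions are,

$$\frac{\partial\tilde L}{\partial\rho} = \frac{\sum_{t=2}^{T}(y_t - \tilde\rho\, y_{t-1})\,y_{t-1}}{\sigma^2} - \frac{\lambda R}{\sigma^2} = 0$$

from which

$$\tilde\rho = \hat\rho - \frac{\lambda R}{\sum_{t=2}^{T} y_{t-1}^2}$$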

Of course, in this example the constraint can be trivially imposed in the problem and

it would not require any estimation. The restricted least squares estimator would be

calculated by minimizing,

$$\min_{\rho,\,\lambda}\ \sum_{t=2}^{T}(y_t - \rho\, y_{t-1})^2 + 2\lambda(R\rho - c)$$

whose first order conditions can be shown to coincide with those calculated via

restricted maximum likelihood.

(c) What assumptions did you make on $f(y_1\mid\rho,\sigma^2)$ assuming $y_0$ is unobserved? Discuss the importance of any simplifying assumptions and the importance that the parameter $\rho$ has if its value were unrestricted.

We have been using the conditional likelihood for simplicity. However, if we had used the exact likelihood, the sensible assumption regarding $f(y_1\mid\rho,\sigma^2)$ is that it is


distributed $N\left(0, \sigma^2/(1-\rho^2)\right)$. Note, however, that the exact likelihood is only valid if $|\rho| < 1$; otherwise, the log-likelihood becomes unbounded for $\rho = 1$.

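(d) Let

$$Q_T(\theta) = \frac{1}{T}\sum_{t=2}^{T}\log f(y_t\mid y_{t-1};\rho,\sigma^2)$$

Show that

$$Q_T(\hat\theta) = -\frac{1}{2}\log(2\pi) - \frac{1}{2} - \frac{1}{2}\log\left(\frac{SSR_U}{T}\right)$$

$$Q_T(\tilde\theta) = -\frac{1}{2}\log(2\pi) - \frac{1}{2} - \frac{1}{2}\log\left(\frac{SSR_R}{T}\right)$$

where $SSR_U = \sum_{t=2}^{T}(y_t - \hat\rho\, y_{t-1})^2$ and $SSR_R = \sum_{t=2}^{T}(y_t - \tilde\rho\, y_{t-1})^2$. Hint: Show that $\hat\sigma^2 = SSR_U/T$ and $\tilde\sigma^2 = SSR_R/T$.

To show $\hat\sigma^2 = SSR_U/T$ and $\tilde\sigma^2 = SSR_R/T$, all that you need is to compute the first order conditions of the likelihood and the restricted likelihood with respect to $\sigma^2$. Substitution of $\hat\sigma^2 = SSR_U/T$ and $\tilde\sigma^2 = SSR_R/T$ into $Q_T(\theta)$ trivially delivers the two expressions for $Q_T(\hat\theta)$ and $Q_T(\tilde\theta)$.

(e) Verify that the $\hat\Sigma$ given above, although not the same as $-\frac{1}{T}\sum_{t=2}^{T} H(y_t;\hat\theta)$, is consistent for $-E[H(y_t;\theta_0)]$. Verify that $\tilde\Sigma$, although not the same as $-\frac{1}{T}\sum_{t=2}^{T} H(y_t;\tilde\theta)$, is consistent for $-E[H(y_t;\theta_0)]$. Hint: You may assume that $\hat\theta$ is consistent for $\theta_0$ and $\tilde\theta$ is consistent for $\theta_0$ under the null. This should make proving consistency of $\hat\sigma^2$ and $\tilde\sigma^2$ easier.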

The easiest approach is to calculate the hessian for the conditional and the restricted

likelihoods. Using the hint, it then becomes trivial to show that both


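$$-\frac{1}{T}\sum_{t=2}^{T} H(y_t;\hat\theta) \xrightarrow{p} -E[H(y_t;\theta_0)] \quad\text{and}\quad -\frac{1}{T}\sum_{t=2}^{T} H(y_t;\tilde\theta) \xrightarrow{p} -E[H(y_t;\theta_0)]$$

and

$$\hat\Sigma \xrightarrow{p} -E[H(y_t;\theta_0)] \quad\text{and}\quad \tilde\Sigma \xrightarrow{p} -E[H(y_t;\theta_0)]$$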

~

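(f) Show that the Wald, LM, and LR statistics, using $\hat\Sigma$ and $\tilde\Sigma$, can be written as

$$W = T\,\frac{(R\hat\rho - c)^2}{R^2\left(\sum_{t=2}^{T} y_{t-1}^2\right)^{-1} SSR_U}$$

$$LR = T\left[\log\left(\frac{SSR_R}{T}\right) - \log\left(\frac{SSR_U}{T}\right)\right]$$

$$LM = T\,\frac{(Y_t - \tilde\rho\, Y_{t-1})'\,Y_{t-1}\,(Y_{t-1}'Y_{t-1})^{-1}\,Y_{t-1}'\,(Y_t - \tilde\rho\, Y_{t-1})}{SSR_R}$$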

No secrets here, just direct application of the formulas.

(g) Show that these three statistics can also be written as

$$W = T\,\frac{SSR_R - SSR_U}{SSR_U}$$

$$LR = T\log\left(\frac{SSR_R}{SSR_U}\right)$$

$$LM = T\,\frac{SSR_R - SSR_U}{SSR_R}$$

This involves simple algebraic manipulations.
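A short numerical sketch can make the algebra in (f) and (g) concrete. It computes the three statistics from the two sums of squared residuals for an AR(1) without constant, taking R = 1 so that the restriction is simply $\rho = c$. The function name `trinity` and the simulated data are hypothetical.

```python
import numpy as np

def trinity(y, rho_restricted):
    """Wald, LR and LM statistics for H0: rho = rho_restricted (R = 1)."""
    y_lag, y_cur = y[:-1], y[1:]
    T = len(y)                                   # sums run over t = 2..T
    rho_hat = (y_cur @ y_lag) / (y_lag @ y_lag)  # unrestricted (OLS) estimate
    ssr_u = np.sum((y_cur - rho_hat * y_lag) ** 2)
    ssr_r = np.sum((y_cur - rho_restricted * y_lag) ** 2)
    wald = T * (ssr_r - ssr_u) / ssr_u
    lr = T * np.log(ssr_r / ssr_u)
    lm = T * (ssr_r - ssr_u) / ssr_r
    return wald, lr, lm

# Hypothetical data: an AR(1) with rho = 0.5, tested against H0: rho = 0.4.
rng = np.random.default_rng(1)
y = np.zeros(300)
for t in range(1, 300):
    y[t] = 0.5 * y[t - 1] + rng.normal()

wald, lr, lm = trinity(y, 0.4)

# The Wald form in (f), written out directly, for comparison with (g).
y_lag, y_cur = y[:-1], y[1:]
rho_hat = (y_cur @ y_lag) / (y_lag @ y_lag)
ssr_u = np.sum((y_cur - rho_hat * y_lag) ** 2)
wald_f = len(y) * (rho_hat - 0.4) ** 2 * (y_lag @ y_lag) / ssr_u
```

Because $SSR_R - SSR_U = (\hat\rho - \tilde\rho)^2\sum y_{t-1}^2$, the two Wald expressions coincide, and the familiar ordering $W \ge LR \ge LM$ follows from $x/(1+x) \le \log(1+x) \le x$ for $x \ge 0$.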


Problem 7: Consider the stochastic process {xt} which describes the number of trades per

interval of time of a particular stock. Thus, xt is integer-valued and non-negative. The

Poisson distribution is commonly used to describe this type of process. Its density has the

form:

$$f(x_t = j\mid\Omega_{t-1};\theta) = \frac{e^{-\lambda_t}\lambda_t^{\,j}}{j!}$$

with conditional mean

$$E(x_t\mid x_{t-1},\ldots) = \lambda_t = \exp(\alpha + \beta\, x_{t-1})$$

Answer the following questions:

(a) Under what parametric restrictions of $\alpha$ and $\beta$ will $\{x_t\}$ be stationary?

This is a tricky question since we have a specification for the conditional mean directly rather than for $\{x_t\}$. However, it can be shown that the model is explosive for $\beta > 0$, where $\beta$ is the coefficient on $x_{t-1}$ in the conditional mean.

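(b) Write down the log-likelihood function for this problem conditional on $x_1$.

$$L(\theta) = \sum_{t=2}^{T}\left[x_t(\alpha + \beta\, x_{t-1}) - \exp(\alpha + \beta\, x_{t-1}) - \ln x_t!\right]$$

(c) Compute the first order conditions and obtain the estimators for $\alpha$ and $\beta$.

$$\frac{\partial L}{\partial\alpha} = \sum_{t=2}^{T}\left[x_t - \exp(\alpha + \beta\, x_{t-1})\right] = 0$$

$$\frac{\partial L}{\partial\beta} = \sum_{t=2}^{T} x_{t-1}\left[x_t - \exp(\alpha + \beta\, x_{t-1})\right] = 0$$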


(d) Suppose $x_t$ is not Poisson distributed. Are $\alpha$ and $\beta$ consistently estimated by the conditional log-likelihood in (b)?

The Poisson distribution is an example of a linear exponential family, whose QMLE properties have been established by Gourieroux, Monfort and Trognon (1984). Check Cameron’s (1998) book for the references.

(e) Compute the one-step ahead forecast xt+1|t. Next, describe the Monte Carlo

exercise that would allow you to compute the two-step ahead forecast. Finally,

describe what approach would you take if the data were not Poisson distributed

but you wanted to produce multi-step ahead forecasts with the conditional mean

estimated in (b).

The one-step-ahead forecast $x_{t+1|t}$ is $\lambda_{t+1} = \exp(\alpha + \beta\, x_t)$.

To compute the two-step-ahead forecast note that we need to compute $E(x_{t+2}\mid x_t)$. To do this, we will draw from a Poisson distribution with mean parameter $\lambda_{t+1} = \exp(\alpha + \beta\, x_t)$. Suppose you draw n times from this Poisson; you will have n values $\tilde x_{t+1}^{\,i}$, $i = 1,\ldots,n$, which you can then plug into the expression of the conditional mean to compute the forecast as:

$$x_{t+2|t} = \frac{1}{n}\sum_{i=1}^{n}\exp\left(\alpha + \beta\,\tilde x_{t+1}^{\,i}\right)$$

Computing the h-step-ahead forecast would consist of drawing n times from a Poisson distribution whose conditional mean is given by the (h – 1)-step-ahead forecast and calculating the average as is done above.

Note that as long as the conditional mean is correctly specified, multi-step ahead

forecasts computed as described above would be consistent (albeit not efficient if the

distribution is not Poisson).
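The Monte Carlo step for the two-step-ahead forecast can be sketched as follows. The parameter names `alpha` and `beta` and their values are hypothetical, chosen only for illustration. For a Poisson variable the expectation $E[\exp(\beta X)] = \exp(\lambda(e^{\beta}-1))$ is available in closed form, which gives a convenient check on the simulation in this special case.

```python
import numpy as np

def one_step(alpha, beta, x_t):
    """One-step-ahead forecast: the conditional mean exp(alpha + beta*x_t)."""
    return np.exp(alpha + beta * x_t)

def two_step_mc(alpha, beta, x_t, n, rng):
    """Two-step-ahead forecast by simulating n Poisson draws of x_{t+1}."""
    lam_next = one_step(alpha, beta, x_t)
    draws = rng.poisson(lam_next, size=n)        # simulated values of x_{t+1}
    return np.mean(np.exp(alpha + beta * draws))

def two_step_exact(alpha, beta, x_t):
    """Closed form via the Poisson moment generating function (Poisson case only)."""
    lam_next = one_step(alpha, beta, x_t)
    return np.exp(alpha + lam_next * (np.exp(beta) - 1.0))

rng = np.random.default_rng(7)
alpha, beta, x_t = 0.5, -0.2, 3                  # hypothetical values
f_mc = two_step_mc(alpha, beta, x_t, 200_000, rng)
f_exact = two_step_exact(alpha, beta, x_t)
```

If the data were not Poisson, the closed form would no longer apply, and the draws of $x_{t+1}$ would have to come from whatever distribution is assumed around the same conditional mean.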

Problem 8: Let {yt} be a stationary zero-mean time series. Define

$$x_t = y_t - 0.4\,y_{t-1}$$

$$w_t = y_t - 2.5\,y_{t-1}$$

(a) Express the autocovariance functions for {xt} and {wt} in terms of the

autocovariance function of {yt}. (Note: there is no assumption that {yt} is white

noise, only covariance stationary).


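Solution:

$$\gamma_0^x = E(x_t^2) = \gamma_0^y\left(1 + \frac{1}{2.5^2}\right) - \frac{2}{2.5}\,\gamma_1^y \quad\Rightarrow\quad 2.5^2\,\gamma_0^x = \gamma_0^w = \gamma_0^y\left(2.5^2 + 1\right) - 2(2.5)\,\gamma_1^y$$

$$\gamma_j^x = \left(1 + \frac{1}{2.5^2}\right)\gamma_j^y - 0.4\left(\gamma_{j+1}^y + \gamma_{j-1}^y\right) \quad\Rightarrow\quad 2.5^2\,\gamma_j^x = \gamma_j^w = \left(2.5^2 + 1\right)\gamma_j^y - 2.5\left(\gamma_{j+1}^y + \gamma_{j-1}^y\right)$$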

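(b) Show that $\{x_t\}$ and $\{w_t\}$ have the same autocorrelation functions.

Solution:

From (a),

$$\rho_j^x = \frac{\gamma_j^x}{\gamma_0^x} = \frac{2.5^2\,\gamma_j^x}{2.5^2\,\gamma_0^x} = \frac{\gamma_j^w}{\gamma_0^w} = \rho_j^w$$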
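The equality of the two autocorrelation functions in Problem 8 can be verified numerically for any particular autocovariance function of $\{y_t\}$. The sketch below uses a hypothetical choice, $\gamma_j^y = 0.7^{|j|}$, purely for illustration; the helper name `filtered_acov` is also hypothetical.

```python
import numpy as np

def filtered_acov(gamma_y, a, max_lag):
    """Autocovariances of v_t = y_t - a*y_{t-1}, given the function gamma_y(j)."""
    return np.array([
        (1.0 + a**2) * gamma_y(j) - a * (gamma_y(j + 1) + gamma_y(j - 1))
        for j in range(max_lag + 1)
    ])

def gamma_y(j):
    """Hypothetical autocovariance function of y_t (geometric decay)."""
    return 0.7 ** abs(j)

gx = filtered_acov(gamma_y, 0.4, 10)   # x_t = y_t - 0.4*y_{t-1}
gw = filtered_acov(gamma_y, 2.5, 10)   # w_t = y_t - 2.5*y_{t-1}

acf_x = gx / gx[0]
acf_w = gw / gw[0]
```

The autocovariances of $w_t$ are $2.5^2$ times those of $x_t$ at every lag, so the scale factor cancels in the ratio and the autocorrelations agree.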

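(c) Show that the process $u_t = -\sum_{j=1}^{\infty} 0.4^j\,x_{t+j}$ satisfies the difference equation

$$u_t - 2.5\,u_{t-1} = x_t$$

Solution:

Notice that $(1 - 2.5L)$ is non-invertible. However

$$\frac{1}{1-2.5L} = \frac{-0.4L^{-1}}{-0.4L^{-1}(1-2.5L)} = \frac{-0.4L^{-1}}{1-0.4L^{-1}} = -0.4L^{-1}\left(1 + 0.4L^{-1} + 0.4^2L^{-2} + \ldots\right) = -0.4L^{-1} - 0.4^2L^{-2} - \ldots$$

Hence,

$$u_t = \frac{x_t}{1-2.5L} = -0.4\,x_{t+1} - 0.4^2\,x_{t+2} - \ldots = -\sum_{j=1}^{\infty} 0.4^j\,x_{t+j}$$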


Problem 9: Find the largest values for $|\rho_1|$ and $|\rho_2|$ for an MA(2) model.

Solution:

Notice that for an MA(2)

$$\rho_1 = \frac{\theta_1(1+\theta_2)}{1+\theta_1^2+\theta_2^2}; \qquad \rho_2 = \frac{\theta_2}{1+\theta_1^2+\theta_2^2}$$

Maximizing $\rho_1$ with respect to $\theta_1$ and $\theta_2$ delivers $\theta_1 = \sqrt{2}$ and $\theta_2 = 1$, so that the maximum first order autocorrelation is $\sqrt{2}/2$. Similarly, the maximum second order autocorrelation is achieved for $\theta_1 = 0$ and $\theta_2 = 1$, giving a maximum second order autocorrelation of ½.
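These maxima can be confirmed with a simple grid search over $(\theta_1, \theta_2)$. The grid range and spacing below are arbitrary choices for illustration, and the MA(2) is written with plus signs on the lagged shocks, which is the convention the formulas above imply.

```python
import numpy as np

def ma2_acf(theta1, theta2):
    """First and second autocorrelations of an MA(2) with coefficients theta1, theta2."""
    denom = 1.0 + theta1**2 + theta2**2
    return theta1 * (1.0 + theta2) / denom, theta2 / denom

# Evaluate both autocorrelations on a grid with spacing 0.01.
grid = np.linspace(-3.0, 3.0, 601)
t1, t2 = np.meshgrid(grid, grid, indexing="ij")
rho1, rho2 = ma2_acf(t1, t2)

max_rho1 = np.abs(rho1).max()   # compare with sqrt(2)/2
max_rho2 = np.abs(rho2).max()   # compare with 1/2
```

The grid maximum of $|\rho_1|$ falls slightly short of $\sqrt{2}/2$ only because $\sqrt{2}$ is not a grid point.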

Problem 10: Consider an AR(1) process, such as

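$$y_t = c + \rho\, y_{t-1} + \varepsilon_t$$

where the process started at t = 0 with the initial value $y_0$. Suppose $|\rho| < 1$ and that $y_0$ is uncorrelated with the subsequent white noise process $(\varepsilon_1, \varepsilon_2, \ldots)$.

(a) Write the variance of $y_t$ as a function of $\sigma^2$ (= $V(\varepsilon)$), $V(y_0)$, and $\rho$. Hint: by successive substitution show

$$y_t = (1 + \rho + \rho^2 + \ldots + \rho^{t-1})\,c + \varepsilon_t + \rho\,\varepsilon_{t-1} + \ldots + \rho^{t-1}\varepsilon_1 + \rho^t y_0$$

Solution:

Using the hint,

$$V(y_t) = \left(1 + \rho^2 + \ldots + \rho^{2(t-1)}\right)\sigma^2 + \rho^{2t}V(y_0) = \frac{1-\rho^{2t}}{1-\rho^2}\,\sigma^2 + \rho^{2t}V(y_0)$$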

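(b) Let $\mu$ and $\{\gamma_j\}$ be the mean and the autocovariances of the covariance stationary AR(1) process, so that

$$\mu = \frac{c}{1-\rho}; \qquad \gamma_j = \frac{\rho^j\sigma^2}{1-\rho^2}$$

Show that:

(i) $\lim_{t\to\infty} E(y_t) = \mu$

(ii) $\lim_{t\to\infty} V(y_t) = \gamma_0$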


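(iii) $\lim_{t\to\infty} COV(y_t, y_{t-j}) = \gamma_j$

Solution:

$$\lim_{t\to\infty} E(y_t) = \lim_{t\to\infty} E\left[\frac{1-\rho^t}{1-\rho}\,c + \frac{1-\rho^tL^t}{1-\rho L}\,\varepsilon_t + \rho^t y_0\right] = \frac{c}{1-\rho} = \mu$$

$$\lim_{t\to\infty} V(y_t) = \lim_{t\to\infty}\left[\frac{1-\rho^{2t}}{1-\rho^2}\,\sigma^2 + \rho^{2t}V(y_0)\right] = \frac{\sigma^2}{1-\rho^2} = \gamma_0$$

$$\lim_{t\to\infty} COV(y_t, y_{t-j}) = \lim_{t\to\infty}\rho^j\left[\frac{1-\rho^{2(t-j)}}{1-\rho^2}\,\sigma^2 + \rho^{2(t-j)}V(y_0)\right] = \frac{\rho^j\sigma^2}{1-\rho^2} = \gamma_j$$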
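The convergence of $V(y_t)$ to $\gamma_0$ in Problem 10 can be illustrated with a few lines of code that compare the closed form from part (a) with the recursion $V(y_t) = \rho^2 V(y_{t-1}) + \sigma^2$. The parameter values are hypothetical and the function names are chosen only for this sketch.

```python
def var_yt(t, rho, sigma2, v0):
    """Closed form: V(y_t) = (1 - rho^(2t))/(1 - rho^2)*sigma2 + rho^(2t)*V(y_0)."""
    return (1.0 - rho ** (2 * t)) / (1.0 - rho**2) * sigma2 + rho ** (2 * t) * v0

def var_yt_recursive(t, rho, sigma2, v0):
    """Same variance built up one period at a time from V(y_0)."""
    v = v0
    for _ in range(t):
        v = rho**2 * v + sigma2
    return v

rho, sigma2, v0 = 0.8, 1.0, 5.0        # hypothetical values
gamma0 = sigma2 / (1.0 - rho**2)       # stationary variance

v_closed = var_yt(10, rho, sigma2, v0)
v_recursive = var_yt_recursive(10, rho, sigma2, v0)
v_far = var_yt(500, rho, sigma2, v0)   # effectively the limit
```

The influence of the initial variance $V(y_0)$ decays at rate $\rho^{2t}$, so `v_far` is indistinguishable from `gamma0` whatever starting value is used.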
