Acceptance Testing - Computing and Software


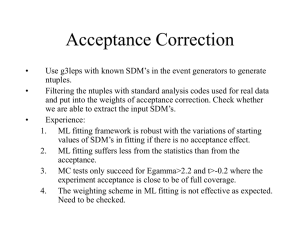

[Figure: V Lifecycle Model]

[Figure: Waterfall Model]

Definition
• Acceptance testing consists of comparing a software system to its initial requirements and to the current needs of its end-users or, in the case of a contracted program, to the original contract [Meyers 1979].

Purpose
• Other testing:
  – the intent is principally to reveal errors.
• Acceptance testing:
  – a crucial step that decides the fate of a software system;
  – its outcome gives customers an indication of quality, which they use to decide whether to accept or reject the software product.

Goal & Guide
• Goals:
  1. verify the man-machine interactions;
  2. validate the required functionality of the system;
  3. verify that the system operates within the specified constraints;
  4. check the system’s external interfaces.
• The major guide: the system requirements document.
• The primary focus: usability and reliability [Hetzel 1984].

Categories of AT
Four categories emerged from the survey:
• Traceability methods
• Formal methods
• Prototyping methods
• Other methods

Traceability methods
• Traceability methods work by creating a cross reference between requirements and acceptance test cases, establishing relationships between test cases and requirements.
• This is a straightforward way to match test cases to requirements. It:
  – allows testers to easily check which requirements have not yet been tested;
  – shows the customer which requirement is exercised by which test case(s).
• However, each cross reference is created subjectively by the tester.

Traceability Methods – cont.
• Make the software requirements specification (SRS) traceable by tagging requirements:
  – number every paragraph and include only one requirement per paragraph;
  – reference each requirement by paragraph and sentence number.
• Use a database to automate the traceability task:
  – a matrix or checklist ensures there is a test covering each requirement;
  – keep track of when tests are completed or have failed.
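The traceability matrix described above can be sketched as a small in-memory structure. This is a minimal sketch, not any of the tools named in these slides: it assumes requirements are tagged by paragraph and sentence number (e.g. "3.1.2"), and the class name `TraceabilityMatrix`, its methods, and the test IDs are hypothetical. In practice the same cross reference would live in a database.

```python
from dataclasses import dataclass, field


@dataclass
class TraceabilityMatrix:
    """Hypothetical cross reference between tagged requirements and acceptance test cases."""
    requirements: set[str]                                    # requirement tags, e.g. "3.1.2"
    links: dict[str, set[str]] = field(default_factory=dict)  # test case -> requirement tags
    results: dict[str, str] = field(default_factory=dict)     # test case -> "passed" / "failed"

    def link(self, test_id: str, *req_tags: str) -> None:
        """Record that test_id exercises the given requirements (tags must exist in the SRS)."""
        unknown = set(req_tags) - self.requirements
        if unknown:
            raise ValueError(f"unknown requirement tags: {sorted(unknown)}")
        self.links.setdefault(test_id, set()).update(req_tags)

    def tests_for(self, req_tag: str) -> list[str]:
        """Which test case(s) exercise a given requirement."""
        return sorted(t for t, reqs in self.links.items() if req_tag in reqs)

    def untested(self) -> list[str]:
        """Requirements not yet covered by any test case."""
        covered = set().union(*self.links.values())
        return sorted(self.requirements - covered)

    def record(self, test_id: str, passed: bool) -> None:
        """Track whether a linked test case has passed or failed."""
        if test_id not in self.links:
            raise KeyError(f"test case {test_id!r} is not linked to any requirement")
        self.results[test_id] = "passed" if passed else "failed"


if __name__ == "__main__":
    matrix = TraceabilityMatrix({"3.1.1", "3.1.2", "3.2.1"})
    matrix.link("AT-01", "3.1.1")
    matrix.link("AT-02", "3.1.1", "3.2.1")
    matrix.record("AT-01", passed=True)
    print(matrix.untested())          # ['3.1.2']
    print(matrix.tests_for("3.1.1"))  # ['AT-01', 'AT-02']
```

Rejecting unknown tags in `link` keeps the matrix honest: a test case can only point at requirements that actually exist in the tagged SRS. Note that the subjectivity problem remains, since the tester still decides which tags each test case exercises.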
[Figure: Traceability Methods]

Traceability Methods – samples
• TEM – Test Evaluation Matrix [Krause & Diamant 1978]
  – The TEM shows the traceability from requirements to design to the test procedures allocated to verify the requirements.
    • Allocate requirements to 3 test levels.
    • Select test scenarios that satisfy the test objectives and exercise the requirements at that level.
    • Generate test cases from the test scenarios by selecting a target configuration.

Traceability Methods – samples
• REQUEST – Requirements QUEry and Specification Tool [Wardle 1991]
  – The REQUEST tool automates much of the routine information handling involved in providing traceability.
  – It is a database tool consisting of CRD, TRD and VCRI.
  – It ensures there is a test covering each requirement.

Traceability Methods – samples
• SVD – System Verification Diagram [Deutsch 1982]
  – The SVD tool connects test procedures with requirements.
    • An SVD contains threads.
    • Threads contain stimuli and elements.
    • Elements represent the functions associated with a requirement.
    • The accumulation of threads forms the acceptance test.

Formal methods
• Formal methods apply mathematical modeling to test case generation. They include the application of formal languages, finite state machines, various graphing methods, and others to facilitate the generation of test cases for acceptance testing.

Example
• A Requirements Language Processor (RLP) produces a finite state machine (FSM) model whose external behavior matches that of the specified system. Tests generated from the FSM are then used to test the actual system.

[Figure: RLP & FSM example method]

Prototyping methods
• Prototyping methods begin by developing a prototype of the target system. The prototype is then evaluated to see whether it satisfies the requirements. Once it does, the outputs of the prototype become the expected outputs of the final system.
• Prototypes are therefore used as test oracles for acceptance testing.
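The RLP itself is not described in detail here, but the step "tests generated from the FSM are then used to test the actual system" can be sketched. This is a minimal sketch under stated assumptions: the model is a Mealy-style machine given as a dictionary `(state, event) -> (next_state, expected_output)`, the test criterion is transition coverage (one test per reachable transition), and the function names and the card-reader machine are hypothetical, not the RLP's actual output format.

```python
from collections import deque

# Hypothetical FSM model format: (state, event) -> (next_state, expected_output)
FSM = dict[tuple[str, str], tuple[str, str]]


def shortest_prefixes(fsm: FSM, initial: str) -> dict[str, list[str]]:
    """Breadth-first search: shortest event sequence reaching each reachable state."""
    prefix: dict[str, list[str]] = {initial: []}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        for (src, event), (dst, _) in sorted(fsm.items()):
            if src == state and dst not in prefix:
                prefix[dst] = prefix[state] + [event]
                queue.append(dst)
    return prefix


def run_model(fsm: FSM, initial: str, events: list[str]) -> list[str]:
    """Feed events to the model and collect the outputs it predicts."""
    state, outputs = initial, []
    for event in events:
        state, output = fsm[(state, event)]
        outputs.append(output)
    return outputs


def transition_tests(fsm: FSM, initial: str) -> list[tuple[list[str], list[str]]]:
    """One test per reachable transition: drive the model to the transition's source
    state, fire the transition, and take the expected outputs from the model."""
    prefix = shortest_prefixes(fsm, initial)
    tests = []
    for src, event in sorted(fsm):
        if src in prefix:  # transitions unreachable from `initial` cannot be exercised
            events = prefix[src] + [event]
            tests.append((events, run_model(fsm, initial, events)))
    return tests


def failed_tests(tests, system):
    """Run each event sequence on the real system (a callable) and keep mismatches."""
    return [(events, expected) for events, expected in tests if system(events) != expected]


if __name__ == "__main__":
    # Hypothetical card-reader model, for illustration only
    atm = {
        ("idle", "insert_card"): ("card_in", "prompt_pin"),
        ("card_in", "valid_pin"): ("ready", "show_menu"),
        ("card_in", "invalid_pin"): ("idle", "eject_card"),
        ("ready", "withdraw"): ("idle", "dispense_cash"),
    }
    for events, expected in transition_tests(atm, "idle"):
        print(events, "->", expected)
```

Here the model plays the same role a prototype plays in the prototyping methods: it is the oracle that supplies the expected outputs, and `failed_tests` compares the implementation against it.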
[Figure: Prototype Methodology]

Prototype Methodology
• Advantage: fewer function-mismatch errors are found in the final test stage, which saves considerable cost and time.
• The difficulty of generating test data for the system acceptance test can be greatly reduced.

Other Methods
• Empirical data: an acceptance testing method based on empirical data about the behavior of the program’s internal states.
  – Define test-bits and combine them into a pattern that reflects the internal state.
  – Collect empirical data with an oracle that stores the values of test-bit patterns in the form of a distribution.
  – Compare the observed pattern to the data stored in the distribution.

Other Methods – cont.
• Structured analysis (SA) methods use SA techniques (entity-relationship diagrams, data flow diagrams, state transition diagrams, etc.) to aid the generation of test plans.

Other Methods – cont.
• Simulation is more a tool for acceptance testing than a stand-alone method, since it is not used to develop test cases. It is used for testing real-time systems and systems for which “real” tests are not practical.

Special characteristics of UAT
• Test objective: an identified set of software features or components to be evaluated under specified conditions by comparing the actual behavior with the specified behavior described in the software requirements.
• Test criteria: used to determine the correctness of the software component under test.
• Test strategy: the method for generating test cases based on formally or informally defined criteria of test completeness.
• Test oracle: any program, process, or body of data that specifies the expected outcome of a set of tests.
• Test tool

Major problems of ad hoc testing
• Test plan generation relies on an understanding of the software system.
• There is no formal test model representing the complete external behavior of the system from the user’s perspective.
• There is no methodology for producing all the different usage patterns that exercise the external behavior of the system.
• Rigorous acceptance criteria are lacking.
• There are no techniques for verifying the correctness, consistency, and completeness of the test cases.
• There are no methods for checking whether the acceptance test plan matches the given requirements.

Reference
• Pei Hsia and David Kung. Software Requirements and Acceptance Testing. Annals of Software Engineering 3 (1997) 291-317.

Strategies for UAT
• Behavior (scenario) based acceptance test
• Black-box approach for UAT
• Operation-based test strategy

Definition
• A scenario is a concrete system usage example consisting of an ordered sequence of events that accomplishes a given functional requirement of the system desired by the customer/user.
• A scenario schema is a template of scenarios consisting of the same ordered sequence of event types and accomplishing a given functional requirement of a system.
  – A scenario instance is an instantiation of a scenario schema.

Scenario-based Acceptance Test
• A new acceptance test model: a formal scenario model to capture and represent the external behavior of a software system.
• Discusses acceptance test errors, test criteria, and test generation.

Scenario-based AT
• The test model consists of three sub-models:
  – a user view: a set of scenario schemas describing the interactive behavior of the user with the system;
  – an external system interface view: represents the behavior of an interface between the system and an external system it interfaces with;
  – an external system view: represents the external view of the system in terms of the user views and the external interface views.

Scenario-based AT Procedure
1. Elicit and specify scenarios:
   • identify the user’s and external system views according to the requirements.
2. Formalize the test model:
   • formalize the scenario trees and construct the scenario-based finite state machines (FSMs);
   • combine the FSMs to form a composite FSM (CFSM).
3. Verify the test model:
   • verify the generated FSMs and CFSMs against their man-machine and external system interfaces.
4. Generate test cases to achieve a selected test criterion.
5. Execute the test cases.

Scenario-based AT – cont.
• Scenario tree: T(V) = (N, E, L)
  – For a user view V, the tree consists of a finite set of nodes N, a finite set of edges E, and a finite set of edge labels L.
  – The node set consists of the states of the system as perceived by the user.
  – A label l∈L on an edge e∈E between nodes N1, N2∈N shows that the state of the system changed from N1 to N2 due to an occurrence of event type l.

Benefit
• Its ability to let users, together with the requirements analysts, specify and generate acceptance test cases through a systematic approach.

Black-box approach for UAT
• Source of test cases: the functional or external requirements specification of the system.
• Use a functional test matrix to select a minimal set of test cases that covers the system functions.

Black-box approach – cont.
Steps to select requirements-based test cases:
1. Start with normal test cases from the specification and an identification of system functions.
2. Create a minimal set that exercises all inputs/outputs and covers the list of system functions.
3. Decompose any compound or multiple-condition cases into single-condition cases.
4. Examine the combinations of single-condition cases and add test cases for any plausible functional combinations.
5. Reduce the list by deleting any test cases dominated by others already in the set.

Black-box approach – cont.
• Advantage: reduces the possibility of leaving procedures untested.
• Difficulty: the acceptance criteria are not clearly defined.
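The scenario tree T(V) = (N, E, L) can be sketched as a labelled tree from which candidate test sequences are read off. This is a minimal sketch under two assumptions that are not stated in the slides: each root-to-leaf path corresponds to one scenario schema, and the selected test criterion is to cover every such path. The class `ScenarioTree`, the helper `instantiate`, the node ids, and the ATM event types are all hypothetical; building the scenario-based FSMs and the CFSM is not shown.

```python
from dataclasses import dataclass, field


@dataclass
class ScenarioTree:
    """Hypothetical sketch of T(V) = (N, E, L) for one user view V.

    Nodes are user-perceived states; node ids must be unique because this is a tree.
    Each edge carries the event-type label that moves the system from parent to child."""
    root: str
    edges: dict[str, list[tuple[str, str]]] = field(default_factory=dict)  # node -> [(label, child)]

    def add_edge(self, parent: str, label: str, child: str) -> None:
        self.edges.setdefault(parent, []).append((label, child))

    def scenario_schemas(self) -> list[list[str]]:
        """Event-type sequence along every root-to-leaf path (depth-first order)."""
        schemas: list[list[str]] = []

        def walk(node: str, labels: list[str]) -> None:
            children = self.edges.get(node, [])
            if not children:  # leaf: one complete path
                schemas.append(labels)
            for label, child in children:
                walk(child, labels + [label])

        walk(self.root, [])
        return schemas


def instantiate(schema: list[str], concrete: dict[str, str]) -> list[str]:
    """Turn a scenario schema into a scenario instance by choosing one concrete event per event type."""
    return [concrete[event_type] for event_type in schema]


if __name__ == "__main__":
    # Hypothetical user view: a bank customer at an ATM
    tree = ScenarioTree("s0")
    tree.add_edge("s0", "insert_card", "s1")
    tree.add_edge("s1", "enter_pin", "s2")
    tree.add_edge("s2", "select_withdraw", "s3")
    tree.add_edge("s3", "enter_amount", "s4")
    tree.add_edge("s2", "select_balance", "s5")
    for schema in tree.scenario_schemas():
        print(schema)
```

The split between `scenario_schemas` and `instantiate` mirrors the schema/instance distinction in the definitions: the tree yields sequences of event types, and a test case needs concrete events chosen for each type.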
Operation-based test
• OBT consists of the following components:
  – test selection based on operational profiles;
  – well-defined acceptance criteria;
  – compliance with ISO 9001 requirements.

Test based on operational profile
• An operation is a major task the system performs.
• An operational profile (OP) is a set of operations and their associated frequencies of occurrence in the expected field environment.
• The operational profile depends on the users of the application.
• Most applications have targeted user classes, and each user class has its own OP.
• Reliability must be checked against each OP.

[Figure: Operational profiles example]

Drive testing by using OP
• Select test cases according to the occurrence probabilities of the operations.
• The amount of testing of an operation is based on its relative frequency of use.
• The most frequently used operations receive the most testing, and less frequently used operations receive less testing.

Criticality
• System operations may be classified by their criticality.
• Assume the criticality of each OP is determined separately.
• Example: a banking application
  – teller: the most critical
  – supervisor: less critical
  – manager: the least critical

Test Selection by Criticality
• The number of test cases for each OP is proportional to its weighted criticality:
  $C'_i = \dfrac{C_i P_i}{\sum_{j=1}^{k} C_j P_j}$
  where $C_i$ is the criticality of OP i, $P_i$ is its occurrence probability, and k is the total number of OPs.

[Figure: Example of test selection based on criticality]

Acceptance Criteria
• The OBT strategy uses the following acceptance criteria:
  1. no critical faults are detected, and
  2. the software reliability is at an acceptable level.

First acceptance criterion
• Let T be a set of acceptance test cases and $t_i \in T$ with the mapping $s_i \xrightarrow{P} s_i'$, where $s_i$ is the input of test $t_i$ and $s_i'$ is the output of applying $s_i$ to program P.
• The requirement of no critical fault implies that $s_i' \notin C$ for every $t_i \in T$,
  – where C is the set of incorrect critical output values as defined by the user.
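The weighted-criticality formula can be turned into a test budget split. This is a minimal sketch: `ops` maps each OP to a pair $(C_i, P_i)$, the function names are hypothetical, the criticality scale (3, 2, 1) and the occurrence probabilities for the banking OPs are made-up numbers, and the largest-remainder rounding is an assumption added so that the per-OP counts are whole numbers that sum to the total budget.

```python
import math


def weighted_criticality(ops: dict[str, tuple[float, float]]) -> dict[str, float]:
    """C'_i = C_i * P_i / sum_j(C_j * P_j); `ops` maps OP name -> (C_i, P_i)."""
    total = sum(c * p for c, p in ops.values())
    return {name: c * p / total for name, (c, p) in ops.items()}


def allocate_tests(ops: dict[str, tuple[float, float]], n_tests: int) -> dict[str, int]:
    """Split n_tests across OPs in proportion to C'_i.

    Rounding uses the largest-remainder method (an assumption, not part of the OBT
    strategy) so that the per-OP counts add up to exactly n_tests."""
    quotas = {name: w * n_tests for name, w in weighted_criticality(ops).items()}
    alloc = {name: math.floor(q) for name, q in quotas.items()}
    leftover = n_tests - sum(alloc.values())
    # Hand the remaining test cases to the OPs with the largest fractional parts
    by_remainder = sorted(quotas, key=lambda name: quotas[name] - alloc[name], reverse=True)
    for name in by_remainder[:leftover]:
        alloc[name] += 1
    return alloc


if __name__ == "__main__":
    # Hypothetical banking OPs: (criticality C_i, occurrence probability P_i)
    banking = {"teller": (3.0, 0.7), "supervisor": (2.0, 0.2), "manager": (1.0, 0.1)}
    print(allocate_tests(banking, 100))  # {'teller': 81, 'supervisor': 15, 'manager': 4}
```

With these illustrative numbers the teller OP, being both the most critical and the most frequent, receives about four fifths of a 100-test budget.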
Second acceptance criterion
(a) The reliability of every OP class is acceptable when $R_i \ge \rho_i$ for all i, where $R_i$ is the estimated reliability of OP i and $\rho_i$ is the acceptable reliability of OP i.
(b) The reliability of the whole application is acceptable when $R_0 \ge \rho_0$, where $R_0$ is the estimated reliability of the whole application and $\rho_0$ is the acceptable reliability of the whole application.

Acceptance decisions
• Accept: no defects are detected during UAT; the user accepts the software deliverable.
• Provisional acceptance: accepted without repair by concession.
• Conditional acceptance: accepted with repair by concession.
• Rework to meet specified requirements: too many faults are found for the whole application, but the reliabilities of the individual operational profiles are acceptable.
• Rejected: too many faults are found in both the whole application and the individual operational profiles; the software deliverable is rejected.

[Figure: Acceptance decisions]

OBT strategy disadvantages
• It requires more analysis up front, as the user needs to determine the different operational profiles.
• Test selection involves more work, as the frequency of occurrence becomes a factor in picking the test cases.

[Figure: Comparing UAT strategies]

Reference
• Pei Hsia and David Kung. Software Requirements and Acceptance Testing. Annals of Software Engineering 3 (1997) 291-317.
• Hareton K.N. Leung and Peter W.L. Wong. A Study of User Acceptance Tests. Software Quality Journal 6 (1997) 137-149.
• P. Hsia, J. Gao, J. Samuel, D. Kung, Y. Toyoshima and C. Chen. Behavior-based Acceptance Testing of Software Systems: A Formal Scenario Approach. Proceedings of the 18th Annual International Computer Software and Applications Conference (COMPSAC), 1994.