Chapter 12


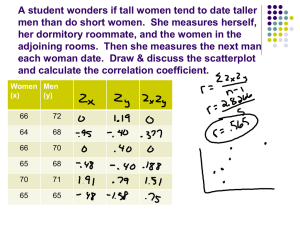

Week 11, November 10-14: Four Mini-Lectures
QMM 510, Fall 2014

Chapter Learning Objectives (Too much?)
LO12-1: Calculate and test a correlation coefficient for significance.
LO12-2: Interpret the slope and intercept of a regression equation.
LO12-3: Make a prediction for a given x value using a regression equation.
LO12-4: Fit a simple regression on an Excel scatter plot.
LO12-5: Calculate and interpret confidence intervals for regression coefficients.
LO12-6: Test hypotheses about the slope and intercept by using t tests.
LO12-7: Perform regression analysis with Excel or other software.
LO12-8: Interpret the standard error, R², ANOVA table, and F test.
LO12-9: Distinguish between confidence and prediction intervals for Y.
LO12-10: Test residuals for violations of regression assumptions.
LO12-11: Identify unusual residuals and high-leverage observations.

Chapter 12: Correlation and Regression

ML 11.1 Visual Displays
• Begin the analysis of bivariate data (i.e., two variables) with a scatter plot.
• A scatter plot:
  - displays each observed data pair (xi, yi) as a dot on an X/Y grid.
  - indicates visually the strength of the relationship between X and Y.
[Figure: Sample Scatter Plot]

Correlation Analysis
[Figure: scatter plots illustrating Strong Positive Correlation, Strong Negative Correlation, Weak Positive Correlation, Weak Negative Correlation, No Correlation, and a Nonlinear Relation]
• The sample correlation coefficient is $r = \frac{\sum (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum (x_i - \bar{x})^2}\,\sqrt{\sum (y_i - \bar{y})^2}}$.
Note: r is an estimate of the population correlation coefficient ρ (rho).

Correlation Analysis: Steps in Testing if ρ = 0 (population correlation = 0)
• Step 1: State the hypotheses.
  H0: ρ = 0
  H1: ρ ≠ 0
• Step 2: Specify the decision rule. For degrees of freedom d.f. = n − 2, look up the two-tailed critical value $t_{\alpha/2}$ in Appendix D or use Excel's =T.INV.2T(α, df).
• Step 3: Calculate the test statistic $t_{calc} = r\sqrt{\frac{n-2}{1-r^2}}$. (Recall that −1 ≤ r ≤ +1, and r = 0 indicates no linear relationship.)
• Step 4: Make the decision. Using the t statistic method, reject H0 if $|t_{calc}| > t_{\alpha/2}$ or if the p-value ≤ α.
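The four steps above can be sketched in a few lines of stdlib-only Python, using the 20 achievement test scores that appear later in this chapter (Quant as X, Verbal as Y). This is an illustrative sketch, not the textbook's procedure; the critical value 2.1009 is assumed from a t table (Excel's =T.INV.2T(0.05, 18)) rather than computed. The sketch also computes the equivalent critical value of r used by the alternative method on the next slide.

```python
import math

# Achievement test scores from the chapter example (X = Quant, Y = Verbal).
quant = [520, 329, 225, 424, 650, 491, 384, 311, 236, 344,
         541, 324, 515, 528, 380, 629, 228, 454, 514, 677]
verbal = [398, 505, 183, 332, 737, 578, 344, 367, 298, 600,
          643, 328, 556, 527, 504, 695, 133, 478, 413, 742]


def pearson_r(x, y):
    """Sample correlation coefficient r from centered sums of products."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


n = len(quant)
r = pearson_r(quant, verbal)

# Step 2: two-tailed critical value for alpha = 0.05 and d.f. = n - 2 = 18,
# assumed from a t table (Excel's =T.INV.2T(0.05, 18) is about 2.1009).
t_crit = 2.1009

# Step 3: test statistic for H0: rho = 0.
t_calc = r * math.sqrt((n - 2) / (1 - r ** 2))

# Alternative method: the equivalent critical value for r itself.
r_crit = t_crit / math.sqrt(t_crit ** 2 + n - 2)

# Step 4: the two decision rules agree by construction.
reject_by_t = abs(t_calc) > t_crit
reject_by_r = abs(r) > r_crit

print(f"r = {r:.4f}, t = {t_calc:.3f} vs t_crit = {t_crit}")
print(f"|r| = {abs(r):.4f} vs r_crit = {r_crit:.4f}")
print(f"reject H0: {reject_by_t} (t rule), {reject_by_r} (r rule)")
```

With r ≈ 0.827 and t ≈ 6.24, both rules reject H0 at α = 0.05. In simple regression this t value equals the t statistic for the slope, so it should match the slope's t Stat in the Excel output later in the chapter.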
Correlation Analysis: Alternative Method to Test for ρ = 0
• Equivalently, you can calculate the critical value for the correlation coefficient using $r_{\alpha} = \frac{t_{\alpha/2}}{\sqrt{t_{\alpha/2}^2 + n - 2}}$ and reject H0 if $|r| > r_{\alpha}$.
[Table: Critical values of r for various sample sizes]
• This method gives a benchmark for the correlation coefficient.
• However, it gives no p-value and is inflexible if you change your mind about α.
• MegaStat uses this method, giving two-tail critical values for α = 0.05 and α = 0.01.

Simple Regression
ML 11.2 What Is Simple Regression?
• Simple regression analyzes the relationship between two variables.
• It specifies one dependent (response) variable and one independent (predictor) variable.
• This hypothesized relationship (in this chapter) will be linear.

[Figures: Interpreting an Estimated Regression Equation; Prediction Using Regression: Examples; Cause-and-Effect? Can We Make Predictions?]

Regression Terminology: Model and Parameters
• The assumed model for a linear relationship is y = β0 + β1x + ε.
• The relationship holds for all pairs (xi, yi).
• The error term ε is not observable; it is assumed to be independently normally distributed with mean 0 and standard deviation σ.
• The unknown parameters are β0 (intercept) and β1 (slope).
• The fitted model (regression model) used to predict the expected value of Y for a given value of X is ŷ = b0 + b1x.
• The fitted coefficients are b0 (estimated intercept) and b1 (estimated slope).

Regression Terminology
A more precise method is to let Excel calculate the estimates. Enter observations on the independent variable x1, x2, ..., xn and the dependent variable y1, y2, ..., yn into separate columns, and let Excel fit the regression equation. Excel chooses the regression coefficients that minimize the sum of squared residuals (the OLS method described below).
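To make the distinction between the unknown parameters β0, β1 and the fitted coefficients b0, b1 concrete, here is a small simulation sketch. The population values (β0 = 10, β1 = 2, σ = 3), the seed, and the x grid are arbitrary assumptions chosen only for illustration. Each sample draws fresh, unobservable errors ε, and the least-squares formulas (given later in the chapter) produce estimates that vary from sample to sample around the true parameters.

```python
import random

# Hypothetical population parameters, chosen only for illustration.
BETA0, BETA1, SIGMA = 10.0, 2.0, 3.0


def ols(x, y):
    """Return (b0, b1) from the least-squares formulas."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


rng = random.Random(12)                 # fixed seed so runs are repeatable
x = [i / 10 for i in range(1, 201)]     # 200 x values: 0.1, 0.2, ..., 20.0

estimates = []
for _ in range(5):
    # Each sample gets fresh, unobservable errors epsilon ~ N(0, SIGMA).
    y = [BETA0 + BETA1 * xi + rng.gauss(0.0, SIGMA) for xi in x]
    estimates.append(ols(x, y))

for b0, b1 in estimates:
    print(f"b0 = {b0:6.3f} (beta0 = {BETA0}), b1 = {b1:.4f} (beta1 = {BETA1})")
```

Every run prints five different (b0, b1) pairs close to (10, 2), which is why the fitted slope and intercept are called statistics.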
Regression Terminology: Example
The scatter plot shows miles per gallon and horsepower for a sample of 15 vehicles (fitted line ŷ = 49.216 − 0.0785x).
• Slope interpretation: The slope of −0.0785 says that for each additional unit of engine horsepower, miles per gallon decreases by 0.0785. This estimated slope is a statistic, because a different sample might yield a different estimate of the slope.
• Intercept interpretation: The intercept value of 49.216 suggests that an engine with no horsepower would have very high fuel efficiency. However, the intercept has little meaning in this case, not only because zero horsepower makes no logical sense, but also because extrapolating to x = 0 goes beyond the range of the observed data.

Ordinary Least Squares (OLS) Formulas: OLS Method
• The ordinary least squares (OLS) method estimates the slope and intercept of the regression line so that the sum of squared residuals is minimized.
• The sum of the residuals is zero: $\sum (y_i - \hat{y}_i) = 0$.
• The sum of the squared residuals is SSE: $SSE = \sum (y_i - \hat{y}_i)^2$.

Ordinary Least Squares (OLS) Formulas: Slope and Intercept
• The OLS estimator for the slope is $b_1 = \frac{\sum (x_i - \bar{x})(y_i - \bar{y})}{\sum (x_i - \bar{x})^2}$. Excel function: =SLOPE(YData, XData)
• The OLS estimator for the intercept is $b_0 = \bar{y} - b_1\bar{x}$. Excel function: =INTERCEPT(YData, XData)
These formulas are built into Excel.

Example: Achievement Test Scores
Achievement exam scores for 20 high school students. Note that verbal scores average higher than quant scores (the slope exceeds 1 and the intercept shifts the line up almost 20 points).

| Obs | Quant (X) | Verbal (Y) |
|---|---|---|
| 1 | 520 | 398 |
| 2 | 329 | 505 |
| 3 | 225 | 183 |
| 4 | 424 | 332 |
| 5 | 650 | 737 |
| 6 | 491 | 578 |
| 7 | 384 | 344 |
| 8 | 311 | 367 |
| 9 | 236 | 298 |
| 10 | 344 | 600 |
| 11 | 541 | 643 |
| 12 | 324 | 328 |
| 13 | 515 | 556 |
| 14 | 528 | 527 |
| 15 | 380 | 504 |
| 16 | 629 | 695 |
| 17 | 228 | 133 |
| 18 | 454 | 478 |
| 19 | 514 | 413 |
| 20 | 677 | 742 |

Ordinary Least Squares (OLS) Formulas: Slope and Intercept for the Example
Applying the OLS formulas to these data gives b1 = 1.0305 and b0 = 19.5925, so ŷ = 19.5925 + 1.0305x.

Assessing Fit
• We want to explain the total variation in Y around its mean, measured by the total sum of squares $SST = \sum (y_i - \bar{y})^2$.
• The regression sum of squares $SSR = \sum (\hat{y}_i - \bar{y})^2$ is the explained variation in Y.
• The error sum of squares $SSE = \sum (y_i - \hat{y}_i)^2$ is the unexplained variation in Y, so that SST = SSR + SSE.
• If the fit is good, SSE will be relatively small compared to SST.
• A perfect fit is indicated by SSE = 0.
• The magnitude of SSE depends on n and on the units of measurement.

Assessing Fit: Coefficient of Determination
• R² is a measure of relative fit based on a comparison of SSR (explained variation) and SST (total variation): $R^2 = \frac{SSR}{SST} = 1 - \frac{SSE}{SST}$, with 0 ≤ R² ≤ 1.
• R² is often expressed as a percent; R² = 1 (i.e., 100%) indicates a perfect fit.
• In simple regression, R² = r², the square of the correlation coefficient.

Assessing Fit: Example: Achievement Test Scores
There is a strong relationship between quant score and verbal score (68 percent of the variation is explained): R² = SSR/SST = 387,771/567,053 = 0.6838.

Regression Statistics
| Statistic | Value |
|---|---|
| Multiple R | 0.82694384 |
| R Square | 0.68383612 |
| Adjusted R Square | 0.66627146 |
| Standard Error | 99.8002618 |
| Observations | 20 |

ANOVA Table
| Source | df | SS | MS | F |
|---|---|---|---|---|
| Regression | 1 | 387771.3 (SSR) | 387771.3 | 38.9325 |
| Residual | 18 | 179281.7 (SSE) | 9960.092 | |
| Total | 19 | 567053 (SST) | | |

Excel shows the sums needed to calculate R².

Tests for Significance: Standard Error of Regression
• The standard error $s_e = \sqrt{\frac{SSE}{n-2}}$ is an overall measure of model fit. Excel's Data Analysis > Regression calculates $s_e$.
• If the fitted model's predictions are perfect (SSE = 0), then $s_e = 0$. Thus, a small $s_e$ indicates a better fit.
• $s_e$ is used to construct confidence intervals.
• The magnitude of $s_e$ depends on the units of measurement of Y and on data magnitude.

Tests for Significance: Confidence Intervals for Slope and Intercept
• Standard error of the slope and intercept: $s_{b_1} = \frac{s_e}{\sqrt{\sum (x_i - \bar{x})^2}}$ and $s_{b_0} = s_e\sqrt{\frac{1}{n} + \frac{\bar{x}^2}{\sum (x_i - \bar{x})^2}}$. Excel's Data Analysis > Regression constructs confidence intervals for the slope and intercept.
• Confidence interval for the true slope and intercept, with d.f. = n − 2: $b_1 - t_{\alpha/2}s_{b_1} \le \beta_1 \le b_1 + t_{\alpha/2}s_{b_1}$ and $b_0 - t_{\alpha/2}s_{b_0} \le \beta_0 \le b_0 + t_{\alpha/2}s_{b_0}$.

Tests for Significance: Hypothesis Tests
• If β1 = 0, then the regression model collapses to a constant β0 plus random error.
• Excel's Data Analysis > Regression performs these tests.
• The hypotheses to be tested are H0: β1 = 0 versus H1: β1 ≠ 0 for the slope (and H0: β0 = 0 versus H1: β0 ≠ 0 for the intercept), using $t_{calc} = b_1/s_{b_1}$ (or $b_0/s_{b_0}$) with d.f. = n − 2.
• Reject H0 if $|t_{calc}| > t_{\alpha/2}$ or if the p-value ≤ α.

Tests for Significance: Example: Achievement Test Scores
Achievement exam scores for 20 high school students. Excel shows 95% confidence intervals and t test statistics:

| | Coefficients | Standard Error | t Stat | P-value | Lower 95% | Upper 95% |
|---|---|---|---|---|---|---|
| Intercept | 19.5924736 | 75.25768037 | 0.260339 | 0.797557 | -138.5180458 | 177.702993 |
| Slope | 1.03046307 | 0.165149128 | 6.239591 | 6.93E-06 | 0.683497623 | 1.37742851 |

MegaStat regression output:

| variables | coefficients | std. error | t (df = 18) | p-value | 95% lower | 95% upper |
|---|---|---|---|---|---|---|
| Intercept | 19.5925 | 75.2577 | 0.260 | .7976 | -138.5180 | 177.7030 |
| Slope | 1.0305 | 0.1651 | 6.240 | 6.93E-06 | 0.6835 | 1.3774 |

MegaStat is similar but rounds off and highlights p-values to show significance (light yellow for p < .05, bright yellow for p < .01).

Analysis of Variance: F Test for Overall Fit
• To test a regression for overall significance, we use an F test to compare the explained (SSR) and unexplained (SSE) sums of squares: $F_{calc} = \frac{SSR/1}{SSE/(n-2)} = \frac{MSR}{MSE}$ with d.f. = (1, n − 2).
• In simple regression, the F test is equivalent to the two-tailed t test for the slope, because $F_{calc} = t_{calc}^2$ (here 6.2396² ≈ 38.93).

Analysis of Variance: Example: Achievement Test Scores
Achievement exam scores for 20 high school students.

Excel ANOVA table:

| Source | df | SS | MS | F | Significance F |
|---|---|---|---|---|---|
| Regression | 1 | 387771.2894 | 387771.3 | 38.9325 | 6.92538E-06 |
| Residual | 18 | 179281.6606 | 9960.092 | | |
| Total | 19 | 567052.95 | | | |

MegaStat ANOVA table:

| Source | SS | df | MS | F | p-value |
|---|---|---|---|---|---|
| Regression | 387,771.2894 | 1 | 387,771.2894 | 38.93 | 6.93E-06 |
| Residual | 179,281.6606 | 18 | 9,960.0923 | | |
| Total | 567,052.9500 | 19 | | | |

Excel shows the ANOVA sums, the F test statistic, and its p-value.
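The Excel output above can be cross-checked with a short stdlib-only Python sketch. It applies the OLS formulas, the ANOVA decomposition, the standard-error and confidence-interval formulas, and the F test to the achievement scores. The critical value T_CRIT = 2.1009 is assumed from a t table rather than computed, and the helper `interval_for_y` (a hypothetical name) previews the confidence and prediction intervals for Y discussed next, at an arbitrary x = 500.

```python
import math

# Achievement test scores from the chapter example (X = Quant, Y = Verbal).
quant = [520, 329, 225, 424, 650, 491, 384, 311, 236, 344,
         541, 324, 515, 528, 380, 629, 228, 454, 514, 677]
verbal = [398, 505, 183, 332, 737, 578, 344, 367, 298, 600,
          643, 328, 556, 527, 504, 695, 133, 478, 413, 742]
T_CRIT = 2.1009  # assumed: two-tailed t for alpha = 0.05, d.f. = 18

n = len(quant)
x_bar, y_bar = sum(quant) / n, sum(verbal) / n
sxx = sum((x - x_bar) ** 2 for x in quant)
sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(quant, verbal))

# OLS estimates (the same values as Excel's =SLOPE and =INTERCEPT).
b1 = sxy / sxx
b0 = y_bar - b1 * x_bar
y_hat = [b0 + b1 * x for x in quant]

# ANOVA decomposition: SST = SSR + SSE.
sst = sum((y - y_bar) ** 2 for y in verbal)
ssr = sum((yh - y_bar) ** 2 for yh in y_hat)
sse = sum((y - yh) ** 2 for y, yh in zip(verbal, y_hat))
r_squared = ssr / sst
s_e = math.sqrt(sse / (n - 2))  # standard error of the regression

# Coefficient standard errors, t statistics, and 95% confidence intervals.
s_b1 = s_e / math.sqrt(sxx)
s_b0 = s_e * math.sqrt(1 / n + x_bar ** 2 / sxx)
t_b1, t_b0 = b1 / s_b1, b0 / s_b0
ci_b1 = (b1 - T_CRIT * s_b1, b1 + T_CRIT * s_b1)
ci_b0 = (b0 - T_CRIT * s_b0, b0 + T_CRIT * s_b0)

# Overall F test: MSR / MSE with d.f. = (1, n - 2); equals t_b1 squared.
f_calc = (ssr / 1) / (sse / (n - 2))


def interval_for_y(x0):
    """Return (y-hat, CI half-width for mean Y, PI half-width for one Y)."""
    y0 = b0 + b1 * x0
    h0 = 1 / n + (x0 - x_bar) ** 2 / sxx
    return y0, T_CRIT * s_e * math.sqrt(h0), T_CRIT * s_e * math.sqrt(1 + h0)


print(f"y-hat = {b0:.4f} + {b1:.4f}x, R^2 = {r_squared:.4f}, s_e = {s_e:.2f}")
print(f"slope: se = {s_b1:.4f}, t = {t_b1:.3f}, "
      f"95% CI = ({ci_b1[0]:.4f}, {ci_b1[1]:.4f})")
print(f"intercept: se = {s_b0:.4f}, t = {t_b0:.3f}, "
      f"95% CI = ({ci_b0[0]:.2f}, {ci_b0[1]:.2f})")
print(f"F = {f_calc:.4f}")
y0, ci_half, pi_half = interval_for_y(500)
print(f"x = 500: y-hat = {y0:.1f}, CI +/- {ci_half:.1f}, PI +/- {pi_half:.1f}")
```

The printed values should agree with the Excel tables to the displayed precision. At x = 500 the prediction interval is roughly four times as wide as the confidence interval for the mean, which illustrates why an individual Y is harder to predict than the mean of Y.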
MegaStat's ANOVA output is similar, but it also highlights p-values to indicate significance (light yellow for p < .05, bright yellow for p < .01).

Confidence and Prediction Intervals for Y: How to Construct an Interval Estimate for Y
• Confidence interval for the conditional mean of Y at a given x: $\hat{y} \pm t_{\alpha/2}\,s_e\sqrt{\frac{1}{n} + \frac{(x - \bar{x})^2}{\sum (x_i - \bar{x})^2}}$
• Prediction interval for an individual Y at a given x: $\hat{y} \pm t_{\alpha/2}\,s_e\sqrt{1 + \frac{1}{n} + \frac{(x - \bar{x})^2}{\sum (x_i - \bar{x})^2}}$
• Prediction intervals are wider than confidence intervals for the mean because individual Y values vary more than the mean of Y.
Excel does not do these CIs!

ML 11.3 Three Important Assumptions
1. The errors are normally distributed.
2. The errors have constant variance (i.e., they are homoscedastic).
3. The errors are independent (i.e., they are nonautocorrelated).

Tests of Assumptions: Non-normal Errors
• Non-normality of errors is a mild violation, since the regression parameter estimates b0 and b1 and their variances remain unbiased and consistent.
• Confidence intervals for the parameters may be untrustworthy, because the normality assumption is used to justify using Student's t distribution.
• A large sample size would compensate.
• Outliers could pose serious problems.

Residual Tests: Normal Probability Plot
• The normal probability plot tests the assumption
  H0: Errors are normally distributed
  H1: Errors are not normally distributed
• If H0 is true, the residual probability plot should be linear, as shown in the example.

Residual Tests: What to Do about Non-normality?
1. Trim outliers only if they clearly are mistakes.
2. Increase the sample size if possible.
3. If data are totals, try a logarithmic transformation of both X and Y.

Residual Tests: Heteroscedastic Errors (Nonconstant Variance)
• The ideal condition is a constant error magnitude (i.e., errors are homoscedastic).
• Heteroscedastic errors increase or decrease with X.
• In the most common form of heteroscedasticity, the variances of the estimators are likely to be understated.
• This results in overstated t statistics and artificially narrow confidence intervals.

Residual Tests: Tests for Heteroscedasticity
• Plot the residuals against X. Ideally, there is no pattern in the residuals moving from left to right.
• The "fan-out" pattern of increasing residual variance is the most common pattern indicating heteroscedasticity.

Residual Tests: What to Do about Heteroscedasticity?
• Transform both X and Y, for example, by taking logs.
• Heteroscedasticity does not bias the coefficient estimates, although it does distort their estimated variances and hence the confidence intervals.

Residual Tests: Autocorrelated Errors
• Autocorrelation is a pattern of non-independent errors.
• In first-order autocorrelation, $e_t$ is correlated with $e_{t-1}$.
• The estimated variances of the OLS estimators are biased, resulting in confidence intervals that are too narrow and overstating the model's fit.

Residual Tests: Runs Test for Autocorrelation
• In the runs test, count the number of sign reversals in the residuals (i.e., how often does the residual cross the zero centerline?).
• If the pattern is random, the number of sign changes should be about n/2.
• Fewer than n/2 would suggest positive autocorrelation.
• More than n/2 would suggest negative autocorrelation.

Residual Tests: Durbin-Watson (DW) Test
• Tests for autocorrelation under the hypotheses
  H0: Errors are nonautocorrelated
  H1: Errors are autocorrelated
• The statistic is $DW = \frac{\sum_{t=2}^{n} (e_t - e_{t-1})^2}{\sum_{t=1}^{n} e_t^2}$, which ranges from 0 to 4.
  - DW < 2 suggests positive autocorrelation
  - DW ≈ 2 suggests no autocorrelation (ideal)
  - DW > 2 suggests negative autocorrelation

Residual Tests: What to Do about Autocorrelation?
• Transform both variables using the method of first differences, in which both variables are redefined as changes ($\Delta y_t = y_t - y_{t-1}$ and $\Delta x_t = x_t - x_{t-1}$). Then regress the changes in Y on the changes in X.
• Autocorrelation does not bias the coefficient estimates, although it does distort their estimated variances and hence the confidence intervals.
• Don't worry about it at this stage of your training.
Just learn to detect whether it exists.

Residual Tests: Example: Excel's Tests of Assumptions
Excel's Data Analysis > Regression does residual plots and gives the DW test statistic. Its standardized residuals are calculated in a strange way, but usually they are not misleading.
Warning: Excel offers normal probability plots for residuals, but they are done incorrectly.

Residual Tests: Example: MegaStat's Tests of Assumptions
MegaStat will do all three tests (if you check the boxes). Its runs plot (residuals by observation) is a visual test for autocorrelation, which Excel does not offer.
[Figure: MegaStat residual diagnostics, annotated: near-linear probability plot indicates normal errors; no pattern in the residual plots suggests homoscedastic errors; DW near 2 suggests no autocorrelation]

Unusual Observations: Standardized Residuals
• Use Excel, MINITAB, MegaStat, or other software to compute standardized residuals.
• If the absolute value of any standardized residual is at least 2, then it is classified as unusual.

Unusual Observations: Leverage and Influence
• A high leverage statistic indicates the observation is far from the mean of X.
• These observations are influential because they are at the "end of the lever."
• The leverage for observation i is denoted $h_i$; in simple regression, $h_i = \frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum (x_j - \bar{x})^2}$.
• A leverage that exceeds 4/n is unusual.

Unusual Observations: Example: Achievement Test Scores
• If the absolute value of any standardized residual is at least 2, then it is classified as unusual.
• Leverage that exceeds 4/n (here 4/20 = 0.20) indicates an influential X value (far from the mean of X).
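The residual diagnostics described above can be sketched for the achievement scores as follows: a sign-change count for the runs test, the Durbin-Watson statistic, leverage, and standardized residuals. Here the standardized residual is assumed to be $e_i / (s_e\sqrt{1 - h_i})$, which reproduces the Std Residual column in the table that follows; other packages may scale residuals differently. Because these observations are not a time series, the runs count and DW value only illustrate the arithmetic.

```python
import math

# Achievement test scores from the chapter example (X = Quant, Y = Verbal).
quant = [520, 329, 225, 424, 650, 491, 384, 311, 236, 344,
         541, 324, 515, 528, 380, 629, 228, 454, 514, 677]
verbal = [398, 505, 183, 332, 737, 578, 344, 367, 298, 600,
          643, 328, 556, 527, 504, 695, 133, 478, 413, 742]

n = len(quant)
x_bar, y_bar = sum(quant) / n, sum(verbal) / n
sxx = sum((x - x_bar) ** 2 for x in quant)
b1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(quant, verbal)) / sxx
b0 = y_bar - b1 * x_bar
resid = [y - (b0 + b1 * x) for x, y in zip(quant, verbal)]
sse = sum(e ** 2 for e in resid)
s_e = math.sqrt(sse / (n - 2))

# Runs test idea: count sign reversals between consecutive residuals.
sign_changes = sum(1 for a, b in zip(resid, resid[1:]) if (a > 0) != (b > 0))

# Durbin-Watson: squared successive differences divided by SSE (0 to 4).
dw = sum((b - a) ** 2 for a, b in zip(resid, resid[1:])) / sse

# Leverage h_i and standardized residuals e_i / (s_e * sqrt(1 - h_i)).
leverage = [1 / n + (x - x_bar) ** 2 / sxx for x in quant]
std_resid = [e / (s_e * math.sqrt(1 - h)) for e, h in zip(resid, leverage)]

# Flag observations (numbered from 1) using the rules on the slides.
unusual = [i + 1 for i, z in enumerate(std_resid) if abs(z) >= 2]
high_leverage = [i + 1 for i, h in enumerate(leverage) if h > 4 / n]

print(f"sign changes = {sign_changes} (about n/2 = {n // 2} if random)")
print(f"DW = {dw:.3f}")
print(f"unusual residuals: obs {unusual}; high leverage: obs {high_leverage}")
```

For these data the sketch flags observation 10 as an unusual residual and observation 20 as a high-leverage point, with 11 sign changes (close to n/2 = 10) and DW near 2.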
| Obs | Quant (X) | Verbal (Y) | Predicted Y | Residual | Leverage | Std Residual |
|---|---|---|---|---|---|---|
| 1 | 520 | 398 | 555.4 | -157.4 | 0.070 | -1.636 |
| 2 | 329 | 505 | 358.6 | 146.4 | 0.081 | 1.530 |
| 3 | 225 | 183 | 251.4 | -68.4 | 0.171 | -0.753 |
| 4 | 424 | 332 | 456.5 | -124.5 | 0.050 | -1.280 |
| 5 | 650 | 737 | 689.4 | 47.6 | 0.176 | 0.526 |
| 6 | 491 | 578 | 525.5 | 52.5 | 0.059 | 0.542 |
| 7 | 384 | 344 | 415.3 | -71.3 | 0.057 | -0.736 |
| 8 | 311 | 367 | 340.1 | 26.9 | 0.092 | 0.283 |
| 9 | 236 | 298 | 262.8 | 35.2 | 0.159 | 0.385 |
| 10 | 344 | 600 | 374.1 | 225.9 | 0.073 | 2.351 |
| 11 | 541 | 643 | 577.1 | 65.9 | 0.081 | 0.689 |
| 12 | 324 | 328 | 353.5 | -25.5 | 0.084 | -0.267 |
| 13 | 515 | 556 | 550.3 | 5.7 | 0.067 | 0.059 |
| 14 | 528 | 527 | 563.7 | -36.7 | 0.074 | -0.382 |
| 15 | 380 | 504 | 411.2 | 92.8 | 0.058 | 0.959 |
| 16 | 629 | 695 | 667.8 | 27.2 | 0.153 | 0.297 |
| 17 | 228 | 133 | 254.5 | -121.5 | 0.168 | -1.335 |
| 18 | 454 | 478 | 487.4 | -9.4 | 0.051 | -0.097 |
| 19 | 514 | 413 | 549.3 | -136.3 | 0.067 | -1.413 |
| 20 | 677 | 742 | 717.2 | 24.8 | 0.210 | 0.279 |

Observation 10 has an unusual residual (2.351 ≥ 2), and observation 20 has high leverage (0.210 > 0.20).

Unusual Observations: Outliers
Outliers may be caused by
• an error in recording data.
• impossible data (can be omitted).
• an observation that has been influenced by an unspecified "lurking" variable that should have been controlled but wasn't.

Other Regression Problems
To fix the problem
• delete the observation(s) if you are sure they are actually wrong.
• formulate a multiple regression model that includes the lurking variable.

Other Regression Problems: Model Misspecification
• If a relevant predictor has been omitted, then the model is misspecified.
• For example, Height depends on Gender as well as Age.
• Use multiple regression instead of bivariate regression.

Other Regression Problems: Ill-Conditioned Data
• Well-conditioned data values are of the same general order of magnitude.
• Ill-conditioned data have unusually large or small data values and can cause loss of regression accuracy or awkward estimates.
• Avoid mixing magnitudes by adjusting the magnitude of your data before running the regression.
• For example, Revenue = 139,405,377 mixed with ROI = .037.
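To see what rescaling does with ill-conditioned data, the sketch below fits the same hypothetical data twice: once with revenue in dollars and once in millions of dollars. The revenue and ROI values are invented for illustration (only the first revenue figure echoes the example above). Rescaling changes only the units of the slope: the intercept and the slope's t statistic are unchanged, but the dollar-scale slope is an awkward number with many leading zeros. The centered formulas used here are numerically stable, so this sketch shows the awkward estimates rather than an actual loss of accuracy.

```python
import math


def fit(x, y):
    """Return (b0, b1, t statistic for the slope) by OLS."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    s_e = math.sqrt(sse / (n - 2))
    return b0, b1, b1 / (s_e / math.sqrt(sxx))


# Hypothetical data: revenue in dollars and ROI as a proportion.
revenue = [139_405_377, 98_120_004, 251_733_910,
           64_508_221, 187_002_456, 120_994_830]
roi = [0.037, 0.029, 0.061, 0.022, 0.048, 0.035]
revenue_millions = [r / 1e6 for r in revenue]

b0_d, b1_d, t_d = fit(revenue, roi)
b0_m, b1_m, t_m = fit(revenue_millions, roi)

print(f"dollars:  b0 = {b0_d:.5f}, b1 = {b1_d:.3e}, t = {t_d:.3f}")
print(f"millions: b0 = {b0_m:.5f}, b1 = {b1_m:.6f}, t = {t_m:.3f}")
```

Both fits describe the same line; only the slope's units (ROI per dollar versus ROI per million dollars) differ.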
Other Regression Problems: Spurious Correlation
• In a spurious correlation, two variables appear related because of the way they are defined.
• This problem is called the size effect or the problem of totals.
• Expressing variables as per capita or percent values may be helpful.

Other Regression Problems: Model Form and Variable Transforms
• Sometimes a nonlinear model fits better than a linear model. Excel offers other model forms for simple regression (one X and one Y).
• Variables may be transformed (e.g., with logarithmic or exponential functions) in order to provide a better fit.
• Log transformations can reduce heteroscedasticity.
• Nonlinear models may be difficult to interpret.

ML 11.4 Assignments
• Connect C-8 (covers Chapter 12)
  - You get three attempts
  - Feedback is given if requested
  - Printable if you wish
  - Deadline is midnight each Monday
• Project P-3 (data, tasks, questions)
  - Review instructions
  - Look at the data
  - Your task is to write a nice, readable report (not a spreadsheet)
  - Length is up to you

Projects: General Instructions
For each team project, submit a short (5-10 page) report (using Microsoft Word or equivalent) that answers the questions posed. Strive for effective writing (see textbook Appendix I). Creativity and initiative will be rewarded. Avoid careless spelling and grammar. Paste graphs and computer tables or output into your written report (it may be easier to format tables in Excel and then use Paste Special > Picture to avoid weird formatting and permit sizing within Word). Allocate tasks among team members as you see fit, but all should review and proofread the report (submit only one report).

Project P-3
You will be assigned team members and a dependent variable (see Moodle) from the 2010 state database Big Dataset 09 - US States. The team may change the assigned dependent variable (the instructor assigned one just to give you a quick start).
Delegate tasks and collaborate as seems appropriate, based on your various skills. Submit one report.
Data: Choose an interesting dependent variable (non-binary) from the 2010 state database posted on Moodle.
Analysis:
(a) Propose a reasonable model of the form Y = f(X1, X2, ..., Xk) using not more than 12 predictors.
(b) Use regression to investigate the hypothesized relationship.
(c) Try deleting poor predictors until you feel that you have a parsimonious model, based on the t-values, p-values, standard error, and R²adj.
(d) For the preferred model only, obtain a list of residuals and request residual tests and VIFs.
(e) List the states with high leverage and/or unusual residuals.
(f) Make a histogram and/or probability plot of the residuals. Are the residuals normal?
(g) For the predictors that were retained, analyze the correlation matrix and/or VIFs. Is multicollinearity a problem? If so, what could be done?
(h) If you had more time, what might you do?
Watch the instructor's video walkthrough using Assault as an example (posted on Moodle).

Project P-3 (preview, data, tasks)
• Example using the 2005 state database:
  - 170 variables on n = 50 states
• Choose one variable as Y (the response).
• Goal: to explain why Y varies from state to state.
• Start choosing X1, X2, ..., Xk (the predictors).
• Copy Y and X1, X2, ..., Xk to a new spreadsheet.
• Study the definitions for each variable (e.g., Burglary is the burglary rate per 100,000 population).

Project P-3 (preview, data, tasks)
• Why multiple predictors?
  - One predictor usually is an incorrect specification.
  - Fit can usually be improved.
• How many predictors? Evans' Rule (k ≤ n/10)
  - Up to one predictor per 10 observations
  - For example, n = 50 suggests k = 5 predictors.
  - Evans' Rule is conservative. It's OK to start with more (you will end up with fewer after deleting weak predictors).

Project P-3 (preview, data, tasks)
• Work with partners? Absolutely; it will be more fun.
• Post questions for peers or instructor on Moodle.
• Get started. But don't run a bunch of regressions until you have studied Chapter 13.
• It's a good idea to have the instructor look over your list of intended Y and X1, X2, ..., Xk in order to avoid unnecessary rework if there are obvious problems.
• Look at all the categories of variables (there are 170 variables); don't just grab the first one you see. Or just use the one your instructor assigned.