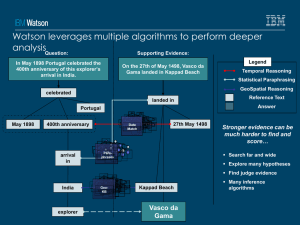

Simulation, learning, and optimization techniques in Watson*s game

advertisement

Simulation, learning, and optimization techniques in Watson’s game strategies Presented by Christina Ortiz Why were advances in questionanswering (QA) technology necessary? IBM Watson needed rapid fire answers to challenging natural-language questions Broad general knowledge High precision Accurate confidence estimates This was achieved over 4 years with 24 IBM researchers Jeopardy! Four Strategies of Jeopardy wagering on a Daily double wagering during Final Jeopardy selecting the next square when in control of the board deciding whether to attempt to answer: “buzz in” Different Circumstances -player must select a square with accordance to getting the daily double -if a player’s score is just below half the leader’s score they may make a “desperation buzz” to avoid a sure loss -if a player’s score is just above half the leader’s score the strategy might be to not buzz in Jeopardy! Simulation Model IBM researchers first needed to create a simulator that would simulate contests between Watson and human contestants Achieved through extensive research of past episodes with a focus on: -Statistical performance profiles of human contestants -Tendencies in wagering -Tendencies in square selection Jeopardy! Simulation Model Four methods for designing, learning and optimizing 1) Properties of the game environment (rules of play, DD placement possibilities, etc.). 2) Performance profiles of human contestants, including tendencies in wagering and square selection 3) Performance profiles of Watson, along with Watson’s actual actual strategy algorithms 4)Buzz-in thresholds in endgames using Approximate Dynamic Programming and estimate user’s relative “buzzability” capabiblity Properties of Game Environment 3,000 past episodes, as early as mid-1990s Recorded the order in which the questions were played, right and wrong contestant answers, daily double and final jeopardy wagers, and daily double locations Three human models: Average Contestant, Champion model, and Grand Champion Daily Double Placement Found that DD s tend to be found in the lower levels, where they cash reward is higher DDs basically never appear in the top row The two second-round DDs never appear in the same column Row location appears to be set independently of the column location and of the rows of other DDs in the game Round 2 column-pair statistics are mostly consistent with independent placement The simulator assigns DD location in Round 1, and the first DD location in Round 2 according to respective row-column frequencies Daily Double accuracy/betting model Accuracy in Average Contestant Model: 64% Champion Model: 75% Grand Champion: 80.5% The lead tend toward by choosing the more conservative cars to maintain their lead Final Jeopardy! Accuracy/Betting Model Average Round 2 DD bets of human contestants in A) First place, B) Second place, and C) Third place Bets as a function of clues played in round Bets of First Place Player (A) Bets of Second Place Player (B) -High density line corresponding to the well-known strategy of betting to cover in the case that B’s score doubles to 2B -2 high density lines: one where B bets everything and one where B bets just enough to overtake A -Notice that there is apparent randomization apart from deterministic wagering principles Betting Strategies Wagering models A, B, and C Ex: (B > 3/4A, B > 2C) : bet “bankroll” nearly everything, 26% probability (Just below B-2c): “keepout C”, 27% probability (Slightly above A-B): “overtake A”, 15% probability (Just below 3B-2A): “two-thirds limit”, 8% probability (Random bets): 24% probability These betting models track actual human win rates Comparison of actual human win rates with model win rates by historic replacement in 2,092 nonlocked (no clear advantage by one player) Final Jeopardy! Situations. Regular Question Model -Researches found the correlation between players attempt to buzz in and players having a correct answer -mean buzz attempt rate: b -buzz correlation: Pb -mean precision: P -right/wrong correlation: Pp b = 0.61, Pp = 0.2, P = 0.87, Pp = 0.2 The right/wrong correlation is due to one player giving the wrong answer which significantly helps the rebound player deduce the correct answer The knowledge correlation is 0.3, with the tip-off of -0.1 producing a net positive correlation 0f 0.2 Regular Question Model The right/wrong correlation is due to one player giving the wrong answer which significantly helps the rebound player deduce the correct answer The knowledge correlation is 0.3, with the tip-off of -0.1 producing a net positive correlation 0f 0.2 Champion model: Substantial increase in attempt rate, (b= 0.61 to b= 0.8) Slight increase in precision (p = 0.87 to p = 0.89) Grand Champion model: b= 0.855 p= 0.915 Square Selection Model Greatest human tendency is to select squares in topto-bottom order within a given category, or to stay in the same category Weaker tendency to select categories moving left-toright across the board 90% probability if the contestant chooses within the same category Champion and Grand Champion square selection is seeking based on DD placement Multigame Wagering Model Researchers must take into account the different wagering strategies for Games 1 and 2 Found that competitors Jennings and Rutter would be very aggressive in their Daily Double and Final Jeopardy wagers in Game 1 Betting in Game 2 would most likely follow this: If B can “keep out” C, B bets a “bankroll” with 35% probability, small random amount satisfying 2/3 and keep out limits with 45% probability, bet to satisfy all limits with 22% proability Optimizing Watson Strategies Testing Watson’s performance with two simulated human opponents showed probability of buzzability (likelihood to win the buzz against humans of all ability levels) Watson’s buzzability against average contestants 80%, against Champions 73%, and Grand Champs 70% Computation speed vital factor because wagering, square selection and buzz-in decisions occur in just seconds. Daily Double Wagering Strategy DD betting is based on estimating Watson’s likelihood of answering the DD question correctly and how a given bet will impact Watson’s overall chances of winning Based on “in-category DD confidence” model: estimates DD accuracy given the number of seen questions and right/wrong Reinforcement learning and the GSE, game-state evaluator Daily Double Wagering Strategy Combination of GSE with in-category confidence: E(bet) : the “equity”, expected winning chances of a bet according to E(bet) = PDD x V(Sw + bet,…) = (1 – PDD) x V(Sw - bet,…) PDD: in-category confidence Sw: Watson’s current score V(): game state evaluation after score increases or decreases by bet and DD has been removed from board Using this equation, you can obtain the optimal risk-neutral bet by selecting the bet with the highest equity Equity estimates getting the DD right -Bet equity curves at five difference in-category confidence levels from 45% ($5) to 85% ($11,000) -Black dots: optimal risk-neutral bet increases with confidence -Risk mitigation: lowered Watson’s equity by 0.2%, but reduced downside risk by more than 10% if DD was wrong Multigame DD Wagering Able to estimate the expected probabilities of Watson ending Game 2 in first, second, or third place based on any combination of Game 1 final scores One issue was how to assign relative utilities to finishing in first, second and third places Ultimately wagering on full credit for first, half for second and zero for third, this kept equal emphasis on finishing in first and avoiding finishing in third Final Jeopardy!Wagering Computation of Best Response Strategy -estimate Watson’s confidence given category title -give Watson’s confidence human accuracy and correlation parameters as stated earlier -use the order of 10,000 Monte Carlo samples of bets to evaluate the bet with the highest equity Final Jeopardy! Wagering Best Response algorithms for Game 1 and Game based on Monte Carlo samples of human betting models Unable to evaluate the Watson’s confidence in Game 1 because there is a second game to play. Instead use interpolation over look up tables Game 2: Logic betting rules guarantee a win as A if Watson answers correctly, as B rules would finish ahead of A if it did not decrease Watson’s chances of finishing ahead of C This comes from assigning half-credit for second place. Wagering as C, the Best Response was unable to derive human-interpretable rules Square Selection Factors: finding the DDs, retaining control of board if DD is not found, learning the essence of the category Against Champion and Grand Champion models where DD seeking is very aggressive Watson’s win rate is mostly first attributed to finding DDs and second to retaining control Learning the essence of the category has some affect only after all DDs have been found Square Selection Unrevealed DDs a square i* is selected that maximizes Pdd(i) + a* Prc(i), where Pdd(i) is the probability that square i contains a DD, Prc(i) is the probability that Watson will retain control if i does not contain a DD After all DDs are found the algorithm switches to selecting the lowest dollar value in a category to learn about the category Square Selection Simulation win rates vs Grand Champions using various square selection strategies 3 Factors: Watson’s win rate against simulated Grand Champions using the strategy of selecting columns with highest estimated accuracy in top-to-bottom order There is a 6.6% improvement by switching to Bayesian DD (if DDs are available) 0.3% improvement by previous strategy with no remaining DDs 0.1% improvement by including a * Prc(i), a = 0.1 Confidence Threshold Watson attempts to buzz if confidence in its toprated answer exceeds a threshold value Threshold value is usually set to maximize expected earnings, however throughout the game the threshold value may vary Four states in which Watson may buzz: the initial state, the first rebound where human #1 answered incorrectly, the first rebound where human #2 answered incorrectly, and the second rebound where both humans answered incorrectly Confidence Threshold -Recursion relation between the value of a current game state with K questions remaining before FJ and values of the possible successor states with K-1 questions remaining P(c): probability density of Watson’s confidence P(Dj): probability that next square selected will be in row j with dollar value Dj= 400 * P(𝝈/B,c): probability of various unit score change combinations 𝝈 S’: various possible successor states after Dj square hasabeen played Confidence Threshold Need to use Approximate Dynamic Programming (DP) techniques because it is faster Approximate DP used to evaluate Vk in terms of Vk-1 where Vk-1 values are based on plain Monte Carlo trials Because of the slowness of the calculation they were unable to estimate for values of K greater than 5 However approximate DP gave threshold estimates within 5% of exact value Buzz-in algorithm: “desperation buzz” in which Watson must buzz in and answer correctly to avoid a lock out, this is a risk free chance to try to buzz and win the game