Document

Making Watson Fast

Daniel Brown

HON111

• Need for Watson to be fast to play Jeopardy successfully

– All computations have to be done in a few seconds

– Initial application speed: 1-2 hours processing time per question

• Unstructured Information Management

Architecture (UIMA): framework for NLP applications; facilitates parallel processing

– UIMA-AS: Asynchronous Scaleout

• UIMA chosen at start for these reasons; other optimization work only began after 2 years

(after QA accuracy/confidence improved)

UIMA implementation of DeepQA

UIMA implementation of DeepQA

• Type System

• Common Analysis Structure (CAS)

• Annotator

– CAS multiplier (CM): creates new “children” CASes

• Flow Controller

• CASes can be spread across multiple systems

(processed in parallel) for efficiency

Scaling out

• Two systems:

– Development (+question processing)

• Meant to analyze many questions accurately

– Production (+speed)

• Meant to answer one question quickly

Scaling out: UIMA-AS

• (UIMA-AS: Asynchronous Scaleout)

– Manages multithreading, communication between processes necessary for parallel processing

• Feasibility test: simulated production system with

110 processes, 110 8-core machines

– Goal: less than 3 seconds; actual: more than 3 seconds

– Two sources of latency: CAS serialization, network communication

– Optimizing CAS serialization resulted in runtime of <1s

Scaling out: Deployment

• 400 processes, 72 machines

• How to find time bottlenecks in such a system?

– Monitoring tool

– Integrated timing measurements (in flow controller component)

RAM Optimizations

• Wanted to avoid disk read/write time delays, so all (production system) data was put into RAM

• Some optimizations:

– Reference size reduction

– Java object size reduction

– Java object overhead

– String size

– Special hash tables

– Java garbage collection with large heap sizes

• *Full GC between games

Indri Search Optimizations

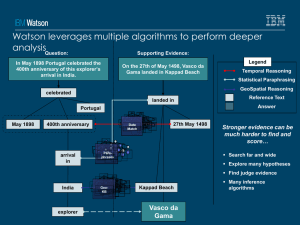

• Indri search: used to find most relevant 1-2 sentences from Watson database

• Using single processor, primary search takes too long

(i.e. 100s)

– Supporting evidence search even longer

• Solution?

– Divide corpus (body of information to search) into chunks, then assign each search daemon a chunk

– (specifically, 50GB corpus of 6.8 million documents, 79 chunks of 100000 documents each, 79 Indri search daemons with 8 CPU cores each; end result, 32 passage queries could be run at once)

Preprocessing and Custom Content

Services

• Watson must first analyze the passage texts before being able to use them

– Deep NLP analysis - semantic/structural parsing, etc.

• Since Watson had to be self-contained, this analysis could be done before run time

(preprocessed)

– Used Hadoop (distributed file system software)

– 50 machines, 16GB/8 cores each

Preprocessing and Custom Content

Services

• Retrieving the preprocessed data?

– Preprocessed data much larger than unprocessed corpus (~300GB total)

– Built custom content server – allocated data to 14 machines, ~20GB each

– Documents then were accessed from these servers

End result

• Parallel processing combined with a number of other performance optimizations resulted in a final average latency of less than 3 seconds.

– No one “silver bullet” solution