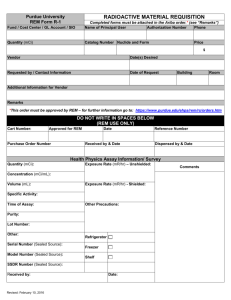



TED AND KARYN HUME CENTER FOR NATIONAL SECURITY AND TECHNOLOGY

GREM: A Radio Environment Map Implementation
September 16, 2014

Tim O’Shea, Member of Technical Staff, Hume Center, oshea@vt.edu
Bob McGwier, PhD, Director of Research, Hume Center, rwmcgwi@vt.edu
http://www.hume.ictas.vt.edu

Outline
• The concept of Radio Environment Map
• The constituent components and architecture
• Concepts of operations and operators derived
• Use in Dynamic Spectrum Sharing with some examples
• Collection of data
• Conclusions and further work

The Concept of REM
• Origins
– Developed at Virginia Tech by Dr. Jeff Reed and his graduate students: Zhao, Youping; Reed, Jeffrey H.; Mao, Shiwen; Bae, Kyung K., "Overhead Analysis for Radio Environment Map-enabled Cognitive Radio Networks," Networking Technologies for Software Defined Radio Networks, 2006.
• It is a database which contains information on your radio environment, such as:
– What do you populate the database with initially?
– What do your radio sensors see in your environment?
– What regulation and policy is in effect in the area of your sensor?
– What service is being provided by the signals?

The Concept of REM (2)
• Was initially proposed as a tool to be used in TV White Space systems (hereinafter TVWS; Reed et al.)
• Spectrum sharing has “grown up a lot” since TVWS, and much more sophisticated systems are being proposed to enable wireless mobile to share spectrum rather than get exclusive licenses
– Federated Wireless and Google have shown much interest in the spectrum sharing proposed by the FCC in its NPRM on wireless providers sharing with USG (see their comments at FCC and subsequent slides)

Concept of REM (Final)
• It is storage of needed/desired information built as a dumb database with various intelligent query and intelligent (autonomous) manipulation tools

Would you buy a used car from this man?

YES!
Algorithmic Tools Needed
• Spectrum Analysis Tools
• Signal Identification Tools
– Detect presence
– Classify or identify signal
• Aided greatly by a priori knowledge in the REM database
– Reduced time, energy, and computational complexity of the system needed to detect/identify/classify signals
– Able to identify licensed or known users in very weak signal conditions based on a very reduced set of computational tasks
• Known and new statistical methods for signal classification
– Cyclostationarity-based signal classification tools
– New method for computing kurtosis of the signal to aid classification

More Algorithmic Tools Needed
• Wideband great for energy search
• Narrowband great for complex algorithms to work
– Polyphase Filterbank Channelizer (Analysis)
– Polyphase Filterbank Synthesizer and Arbitrary Rate Resampler (Synthesis of new channels: harris, Rondeau, McGwier)
• GNU Radio blocks done by Rondeau starting in 2008
• FPGA code done by Thaddeus Koehn, discussed at 11 AM in Wednesday’s session and soon to be released to GNU Radio, Ettus, and others
• Combined wideband and channelization ideal

Kurtosis and classification
• Headley, McGwier, and Reed have shown that kurtosis is a very powerful tool in signal classification
• Kurtosis is a statistical measure: the fourth moment (about the mean) divided by the variance squared.
• Take a sampled digital communication signal as a time series and compute the kurtosis of the samples.
– The sample kurtosis is known for many constellation types under the assumption of random data
– It is mostly insensitive to carrier offset and timing offset
– It is impacted by the channel but, again, kurtosis aids us. Shalvi and Weinstein have shown that a stochastic gradient process minimizing kurtosis in a feed forward linear equalizer yields THE GLOBALLY OPTIMUM linear equalizer and, on static or nearly static channels, will converge. The resulting kurtosis is that of the emitted stream with the channel impairments greatly reduced.
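As a rough illustration of the kurtosis measure described above, the following sketch computes the normalized fourth moment of complex baseband samples for a few constellations. It is not Headley's GNU Radio block; the function names, the use of NumPy, and the noiseless, one-sample-per-symbol test signals are all assumptions made for this example.

```python
import numpy as np


def sample_kurtosis(x):
    """Fourth moment of |x| about the mean, divided by the variance squared."""
    x = np.asarray(x, dtype=complex)
    x = x - x.mean()                    # remove any DC offset
    m2 = np.mean(np.abs(x) ** 2)        # variance
    m4 = np.mean(np.abs(x) ** 4)        # fourth moment
    return m4 / m2 ** 2


def random_symbols(constellation, n, rng):
    """Draw n equiprobable symbols (the 'random data' assumption)."""
    return rng.choice(np.asarray(constellation, dtype=complex), size=n)


rng = np.random.default_rng(0)
n = 200_000

qpsk = [1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]
levels = [-3, -1, 1, 3]
qam16 = [a + 1j * b for a in levels for b in levels]

qam16_symbols = random_symbols(qam16, n, rng)

k_qpsk = sample_kurtosis(random_symbols(qpsk, n, rng))   # theory: 1.0
k_qam16 = sample_kurtosis(qam16_symbols)                 # theory: 132/100 = 1.32
k_noise = sample_kurtosis(rng.normal(size=n) + 1j * rng.normal(size=n))  # theory: 2.0

# A carrier frequency offset only rotates the samples, so |x| is unchanged
# and the statistic barely moves (hypothetical offset of 0.01 cycles/sample).
offset = np.exp(2j * np.pi * 0.01 * np.arange(n))
k_rotated = sample_kurtosis(qam16_symbols * offset)

print(k_qpsk, k_qam16, k_noise, k_rotated)
```

Because each constellation has its own theoretical value, comparing a measured kurtosis against a table of expected values is one simple way to narrow down the modulation type. A real signal would first need timing recovery and some allowance for noise and channel effects.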
Kurtosis as a tool
• Headley produced a GNU Radio block doing these computations.
• I’m optimizing the block, replacing many of the computations Headley wrote in C++ with GSL library code, since GSL is already included.
• Examples are done in a tool Headley has created.
• To be released to GNU Radio and assigned to the FSF after these modifications are done and checked.

Cyclostationarity Tools
• Gardner introduced us all to the use of cyclostationarity for signal ID, classification, etc.
• Reed and his students at VT have published several important papers on using these tools; possibly the most important for us is Kim, Reed, et al. in IEEE DySPAN in 2007.

Signal ID and REM
• We use the tools just covered (superficially) to verify the contents of our current REM and to add new unknowns to the REM
• When we have new unknown signals in the REM, an autonomous system, so directed by a priority scheduler, will take up the task of further identifying the found signal
• These techniques require computation engines. We at VT, and, we know, Federated and Google as well, are building towards the use of distributed databases and the existing cloud for doing these computations. This MINIMIZES THE NEEDED INFRASTRUCTURE.

Without major computation?
• In mobile, dynamic, shared spectrum the database is good for a few minutes or even a few seconds
• Not covered here, because it is under active investigation and will be used in commercial offerings, is the amazingly large volume of data already existing in the “cloud” of available data
• We need distributed databases, sensors, and all of the “data in the cloud” to do a really good job of maintaining these dynamic REMs in applications of use to wireless users and providers.

BUT! Distributed, Mobile, Shared, ARGGG
• The CAP Theorem applies!
You desire consistency, availability, and partition tolerance in your distributed database for REM
– You cannot afford to ship your “global database” to every sensor in your network of sensors, and some sensors won’t allow this anyway. For the ones you can, to aid in the local production of updates to the REM, you partition the REM to the “relevant parts near the sensor”.
• The CAP theorem says you cannot simultaneously guarantee consistency, availability, and partition tolerance!
• What can you do? Demand EVENTUAL consistency, since the others are “non-negotiable”, and design to minimize the lag to consistency and the inevitable clashes that will arise in maintaining distributed temporal consistency.

Using the REM and some GR tools
• The FCC releases a Notice of Proposed Rulemaking for spectrum sharing of the 3.4 GHz bands now held exclusively in the USA by USG and in widespread use by DOD on airplanes, ships, etc.
• NTIA and FCC propose HUGE restriction zones around the coastlines of the USA where no sharing is to be allowed (removing 90+% of the available market!)
• Google and Federated Wireless (Tim and Bob) undertake a measurement campaign to show the exclusion zones are ridiculous. The results are now the basis for public comments submitted to the FCC and available for download at the FCC or Federated Wireless web site.

Interference Map
• To prove the exclusion was ridiculous we built a large DATABASE (REM) of signal measurements.
• Tim and Bob measured the radar strength
• Google and VT (not including Tim and Bob) measured the impact on extremely expensive LTE equipment.
• Tim and Bob showed that, for these purposes, a USRP B210 was as good as, and produced results consistent with, the expensive equipment for measuring the impact on LTE!
• So our used car salesman was telling us the TRUTH!
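Returning to the eventual-consistency point from the CAP discussion: one common way to let partitioned REM replicas converge is a last-writer-wins merge keyed on location and channel. The sketch below is an assumption-laden illustration, not the GREM design; the `RemEntry` fields, the `(grid_cell, channel)` key, and the tie-breaking rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RemEntry:
    """One hypothetical REM observation for a (grid_cell, channel) key."""
    timestamp: float   # time of measurement, seconds
    sensor_id: str     # which sensor reported it
    power_dbm: float   # measured signal power


def _order(entry):
    # Total order used to pick a winner: newest first, then a deterministic
    # tie-break so every replica makes the same choice.
    return (entry.timestamp, entry.sensor_id, entry.power_dbm)


def merge(local, remote):
    """Last-writer-wins merge of two replicas (dicts keyed by (cell, channel)).

    Taking the maximum under a total order is commutative, associative,
    and idempotent, so replicas converge regardless of exchange order.
    """
    merged = dict(local)
    for key, entry in remote.items():
        current = merged.get(key)
        if current is None or _order(entry) > _order(current):
            merged[key] = entry
    return merged


# Two sensors hold partial, partly conflicting views of the same area.
replica_a = {
    ("cell-12", 3550): RemEntry(100.0, "sensor-a", -92.0),
    ("cell-13", 3550): RemEntry(105.0, "sensor-a", -80.5),
}
replica_b = {
    ("cell-12", 3550): RemEntry(110.0, "sensor-b", -71.0),  # newer reading
    ("cell-14", 3560): RemEntry(108.0, "sensor-b", -99.0),
}

a_after = merge(replica_a, replica_b)
b_after = merge(replica_b, replica_a)
print(a_after == b_after)
```

Last-writer-wins is simple but discards the older of two conflicting readings, and it depends on reasonably synchronized sensor clocks. A system that must keep both readings, or reason about the "clashes" mentioned above, would need a richer merge rule.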