Intro to Scanners


The Front End

Figure: Source code → Front End → IR → Back End → Machine code, with Errors reported along the way

The purpose of the front end is to deal with the input language

• Perform a membership test: code ∈ source language?

• Is the program well-formed (semantically)?

• Build an IR version of the code for the rest of the compiler

The front end deals with form (syntax) & meaning (semantics)


The Front End

Figure: Source code → Scanner → tokens → Parser → IR, with Errors reported along the way

Implementation Strategy

                        Scanning                          Parsing
Specify Syntax          regular expressions               context-free grammars
Implement Recognizer    deterministic finite automaton    push-down automaton
Perform Work            actions on transitions in the automaton (both)


The Front End

Figure: stream of characters → Scanner (microsyntax) → stream of tokens → Parser (syntax) → IR + annotations, with Errors reported along the way

Why separate the scanner and the parser?

• Scanner classifies words

• Parser constructs grammatical derivations

• Parsing is harder and slower

Separation simplifies the implementation

• Scanners are simple

• Scanner leads to a faster, smaller parser

The scanner is the only pass that touches every character of the input.

A token is a pair <part of speech, lexeme>.


The Big Picture

The front end deals with syntax

• Language syntax is specified with parts of speech, not words

• Syntax checking matches parts of speech against a grammar

Simple expression grammar

1. goal → expr
2. expr → expr op term
3.      | term
4. term → number
5.      | id
6. op   → +
7.      | -

S = goal
T = { number, id, +, - }          (parts of speech)
N = { goal, expr, term, op }      (syntactic variables)
P = { 1, 2, 3, 4, 5, 6, 7 }

The scanner turns a stream of characters into a stream

of words, and classifies them with their part of speech.

3

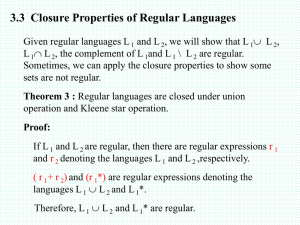

The Big Picture

Why study automatic scanner construction?

• Avoid writing scanners by hand

• Harness theory

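Figure: At design time, specifications of parts of speech & words, written as "regular expressions", go into a Scanner Generator, which produces tables or code. At compile time, the Scanner built from those tables or code reads source code and emits parts of speech & words, representing words as indices into a global table.

Goals: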

• To simplify specification & implementation of scanners

• To understand the underlying techniques and technologies


Regular Expressions

We constrain programming languages so that the spelling of a

word always implies its part of speech

The rules that impose this mapping form a regular language

Regular expressions (REs) describe regular languages

Regular Expression (over alphabet Σ)

• ε is an RE denoting the set {ε}

• If a is in Σ, then a is an RE denoting {a}

• If x and y are REs denoting L(x) and L(y) then

— x | y is an RE denoting L(x) ∪ L(y)

— xy is an RE denoting L(x)L(y)

— x* is an RE denoting L(x)*

Precedence is closure, then concatenation, then alternation
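Python's `re` module gives these three operators the same relative precedence, so it can illustrate the rule. This is a small sketch: `re` also offers many operators beyond the three defined here, and `PATTERN` is an arbitrary example of ours.

```python
import re

# Closure binds tightest, then concatenation, then alternation,
# so "ab*|c" is read as (a(b*)) | c, not as a(b*|c) or (ab)*|c.
PATTERN = "ab*|c"


def in_language(word: str) -> bool:
    """True if the entire word is in L(PATTERN), not just a prefix of it."""
    return re.fullmatch(PATTERN, word) is not None


for word in ["a", "abbb", "c", "abab", "ac"]:
    print(word, in_language(word))
```

Only `a`, `abbb`, and `c` are accepted. Writing `(ab)*|c` instead makes closure apply to `ab` as a unit, so `abab` would be accepted.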


Regular Expressions

How do these operators help?

Regular Expression (over alphabet Σ)

• ε is an RE denoting the set {ε}

• If a is in Σ, then a is an RE denoting {a}

    (the spelling of any specific word is an RE)

• If x and y are REs denoting L(x) and L(y) then

— x | y is an RE denoting L(x) ∪ L(y)

    (any finite list of words can be written as an RE: ( w0 | w1 | … | wn ))

— xy is an RE denoting L(x)L(y)

— x* is an RE denoting L(x)*

    (we can use concatenation & closure to write more concise patterns
    and to specify infinite sets that have finite descriptions)
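The claim that any finite list of words is an RE can be made concrete by building ( w0 | w1 | … | wn ) from a list. A minimal Python sketch; the keyword list and the helper name `words_to_re` are hypothetical, and `re.escape` keeps characters such as `+` or `.` literal:

```python
import re


def words_to_re(words: list) -> str:
    """Build the alternation ( w0 | w1 | ... | wn ) for a finite list of words."""
    return "(" + "|".join(re.escape(w) for w in words) + ")"


KEYWORDS = ["if", "then", "else", "while"]   # hypothetical word list
KEYWORD_RE = words_to_re(KEYWORDS)

print(KEYWORD_RE)                                         # (if|then|else|while)
print(re.fullmatch(KEYWORD_RE, "then") is not None)       # True
print(re.fullmatch(KEYWORD_RE, "thenx") is not None)      # False
```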


Examples of Regular Expressions

Identifiers:

Letter → (a|b|c| … |z|A|B|C| … |Z)

Digit → (0|1|2| … |9)

Identifier → Letter ( Letter | Digit )*

Numbers:

Integer → (+|-|ε) (0 | (1|2|3| … |9)(Digit *) )

Decimal → Integer '.' Digit *

Real → ( Integer | Decimal ) 'E' (+|-|ε) Digit *

Complex → '(' Real ',' Real ')'

Numbers can get much more complicated!

Quoted characters ('.', 'E', '(', ',', ')') stand for characters in the input stream; the original slide marked them by underlining.
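These REs translate almost directly into Python's `re` syntax. The sketch below is our own transcription, not part of the slides: character classes abbreviate the long alternations, `[+-]?` stands for (+|-|ε), and escaped characters are the quoted input-stream characters.

```python
import re

# [A-Za-z] is (a|b|...|z|A|B|...|Z); [0-9] is (0|1|...|9); [+-]? is (+|-|ε)
LETTER = "[A-Za-z]"
DIGIT = "[0-9]"
IDENTIFIER = f"{LETTER}({LETTER}|{DIGIT})*"
INTEGER = f"[+-]?(0|[1-9]{DIGIT}*)"
DECIMAL = rf"{INTEGER}\.{DIGIT}*"              # "\." is the literal decimal point
REAL = f"({INTEGER}|{DECIMAL})E[+-]?{DIGIT}*"
COMPLEX = rf"\({REAL},{REAL}\)"                # literal parentheses and comma


def matches(pattern: str, word: str) -> bool:
    """True if the whole word is in the language of pattern."""
    return re.fullmatch(pattern, word) is not None


for pattern, word in [(IDENTIFIER, "x27"), (IDENTIFIER, "27x"),
                      (INTEGER, "-0"), (INTEGER, "007"),
                      (REAL, "3.14E-2"), (COMPLEX, "(1.5E2,-3E0)")]:
    print(word, matches(pattern, word))
```

Note that `007` is rejected because Integer allows a leading 0 only as the entire number.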


Regular Expressions

We use regular expressions to specify the mapping of

words to parts of speech for the lexical analyzer

Using results from automata theory and theory of algorithms,

we can automate construction of recognizers from REs

We study REs and associated theory to automate scanner construction!

Fortunately, the automatic techniques lead to fast scanners, of the kind used in text editors, URL filtering software, …


Example

Consider the problem of recognizing ILOC register names

Register → r (0|1|2| … | 9) (0|1|2| … | 9)*

• Allows registers of arbitrary number

• Requires at least one digit

RE corresponds to a recognizer (or DFA)

Recognizer for Register: S0 --r--> S1 --(0|1|2| … 9)--> S2, and S2 --(0|1|2| … 9)--> S2

Transitions on other inputs go to an error state, se


Example

(continued)

DFA operation

• Start in state S0 & make transitions on each input character

• DFA accepts a word x iff x leaves it in a final state (S2)


So,

• r17 takes it through s0, s1, s2 and accepts

• r takes it through s0, s1 and fails

• a takes it straight to se


Example

(continued)

To be useful, the recognizer must be converted into code

Char ← next character
State ← s0
while (Char ≠ EOF)
    State ← δ(State,Char)
    Char ← next character
if (State is a final state)
    then report success
    else report failure

Skeleton recognizer

δ       r      0,1,2,3,4,5,6,7,8,9    All others
s0      s1     se                     se
s1      se     s2                     se
s2      se     s2                     se
se      se     se                     se

Table encoding the RE

O(1) cost per character (or per transition)
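A direct transcription of the skeleton recognizer and its table into Python might look like the sketch below. The dictionary-per-state encoding and the name `recognize` are our choices; a missing entry stands for the "All others" column and the se row, and the `for` loop plays the part of `while (Char ≠ EOF)`.

```python
DIGITS = "0123456789"

# δ for Register → r (0|1|...|9)(0|1|...|9)*, one dictionary per state.
# Any (state, char) pair not listed goes to the error state se.
DELTA = {
    "s0": {"r": "s1"},
    "s1": {d: "s2" for d in DIGITS},
    "s2": {d: "s2" for d in DIGITS},
}
FINAL = {"s2"}


def recognize(word: str) -> bool:
    state = "s0"
    for char in word:                                  # one O(1) lookup per character
        state = DELTA.get(state, {}).get(char, "se")   # State ← δ(State,Char)
    return state in FINAL                              # success iff in a final state


for word in ["r17", "r", "a"]:
    print(word, recognize(word))
```

This reproduces the three cases from the previous slide: `r17` is accepted, `r` fails in s1, and `a` goes straight to se.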


Example

(continued)

We can add “actions” to each transition

Char ← next character
State ← s0
while (Char ≠ EOF)
    Next ← δ(State,Char)
    Act ← α(State,Char)        // α: the action table
    perform action Act
    State ← Next
    Char ← next character
if (State is a final state)
    then report success
    else report failure

Skeleton recognizer

δ, α    r             0,1,2,3,4,5,6,7,8,9    All others
s0      s1, start     se, error              se, error
s1      se, error     s2, add                se, error
s2      se, error     s2, add                se, error
se      se, error     se, error              se, error

Table encoding RE

Typical action is to capture the lexeme
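The table's actions can be made concrete in a small sketch. The slide does not define `start` and `add` precisely; reading `start` as "begin a new lexeme with this character" and `add` as "append this character" is our assumption, as are the names `column` and `scan_register`. Unlike the skeleton, the sketch stops at the first error, since se never leads back to a final state.

```python
from typing import Optional

DIGITS = "0123456789"


def column(char: str) -> str:
    """Pick the table column for char: 'r', a digit, or all others."""
    if char == "r":
        return "r"
    return "digit" if char in DIGITS else "other"


# (state, column) -> (next state, action), mirroring the table above.
# Every pair not listed is (se, error).
TABLE = {
    ("s0", "r"): ("s1", "start"),
    ("s1", "digit"): ("s2", "add"),
    ("s2", "digit"): ("s2", "add"),
}
FINAL = {"s2"}


def scan_register(word: str) -> Optional[str]:
    """Return the captured lexeme if word is a register name, else None."""
    state, lexeme = "s0", ""
    for char in word:
        next_state, action = TABLE.get((state, column(char)), ("se", "error"))
        if action == "start":        # assumed meaning: begin a new lexeme
            lexeme = char
        elif action == "add":        # append the character to the lexeme
            lexeme += char
        else:                        # error: se cannot reach a final state
            return None
        state = next_state
    return lexeme if state in FINAL else None


print(scan_register("r17"))   # r17
```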


What if we need a tighter specification?

r Digit Digit* allows arbitrary numbers

• Accepts r00000

• Accepts r99999

• What if we want to limit it to r0 through r31?

Write a tighter regular expression

— Register → r ( (0|1|2) (Digit | ε) | (4|5|6|7|8|9) | (3|30|31) )

— Register → r0|r1|r2| … |r31|r00|r01|r02| … |r09

Produces a more complex DFA

• DFA has more states

• DFA has same cost per transition (or per character)

• DFA has same basic implementation

More states implies a larger table. The larger table might have mattered when computers had 128 KB or 640 KB of RAM. Today, when a cell phone has megabytes and a laptop has gigabytes, the concern seems outdated.
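The two tighter REs can be checked against each other, and against the loose one, by brute force. A small Python sketch, assuming it is enough to enumerate "r" followed by at most three digits; `(Digit | ε)` is written with an empty second alternative, and the names are ours.

```python
import re
from itertools import product

DIGIT = "(0|1|2|3|4|5|6|7|8|9)"
LOOSE = f"r{DIGIT}{DIGIT}*"
TIGHT = f"r((0|1|2)({DIGIT}|)|(4|5|6|7|8|9)|(3|30|31))"
ENUMERATED = "|".join([f"r{n}" for n in range(32)] + [f"r0{d}" for d in range(10)])


def accepted(pattern: str, max_digits: int = 3) -> set:
    """Every string r d1...dk with k <= max_digits that pattern matches in full."""
    words = ("r" + "".join(ds)
             for k in range(max_digits + 1)
             for ds in product("0123456789", repeat=k))
    return {w for w in words if re.fullmatch(pattern, w)}


print(len(accepted(LOOSE)))                      # 1110
print(len(accepted(TIGHT)))                      # 42
print(accepted(TIGHT) == accepted(ENUMERATED))   # True
```

Both tighter REs accept r0 through r31 plus r00 through r09 (42 names), while the loose RE accepts every one of the 1110 candidates.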

Tighter register specification

(continued)

The DFA for

Register → r ( (0|1|2) (Digit | ε) | (4|5|6|7|8|9) | (3|30|31) )

Figure: S0 --r--> S1; S1 --0,1,2--> S2 --(0|1|2| … 9)--> S3; S1 --3--> S5 --0,1--> S6; S1 --4,5,6,7,8,9--> S4

• Accepts a more constrained set of register names

• Same set of actions, more states


Tighter register specification

(continued)

δ       r      0,1    2      3      4-9    All others
s0      s1     se     se     se     se     se
s1      se     s2     s2     s5     s4     se
s2      se     s3     s3     s3     s3     se
s3      se     se     se     se     se     se
s4      se     se     se     se     se     se
s5      se     s6     se     se     se     se
s6      se     se     se     se     se     se
se      se     se     se     se     se     se

Table encoding RE for the tighter register specification
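The table can be cross-checked against the tighter RE mechanically. In this Python sketch the column map, the names, and the set of final states are ours; the final states follow from the RE (S2 for r0 to r2, S3 for r00 to r29, S4 for r4 to r9, S5 for r3, S6 for r30 and r31), since the figure does not mark them.

```python
import re
from itertools import product

# Column index for each character: r | 0,1 | 2 | 3 | 4-9 | all others
COLUMN = {"r": 0, "0": 1, "1": 1, "2": 2, "3": 3}
COLUMN.update({d: 4 for d in "456789"})
OTHER = 5

SE = "se"
DELTA = {
    #      r     0,1   2     3     4-9   others
    "s0": ["s1", SE,   SE,   SE,   SE,   SE],
    "s1": [SE,   "s2", "s2", "s5", "s4", SE],
    "s2": [SE,   "s3", "s3", "s3", "s3", SE],
    "s3": [SE] * 6,
    "s4": [SE] * 6,
    "s5": [SE,   "s6", SE,   SE,   SE,   SE],
    "s6": [SE] * 6,
    SE:   [SE] * 6,
}
FINAL = {"s2", "s3", "s4", "s5", "s6"}


def recognize(word: str) -> bool:
    state = "s0"
    for char in word:
        state = DELTA[state][COLUMN.get(char, OTHER)]
    return state in FINAL


TIGHT_RE = "r((0|1|2)([0-9]|)|(4|5|6|7|8|9)|(3|30|31))"


def agrees_with_re(max_digits: int = 3) -> bool:
    """Compare the table with the RE on every r followed by up to max_digits digits."""
    for k in range(max_digits + 1):
        for digits in product("0123456789", repeat=k):
            word = "r" + "".join(digits)
            if recognize(word) != (re.fullmatch(TIGHT_RE, word) is not None):
                return False
    return True


print(agrees_with_re())   # True
```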


Tighter register specification

(continued)

State    r          0,1       2         3         4,5,6     7,8,9     other
0        1, start   e         e         e         e         e         e
1        e          2, add    2, add    5, add    4, add    4, add    e
2        e          3, add    3, add    3, add    3, add    3, add    exit
3,4      e          e         e         e         e         e         exit
5        e          6, add    e         e         e         e         exit
6        e          e         e         e         e         e         exit
e        e          e         e         e         e         e         e

Each entry gives the next state and the action; e is the error state.


Table-Driven Scanners

Common strategy is to simulate DFA execution

• Table + Skeleton Scanner

— So far, we have used a simplified skeleton

    state ← s0;
    while (state ≠ exit) do
        char ← NextChar( )          // read next character
        state ← δ(state,char);      // take the transition

• In practice, the skeleton is more complex

— Character classification for table compression

— Building the lexeme

— Recognizing subexpressions

Figure: recognizer for r Digit Digit*, from start state s0 to final state sf, with transitions on r and 0…9

Practice is to combine all the REs into one DFA

Must recognize individual words without hitting EOF


Table-Driven Scanners

Character Classification

• Group together characters by their actions in the DFA

— Combine identical columns in the transition table, δ

— Indexing by class shrinks the table

    state ← s0;
    while (state ≠ exit) do
        char ← NextChar( )          // read next character
        cat ← CharCat(char)         // classify character
        state ← δ(state,cat)        // take the transition

• Idea works well in ASCII (or EBCDIC)

— compact, byte-oriented character sets

— limited range of values

• Not clear how it extends to larger character sets (Unicode)
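Character classification for the register recognizer can be sketched in Python with a 256-entry byte table. The class names and the integer state encoding are hypothetical; unlike the skeleton, this sketch reads the whole input instead of stopping at an exit state.

```python
# Character classes for the register recognizer (hypothetical encoding).
REG, DIGIT, OTHER = 0, 1, 2

# CharCat: one entry per byte value, so classifying a character is one lookup.
CHAR_CAT = bytearray([OTHER] * 256)
CHAR_CAT[ord("r")] = REG
for code in b"0123456789":          # iterating over bytes yields ints
    CHAR_CAT[code] = DIGIT

S0, S1, S2, SE = 0, 1, 2, 3
# δ indexed by [state][class]: 4 x 3 entries instead of 4 x 256.
DELTA = [
    [S1, SE, SE],  # s0
    [SE, S2, SE],  # s1
    [SE, S2, SE],  # s2
    [SE, SE, SE],  # se
]


def recognize(data: bytes) -> bool:
    state = S0
    for byte in data:
        cat = CHAR_CAT[byte]        # classify character
        state = DELTA[state][cat]   # take the transition
    return state == S2


print(recognize(b"r17"))   # True
```

Every byte above 127 lands in OTHER here, so a non-ASCII digit encoded in UTF-8 is rejected; handling such input would need a different classifier.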


Table-Driven Scanners

Building the Lexeme

• Scanner produces syntactic category (part of speech)

— Most applications want the lexeme (word), too

    state ← s0
    lexeme ← empty string
    while (state ≠ exit) do
        char ← NextChar( )          // read next character
        lexeme ← lexeme + char      // concatenate onto lexeme
        cat ← CharCat(char)         // classify character
        state ← δ(state,cat)        // take the transition

• This problem is trivial

— Save the characters


Table-Driven Scanners

Choosing a Category from an Ambiguous RE

• We want one DFA, so we combine all the REs into one

— Some strings may fit the RE for more than 1 syntactic category

    Keywords versus general identifiers

    Would like to encode them into the RE & recognize them

— Scanner must choose a category for ambiguous final states

    Classic answer: specify priority by order of REs (return 1st)

Alternate Implementation Strategy (Quite popular)

• Build hash table of keywords & fold keywords into identifiers

• Preload keywords into hash table

• Makes sense if

— Scanner will enter all identifiers in the table

— Scanner is hand coded

• Otherwise, let the DFA handle them (O(1) cost per character)

A separate keyword table can make matters worse.
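A minimal sketch of the alternate strategy in Python, assuming a hand-coded scanner for Letter ( Letter | Digit )* and a hypothetical keyword set: the scanner recognizes every identifier-shaped word, then one lookup in the preloaded table decides whether it is a keyword.

```python
from typing import List, Tuple

# Preloaded keyword table (hypothetical keywords); everything else is an identifier.
KEYWORDS = {"if": "IF", "then": "THEN", "else": "ELSE", "while": "WHILE"}


def is_letter(ch: str) -> bool:
    return ch.isascii() and ch.isalpha()


def is_letter_or_digit(ch: str) -> bool:
    return ch.isascii() and ch.isalnum()


def scan_words(text: str) -> List[Tuple[str, str]]:
    """Recognize Letter (Letter | Digit)* and classify each word with one
    hash lookup, so keywords need no paths of their own in the DFA."""
    tokens, i = [], 0
    while i < len(text):
        if text[i].isspace():
            i += 1
            continue
        if not is_letter(text[i]):
            raise ValueError(f"unexpected character {text[i]!r} at {i}")
        j = i + 1
        while j < len(text) and is_letter_or_digit(text[j]):
            j += 1                               # longest identifier-shaped word
        lexeme = text[i:j]
        tokens.append((KEYWORDS.get(lexeme, "IDENTIFIER"), lexeme))
        i = j
    return tokens


print(scan_words("if x1 then elsewhere"))
```

Because the scanner always takes the longest word, `elsewhere` comes out as one identifier rather than the keyword `else` followed by `where`.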


Table-Driven Scanners

Scanning a Stream of Words

• Real scanners do not look for 1 word per input stream

— Want scanner to find all the words in the input stream, in order

— Want scanner to return one word at a time

— Syntactic Solution: can insist on delimiters

Blank, tab, punctuation, …

Do you want to force blanks everywhere, even in expressions?

— Implementation solution

Run DFA to error or EOF, back up to accepting state

• Need the scanner to return token, not boolean

— Token is < Part of Speech, lexeme > pair

— Use a map from DFA’s state to Part of Speech (PoS)


Table-Driven Scanners

Handling a Stream of Words

// recognize words
state ← s0
lexeme ← empty string
clear stack
push (bad)
while (state ≠ se) do
    char ← NextChar( )
    lexeme ← lexeme + char
    if state ∈ SA
        then clear stack
    push (state)
    cat ← CharCat(char)
    state ← δ(state,cat)
end;

// clean up final state
while (state ∉ SA and state ≠ bad) do
    state ← pop()
    truncate lexeme
    roll back the input one character
end;

// report the results
if (state ∈ SA)
    then return <PoS(state), lexeme>
    else return invalid

Need a clever buffering scheme, such as double buffering, to support rollback


Avoiding Excess Rollback

• Some REs can produce quadratic rollback

— Consider ab | (ab)* c and its DFA

— Input “ababababc”

s0, s1, s3, s4, s3, s4, s3, s4, s5

— Input “abababab”

    s0, s1, s3, s4, s3, s4, s3, s4, rollback 6 characters

    s0, s1, s3, s4, s3, s4, rollback 4 characters

    s0, s1, s3, s4, rollback 2 characters

    s0, s1, s3

    Not too pretty

Figure: DFA for ab | (ab)* c, with states s0 through s5 and transitions on a, b, and c

• This behavior is preventable

— Have the scanner remember paths that fail on particular inputs

— Simple modification creates the “maximal munch scanner”


Maximal Munch Scanner

// recognize words
state ← s0
lexeme ← empty string
clear stack
push (bad,bad)
while (state ≠ se) do
    char ← NextChar( )
    InputPos ← InputPos + 1
    lexeme ← lexeme + char
    if Failed[state,InputPos]
        then break;
    if state ∈ SA
        then clear stack
    push (state,InputPos)
    cat ← CharCat(char)
    state ← δ(state,cat)
end

// clean up final state
while (state ∉ SA and state ≠ bad) do
    Failed[state,InputPos] ← true
    〈state,InputPos〉 ← pop()
    truncate lexeme
    roll back the input one character
end

// report the results
if (state ∈ SA)
    then return <PoS(state), lexeme>
    else return invalid

InitializeScanner()
    InputPos ← 0
    for each state s in the DFA do
        for i ← 0 to |input| do
            Failed[s,i] ← false
        end;
    end;


Maximal Munch Scanner

• Uses a bit array Failed to track dead-end paths

— Initialize both InputPos & Failed in InitializeScanner()

— Failed requires space ∝ |input stream|

Can reduce the space requirement with clever implementation

• Avoids quadratic rollback

— Produces an efficient scanner

— Can your favorite language cause quadratic rollback?

If so, the solution is inexpensive

If not, you might encounter the problem in other applications of these technologies

Thomas Reps, “‘Maximal munch’ tokenization in linear time”, ACM TOPLAS, 20(2), March 1998, pp. 259-273.
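The Failed-table idea can be sketched in Python on the RE ab | (ab)* c. Here a set of (state, position) pairs stands in for the Failed bit array, so space grows only with the pairs actually marked; the DFA, its state names, and the position convention (characters consumed so far) are our own assumptions, not the slide's.

```python
from typing import List, Optional, Set, Tuple

# Hypothetical DFA for ab | (ab)*c, with our own state names:
#   q0 -a-> q1 -b-> q2 (accepts "ab"); q2 -a-> q3 -b-> q4 -a-> q3;
#   q0, q2, q4 -c-> q5 (accepts (ab)*c)
DELTA = {
    ("q0", "a"): "q1", ("q1", "b"): "q2", ("q2", "a"): "q3",
    ("q3", "b"): "q4", ("q4", "a"): "q3",
    ("q0", "c"): "q5", ("q2", "c"): "q5", ("q4", "c"): "q5",
}
CATEGORY = {"q2": "ab", "q5": "(ab)*c"}
ERROR, BAD = "se", "bad"
Token = Tuple[str, str]


def next_word(text: str, start: int,
              failed: Set[Tuple[str, int]]) -> Tuple[Optional[Token], int, int]:
    """Rollback scanner that also remembers (state, position) dead ends."""
    state, pos = "q0", start
    stack = [(BAD, start)]
    while state != ERROR:
        if (state, pos) in failed:
            break                          # known dead end: stop instead of rescanning
        if state in CATEGORY:
            stack.clear()
        stack.append((state, pos))
        char = text[pos] if pos < len(text) else None   # None plays the role of EOF
        if char is not None:
            pos += 1
        state = DELTA.get((state, char), ERROR)
    end = pos
    while state not in CATEGORY and state != BAD:
        state, pos = stack.pop()
        if state not in CATEGORY and state != BAD:
            failed.add((state, pos))       # no accepting state is reachable from here
    token = (CATEGORY[state], text[start:pos]) if state in CATEGORY else None
    return token, pos, end - pos


def scan(text: str) -> Tuple[List[Token], int]:
    failed: Set[Tuple[str, int]] = set()   # stands in for the Failed bit array
    tokens, pos, total = [], 0, 0
    while pos < len(text):
        token, pos, rolled_back = next_word(text, pos, failed)
        total += rolled_back
        if token is None:
            raise ValueError(f"invalid word at position {pos}")
        tokens.append(token)
    return tokens, total


print(scan("abababab")[1])   # 8 (plain rollback needs 12 on this input)
```

On "ab" repeated n times (n ≥ 2), this version rolls back 2(n-1) + (n-2) characters in total instead of n(n-1), so the rollback cost becomes linear.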

Table-Driven Versus Direct-Coded Scanners

Table-driven scanners make heavy use of indexing

• Read the next character

• Classify it                 (index)

• Find the next state         (index)

• Branch back to the top

    state ← s0;
    while (state ≠ exit) do
        char ← NextChar( )
        cat ← CharCat(char)
        state ← δ(state,cat);

Alternative strategy: direct coding

• Encode state in the program counter

— Each state is a separate piece of code

• Do transition tests locally and directly branch

• Generate ugly, spaghetti-like code

• More efficient than table-driven strategy

— Fewer memory operations, might have more branches

— Code locality as opposed to random access in δ


Table-Driven Versus Direct-Coded Scanners

Overhead of Table Lookup

• Each lookup in CharCat or δ involves an address calculation and a memory operation

— CharCat(char) becomes

    @CharCat0 + char × w           (w is sizeof(el’t of CharCat))

— δ(state,cat) becomes

    @δ0 + (state × cols + cat) × w           (cols is # of columns in δ, w is sizeof(el’t of δ))

• The references to CharCat and δ expand into multiple ops

• Fair amount of overhead work per character

• Avoid the table lookups and the scanner will run faster
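Python hides machine addresses, but the arithmetic for δ(state,cat) can be made visible with a flat, row-major array and byte offsets. In this sketch the 2-byte element type, the integer state encoding, and the name `next_state` are our assumptions; `struct.unpack_from` plays the part of the memory operation.

```python
import struct
from array import array

COLS = 3                                   # classes: r, digit, other
S0, S1, S2, SE = 0, 1, 2, 3
# δ for the register recognizer, flattened row-major into 2-byte unsigned ints.
DELTA = array("H", [S1, SE, SE,    # s0
                    SE, S2, SE,    # s1
                    SE, S2, SE,    # s2
                    SE, SE, SE])   # se
W = DELTA.itemsize                         # w = sizeof(element of δ)


def next_state(state: int, cat: int) -> int:
    # Address calculation: offset from @δ0 is (state × cols + cat) × w
    offset = (state * COLS + cat) * W
    # Memory operation: load one element at that byte offset
    return struct.unpack_from("H", DELTA, offset)[0]


print(next_state(S0, 0), next_state(S1, 1))   # 1 2
```

Each transition costs a multiply, an add, another multiply, and a load, which is the per-character overhead that direct coding tries to avoid.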


Building Faster Scanners from the DFA

A direct-coded recognizer for r Digit Digit*

start:  accept ← se
        lexeme ← “”
        count ← 0
        goto s0

s0:     char ← NextChar
        lexeme ← lexeme + char
        count++
        if (char = ‘r’)
            then goto s1
            else goto sout

s1:     char ← NextChar
        lexeme ← lexeme + char
        count++
        if (‘0’ ≤ char ≤ ‘9’)
            then goto s2
            else goto sout

s2:     char ← NextChar
        lexeme ← lexeme + char
        count ← 1            // one character read past the accepting state
        accept ← s2
        if (‘0’ ≤ char ≤ ‘9’)
            then goto s2
            else goto sout

sout:   if (accept ≠ se)
            then begin
                for i ← 1 to count
                    RollBack()
                report success
            end
            else report failure

• Fewer (complex) memory operations

• No character classifier

• Use multiple strategies for test & branch
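Python has no goto, so this sketch only approximates direct coding: each state is its own stretch of straight-line code, and the position in the function encodes the current state. The function name and return convention are ours, and peeking at `text[i]` replaces the NextChar/RollBack pair, so no count is needed.

```python
from typing import Tuple


def recognize_register(text: str) -> Tuple[bool, int]:
    """Direct-coded recognizer for r Digit Digit*.

    Returns (accepted, length of the longest register prefix of text).
    There is no transition table and no character classifier.
    """
    i = 0
    # s0: the only legal character is 'r'
    if i < len(text) and text[i] == "r":
        i += 1
    else:
        return False, 0
    # s1: exactly one digit is required
    if i < len(text) and "0" <= text[i] <= "9":
        i += 1
    else:
        return False, 0
    # s2: accepting; stay here while digits continue
    while i < len(text) and "0" <= text[i] <= "9":
        i += 1
    # The character at text[i] was only inspected, so nothing must be rolled back.
    return True, i


print(recognize_register("r17+x"))   # (True, 3)
```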

Building Faster Scanners from the DFA

If the end-of-state test is complex (e.g., many cases), the scanner generator should consider other schemes

• Table lookup (with classification?)

• Binary search

Direct coding the maximal munch scanner is easy, too.


What About Hand-Coded Scanners?

Many (most?) modern compilers use hand-coded scanners

• Starting from a DFA simplifies design & understanding

• Avoiding the straitjacket of a tool allows flexibility

— Computing the value of an integer

In LEX or FLEX, many folks use sscanf() & touch chars many times

Can use old assembly trick and compute value as it appears

— Combine similar states (serial or parallel)

• Scanners are fun to write

— Compact, comprehensible, easy to debug, …

— Don’t get too cute (e.g., perfect hashing for keywords)
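Computing an integer's value as its digits are recognized can be sketched as follows. This is one common way to realize the idea (multiply the running value by 10 and add each digit), not necessarily the exact assembly trick the slide has in mind; the function name and return convention are ours.

```python
from typing import Tuple


def scan_integer(text: str, pos: int) -> Tuple[int, int]:
    """Scan a run of decimal digits starting at text[pos].

    The value is accumulated as each digit is recognized, so the characters
    are not touched a second time by a conversion routine such as sscanf().
    Returns (value, position just past the last digit).
    """
    value = 0
    while pos < len(text) and "0" <= text[pos] <= "9":
        value = value * 10 + (ord(text[pos]) - ord("0"))
        pos += 1
    return value, pos


print(scan_integer("x = 4096;", 4))   # (4096, 8)
```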


Building Scanners

The point

• All this technology lets us automate scanner construction

• Implementer writes down the regular expressions

• Scanner generator builds NFA, DFA, minimal DFA, and then

writes out the (table-driven or direct-coded) code

• This reliably produces fast, robust scanners

For most modern language features, this works

• You should think twice before introducing a feature that

defeats a DFA-based scanner

• The ones we’ve seen (e.g., insignificant blanks, non-reserved

keywords) have not proven particularly useful or long lasting
