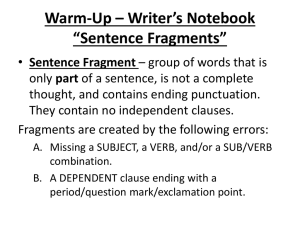

What is “fragment”?

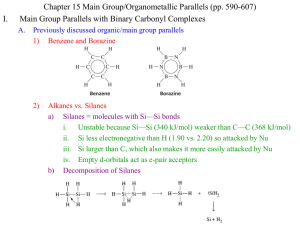


Learning to Segment
Authors: Eran Borenstein and Shimon Ullman
Presented by: Dan Wang
Some slides are from Ira Kemelmacher-Shlizerman and Sharon Alpert.
April 13, 2015

Eran Borenstein
• Position
  – Computer vision director at Videosurf (a company).
  – Leading the development of the face-detection and recognition technology.
  – Visiting scientist at the Division of Applied Mathematics, Brown University.
• Academic background
  – Post-doctoral Fellow, MSRI and EECS, UC Berkeley.
  – Ph.D. & M.Sc., Department of Computer Science and Applied Mathematics, Weizmann Institute of Science.
  – B.Sc., Electrical Engineering Department, Technion - Israel Institute of Technology.
• Publications
  – 2 ECCV papers & 2 CVPR papers
• Main interests
  – Machine and human vision, in particular object classification and recognition, figure-ground segmentation, the interaction between top-down and bottom-up visual processes, and other problems related to perceptual organization.
• Website: http://www.dam.brown.edu/people/eranb/

Shimon Ullman
• Current position
  – Samy and Ruth Cohn Professor of Computer Science
  – Head, Department of Computer Science and Applied Mathematics
  – The Weizmann Institute of Science, Rehovot, Israel
• Academic background
  – 1970-1973 Hebrew University, Jerusalem: B.Sc. in Mathematics, Physics and Biology
  – 1973-1977 M.I.T., Cambridge, MA: Ph.D. in Electrical Engineering and Computer Science
• Previous positions
  – 1981-1985 M.I.T., Associate Professor
  – 1981-1985 Weizmann Inst., Associate Professor
  – 1986-1993 M.I.T., Professor
  – 1986-present Weizmann Inst., Professor
• Papers
  – 50+ papers in CVPR, ICML, NIPS, etc.
• Website: http://www.wisdom.weizmann.ac.il/~shimon/

Abstract
• We describe a new approach for learning to perform class-based segmentation using only unsegmented training examples.
• As in previous methods, we first use training images to extract fragments that contain common object parts.
  We then show how these parts can be segmented into their figure and ground regions in an automatic learning process. This is in contrast with previous approaches, which required complete manual segmentation of the objects in the training examples.
• The figure-ground learning combines top-down and bottom-up processes and proceeds in two stages: an initial approximation followed by iterative refinement. The initial approximation produces a figure-ground labeling of individual image fragments using the unsegmented training images. It is based on the fact that, on average, points inside the object are covered by more fragments than points outside it. The initial labeling is then improved by an iterative refinement process, which converges in up to three steps. At each step, the figure-ground labeling of individual fragments produces a segmentation of complete objects in the training images, which in turn induces a refined figure-ground labeling of the individual fragments.
• In this manner, we obtain a scheme that starts from unsegmented training images, learns the figure-ground labeling of image fragments, and then uses this labeling to segment novel images. Our experiments demonstrate that the learned segmentation achieves the same level of accuracy as methods using manual segmentation of training images, producing an automatic and robust top-down segmentation.
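The initial approximation rests on the degree of cover: regions of a fragment that other detected fragments overlap more often are more likely to be figure. The Python sketch below is a minimal illustration under stated assumptions, not the authors' code. It assumes each fragment has already been split into regions by a bottom-up segmentation, and that for every hit of the fragment we know how many other detected fragments cover each pixel (a hypothetical input format). It computes the degree of cover r_j of each region and the nested candidate figure parts R_1 ∪ … ∪ R_k obtained by taking regions in order of decreasing r_j; choosing among those candidates (the slides combine this with border consistency) is not shown.

```python
from collections import defaultdict


def degree_of_cover(region_labels, cover_maps):
    """Degree of cover r_j for each region R_j of one fragment.

    region_labels: 2-D list; region_labels[y][x] is the index j of the region
        (from a bottom-up segmentation of the fragment) containing pixel (x, y).
    cover_maps: list of 2-D lists, one per fragment hit; cover_maps[i][y][x] is
        the number of other detected fragments covering that pixel in hit i.
        (This input format is an assumption made for the sketch.)
    Returns {j: r_j}: the cover averaged over the pixels of R_j and over all hits.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for cover in cover_maps:
        for label_row, cover_row in zip(region_labels, cover):
            for j, c in zip(label_row, cover_row):
                totals[j] += c
                counts[j] += 1
    return {j: totals[j] / counts[j] for j in totals}


def candidate_figure_parts(r):
    """Nested candidates R_1 ∪ ... ∪ R_k after sorting so that r_1 >= ... >= r_n.

    Each candidate figure part is returned as the set of region indices it contains.
    """
    order = sorted(r, key=r.get, reverse=True)
    return [set(order[:k]) for k in range(1, len(order) + 1)]
```

For a fragment whose left half (region 0) is covered by 3 and 5 other fragments in its two hits and whose right half (region 1) is covered once in each, region 0 gets r = 4 and region 1 gets r = 1, so the first candidate figure part is the left half alone.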
Background
• Goal of figure-ground segmentation:
  – Identify an object in the image and separate it from the background.
• Bottom-up approach:
  – First segment the image into regions, relying on image-based criteria:
    • Gray level
    • Texture uniformity of image regions
    • Smoothness and continuity of bounding contours
    • …
  – Then identify the image regions that correspond to a single object.

Problems (1/2)
[Figure: an input image and its bottom-up segmentation at Scale I, Scale II and Scale III]

Problems (2/2)
• Shortcomings of the bottom-up approach:
  – An object may be segmented into multiple regions.
  – Some of the regions may incorrectly merge the object with its background.
• Complementary approach: top-down segmentation, using prior knowledge of the object
  – Shape, color, texture…

Basic idea in this paper
• Introduce the shape of the specific class into the segmentation (top-down).
[Figure: from training images, learn how to segment this type of image; result: a binary background/foreground segmentation]

Basic idea in this paper
[Figure: basic framework]

Previous works
• Top-down approaches
  – Deformable templates
  – Active shape models (ASM)
  – Active contours (snakes)
• All of these top-down segmentation schemes require extensive manual intervention.
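The bottom-up approach described above groups pixels by image-based criteria such as gray-level uniformity. As a toy illustration only (not the bottom-up algorithm used in the paper), the Python sketch below quantizes gray levels into a few bins and labels 4-connected pixels that fall into the same bin; the bin count `levels` is a hypothetical parameter.

```python
from collections import deque


def gray_level_regions(image, levels=4, max_value=255):
    """Label 4-connected regions of uniform quantized gray level.

    image: 2-D list of gray values in [0, max_value].
    levels: number of quantization bins (a hypothetical parameter for this sketch).
    Returns a 2-D list of region indices 0..n-1 with the same shape as image.
    """
    h, w = len(image), len(image[0])
    # Quantize so that small gray-level differences fall into the same bin.
    q = [[min(v * levels // (max_value + 1), levels - 1) for v in row] for row in image]
    labels = [[-1] * w for _ in range(h)]
    next_label = 0
    for y0 in range(h):
        for x0 in range(w):
            if labels[y0][x0] != -1:
                continue
            # Breadth-first flood fill from an unlabeled seed pixel.
            labels[y0][x0] = next_label
            queue = deque([(y0, x0)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w and labels[ny][nx] == -1
                            and q[ny][nx] == q[y][x]):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            next_label += 1
    return labels
```

On a small image such as `[[10, 20, 200, 210], [15, 25, 220, 230], [12, 200, 205, 30]]`, the dark pixel in the bottom-right corner has the same quantized gray level as the dark block on the left but is not connected to it, so it becomes a separate region: a miniature version of the "object split into multiple regions" problem.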
Main contributions of this paper
• Top-down approach
• Automatic fragment labeling

Organization
• Introduction
• Constructing a fragment set (feature extraction)
• Learning the fragments' figure-ground segmentation
• Image segmentation by covering fragments
• Improving the figure-ground labeling of fragments
• Results

What is “fragment”?
• Fragments represent the local structure of common object parts (such as the nose, leg, or neck region for the class of horses).
• Fragments are used as shape primitives.
• Fragment extraction (F.E.)
  – Uses unsegmented class and non-class training images to extract and store image fragments.
• Fragment labeling (F.L.)
  – In the past, this stage was performed manually.
  – This paper learns the segmentation scheme from unsegmented training images.
• Fragment detection (F.D.)

[Figure: top-down pipeline. Class and non-class images → randomly collect a large set of candidate fragments → select a subset of informative fragments → figure-background labeling → detection and segmentation]
E. Borenstein, S. Ullman. Learning to Segment. ECCV (3) 2004: 315-328

Constructing a fragment set (F.E.)
[Figure: from class and non-class images, randomly collect a large set of candidate fragments, then select a subset of informative fragments]
Ullman, S., Sali, E., Vidal-Naquet, M.: A fragment based approach to object representation and classification. In: Proc. of 4th International Workshop on Visual Form, Capri, Italy (2001) 85–100

Selecting Informative Fragments
• Informative = likely to be detected in class images compared with non-class images.
• Fragments are added so as to maximize the gain in mutual information.
• The selected fragments are:
  – Highly overlapping
  – Well distributed over the figure
• The process produces a set of fragments that are more likely to be detected in class images than in non-class images.
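Selection by mutual information can be sketched as follows. This Python version represents each candidate fragment by a binary vector recording in which training images it was detected, and estimates mutual information from counts. The greedy rule used here (take the most informative fragment first, then repeatedly add the fragment whose smallest information gain over any already-selected fragment is largest) is a max-min variant assumed for illustration; the exact criterion of the cited fragment-selection work may differ, and detection itself is not modeled.

```python
from collections import Counter
from math import log2


def mutual_information(xs, ys):
    """I(X; Y) in bits, estimated from paired samples of discrete variables."""
    n = len(xs)
    pxy = Counter(zip(xs, ys))
    px = Counter(xs)
    py = Counter(ys)
    # p(x,y) * log2(p(x,y) / (p(x) p(y))), written with raw counts.
    return sum((c / n) * log2(c * n / (px[x] * py[y])) for (x, y), c in pxy.items())


def select_fragments(detections, labels, k):
    """Greedy max-min selection of up to k informative fragments.

    detections: dict name -> list of 0/1 (was the fragment detected in each image).
    labels: list of 0/1 per image (1 = class image, 0 = non-class image).
    """
    def gain(f, g):
        # Extra information about the class that f adds on top of g:
        # I(C; F, G) - I(C; G).
        joint = list(zip(detections[f], detections[g]))
        return mutual_information(joint, labels) - mutual_information(detections[g], labels)

    remaining = set(detections)
    first = max(sorted(remaining), key=lambda f: mutual_information(detections[f], labels))
    selected = [first]
    remaining.remove(first)
    while remaining and len(selected) < k:
        # Pick the fragment whose worst-case gain over the selected set is largest.
        best = max(sorted(remaining), key=lambda f: min(gain(f, g) for g in selected))
        selected.append(best)
        remaining.remove(best)
    return selected
```

The max-min rule penalizes redundancy: a fragment that fires on the same images as an already-selected one adds little information about the class, even if it is individually informative.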
Learning the fragments' figure-ground segmentation
• The learning relies on two main criteria:
  – The degree of cover
  – Border consistency

Segmenting Informative Fragments (degree of cover)
[Figure: informative fragments → bottom-up segmentation → degree of cover → segmentation?]

Formulation
• Fragment: $F$
• Different regions in the fragment: $R_1, R_2, \dots, R_n$
• $r_j$ is the degree of cover of region $R_j$. The higher $r_j$, the more likely $R_j$ is to be a figure region.
• Sort the regions so that $r_1 \ge r_2 \ge \dots \ge r_n$. The figure part is then defined as a union of the top-ranked regions: $P_k = \bigcup_{j=1}^{k} R_j$

Segmenting Informative Fragments (border consistency)
[Figure: a fragment, its hit locations, the edge map of each fragment hit, and the consistent edges]

Segmenting Informative Fragments (border consistency)
• Fragment hit $H_j$: the image patch where the fragment was detected.
• $H_j(x, y)$: the gray-level value of pixel $(x, y)$ in the hit.
• Apply an edge detector to each fragment hit.
• Determine the boundary that optimally separates figure from background regions:
  – The class-specific edges will be consistently present among hits.
  – Other edges are arbitrary and change from one hit to another.
• Three types of edges:
  – Border edge: separates the figure part of the fragment from its background part
    (this is the edge we are looking for)
  – Interior edge: an edge within the figure part.
  – Noise edge: an arbitrary edge that can appear anywhere in the fragment hit (background textures or artifacts of the edge detector).

Segmenting Informative Fragments (combining border consistency and the degree of cover)
[Figure: likelihood determined by the degree of cover + overlap with the average edge map D(x, y) of the fragment over all the training images = segmentation]

Formulation
• $F$: fragment
• $P$: figure part of $F$
• $P^c$: background part of $F$
• $\partial P$: boundary between $P$ and $P^c$
• $D(x, y)$: average edge map of the fragment over its hits in all the training images

Overall process
[Figure: overall process]

Segmenting a test image
• Each detected fragment votes for the figure-ground classification of the pixels it covers, so overlapping fragments contribute several votes to the same pixel.
• Each vote is weighted by a factor derived from the fragment's detection rate and false-alarm rate.
• Count the weighted votes for figure and background at each pixel.
• Check the consistency between the resulting labeling and the labeling of each fragment.

Improving the figure-ground labeling of fragments
[Figure: detection and segmentation → refine → refine → …]
• Once the labeled fragments produce consistent covers that segment complete objects in the training images, a region's degree of cover can be estimated more accurately:
  – Use the average number of times its pixels cover figure parts in the segmented training images, rather than the average number of times its pixels overlap with other detected fragments.
• The refined degree of cover is then used to update the fragment's figure-ground labeling…

Experiments
• 3 classes
  – Horse heads (evaluated quantitatively)
  – Human faces (evaluated qualitatively)
  – Cars (evaluated qualitatively)
• Horse head class
  – Dataset: a training set of 139 class images (size 32x36).
  – Three independent experiments:
    • In each experiment, fragments are extracted from 15 images chosen randomly from the training set.
    • Two different sizes of the fragment set:
      – 100 fragments
      – The 40 most informative of these 100 fragments
  – 100 of the 139 horse head images were labeled manually.
• Two types of tests
  – Compare the automatic labeling with manual figure-ground labeling of individual fragments.
  – Compare the segmentation of complete objects obtained with:
    • Automatically labeled fragments
    • Manually labeled fragments
    • Bottom-up segmentation

Results
[Figures: example segmentations, each shown with this algorithm's labeling, manual labeling, and bottom-up segmentation]
• The approach is general and can be applied to different classes.
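The test-image segmentation step described earlier (weighted voting by the detected fragments, followed by a consistency check against each fragment's own labeling) can be sketched in Python as below. The input format is an assumption: each detection gives the fragment's top-left position in the image, its learned figure-ground mask, and a vote weight. The slides derive the weight from the fragment's detection and false-alarm rates; here it is simply passed in as a number. Ties and uncovered pixels default to background, a choice made for this sketch.

```python
def segment_by_voting(shape, detections):
    """Weighted figure/background voting by detected, figure-ground labeled fragments.

    shape: (height, width) of the test image.
    detections: list of (top, left, mask, weight); mask is a 2-D list of
        1 (figure) / 0 (background) placed with its top-left corner at (top, left).
    Returns a 2-D list: 1 = figure, 0 = background (ties and uncovered pixels -> 0).
    """
    h, w = shape
    figure = [[0.0] * w for _ in range(h)]
    background = [[0.0] * w for _ in range(h)]
    for top, left, mask, weight in detections:
        for dy, row in enumerate(mask):
            for dx, label in enumerate(row):
                y, x = top + dy, left + dx
                if 0 <= y < h and 0 <= x < w:  # clip fragments at the image border
                    votes = figure if label else background
                    votes[y][x] += weight
    return [[1 if figure[y][x] > background[y][x] else 0 for x in range(w)]
            for y in range(h)]


def consistency(segmentation, detection):
    """Fraction of a fragment's in-image pixels whose label agrees with the segmentation."""
    top, left, mask, _ = detection
    agree = total = 0
    for dy, row in enumerate(mask):
        for dx, label in enumerate(row):
            y, x = top + dy, left + dx
            if 0 <= y < len(segmentation) and 0 <= x < len(segmentation[0]):
                total += 1
                agree += int(segmentation[y][x] == label)
    return agree / total if total else 0.0
```

A fragment with low consistency is one whose labeling disagrees with the combined vote, which makes it a natural candidate for re-labeling during the refinement stage.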
Zoom-in to the results
• Top-down:
  – The shape of the horse is captured.
  – The boundaries are not exact.
• Bottom-up:
  – The boundaries are exact.
  – We did not get the horse as one piece.
• We need to combine the top-down and bottom-up approaches.

Thanks for your attention!