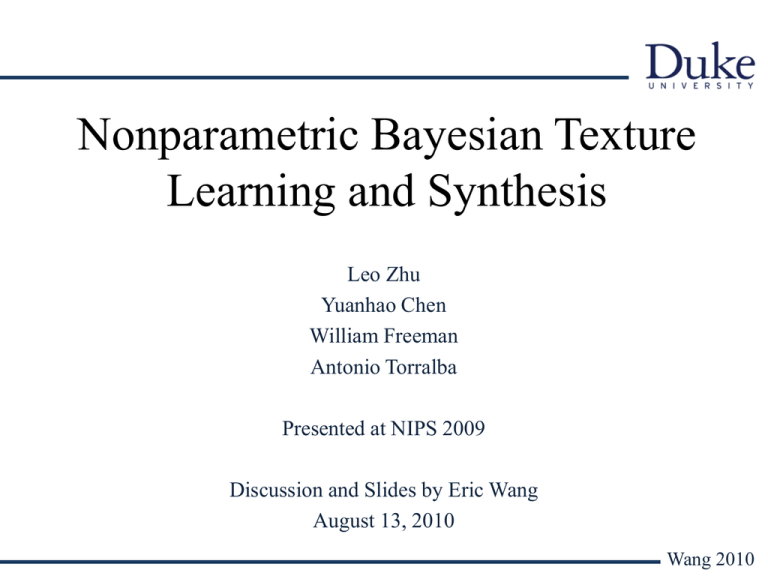

Nonparametric Bayesian Texture Learning and Synthesis


Leo Zhu, Yuanhao Chen, William Freeman, Antonio Torralba

Presented at NIPS 2009

Discussion and Slides by Eric Wang, August 13, 2010

Wang 2010

Outline
• Introduction
• Image Patches and Features
• HDP-2DHMM
• Inference
• Texture Synthesis
• Image Segmentation and Synthesis
• Conclusion

Introduction
• The authors consider texture learning and synthesis with spatial dependence.
• Two basic approaches to this problem have been considered:
  – Representing texture using textons and their spatial layout. This approach is sensitive to parameter settings, has low rendering quality, and is slow.
  – Patch-based approaches, which offer improved rendering quality and are faster, but lack semantic understanding and texture modeling.
• In this paper, the authors adopt a patch-based approach and augment it with nonparametric Bayesian modeling and statistical learning. The model is a spatial HMM (2D-HMM) whose states (the texton vocabulary) are generated from an HDP (hierarchical Dirichlet process).
• Once the parameters of the HDP-2DHMM are learned, large textures can be synthesized according to the spatial transition matrix and the dictionary of textures.

Image Patches and Features
• Each patch is characterized by a set of filter responses that correspond to values b of image filter response h at location l.
• Specifically, each 24x24 patch is divided into 6x6 cells (l = 1,…,36), each of size 4x4 pixels. For each pixel in cell l, the responses to 37 image filters (h = 1,…,37) are computed.
• For each 4x4 cell, the responses to each filter are averaged across all pixels and quantized (binned) into one of 6 bins (b = 1,…,6). Therefore, each patch is represented by a 37 x 36 x 6 = 7992-dimensional feature response.
• The authors point out that, unlike previous work that used K-means to first form a visual vocabulary, their approach directly applies a nonparametric model.

HDP-2DHMM
• Let L(i), T(i), R(i), D(i) denote the four neighbors (left, top, right, and down) of node i.
• In the graphical model, only the L(i) and T(i) dependencies are shown.
• z_i indexes the state of node i and associates it with the cluster label of a texton. The model's remaining parameters are the emission and transition parameters.
• The authors also allow the textons to be slightly shifted from their original locations by introducing two hidden variables that indicate the displacements of textons relative to location i.
• For simplicity, the shift variables are given a uniform prior over a small shift neighborhood (10% in either direction).

Inference
• Collapsed Gibbs sampling is used to learn the parameters of the HDP-2DHMM. Inference alternates between sampling the state indicators z and the global state probabilities.
• The state indicators z are sampled from their conditional distribution given the remaining variables.
• The global state probabilities are sampled as in the HDP; the equation is not given.

Texture Synthesis
• The primary objective of this paper was to synthesize textures from smaller texture observations.
• The authors note, however, that the HDP-2DHMM is a generative model for image features and not for actual image patches. To render actual images, they integrate image quilting and define a dictionary of image patches (taken from the original image) from which the new image is synthesized, at much lower synthesis cost than standard image quilting.

Synthesis Examples
[Figures: texture synthesis examples]

Image Segmentation and Synthesis
[Figures: image segmentation and synthesis results]

Conclusion
• A novel nonparametric Bayesian model called HDP-2DHMM for texture learning and synthesis was presented in this paper.
• The main contribution of this paper is that it learns the textons and the spatial HMM structure jointly.
• Inference is performed using a collapsed Gibbs sampler.
• The clustering nature of the model allows for faster image quilting and synthesis with good qualitative results.
• Image segmentation and synthesis results were also presented.
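
As a rough illustration of the patch representation described under Image Patches and Features, the sketch below turns per-pixel filter responses for one 24x24 patch into a 7992-dimensional vector. It is a minimal sketch under stated assumptions, not the authors' implementation: the slides do not specify the filter bank or the bin edges, so the function name `patch_features`, the uniform bin edges on an assumed range `[lo, hi]`, and the one-hot encoding of the bin index are all hypothetical choices made here for illustration.

```python
import numpy as np

N_FILTERS, PATCH, CELL, N_BINS = 37, 24, 4, 6
GRID = PATCH // CELL  # 6 cells per side, i.e. 36 cells per patch


def patch_features(responses, lo=-1.0, hi=1.0):
    """Encode one patch as a binary vector of length 37 * 36 * 6 = 7992.

    responses: array of shape (37, 24, 24) holding per-pixel filter
    responses (the filter bank itself is not specified in the slides).
    lo, hi: assumed response range used to place uniform bin edges.
    """
    responses = np.asarray(responses, dtype=float)
    assert responses.shape == (N_FILTERS, PATCH, PATCH)

    # Average each filter's response over every 4x4 cell -> (37, 6, 6).
    cells = responses.reshape(N_FILTERS, GRID, CELL, GRID, CELL).mean(axis=(2, 4))

    # Quantize each averaged response into one of 6 bins (indices 0..5).
    # Assumption: uniform edges on [lo, hi]; out-of-range values fall
    # into the first or last bin.
    interior_edges = np.linspace(lo, hi, N_BINS + 1)[1:-1]
    bins = np.digitize(cells, interior_edges)

    # Assumption: one-hot encode the bin index -> (37, 6, 6, 6), then flatten.
    one_hot = np.eye(N_BINS, dtype=np.uint8)[bins]
    return one_hot.reshape(-1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fake_responses = rng.uniform(-1.0, 1.0, size=(N_FILTERS, PATCH, PATCH))
    features = patch_features(fake_responses)
    print(features.shape)
```

Under this encoding, exactly one bin is active for each (filter, cell) pair, so every feature vector has 37 x 36 = 1332 nonzero entries out of 7992.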