Slides

Distinctive Image Features from Scale-Invariant Keypoints

David G. Lowe, 2004

Presentation Content

• Introduction

• Related Research

• Algorithm

– Keypoint localization

– Orientation assignment

– Keypoint descriptor

• Recognizing images using keypoint descriptors

• Achievements and Results

• Conclusion

Introduction

• Image matching is a fundamental aspect of many problems in computer vision.

Scale Invariant Feature Transform (SIFT)

• Object or Scene recognition.

• Using local invariant image features (keypoints), which are robust to:

– Scaling

– Rotation

– Illumination

– 3D camera viewpoint (affine)

– Clutter / noise

– Occlusion

• Realtime

Related Research

– Corner detectors

• Moravec 1981

• Harris and Stephens 1988

• Harris 1992

• Zhang 1995

• Torr 1995

• Schmid and Mohr 1997

– Scale invariant

• Crowley and Parker 1984

• Shokoufandeh 1999

• Lindeberg 1993, 1994

• Lowe 1999 (this author)

– Invariant to full affine transformation

• Baumberg 2000

• Tuytelaars and Van Gool 2000

• Mikolajczyk and Schmid 2002

• Schaffalitzky and Zisserman 2002

• Brown and Lowe 2002

Keypoint Detection

• Goal: Identify locations and scales that can be repeatably assigned under differing views of the same object.

• Keypoint detection is done at a specific scale and location

• Difference-of-Gaussian function

• Search for stable features across all possible scales

$$D(x, y, \sigma) = \big(G(x, y, k\sigma) - G(x, y, \sigma)\big) * I(x, y) = L(x, y, k\sigma) - L(x, y, \sigma)$$

σ = amount of smoothing; k = constant, $k = 2^{1/s}$
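A minimal sketch of the quantities on this slide, using only the Python standard library. The function names are hypothetical, and the starting value σ0 = 1.6 and the s + 3 blurred images per octave are taken from Lowe's paper rather than from these slides.

```python
import math

def gaussian(x, y, sigma):
    """2D Gaussian G(x, y, sigma)."""
    return math.exp(-(x * x + y * y) / (2.0 * sigma ** 2)) / (2.0 * math.pi * sigma ** 2)

def dog_kernel(x, y, sigma, k):
    """Difference-of-Gaussian kernel G(x, y, k*sigma) - G(x, y, sigma)."""
    return gaussian(x, y, k * sigma) - gaussian(x, y, sigma)

def octave_sigmas(sigma0=1.6, s=3):
    """Smoothing levels for one octave, with k = 2^(1/s).

    Assumption: s + 3 blurred images per octave, so that s DoG levels
    have a neighbor above and below for the extrema search.
    """
    k = 2.0 ** (1.0 / s)
    return [sigma0 * k ** i for i in range(s + 3)]
```

With s = 3, `octave_sigmas()` returns six levels, and the level at index s has exactly twice the starting σ, which is where the next octave begins.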

Keypoint Detection

• Reasonably low cost

• Scale sensitive

• Number of scale samples per octave?

• 3 scale samples per octave were used (although more is better).

• Determine amount of smoothing (σ)

• Smoothing loses high-frequency information, so the input image is doubled in size first

Accurate Keypoint Localization

(1/2)

• Use a Taylor expansion of D to determine the interpolated location of each extremum (local maximum or minimum).

• Evaluate D at this interpolated location and discard low-contrast extrema whose |D| is below 0.03 (3% of the pixel value range).
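The idea can be sketched in one dimension. This is a simplification: Lowe's paper fits a quadratic in (x, y, σ) jointly, while the hypothetical helper below fits a parabola through three neighboring DoG samples along a single axis. The 0.03 threshold assumes pixel values in [0, 1].

```python
def refine_extremum_1d(d_prev, d_center, d_next):
    """Second-order Taylor fit through three equally spaced samples.

    Returns (offset, value): the sub-sample offset of the extremum from
    the center sample and the interpolated function value there.
    Assumes d_center is a strict local extremum, so the curvature is nonzero.
    """
    gradient = 0.5 * (d_next - d_prev)             # first derivative (central difference)
    curvature = d_next - 2.0 * d_center + d_prev   # second derivative
    offset = -gradient / curvature
    value = d_center + 0.5 * gradient * offset
    return offset, value

def is_low_contrast(value, threshold=0.03):
    """True when the interpolated extremum should be discarded."""
    return abs(value) < threshold
```

For samples of the parabola 1 − (x − 0.2)² at x = −1, 0, 1, the fit recovers an offset of about 0.2 and a peak value of about 1.0.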

Accurate Keypoint Localization

(2/2)

• Eliminating Edge Responses

• Define a 2×2 Hessian matrix of second derivatives of D (Dxx, Dxy, Dyy), estimated from differences of neighboring samples

• Determine the ratio of the larger eigenvalue to the smaller one; a large ratio indicates an edge.

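• #Keypoints at each stage (figure): 0 → 832 (initial extrema) → 729 (after contrast threshold) → 536 (after edge-response threshold)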
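A sketch of the edge test, assuming the formulation in Lowe's paper: the eigenvalue ratio is checked through the trace and determinant of the Hessian, so the eigenvalues are never computed explicitly. The function name is hypothetical, and r = 10 is the paper's threshold, which these slides do not state.

```python
def passes_edge_test(dxx, dyy, dxy, r=10.0):
    """Keep a keypoint only if its principal-curvature ratio is below r.

    With eigenvalues a >= b and ratio a / b, the quantity
    trace^2 / det equals (ratio + 1)^2 / ratio, which grows with the ratio.
    """
    trace = dxx + dyy
    det = dxx * dyy - dxy * dxy
    if det <= 0:
        # Curvatures have different signs: not a proper extremum.
        return False
    return trace * trace / det < (r + 1.0) ** 2 / r
```

A blob-like point with equal curvatures (`passes_edge_test(-1.0, -1.0, 0.0)`) is kept, while an elongated edge response (`passes_edge_test(-10.0, -0.1, 0.0)`) is rejected.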

Orientation Assignment

• Calculate the orientation and magnitude of the gradient at each pixel

• Histogram of orientations of sample points near keypoint.

• Each sample is weighted by its gradient magnitude and by a Gaussian-weighted circular window with a σ that is 1.5 times that of the scale of the keypoint.

Stable orientation results

• Multiple keypoints for multiple histogram peaks

• Interpolation
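The orientation step can be sketched as below. Function names and the `radius` parameter are hypothetical. The 36 bins and the rule that any peak within 80% of the highest one produces an additional keypoint come from Lowe's paper, not from these slides. The image `L` is a list of rows of smoothed pixel values.

```python
import math

def orientation_histogram(L, cx, cy, scale, radius, num_bins=36):
    """Gradient-orientation histogram around keypoint (cx, cy)."""
    hist = [0.0] * num_bins
    bin_width = 360.0 / num_bins
    sigma = 1.5 * scale  # Gaussian window, 1.5 times the keypoint scale
    for y in range(cy - radius, cy + radius + 1):
        for x in range(cx - radius, cx + radius + 1):
            if not (1 <= y < len(L) - 1 and 1 <= x < len(L[0]) - 1):
                continue  # skip samples without both neighbors
            dx = L[y][x + 1] - L[y][x - 1]
            dy = L[y + 1][x] - L[y - 1][x]
            magnitude = math.hypot(dx, dy)
            theta = math.degrees(math.atan2(dy, dx)) % 360.0
            weight = math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))
            # Bins are centered on multiples of bin_width.
            hist[int(round(theta / bin_width)) % num_bins] += weight * magnitude
    return hist

def dominant_orientations(hist, peak_ratio=0.8):
    """Angles (degrees) of all local peaks within peak_ratio of the highest."""
    n = len(hist)
    bin_width = 360.0 / n
    top = max(hist)
    angles = []
    for i, h in enumerate(hist):
        left, right = hist[(i - 1) % n], hist[(i + 1) % n]
        if h >= peak_ratio * top and h > left and h > right:
            # Parabola through the peak and its two neighbors.
            offset = 0.5 * (left - right) / (left - 2.0 * h + right)
            angles.append(((i + offset) * bin_width) % 360.0)
    return angles
```

Each returned angle would yield its own keypoint at the same location and scale.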

The Local Image Descriptor

• We can now find keypoints that are invariant to location, scale, and orientation.

• Now compute a descriptor for each keypoint.

• Highly distinctive yet invariant for illumination and 3D viewpoint changes.

• Biologically inspired approach.

• Divide sample points around keypoint into 16 regions (4 regions used in picture)

• Create histogram of orientations of each region (8 bins)

• Trilinear interpolation.

• Vector normalization

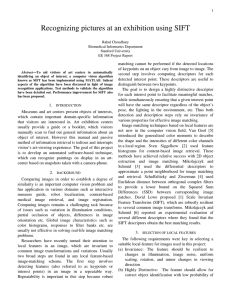
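A sketch of the final normalization step for the 16 × 8 = 128-element descriptor. The function name is hypothetical. Clamping each element at 0.2 and renormalizing is the procedure described in Lowe's paper for reducing the effect of non-linear illumination changes; the slides only mention "vector normalization".

```python
import math

REGIONS = 16   # 4x4 grid of regions around the keypoint
BINS = 8       # orientation bins per region
DESCRIPTOR_LENGTH = REGIONS * BINS  # 128

def normalize_descriptor(vector, clamp=0.2):
    """Unit-normalize, clamp large entries, then unit-normalize again."""
    def unit(v):
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v] if norm > 0 else list(v)

    clamped = [min(x, clamp) for x in unit(vector)]
    return unit(clamped)
```

The first normalization cancels uniform contrast changes; the clamp limits the influence of a few very large gradient magnitudes.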

Descriptor Testing

This graph shows the percent of keypoints giving the correct match to a database of 40,000 keypoints as a function of width of the n×n keypoint descriptor and the number of orientations in each histogram. The graph is computed for images with affine viewpoint change of 50 degrees and addition of 4% noise.

Keypoint Matching

• Look for the nearest neighbor in the database (Euclidean distance)

• Compare the distance of the closest neighbor to that of the second-closest neighbor.

• If (distance to closest) / (distance to second-closest) > 0.8, discard the match.
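The matching rule can be sketched with a brute-force search. This is an illustration only: the function name is hypothetical, and a real system would use an approximate index rather than comparing against every database entry.

```python
import math

def match_keypoints(query_descriptors, database_descriptors, max_ratio=0.8):
    """Return (query_index, database_index) pairs that pass the ratio test."""
    matches = []
    for qi, q in enumerate(query_descriptors):
        # Euclidean distance to every database descriptor, closest first.
        dists = sorted((math.dist(q, d), di) for di, d in enumerate(database_descriptors))
        if len(dists) < 2:
            continue  # the ratio test needs a second-closest neighbor
        (best, best_index), (second, _) = dists[0], dists[1]
        # Keep the match only when closest < max_ratio * second-closest
        # (this also rejects exact ties at distance 0).
        if best < max_ratio * second:
            matches.append((qi, best_index))
    return matches
```

A query that lies midway between two database descriptors has a ratio near 1 and is discarded as ambiguous.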

Efficient Nearest Neighbor Indexing

• 128-dimensional feature vector

• Best-Bin-First (BBF)

• Modified k-d tree algorithm.

• Only find an approximate answer.

• Works well because of 0.8 distance rule.
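A minimal sketch of a Best-Bin-First style search, under stated assumptions: a plain k-d tree that cycles through the dimensions, and a priority queue that revisits unexplored branches in order of their distance to the query. The names and the tree layout are hypothetical. The default cutoff of 200 checks is the value mentioned in Lowe's paper, not in these slides.

```python
import heapq
import itertools

def build_kd_tree(points, indices=None, depth=0):
    """Build a k-d tree of point indices, splitting at the median."""
    if indices is None:
        indices = list(range(len(points)))
    if not indices:
        return None
    axis = depth % len(points[0])
    indices = sorted(indices, key=lambda i: points[i][axis])
    mid = len(indices) // 2
    return {
        "index": indices[mid],
        "axis": axis,
        "left": build_kd_tree(points, indices[:mid], depth + 1),
        "right": build_kd_tree(points, indices[mid + 1:], depth + 1),
    }

def bbf_nearest(tree, points, query, max_checks=200):
    """Approximate nearest neighbor: returns (index, distance).

    The check budget is tested between branches, so a few more than
    max_checks points may be examined.
    """
    best_index, best_sq = None, float("inf")
    counter = itertools.count()  # tie-breaker so the heap never compares dicts
    heap = [(0.0, next(counter), tree)]
    checks = 0
    while heap and checks < max_checks:
        bound, _, node = heapq.heappop(heap)
        if bound >= best_sq:
            break  # no remaining branch can contain a closer point
        while node is not None:
            p = points[node["index"]]
            sq = sum((a - b) ** 2 for a, b in zip(p, query))
            checks += 1
            if sq < best_sq:
                best_index, best_sq = node["index"], sq
            diff = query[node["axis"]] - p[node["axis"]]
            near, far = (node["left"], node["right"]) if diff < 0 else (node["right"], node["left"])
            if far is not None:
                # Squared distance to the splitting plane bounds the far side.
                heapq.heappush(heap, (diff * diff, next(counter), far))
            node = near
    return best_index, best_sq ** 0.5
```

With a very large `max_checks` the search is exact; a small budget trades accuracy for speed, which is tolerable here because ambiguous matches are discarded by the 0.8 distance rule anyway.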

Clustering with the Hough Transform

• Select 1% inliers among 99% outliers

• Find clusters of features that vote for the same object pose.

– 2D location

– Scale

– Orientation

– Location relative to original training image.

• Use broad bin sizes.
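A simplified sketch of pose voting. Each keypoint is assumed to be a tuple (x, y, scale, orientation in degrees), and each match pairs a model keypoint with an image keypoint; all names are hypothetical. The bin sizes (30 degrees for orientation, a factor of 2 for scale, 0.25 times the projected model size for location) and the minimum of 3 votes follow Lowe's paper. The paper additionally votes for the two closest bins in each dimension, which is omitted here for brevity.

```python
import math
from collections import defaultdict

def pose_bin(model_kp, image_kp, model_size, ori_bin_deg=30.0, loc_frac=0.25):
    """Quantized pose (x bin, y bin, scale bin, orientation bin) of one match."""
    mx, my, m_scale, m_ori = model_kp
    ix, iy, i_scale, i_ori = image_kp
    scale = i_scale / m_scale
    rotation = (i_ori - m_ori) % 360.0
    scale_bin = math.floor(math.log2(scale) + 0.5)  # bins a factor of 2 wide
    c = math.cos(math.radians(rotation))
    s = math.sin(math.radians(rotation))
    # Where the model origin would land in the image under this match.
    origin_x = ix - scale * (c * mx - s * my)
    origin_y = iy - scale * (s * mx + c * my)
    loc_bin = loc_frac * model_size * 2.0 ** scale_bin
    return (math.floor(origin_x / loc_bin), math.floor(origin_y / loc_bin),
            scale_bin, int(rotation // ori_bin_deg))

def hough_clusters(matches, model_size, min_votes=3):
    """Group match indices by pose bin; keep bins with enough votes."""
    votes = defaultdict(list)
    for index, (model_kp, image_kp) in enumerate(matches):
        votes[pose_bin(model_kp, image_kp, model_size)].append(index)
    return [members for members in votes.values() if len(members) >= min_votes]
```

Matches that agree on a pose fall into the same bin, while outliers scatter across bins and rarely reach the vote threshold.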

Solution for Affine Parameters

• An affine transformation correctly accounts for 3D rotation of a planar surface under orthographic projection, but the approximation can be poor for 3D rotation of non-planar objects.

Basically: we do not create a 3D representation of the object.

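• The affine transformation of a model point [x y] to an image point [u v] can be written as

$$\begin{bmatrix} u \\ v \end{bmatrix} = \begin{bmatrix} m_1 & m_2 \\ m_3 & m_4 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} + \begin{bmatrix} t_x \\ t_y \end{bmatrix}$$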

• Outliers are discarded

• New matches can be found by top-down matching
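A sketch of the least-squares solution for the six affine parameters, assuming the parametrization u = m1·x + m2·y + tx and v = m3·x + m4·y + ty used in Lowe's paper. Each match contributes two rows to a linear system, which is solved through the normal equations. The helper names are hypothetical, and at least 3 non-collinear matches are required.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_affine(model_pts, image_pts):
    """Least-squares [m1, m2, m3, m4, tx, ty] mapping model to image points."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(model_pts, image_pts):
        rows.append([x, y, 0.0, 0.0, 1.0, 0.0])
        rhs.append(u)
        rows.append([0.0, 0.0, x, y, 0.0, 1.0])
        rhs.append(v)
    # Normal equations: (A^T A) p = A^T b
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(6)] for i in range(6)]
    atb = [sum(r[i] * t for r, t in zip(rows, rhs)) for i in range(6)]
    return solve_linear(ata, atb)
```

Once the parameters are known, each match can be checked against the projected model location; matches with a large residual are the outliers to discard before refitting.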

Results

Conclusion

• Invariant to image rotation and scale and robust across a substantial range of affine distortion, addition of noise, and change in illumination.

• Realtime

• Lots of applications

Further Research

• Color

• 3D representation of world.