Matrix Differential Calculus


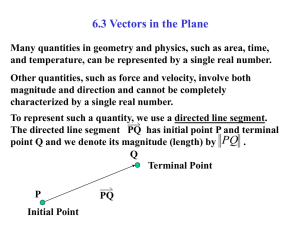

By Dr. Md. Nurul Haque Mollah, Professor, Dept. of Statistics, University of Rajshahi, Bangladesh. 01-10-11

Outline

1. Differentiable Functions
2. Classification of Functions and Variables for Derivatives
3. Derivatives of Scalar Functions w. r. to a Vector Variable
4. Derivative of Scalar Functions w. r. to a Matrix Variable
5. Derivatives of Vector Function w. r. to a Scalar Variable
6. Derivatives of Vector Function w. r. to a Vector Variable
7. Derivatives of Vector Function w. r. to a Matrix Variable
8. Derivatives of Matrix Function w. r. to a Scalar Variable
9. Derivatives of Matrix Function w. r. to a Vector Variable
10. Derivatives of Matrix Function w. r. to a Matrix Variable
11. Some Applications of Matrix Differential Calculus

1. Differentiable Functions

A real-valued function $f: X \to \mathbb{R}$, where $X \subset \mathbb{R}^n$ is an open set, is said to be continuously differentiable if the partial derivatives $\partial f(x)/\partial x_1, \ldots, \partial f(x)/\partial x_n$ exist for each $x \in X$ and are continuous functions of $x$ over $X$. In this case we write $f \in C^1$ over $X$. Generally, we write $f \in C^p$ over $X$ if all partial derivatives of order $p$ exist and are continuous as functions of $x$ over $X$. If $f \in C^p$ over $\mathbb{R}^n$, we simply write $f \in C^p$.

If $f \in C^1$ on $X$, the gradient of $f$ at a point $x \in X$ is defined as

$$
\nabla f(x) = Df(x) = \begin{bmatrix} \dfrac{\partial f(x)}{\partial x_1} \\ \vdots \\ \dfrac{\partial f(x)}{\partial x_n} \end{bmatrix}.
$$

If $f \in C^2$ over $X$, the Hessian of $f$ at $x$ is defined to be the symmetric $n \times n$ matrix having $\partial^2 f(x)/\partial x_i \partial x_j$ as its $ij$th element:

$$
\nabla^2 f(x) = D^2 f(x) = \left[ \frac{\partial^2 f(x)}{\partial x_i \, \partial x_j} \right]_{n \times n}.
$$

If $f: X \to \mathbb{R}^m$, where $X \subset \mathbb{R}^n$, then $f$ is represented by the column vector of its component functions $f_1, f_2, \ldots, f_m$:

$$
f(x) = \begin{bmatrix} f_1(x) \\ \vdots \\ f_m(x) \end{bmatrix}.
$$

If $X$ is open, we write $f \in C^p$ on $X$ if $f_1 \in C^p, f_2 \in C^p, \ldots, f_m \in C^p$ on $X$. Then the derivative of the vector function $f(x)$ with respect to the vector variable $x$ is defined by the $n \times m$ matrix

$$
Df(x) = \big[\, Df_1(x) \;\; Df_2(x) \;\; \cdots \;\; Df_m(x) \,\big]_{n \times m}.
$$
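The definitions above can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the helper names `gradient`, `hessian` and `jacobian_nm` are hypothetical, and the step sizes are illustrative rather than tuned. It approximates the gradient, the Hessian and the $n \times m$ derivative $Df(x) = [Df_1(x) \cdots Df_m(x)]$ by central differences, and compares them with the closed forms for $x'Ax$ and $Bx$ that are derived later (Section 3.2 and Example 6.2).

```python
import numpy as np

def gradient(f, x, h=1e-6):
    """Central-difference approximation of the gradient Df(x), an n-vector."""
    x = np.asarray(x, dtype=float)
    g = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        g[i] = (f(x + e) - f(x - e)) / (2 * h)
    return g

def hessian(f, x, h=1e-4):
    """Central-difference Hessian; entry (i, j) approximates d^2 f / dx_i dx_j."""
    x = np.asarray(x, dtype=float)
    n = x.size
    H = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            ei = np.zeros(n)
            ei[i] = h
            ej = np.zeros(n)
            ej[j] = h
            H[i, j] = (f(x + ei + ej) - f(x + ei - ej)
                       - f(x - ei + ej) + f(x - ei - ej)) / (4 * h * h)
    return H

def jacobian_nm(f, x, h=1e-6):
    """Df(x) = [Df_1(x) ... Df_m(x)]: column k is the gradient of f_k, so the result is n x m."""
    x = np.asarray(x, dtype=float)
    m = np.asarray(f(x)).size
    D = np.zeros((x.size, m))
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        D[i, :] = (np.asarray(f(x + e)) - np.asarray(f(x - e))) / (2 * h)
    return D

rng = np.random.default_rng(0)
A = rng.normal(size=(3, 3))   # deliberately non-symmetric
B = rng.normal(size=(2, 3))
x0 = rng.normal(size=3)

def quad(x):
    return x @ A @ x          # g(x) = x'Ax

print(np.allclose(gradient(quad, x0), (A + A.T) @ x0, atol=1e-6))
print(np.allclose(hessian(quad, x0), A + A.T, atol=1e-5))
print(jacobian_nm(lambda x: B @ x, x0).shape)   # n x m = (3, 2); the entries equal B'
```

Note the layout: each row of `jacobian_nm` corresponds to one input variable, so the result is the transpose of the "one row per output" Jacobian used in many other texts.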
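If $f: \mathbb{R}^{n+r} \to \mathbb{R}$ is a real-valued function of $(x, y)$, where $x = (x_1, x_2, \ldots, x_n)' \in \mathbb{R}^n$ and $y = (y_1, y_2, \ldots, y_r)' \in \mathbb{R}^r$, we write

$$
D_x f(x,y) = \left[\frac{\partial f(x,y)}{\partial x_i}\right]_{n\times 1}, \qquad
D_y f(x,y) = \left[\frac{\partial f(x,y)}{\partial y_i}\right]_{r\times 1},
$$

$$
D_{xx} f(x,y) = \left[\frac{\partial^2 f(x,y)}{\partial x_i\,\partial x_j}\right]_{n\times n}, \quad
D_{xy} f(x,y) = \left[\frac{\partial^2 f(x,y)}{\partial x_i\,\partial y_j}\right]_{n\times r}, \quad
D_{yy} f(x,y) = \left[\frac{\partial^2 f(x,y)}{\partial y_i\,\partial y_j}\right]_{r\times r}.
$$

If $f: \mathbb{R}^{n+r} \to \mathbb{R}^m$, $f = (f_1, f_2, \ldots, f_m)'$, then

$$
D_x f(x,y) = \big[\, D_x f_1(x,y) \;\cdots\; D_x f_m(x,y) \,\big]_{n\times m}, \qquad
D_y f(x,y) = \big[\, D_y f_1(x,y) \;\cdots\; D_y f_m(x,y) \,\big]_{r\times m}.
$$

For $h: \mathbb{R}^r \to \mathbb{R}^m$ and $g: \mathbb{R}^n \to \mathbb{R}^r$, consider the function $f: \mathbb{R}^n \to \mathbb{R}^m$ defined by $f(x) = h(g(x))$. If $h \in C^p$ and $g \in C^p$, then $f \in C^p$, and the chain rule of differentiation states

$$
Df(x) = Dg(x)\, Dh\big(g(x)\big),
$$

where $Dg(x)$ is $n \times r$ and $Dh(g(x))$ is $r \times m$, so that the product is $n \times m$.

2. Classification of Functions and Variables for Derivatives

Let us consider scalar functions $g$, vector functions $f$ and matrix functions $F$. Each of these may depend on one real variable $x$, a vector of real variables $\mathbf{x}$, or a matrix of real variables $X$. We thus obtain the classification of functions and variables shown in the following table.

| | Scalar function | Vector function | Matrix function |
|---|---|---|---|
| Scalar variable | $g(x)$ | $f(x)$ | $F(x)$ |
| Vector variable | $g(\mathbf{x})$ | $f(\mathbf{x})$ | $F(\mathbf{x})$ |
| Matrix variable | $g(X)$ | $f(X)$ | $F(X)$ |

Some Examples of Scalar, Vector and Matrix Functions

| | Scalar function | Vector function | Matrix function |
|---|---|---|---|
| Scalar variable | $g(x) = ax$ | $f(x) = (ax,\ bx^2)'$ | $F(x) = \begin{bmatrix} 1 & x \\ x & x^2 \end{bmatrix}$ |
| Vector variable | $g(\mathbf{x}) = a'\mathbf{x},\ \mathbf{x}'A\mathbf{x}$ | $f(\mathbf{x}) = A\mathbf{x}$ | $F(\mathbf{x}) = \mathbf{x}\mathbf{x}'$ |
| Matrix variable | $g(X) = a'Xb,\ \operatorname{tr}(X'X),\ \lvert X \rvert$ | $f(X) = AX,\ AXB$ | $F(X) = AXB,\ X^2$ |

3. Derivatives of Scalar Functions w. r. to a Vector Variable

3.1 Definition

Consider a scalar-valued function $g$ of $m$ variables, $g = g(x_1, x_2, \ldots, x_m) = g(x)$, where $x = (x_1, x_2, \ldots, x_m)'$. Assuming that $g$ is differentiable, its vector gradient with respect to $x$ is the $m$-dimensional column vector of partial derivatives

$$
\frac{\partial g}{\partial x} = \begin{bmatrix} \dfrac{\partial g}{\partial x_1} \\ \vdots \\ \dfrac{\partial g}{\partial x_m} \end{bmatrix}.
$$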
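The chain rule $Df(x) = Dg(x)\,Dh(g(x))$ from Section 1 can be checked numerically in the same $n \times m$ layout. This is a sketch assuming NumPy; the maps `g` and `h` below are hypothetical test functions (not from the slides), and `D` is a hypothetical central-difference helper.

```python
import numpy as np

def D(fun, x, eps=1e-6):
    """Central-difference derivative in the n x m layout Df(x) = [Df_1(x) ... Df_m(x)]."""
    x = np.asarray(x, dtype=float)
    m = np.asarray(fun(x)).size
    out = np.zeros((x.size, m))
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = eps
        out[i] = (np.asarray(fun(x + e)) - np.asarray(fun(x - e))) / (2 * eps)
    return out

# Hypothetical example maps: g: R^2 -> R^3 (n = 2, r = 3) and h: R^3 -> R^2 (m = 2).
def g(x):
    return np.array([x[0] * x[1], np.sin(x[0]), x[1] ** 2])

def h(y):
    return np.array([y[0] + y[1] * y[2], np.exp(y[0]) - y[2]])

def f(x):
    return h(g(x))                      # f(x) = h(g(x))

x0 = np.array([0.3, -1.2])
lhs = D(f, x0)                          # Df(x), 2 x 2
rhs = D(g, x0) @ D(h, g(x0))            # Dg(x) is 2 x 3, Dh(g(x)) is 3 x 2
print(lhs.shape, np.allclose(lhs, rhs, atol=1e-6))
```

Because every derivative here has one row per input variable (the transpose of the usual Jacobian), the factors appear as $Dg(x)\,Dh(g(x))$, the reverse of the more familiar $J_h J_g$ order.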
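3.2 Example 1

Consider the simple linear function of $x$

$$
g(x) = \sum_{i=1}^{m} a_i x_i = a'x,
$$

where $a = (a_1, \ldots, a_m)'$ is a constant vector. Then the gradient of $g$ with respect to $x$ is

$$
\frac{\partial g}{\partial x} = \begin{bmatrix} a_1 \\ \vdots \\ a_m \end{bmatrix}_{m\times 1} = a .
$$

We can also write this as

$$
\frac{\partial (a'x)}{\partial x} = \frac{\partial (x'a)}{\partial x} = a .
$$

Because the gradient is constant (independent of $x$), the Hessian matrix of $g(x) = a'x$ is zero.

Example 2

Consider the quadratic form

$$
g(x) = x'Ax = \sum_{i=1}^{m}\sum_{j=1}^{m} x_i x_j a_{ij},
$$

where $A = (a_{ij})$ is an $m \times m$ square matrix. Then the gradient of $g(x)$ with respect to $x$ is

$$
\frac{\partial g}{\partial x} =
\begin{bmatrix} \dfrac{\partial g}{\partial x_1} \\ \vdots \\ \dfrac{\partial g}{\partial x_m} \end{bmatrix}_{m\times 1}
=
\begin{bmatrix} \sum_{j=1}^{m} x_j a_{1j} + \sum_{i=1}^{m} x_i a_{i1} \\ \vdots \\ \sum_{j=1}^{m} x_j a_{mj} + \sum_{i=1}^{m} x_i a_{im} \end{bmatrix}_{m\times 1}
= Ax + A'x = (A + A')x,
$$

which equals $2Ax$ if $A$ is a symmetric matrix. The second-order gradient, or Hessian matrix, of $g(x) = x'Ax$ with respect to $x$ then becomes

$$
\frac{\partial^2 (x'Ax)}{\partial x^2} =
\begin{bmatrix}
2a_{11} & \cdots & a_{1m} + a_{m1} \\
\vdots & \ddots & \vdots \\
a_{m1} + a_{1m} & \cdots & 2a_{mm}
\end{bmatrix}
= A + A',
$$

which equals $2A$ if $A$ is a symmetric matrix.

3.3 Some useful rules for derivatives of scalar functions w. r. to vectors

For computing the gradients of products and quotients of functions, as well as of composite functions, the same rules apply as for ordinary functions of one variable. Thus

$$
\frac{\partial\, [f(x)g(x)]}{\partial x} = f(x)\frac{\partial g(x)}{\partial x} + g(x)\frac{\partial f(x)}{\partial x},
$$

$$
\frac{\partial\, [f(x)/g(x)]}{\partial x} = \left[ g(x)\frac{\partial f(x)}{\partial x} - f(x)\frac{\partial g(x)}{\partial x} \right] \Big/ g^2(x),
$$

$$
\frac{\partial f(g(x))}{\partial x} = \frac{df}{dg}\big(g(x)\big)\,\frac{\partial g(x)}{\partial x}.
$$

The gradient of the composite function $f(g(x))$ can be generalized to any number of nested functions, giving the same chain rule of differentiation that is valid for functions of one variable.

3.3 Fundamental Rules for Matrix Differential Calculus

1. $\dfrac{\partial A}{\partial x} = 0$, where $A$ is not a function of $x$.
2. $\dfrac{\partial\, [\alpha f(x)]}{\partial x} = \alpha \dfrac{\partial f(x)}{\partial x}$, for a constant $\alpha$.
3. $\dfrac{\partial\, [f(x) + g(x)]}{\partial x} = \dfrac{\partial f(x)}{\partial x} + \dfrac{\partial g(x)}{\partial x}$
4. $\dfrac{\partial\, [f(x)\, g(x)]}{\partial x} = \dfrac{\partial f(x)}{\partial x}\, g(x) + f(x)\, \dfrac{\partial g(x)}{\partial x}$
5. $\dfrac{\partial\, [f(x)/g(x)]}{\partial x} = \left[ g(x) \dfrac{\partial f(x)}{\partial x} - f(x) \dfrac{\partial g(x)}{\partial x} \right] \Big/ g^2(x)$
6. $\dfrac{\partial f(g(x))}{\partial x} = \dfrac{df}{dg}\big(g(x)\big)\, \dfrac{\partial g(x)}{\partial x}$
7. $\dfrac{\partial\, [f(x) \otimes g(x)]}{\partial x} = \dfrac{\partial f(x)}{\partial x} \otimes g(x) + f(x) \otimes \dfrac{\partial g(x)}{\partial x}$
8. $\dfrac{\partial\, [f(x) \# g(x)]}{\partial x} = \dfrac{\partial f(x)}{\partial x} \# g(x) + f(x) \# \dfrac{\partial g(x)}{\partial x}$
9. $\dfrac{\partial f(x)'}{\partial x} = \left( \dfrac{\partial f(x)}{\partial x} \right)'$
10. $\dfrac{\partial\, \operatorname{vec} f(x)}{\partial x} = \operatorname{vec} \dfrac{\partial f(x)}{\partial x}$
11. $\dfrac{\partial\, \operatorname{trace}[f(x)]}{\partial x} = \operatorname{trace} \dfrac{\partial f(x)}{\partial x}$

(Note: $\otimes$ denotes the Kronecker product and $\#$ the Hadamard product.)

3.4 Some useful derivatives of scalar functions w. r. to a vector variable

$$
\frac{\partial (y'x)}{\partial x} = \frac{\partial (x'y)}{\partial x} = y, \qquad
\frac{\partial (x'x)}{\partial x} = 2x,
$$

$$
\frac{\partial (x'Ay)}{\partial x} = Ay, \qquad
\frac{\partial (y'Ax)}{\partial x} = A'y,
$$

$$
\frac{\partial (x'Ax)}{\partial x} = (A + A')x, \qquad
\frac{\partial (x'Ax)}{\partial x} = 2Ax \quad \text{(if $A$ is symmetric)},
$$

$$
\frac{\partial\, [a(x)'Q\,a(x)]}{\partial x} = D_x a(x)\,(Q + Q')\,a(x),
$$

$$
\frac{\partial\, [a(x)'b(x)]}{\partial x} = \frac{\partial a(x)'}{\partial x}\, b(x) + \frac{\partial b(x)'}{\partial x}\, a(x),
$$

where $D_x a(x) = \partial a(x)'/\partial x$.

4. Derivative of Scalar Functions w. r. to a Matrix Variable

4.1 Definition

Consider a scalar-valued function $f$ of the elements of a matrix $X = (x_{ij})$:

$$
f = f(X) = f(x_{11}, x_{12}, \ldots, x_{ij}, \ldots, x_{mn}).
$$

Assuming that $f$ is differentiable, its matrix gradient with respect to $X$ is the $m \times n$ matrix of partial derivatives

$$
\frac{\partial f}{\partial X} =
\begin{bmatrix}
\dfrac{\partial f}{\partial x_{11}} & \cdots & \dfrac{\partial f}{\partial x_{1n}} \\
\vdots & \ddots & \vdots \\
\dfrac{\partial f}{\partial x_{m1}} & \cdots & \dfrac{\partial f}{\partial x_{mn}}
\end{bmatrix}_{m \times n}.
$$

4.2 Example 1

The trace of a matrix is a scalar function of the matrix elements. Let $X = (x_{ij})$ be an $m \times m$ square matrix whose trace is denoted by $\operatorname{tr}(X)$. Then

$$
\frac{\partial \operatorname{tr}(X)}{\partial X} = I_m .
$$

Proof: The trace of $X$ is defined by $\operatorname{tr}(X) = \sum_{i=1}^{m} x_{ii}$. Taking the partial derivative of $\operatorname{tr}(X)$ with respect to one of the elements, say $x_{ij}$, gives

$$
\frac{\partial \operatorname{tr}(X)}{\partial x_{ij}} = \begin{cases} 1, & i = j, \\ 0, & i \neq j. \end{cases}
$$

Thus we get

$$
\frac{\partial \operatorname{tr}(X)}{\partial X} = \left[ \frac{\partial \operatorname{tr}(X)}{\partial x_{ij}} \right]_{m\times m} =
\begin{bmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{bmatrix} = I_m .
$$

4.2 Example 2

The determinant of a matrix is a scalar function of the matrix elements. Let $X = (x_{ij})$ be an $m \times m$ invertible square matrix whose determinant is denoted $|X|$. Then

$$
\frac{\partial |X|}{\partial X} = (X')^{-1}\,|X| .
$$

Proof: The inverse of $X$ is obtained as

$$
X^{-1} = \frac{1}{|X|}\operatorname{adj}(X),
$$

where $\operatorname{adj}(X)$ is known as the adjoint (adjugate) matrix of $X$. It is defined by

$$
\operatorname{adj}(X) = \begin{bmatrix} C_{11} & \cdots & C_{m1} \\ \vdots & \ddots & \vdots \\ C_{1m} & \cdots & C_{mm} \end{bmatrix},
$$

where $C_{ij} = (-1)^{i+j} M_{ij}$ is the cofactor of $x_{ij}$ and $M_{ij}$ is the minor of $x_{ij}$.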
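The minor $M_{ij}$ is obtained by first taking the $(m-1)\times(m-1)$ sub-matrix of $X$ that remains when the $i$-th row and $j$-th column of $X$ are removed, and then computing the determinant of this sub-matrix. Thus the determinant $|X|$ can be expressed in terms of the cofactors as

$$
|X| = \sum_{k=1}^{m} x_{ik} C_{ik} .
$$

Row $i$ can be any row and the result is always the same. None of the elements of the $i$-th row appear in the cofactors $C_{ik}$, so the determinant is a linear function of these elements. Taking the partial derivative of $|X|$ with respect to one of the elements, say $x_{ij}$, therefore gives

$$
\frac{\partial |X|}{\partial x_{ij}} = C_{ij} .
$$

Thus we get

$$
\frac{\partial |X|}{\partial X} = \left[ \frac{\partial |X|}{\partial x_{ij}} \right]_{m\times m} = [C_{ij}]_{m\times m} = \operatorname{adj}(X)' = |X|\,(X')^{-1}.
$$

This also implies that

$$
\frac{\partial \log |X|}{\partial X} = \frac{1}{|X|}\,\frac{\partial |X|}{\partial X} = (X')^{-1}.
$$

4.3 Some useful derivatives of scalar functions w. r. to a matrix

1. $\dfrac{\partial (a'Xb)}{\partial X} = ab'$
2. $\dfrac{\partial (a'X'b)}{\partial X} = ba'$
3. $\dfrac{\partial (a'X'Xb)}{\partial X} = X(ab' + ba')$
4. $\dfrac{\partial (a'X'Xa)}{\partial X} = 2Xaa'$
5. $\dfrac{\partial (a'X'CXb)}{\partial X} = C'Xab' + CXba'$
6. $\dfrac{\partial (a'X'CXa)}{\partial X} = (C + C')Xaa'$, which equals $2CXaa'$ if $C = C'$
7. $\dfrac{\partial\, [(Xa + b)'C(Xa + b)]}{\partial X} = (C + C')(Xa + b)a'$, which equals $2C(Xa + b)a'$ if $C = C'$

Derivatives of trace w. r. to a matrix

8. $\dfrac{\partial \operatorname{tr}(X)}{\partial X} = I$
9. $\dfrac{\partial \operatorname{tr}(AX)}{\partial X} = A'$
10. $\dfrac{\partial \operatorname{tr}(AX')}{\partial X} = A$
11. $\dfrac{\partial \operatorname{tr}(X^K)}{\partial X} = K\,(X^{K-1})'$
12. $\dfrac{\partial \operatorname{tr}(AX^K)}{\partial X} = \Big[\sum_{i=0}^{K-1} X^{i} A X^{K-i-1}\Big]'$
13. $\dfrac{\partial \operatorname{tr}(AX^{-1})}{\partial X} = -\,(X^{-1}AX^{-1})'$
14. $\dfrac{\partial \operatorname{tr}(AX^{-1}B)}{\partial X} = -\,(X^{-1}BAX^{-1})'$
15. $\dfrac{\partial \operatorname{tr}(e^{X})}{\partial X} = (e^{X})'$
16. $\dfrac{\partial \operatorname{tr}[\log(I_n + X)]}{\partial X} = [(I_n + X)^{-1}]'$, where $\log(I_n + X) = \sum_{k=1}^{\infty} \dfrac{(-1)^{k+1}}{k} X^{k}$
17. $\dfrac{\partial \operatorname{tr}(X'AX)}{\partial X} = (A + A')X$
18. $\dfrac{\partial \operatorname{tr}(XAX')}{\partial X} = X(A + A')$
19. $\dfrac{\partial \operatorname{tr}(AX'B)}{\partial X} = BA$
20. $\dfrac{\partial \operatorname{tr}(AXB)}{\partial X} = A'B'$
21. $\dfrac{\partial \operatorname{tr}(AXBX)}{\partial X} = A'X'B' + B'X'A'$
22. $\dfrac{\partial \operatorname{tr}(AXBX')}{\partial X} = AXB + A'XB'$
23. $\dfrac{\partial \operatorname{tr}(AXX'B)}{\partial X} = (A'B' + BA)X$

Derivatives of determinants w. r. to a matrix

24. $\dfrac{\partial |X|}{\partial X} = |X|\,(X')^{-1}$
25. $\dfrac{\partial |X^k|}{\partial X} = k\,|X|^{k}\,(X')^{-1}$
26. $\dfrac{\partial \log|X|}{\partial X} = (X')^{-1}$
27. $\dfrac{\partial |AXB|}{\partial X} = |AXB|\,A'(B'X'A')^{-1}B'$
28. $\dfrac{\partial |AXB|}{\partial X} = |AXB|\,(X^{-1})'$, if $A$, $B$ and $X$ are square with $|A| \neq 0$, $|B| \neq 0$, $|X| \neq 0$
29. $\dfrac{\partial \log|XX'|}{\partial X} = 2(XX')^{-1}X$
30. $\dfrac{\partial |X'CX|}{\partial X} = |X'CX|\,\big[CX(X'CX)^{-1} + C'X(X'C'X)^{-1}\big]$, if $C$ is a real matrix
31. $\dfrac{\partial |X'CX|}{\partial X} = 2\,|X'CX|\,CX(X'CX)^{-1}$, if $C$ is real and symmetric
32. $\dfrac{\partial \log|X'CX|}{\partial X} = 2CX(X'CX)^{-1}$, if $C$ is real and symmetric

5. Derivatives of Vector Function w. r. to a Scalar Variable

5.1 Definition

Consider the vector-valued function $f$ of a scalar variable $x$, $f(x) = [f_1(x), f_2(x), \ldots, f_n(x)]'$. Assuming that $f$ is differentiable, its scalar gradient with respect to $x$ is the $n$-dimensional row vector of partial derivatives

$$
\frac{\partial f}{\partial x} = \left[ \frac{\partial f_1(x)}{\partial x}, \ldots, \frac{\partial f_n(x)}{\partial x} \right]_{1\times n}.
$$

5.2 Example

Let $f(x) = (x, 2x, \ldots, mx)'$. Then the gradient of $f$ with respect to $x$ is

$$
\frac{\partial f(x)}{\partial x} = \left[ \frac{\partial x}{\partial x}, \frac{\partial (2x)}{\partial x}, \ldots, \frac{\partial (mx)}{\partial x} \right] = [1, 2, \ldots, m]_{1\times m}.
$$

We can also write this as $\partial f(x)/\partial x = y'$, where $f(x) = xy$ with $y = (1, 2, \ldots, m)'$.

6. Derivatives of Vector Function w. r. to a Vector Variable

6.1 Definition

Consider the vector-valued function $f$ of a vector variable $x = (x_1, x_2, \ldots, x_m)'$:

$$
f(x) = y = [y_1 = f_1(x),\; y_2 = f_2(x),\; \ldots,\; y_n = f_n(x)]'.
$$

Assuming that $f$ is differentiable, its vector gradient with respect to $x$ is the $m \times n$ matrix of partial derivatives

$$
\frac{\partial f(x)}{\partial x} = \left[ \frac{\partial f_1(x)}{\partial x}, \ldots, \frac{\partial f_n(x)}{\partial x} \right]_{m\times n} =
\begin{bmatrix}
\dfrac{\partial f_1(x)}{\partial x_1} & \cdots & \dfrac{\partial f_n(x)}{\partial x_1} \\
\vdots & \ddots & \vdots \\
\dfrac{\partial f_1(x)}{\partial x_m} & \cdots & \dfrac{\partial f_n(x)}{\partial x_m}
\end{bmatrix}_{m\times n}.
$$

6.2 Example

Let $f(x) = Ax = [f_1(x) = A_1 x,\; f_2(x) = A_2 x,\; \ldots,\; f_n(x) = A_n x]'$, where $x = (x_1, x_2, \ldots, x_m)'$, $A = (a_{ij})_{n\times m}$, and $A_i = [a_{i1}, a_{i2}, \ldots, a_{im}]$ is the $i$th row of $A$ $(i = 1, 2, \ldots, n)$. Then the gradient of $f(x)$ with respect to $x$ is

$$
\frac{\partial f(x)}{\partial x} =
\begin{bmatrix} \dfrac{\partial f_1(x)}{\partial x_1} & \cdots & \dfrac{\partial f_n(x)}{\partial x_1} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial f_1(x)}{\partial x_m} & \cdots & \dfrac{\partial f_n(x)}{\partial x_m} \end{bmatrix}_{m\times n}
= \begin{bmatrix} a_{11} & a_{21} & \cdots & a_{n1} \\ \vdots & \vdots & \ddots & \vdots \\ a_{1m} & a_{2m} & \cdots & a_{nm} \end{bmatrix}_{m\times n} = A'.
$$

7. Derivatives of Vector Function w. r. to a Matrix Variable

7.1 Definition

Consider the vector-valued function $f$ of a matrix variable $X = (x_{ij})$ of order $m \times n$:

$$
f(X) = y = [y_1 = f_1(X),\; y_2 = f_2(X),\; \ldots,\; y_q = f_q(X)]'.
$$

Assuming that $f$ is differentiable, its matrix gradient with respect to $X$ is the $mn \times q$ matrix of partial derivatives

$$
\frac{\partial f}{\partial X} = \left[ \frac{\partial f_1(X)}{\partial\, \operatorname{vec} X}, \ldots, \frac{\partial f_q(X)}{\partial\, \operatorname{vec} X} \right]_{mn\times q} =
\begin{bmatrix}
\dfrac{\partial f_1(X)}{\partial x_{11}} & \dfrac{\partial f_2(X)}{\partial x_{11}} & \cdots & \dfrac{\partial f_q(X)}{\partial x_{11}} \\
\dfrac{\partial f_1(X)}{\partial x_{21}} & \dfrac{\partial f_2(X)}{\partial x_{21}} & \cdots & \dfrac{\partial f_q(X)}{\partial x_{21}} \\
\vdots & \vdots & \ddots & \vdots \\
\dfrac{\partial f_1(X)}{\partial x_{mn}} & \dfrac{\partial f_2(X)}{\partial x_{mn}} & \cdots & \dfrac{\partial f_q(X)}{\partial x_{mn}}
\end{bmatrix}_{mn\times q},
$$

where $\operatorname{vec} X$ stacks the columns of $X$ into the vector $(x_{11}, x_{21}, \ldots, x_{m1}, x_{12}, \ldots, x_{mn})'$.

7.2 Example

Let $f(X) = Xa = [f_1(X) = X_1 a,\; f_2(X) = X_2 a,\; \ldots,\; f_m(X) = X_m a]'$, where $a = (a_1, a_2, \ldots, a_n)'$, $X = (x_{ij})_{m\times n}$, and $X_i = [x_{i1}, x_{i2}, \ldots, x_{in}]$ is the $i$th row of $X$ $(i = 1, 2, \ldots, m)$. Since $\partial f_i(X)/\partial x_{kl} = a_l$ if $k = i$ and $0$ otherwise, the gradient of $f(X)$ with respect to the matrix variable $X$ is

$$
\frac{\partial f(X)}{\partial X} = \left[ \frac{\partial f_1(X)}{\partial\, \operatorname{vec} X}, \ldots, \frac{\partial f_m(X)}{\partial\, \operatorname{vec} X} \right]_{mn\times m} =
\begin{bmatrix} a_1 I_m \\ a_2 I_m \\ \vdots \\ a_n I_m \end{bmatrix}_{mn\times m} = a \otimes I_m .
$$

8. Derivatives of Matrix Function w. r. to a Scalar Variable

8.1 Definition

Consider the matrix-valued function $F$ of a scalar variable $x$: $F(x) = Y = [y_{ij} = f_{ij}(x)]_{m\times n}$. Assuming that $F$ is differentiable, its scalar gradient with respect to the scalar $x$ is the $m \times n$ matrix of partial derivatives

$$
\frac{\partial F(x)}{\partial x} = \left[ \frac{\partial f_{ij}(x)}{\partial x} \right]_{m\times n}.
$$

8.2 Example

Let $F(x) = xA = [f_{ij}(x) = x a_{ij}]_{m\times n}$, where $A = (a_{ij})_{m\times n}$ is an $m \times n$ matrix. Then the gradient of $F(x)$ with respect to the scalar variable $x$ is

$$
\frac{\partial F(x)}{\partial x} =
\begin{bmatrix} \dfrac{\partial f_{11}(x)}{\partial x} & \cdots & \dfrac{\partial f_{1n}(x)}{\partial x} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial f_{m1}(x)}{\partial x} & \cdots & \dfrac{\partial f_{mn}(x)}{\partial x} \end{bmatrix}
= \begin{bmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{m1} & \cdots & a_{mn} \end{bmatrix} = A .
$$

9. Derivatives of Matrix Function w. r. to a Vector Variable

9.1 Definition

Consider the matrix-valued function $F$ of a vector variable $x = (x_1, x_2, \ldots, x_m)'$: $F(x) = Y = [y_{ij} = f_{ij}(x)]_{n\times q}$. Assuming that $F$ is differentiable, its vector gradient with respect to the vector $x$ is the $m \times nq$ matrix of partial derivatives

$$
\frac{\partial F(x)}{\partial x} = \left[ \frac{\partial f_{11}(x)}{\partial x}, \ldots, \frac{\partial f_{n1}(x)}{\partial x}, \ldots, \frac{\partial f_{1q}(x)}{\partial x}, \ldots, \frac{\partial f_{nq}(x)}{\partial x} \right]_{m\times nq}.
$$

9.2 Example

Let $F(x) = xa' = [f_{ij}(x) = x_i a_j]_{m\times n}$, where $x = (x_1, x_2, \ldots, x_m)'$ and $a = (a_1, a_2, \ldots, a_n)'$. Then the gradient of $F(x)$ with respect to the vector variable $x$ is

$$
\frac{\partial F(x)}{\partial x} = \left[ \frac{\partial f_{ij}(x)}{\partial x} \right]_{m\times mn} =
\begin{bmatrix}
\dfrac{\partial f_{11}(x)}{\partial x_1} & \dfrac{\partial f_{21}(x)}{\partial x_1} & \cdots & \dfrac{\partial f_{mn}(x)}{\partial x_1} \\
\vdots & \vdots & \ddots & \vdots \\
\dfrac{\partial f_{11}(x)}{\partial x_m} & \dfrac{\partial f_{21}(x)}{\partial x_m} & \cdots & \dfrac{\partial f_{mn}(x)}{\partial x_m}
\end{bmatrix}
= \big[\, a_1 I_m \;\; a_2 I_m \;\; \cdots \;\; a_n I_m \,\big]_{m\times mn} = a' \otimes I_m .
$$

10. Derivatives of Matrix Function w. r. to a Matrix Variable

10.1 Definition

Consider the matrix-valued function $F$ of a matrix variable $X = (x_{ij})_{m\times p}$: $F(X) = Y = [y_{ij} = f_{ij}(X)]_{n\times q}$. Assuming that $F$ is differentiable, its matrix gradient with respect to the matrix $X$ is the $mp \times nq$ matrix of partial derivatives

$$
\frac{\partial F(X)}{\partial X} = \frac{\partial\, (\operatorname{vec} F(X))'}{\partial\, \operatorname{vec} X} = \left[ \frac{\partial f_{11}(X)}{\partial\, \operatorname{vec} X}, \ldots, \frac{\partial f_{n1}(X)}{\partial\, \operatorname{vec} X}, \ldots, \frac{\partial f_{1q}(X)}{\partial\, \operatorname{vec} X}, \ldots, \frac{\partial f_{nq}(X)}{\partial\, \operatorname{vec} X} \right]_{mp\times nq}.
$$

10.2 Example

Let $F(X) = AX = \left[ f_{ij}(X) = \sum_{k=1}^{n} a_{ik} x_{kj} \right]_{m\times q}$, where $A = [a_{ik}]_{m\times n}$ and $X = [x_{kj}]_{n\times q}$. Then the gradient of $F(X)$ with respect to the matrix variable $X$ is

$$
\frac{\partial F(X)}{\partial X} = \frac{\partial\, (\operatorname{vec} F(X))'}{\partial\, \operatorname{vec} X} = \left[ \frac{\partial f_{11}(X)}{\partial\, \operatorname{vec} X}, \ldots, \frac{\partial f_{m1}(X)}{\partial\, \operatorname{vec} X}, \ldots, \frac{\partial f_{1q}(X)}{\partial\, \operatorname{vec} X}, \ldots, \frac{\partial f_{mq}(X)}{\partial\, \operatorname{vec} X} \right]_{nq\times mq}.
$$

Since $\partial f_{ij}(X)/\partial x_{kl} = a_{ik}$ if $l = j$ and $0$ otherwise, this matrix is block diagonal:

$$
\frac{\partial F(X)}{\partial X} =
\begin{bmatrix}
A' & 0 & \cdots & 0 \\
0 & A' & \cdots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & A'
\end{bmatrix}_{nq\times mq}
= I_q \otimes A',
\qquad
A' = \begin{bmatrix} a_{11} & \cdots & a_{m1} \\ \vdots & \ddots & \vdots \\ a_{1n} & \cdots & a_{mn} \end{bmatrix}.
$$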
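A few of the results above can be spot-checked numerically. The sketch below assumes NumPy; the helpers `vec`, `grad_scalar` and `grad_matrix` are hypothetical, and `vec` follows the column-stacking convention of Section 7.1 via `order="F"`. It checks formulas 5, 9 and 24 from the lists, and Examples 7.2 and 10.2 with relabelled, illustrative dimensions (`A2`, `Y`, `c` are names introduced only for this check).

```python
import numpy as np

rng = np.random.default_rng(1)

def vec(M):
    """Stack the columns of M (column-major order), as in vec X."""
    return np.asarray(M).reshape(-1, order="F")

def grad_scalar(f, X, eps=1e-6):
    """m x n matrix of central differences df/dx_ij (the layout of Section 4.1)."""
    G = np.zeros(X.shape)
    for idx in np.ndindex(*X.shape):
        E = np.zeros(X.shape)
        E[idx] = eps
        G[idx] = (f(X + E) - f(X - E)) / (2 * eps)
    return G

def grad_matrix(F, X, eps=1e-6):
    """d(vec F)'/d vec X: row k differentiates every entry of vec F(X)
    with respect to the k-th entry of vec X (the layout of Section 10.1)."""
    x = vec(X)
    rows = []
    for k in range(x.size):
        e = np.zeros(x.size)
        e[k] = eps
        Xp = (x + e).reshape(X.shape, order="F")
        Xm = (x - e).reshape(X.shape, order="F")
        rows.append((vec(F(Xp)) - vec(F(Xm))) / (2 * eps))
    return np.array(rows)

m, n, q = 3, 4, 2
X = rng.normal(size=(m, m)) + 3 * np.eye(m)     # square and comfortably invertible
A, C = rng.normal(size=(m, m)), rng.normal(size=(m, m))
a, b = rng.normal(size=m), rng.normal(size=m)

checks = {
    # Formula 9: d tr(AX)/dX = A'
    "tr(AX)": np.allclose(grad_scalar(lambda Z: np.trace(A @ Z), X), A.T, atol=1e-6),
    # Formula 24: d|X|/dX = |X| (X')^{-1}
    "det(X)": np.allclose(grad_scalar(np.linalg.det, X),
                          np.linalg.det(X) * np.linalg.inv(X.T), atol=1e-5),
    # Formula 5: d(a'X'CXb)/dX = C'Xab' + CXba'
    "a'X'CXb": np.allclose(grad_scalar(lambda Z: a @ Z.T @ C @ Z @ b, X),
                           C.T @ X @ np.outer(a, b) + C @ X @ np.outer(b, a), atol=1e-5),
}

# Example 10.2 with A2 (m x n) and Y (n x q): d(vec A2 Y)'/d vec Y = I_q kron A2'
A2, Y = rng.normal(size=(m, n)), rng.normal(size=(n, q))
checks["A2 Y"] = np.allclose(grad_matrix(lambda Z: A2 @ Z, Y),
                             np.kron(np.eye(q), A2.T), atol=1e-6)

# Example 7.2 with Y (n x q) and c (length q): d(Yc)'/d vec Y = c kron I_n
c = rng.normal(size=q)
checks["Y c"] = np.allclose(grad_matrix(lambda Z: Z @ c, Y),
                            np.kron(c[:, None], np.eye(n)), atol=1e-6)

print(checks)
```

Each entry of `checks` should be `True`. The check on `a'X'CXb` is a useful one to keep, since it exercises both transposes in formula 5.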
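Some important rules for matrix differentiation

For matrices $A = A(t)$, $B = B(t)$ and $C = C(t)$ whose elements are differentiable functions of a scalar $t$:

1. $\dfrac{d}{dt}(A + B) = \dfrac{dA}{dt} + \dfrac{dB}{dt}$
2. $\dfrac{d}{dt}(ACB) = \dfrac{dA}{dt}CB + A\dfrac{dC}{dt}B + AC\dfrac{dB}{dt}$
3. $\dfrac{d}{dt}A^{n} = \dfrac{dA}{dt}A^{n-1} + A\dfrac{dA}{dt}A^{n-2} + \cdots + A^{n-1}\dfrac{dA}{dt}$
4. $\dfrac{d}{dt}A^{-1} = -A^{-1}\dfrac{dA}{dt}A^{-1}$
5. $\dfrac{d}{dt}\det(A) = \det(A)\,\operatorname{tr}\!\left(A^{-1}\dfrac{dA}{dt}\right)$

Homework

1. $\dfrac{\partial (a'Xb)}{\partial X} = ab'$
2. $\dfrac{\partial (a'X'b)}{\partial X} = ba'$
3. $\dfrac{\partial (a'X'Xb)}{\partial X} = X(ab' + ba')$
4. $\dfrac{\partial |X|}{\partial X} = |X|\,(X')^{-1}$
5. $\dfrac{\partial \operatorname{tr}(AX^{-1}B)}{\partial X} = -\big[(X^{-1}B)(AX^{-1})\big]'$

11. Some Applications of Matrix Differential Calculus

1. Test of independence between functions
2. Expansion of Taylor series
3. Transformations of multivariate density functions
4. Multiple integration
5. And so on.

Test of Independence

A set of functions $f_1(x), f_2(x), \ldots, f_n(x)$ is said to be functionally dependent (not independent) if their Jacobian vanishes identically, that is,

$$
J(f_1, f_2, \ldots, f_n) = \frac{\partial (f_1, f_2, \ldots, f_n)}{\partial (x_1, x_2, \ldots, x_n)} = 0 .
$$

Example: Show that the functions

$$
f_1(x) = x_1 + x_2 - x_3, \qquad f_2(x) = x_1 - x_2 + x_3, \qquad f_3(x) = x_1^2 + x_2^2 + x_3^2 - 2x_2x_3
$$

are not independent of one another, and show that $f_1^2(x) + f_2^2(x) = 2f_3(x)$.

Proof:

$$
J(f_1, f_2, f_3) = \frac{\partial (f_1, f_2, f_3)}{\partial (x_1, x_2, x_3)} =
\begin{vmatrix} 1 & 1 & -1 \\ 1 & -1 & 1 \\ 2x_1 & 2(x_2 - x_3) & -2(x_2 - x_3) \end{vmatrix} = 0,
$$

because the third column is the negative of the second. So the functions are not independent. Indeed, $f_1^2 + f_2^2 = [x_1 + (x_2 - x_3)]^2 + [x_1 - (x_2 - x_3)]^2 = 2x_1^2 + 2(x_2 - x_3)^2 = 2f_3$.

Taylor series expansions of multivariate functions

In deriving some of the gradient-type learning algorithms, we have to resort to the Taylor series expansion of a function $g(x)$ of a scalar variable $x$:

$$
g(\bar{x}) = g(x) + \frac{dg}{dx}(\bar{x} - x) + \frac{1}{2}\frac{d^2 g}{dx^2}(\bar{x} - x)^2 + \cdots \tag{3.19}
$$

We can do a similar expansion for a function $g(x) = g(x_1, x_2, \ldots, x_m)$ of $m$ variables:

$$
g(\bar{x}) = g(x) + \left(\frac{\partial g}{\partial x}\right)'(\bar{x} - x) + \frac{1}{2}(\bar{x} - x)'\,\frac{\partial^2 g}{\partial x^2}\,(\bar{x} - x) + \cdots \tag{3.20}
$$

where the derivatives are evaluated at the point $x$. The second term is the inner product of the gradient vector with the vector $\bar{x} - x$, and the third term is a quadratic form with the symmetric Hessian matrix $\partial^2 g/\partial x^2$. The truncation error depends on the distance $\|\bar{x} - x\|$; the distance has to be small if $g(\bar{x})$ is approximated using only the first- and second-order terms.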
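Rules 4 and 5 and the independence example can be checked numerically. This is a sketch assuming NumPy; the matrix path `A(t)` is a hypothetical smooth curve chosen only for the check, and the step size `eps` is illustrative. The example functions are the three $f_1, f_2, f_3$ from the independence example.

```python
import numpy as np

rng = np.random.default_rng(2)
A0 = 5 * np.eye(3) + 0.3 * rng.normal(size=(3, 3))   # well-conditioned starting matrix
A1 = 0.3 * rng.normal(size=(3, 3))

def A(t):
    """A hypothetical smooth matrix path A(t) = A0 + t A1 + t^2 A1'."""
    return A0 + t * A1 + t ** 2 * A1.T

def dA(t):
    """Exact derivative of the path above."""
    return A1 + 2 * t * A1.T

t, eps = 0.5, 1e-6
Ainv = np.linalg.inv(A(t))

# Rule 4: d(A^{-1})/dt = -A^{-1} (dA/dt) A^{-1}
fd_inv = (np.linalg.inv(A(t + eps)) - np.linalg.inv(A(t - eps))) / (2 * eps)
rule4_ok = np.allclose(fd_inv, -Ainv @ dA(t) @ Ainv, atol=1e-6)

# Rule 5: d det(A)/dt = det(A) tr(A^{-1} dA/dt)
fd_det = (np.linalg.det(A(t + eps)) - np.linalg.det(A(t - eps))) / (2 * eps)
rule5_ok = np.isclose(fd_det, np.linalg.det(A(t)) * np.trace(Ainv @ dA(t)),
                      rtol=1e-6, atol=1e-5)

# Independence example: Jacobian of f1 = x1 + x2 - x3, f2 = x1 - x2 + x3,
# f3 = x1^2 + x2^2 + x3^2 - 2 x2 x3 (rows: functions, columns: variables).
def jacobian(x):
    x1, x2, x3 = x
    return np.array([[1.0, 1.0, -1.0],
                     [1.0, -1.0, 1.0],
                     [2 * x1, 2 * (x2 - x3), -2 * (x2 - x3)]])

points = rng.normal(size=(5, 3))
dets = np.array([np.linalg.det(jacobian(p)) for p in points])
f1 = points[:, 0] + points[:, 1] - points[:, 2]
f2 = points[:, 0] - points[:, 1] + points[:, 2]
f3 = points[:, 0] ** 2 + points[:, 1] ** 2 + points[:, 2] ** 2 - 2 * points[:, 1] * points[:, 2]
print(rule4_ok, rule5_ok, np.allclose(dets, 0.0, atol=1e-12), np.allclose(f1**2 + f2**2, 2 * f3))
```

A vanishing determinant at a handful of random points is only numerical evidence; the algebraic identity $f_1^2 + f_2^2 = 2f_3$ is what establishes the dependence.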
The same expansion can be made for a scalar function of a matrix variable. The second-order term already becomes complicated because the second-order gradient is a four-dimensional tensor. But we can easily extend the first-order term in (3.20), the inner product of the gradient with the vector $\bar{x} - x$, to the matrix case. Remember that the vector inner product is defined as

$$
\left(\frac{\partial g}{\partial x}\right)'(\bar{x} - x) = \sum_{i=1}^{m} \frac{\partial g}{\partial x_i}(\bar{x}_i - x_i).
$$

For the matrix case, this must become the sum

$$
\sum_{i=1}^{m}\sum_{j=1}^{n} \frac{\partial g}{\partial x_{ij}}(\bar{x}_{ij} - x_{ij}).
$$

This is the sum of the products of corresponding elements, just like in the vectorial inner product. It can be nicely presented in matrix form when we remember that for any two $m \times n$ matrices, say $A$ and $B$,

$$
\operatorname{trace}(A'B) = \sum_{j=1}^{n} (A'B)_{jj} = \sum_{i=1}^{m}\sum_{j=1}^{n} (A)_{ij}(B)_{ij},
$$

with obvious notation. So we have

$$
g(\bar{X}) = g(X) + \operatorname{trace}\!\left[\left(\frac{\partial g}{\partial X}\right)'(\bar{X} - X)\right] + \cdots \tag{3.21}
$$

for the first two terms in the Taylor series of a function $g$ of a matrix variable.
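Equation (3.21) can be illustrated numerically. The sketch below assumes NumPy and uses $g(X) = \log|X|$, because formula 26 gives its gradient $(X')^{-1}$; the base point, the symmetric perturbation direction `Delta`, and the step sizes are illustrative choices. If the first two terms are right, the leftover error is second order, so it should fall roughly 100-fold for each 10-fold reduction of the step.

```python
import numpy as np

rng = np.random.default_rng(3)
S = rng.normal(size=(3, 3))
X = 4 * np.eye(3) + 0.1 * (S + S.T)        # symmetric positive definite base point
B = rng.normal(size=(3, 3))
Delta = (B + B.T) / 2                      # symmetric perturbation direction

def g(Z):
    """g(X) = log|X|; by formula 26 its matrix gradient is (X')^{-1}."""
    sign, logabsdet = np.linalg.slogdet(Z)
    return logabsdet

grad = np.linalg.inv(X.T)                  # dg/dX evaluated at X
errors = []
for t in (1e-1, 1e-2, 1e-3):
    Xbar = X + t * Delta
    first_order = g(X) + np.trace(grad.T @ (Xbar - X))   # first two terms of (3.21)
    errors.append(abs(g(Xbar) - first_order))
print(errors)
```

The symmetric direction and positive definite base point keep the second-order term strictly nonzero, so the quadratic decay of the error is not masked by an accidental cancellation.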